EFFECT Photonics Raises Additional $24 Million in Series D, Bringing Total Round to $62 Million

Maynard, MA, June 25, 2025, EFFECT Photonics, a leading provider of next-generation coherent optical solutions for data center and edge networks, today announced it has raised an additional $24 million as part of its Series D financing round. This brings the total raised in the round to $62 million.

The new capital underscores the ongoing commitment of existing investors and their continued confidence in EFFECT Photonics’ leadership in coherent technology and growing commercial momentum with leading industry partners.

“This funding milestone reinforces the strong market demand we’re seeing and allows us to execute aggressively on our roadmap,” said Roberto Marcoccia, CEO of EFFECT Photonics. “We’re grateful for the continued support of our investors and partners as we scale to meet the needs of a rapidly growing optical interconnect market.”

The Series D round follows significant technical and commercial progress. The company’s solutions are enabling the next wave of high-performance networking at the edge and in AI infrastructure.

Media Contact:

Harald Graber

EFFECT Photonics

haraldgraber@effectphotonics.com

Tags: EFFECT Photonics, EFFECTPhotonics, Global companies, Growth and evolution

Discover Where Light Meets Digital at OFC2025

Join EFFECT Photonics from April 1 to 3, 2025 at the 50th OFC in San Francisco, California, the world’s largest event for optical networking and communications, to discover firsthand how our technology is transforming where light meets digital. Visit Booth #2850 to learn how EFFECT Photonics’ full portfolio of optical building blocks is enabling 100G coherent to the network edge and next-generation applications.

What to Expect in 2025:

Global Participation: Anticipate over 13,500 participants from 83+ countries, alongside 670 exhibiting companies, making it a truly international affair.

Innovation Hub: Witness the debut of startups and the pioneering strides of industry leaders, setting the tone for the future of optical communications.

Inclusive Programs: Immerse yourself in a diverse array of programs and events designed to encompass the entire ecosystem, with a strong emphasis on inclusivity.

Key Highlights:

Interoperability Demonstrations: Experience live demonstrations by leading optics companies, showcasing seamless integration over OFCnet.

Plenary Sessions: Be inspired by industry luminaries as they delve into the transformative power of technology and its impact on our world.

Tags: 100 ZR, 100G, 100gcoherent, access, access networks, bringing100Gtoedge, cloud, cloudedge, coherent, coherentoptics, datacenters, DSP, DSPs, EFFECT Photonics, Integrated Photonics, networkedge, ofc23, opticcommunications, Optics, photonic integration, Photonics, PIC, tunablelasers, wherelightmeetsdigital

Edge Data Centers in the Age of AI

Cloud computing offers scalable and flexible IT resources over the Internet, allowing businesses to avoid the upfront cost and complexity of owning and maintaining their own IT infrastructure. Cloud services allow organizations to access a wide array of computing resources on demand, such as servers, storage, databases, and software applications.

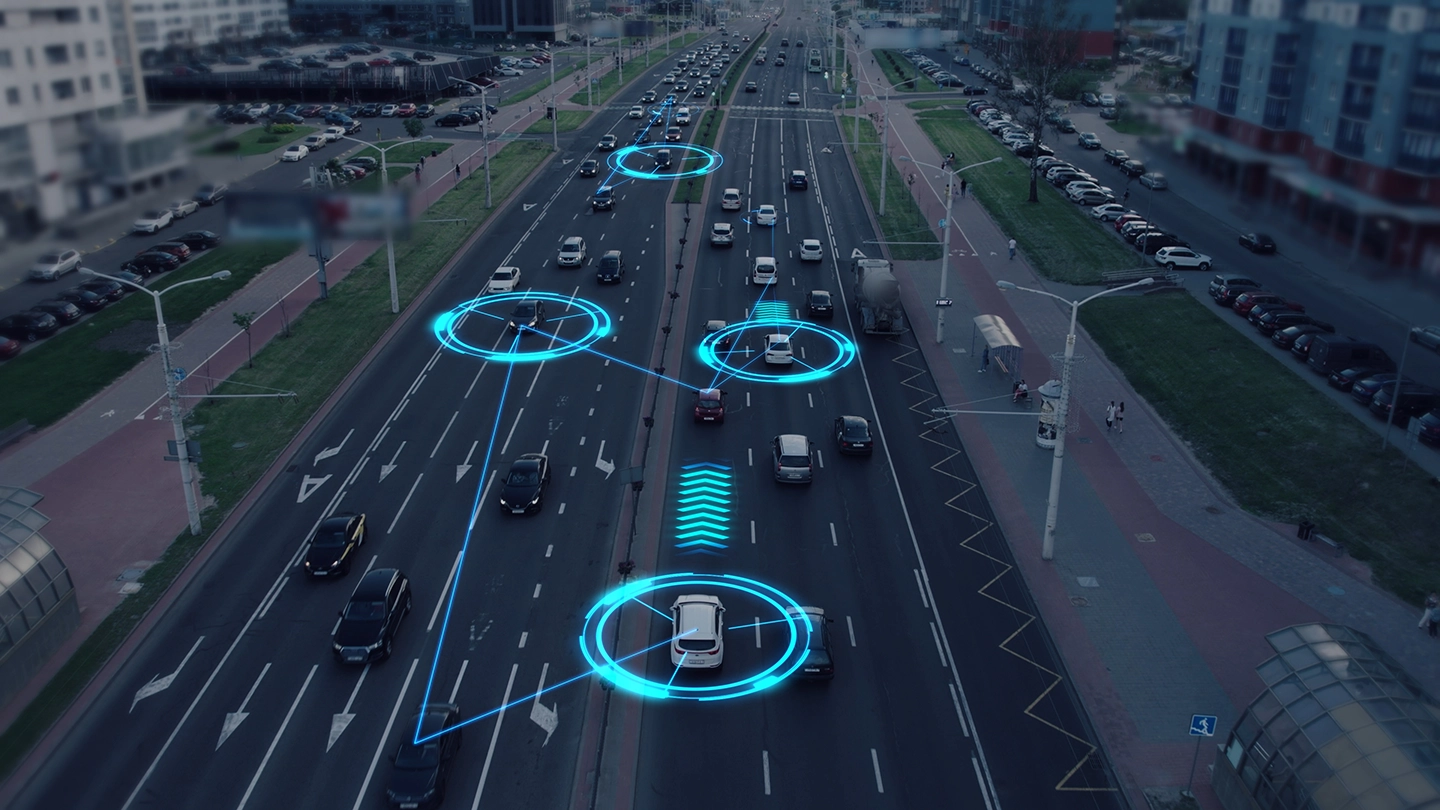

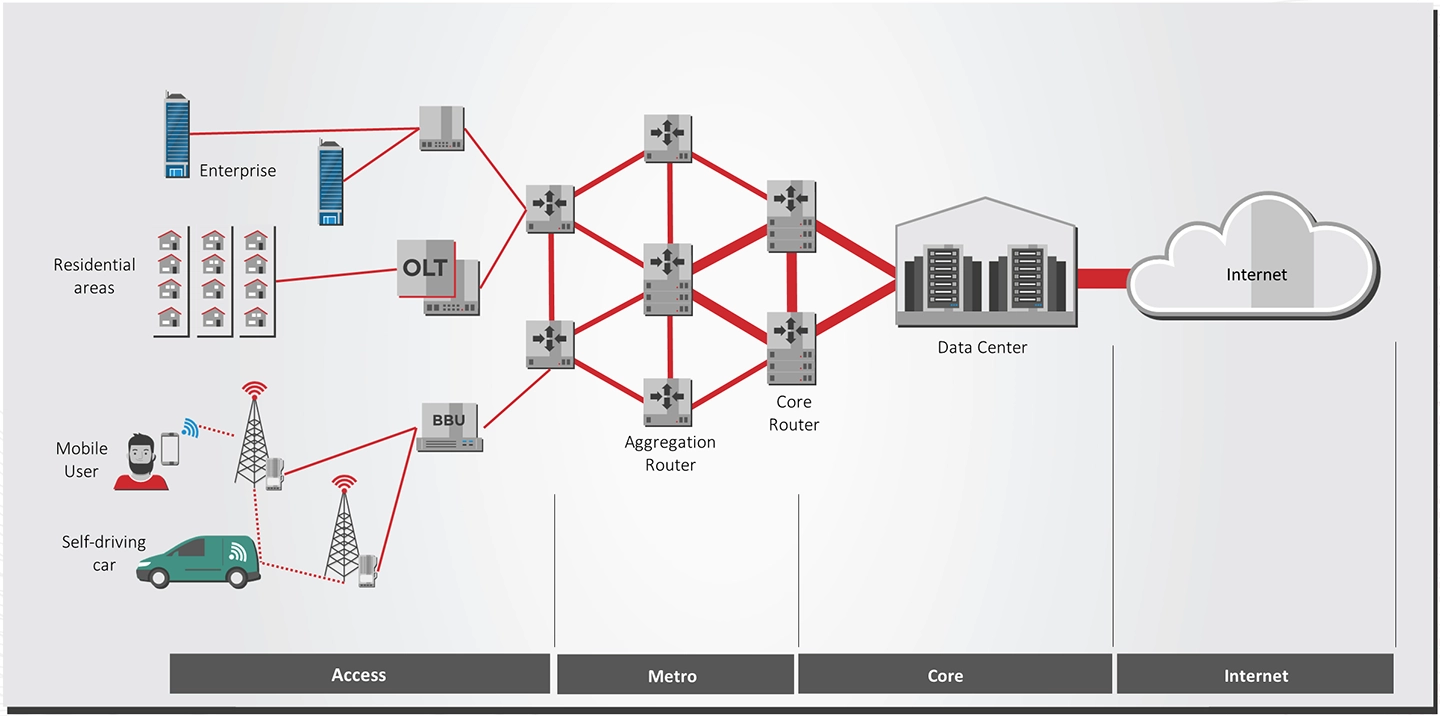

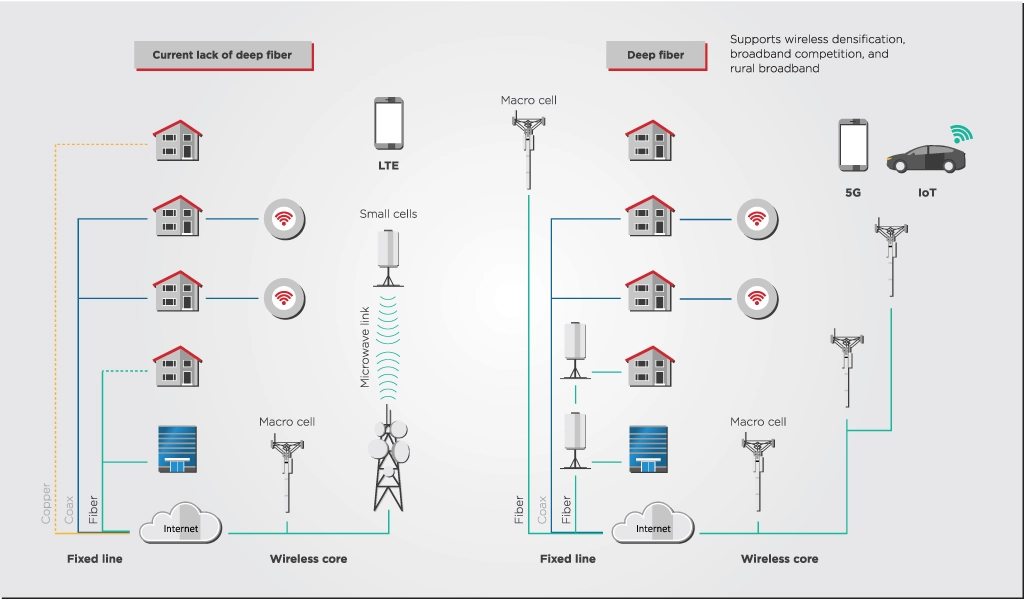

Edge computing complements cloud computing by processing data near the source rather than relying on a central data center. This is important for applications requiring real-time processing and low latency, such as autonomous vehicles, industrial automation, and smart city technologies. By minimizing the distance data must travel, edge computing reduces latency, increases data processing speed, and could also increase data privacy and reliability.

These advantages are causing the global market for edge computing to explode, with STL Partners predicting that the total edge computing addressable market will grow from $9 billion in 2020 to $445 billion in 2030. They also forecast that the number of edge data center sites will triple, from 491 in 2023 to 1584 in 2025.
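
To put these forecasts in perspective, the growth rates they imply can be worked out directly from the quoted endpoints. The following is a minimal sketch; the helper name `cagr` is illustrative, and the calculation assumes smooth compound growth between the two endpoint years, which the forecast itself does not claim.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# Addressable market figures quoted above, in billions of US dollars.
market_cagr = cagr(9, 445, 2030 - 2020)

# Edge data center site counts quoted above (2023 and 2025).
site_multiple = 1584 / 491

print(f"Implied market CAGR, 2020 to 2030: {market_cagr:.1%}")
print(f"Site count multiple, 2023 to 2025: {site_multiple:.2f}x")
```

Under that assumption, the market figures correspond to growth of a little under 48% per year, and the site count grows by slightly more than a factor of three.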

Various trends are driving the rise of the edge cloud:

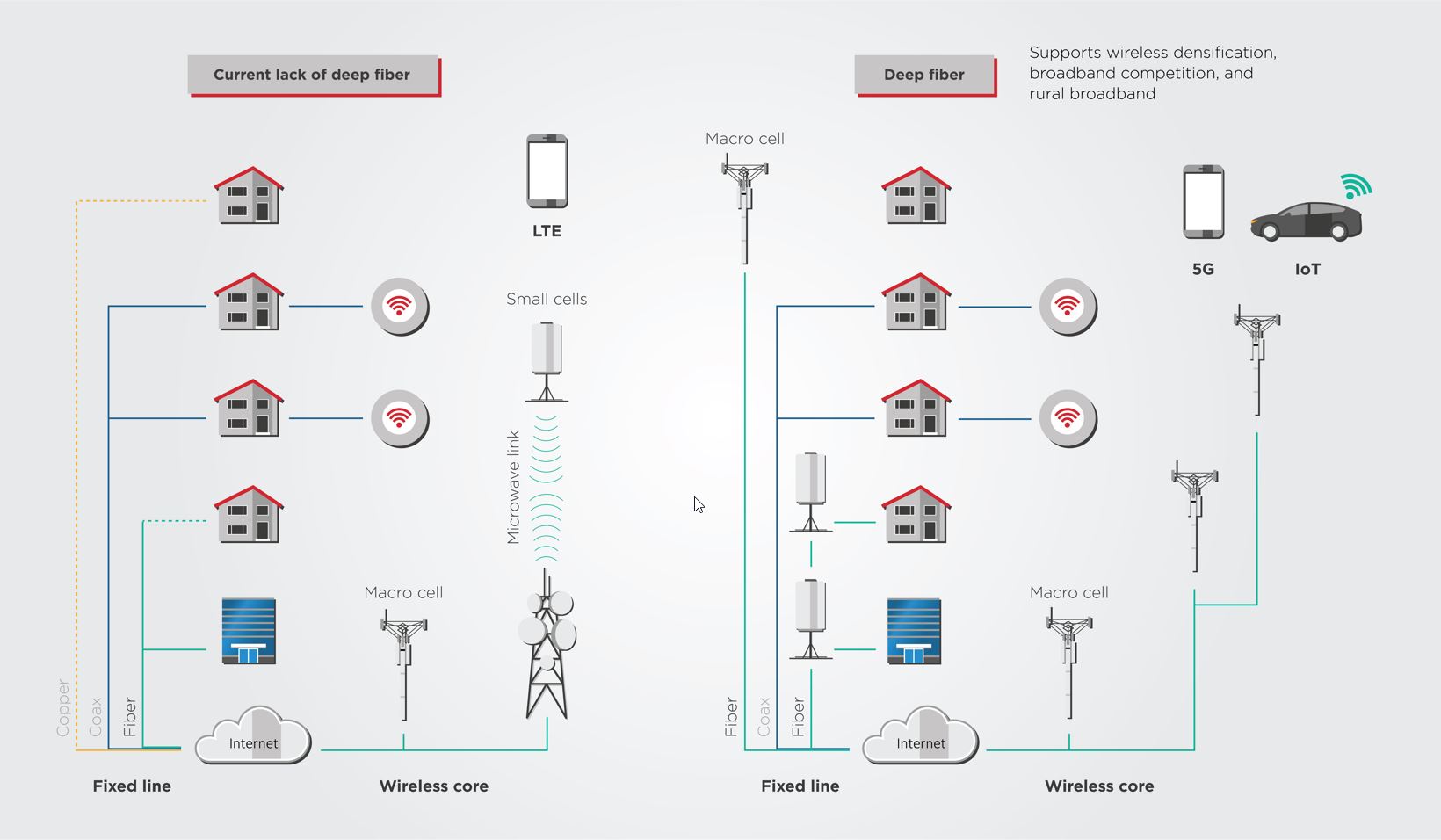

- 5G technology and the Internet of Things (IoT): These mobile networks and sensor networks need low-cost computing resources closer to the user to reduce latency and better manage the higher density of connections and data.

- Content delivery networks (CDNs): The popularity of CDN services continues to grow, and most web traffic today is served through CDNs, especially for major sites like Facebook, Netflix, and Amazon. By using content delivery servers that are more geographically distributed and closer to the edge and the end user, websites can reduce latency, load times, and bandwidth costs while increasing content availability and redundancy.

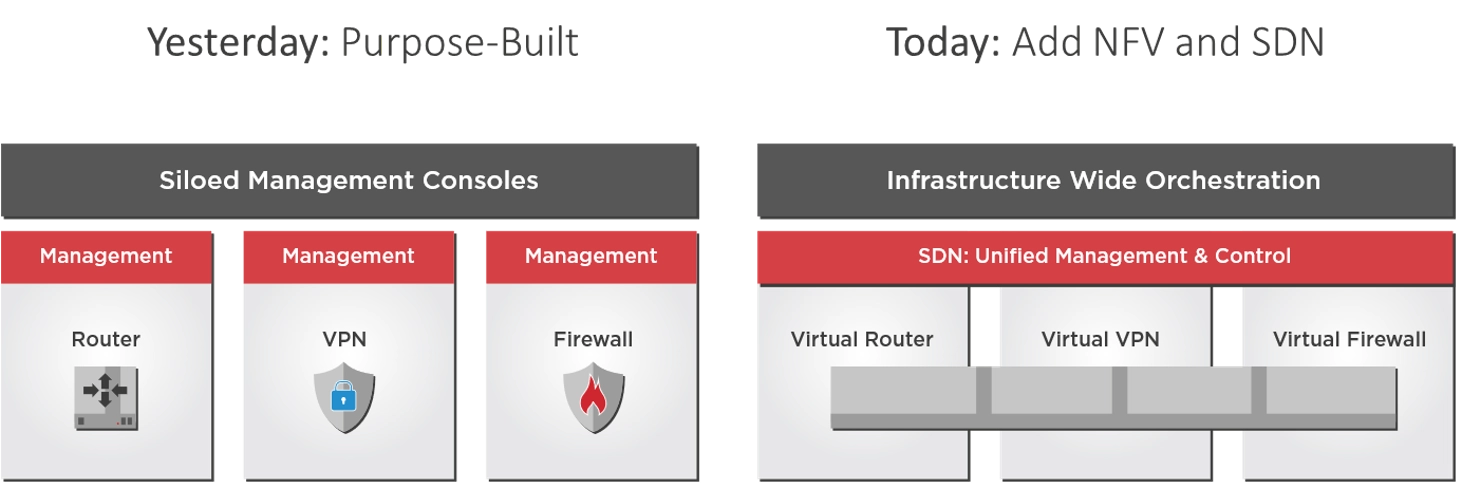

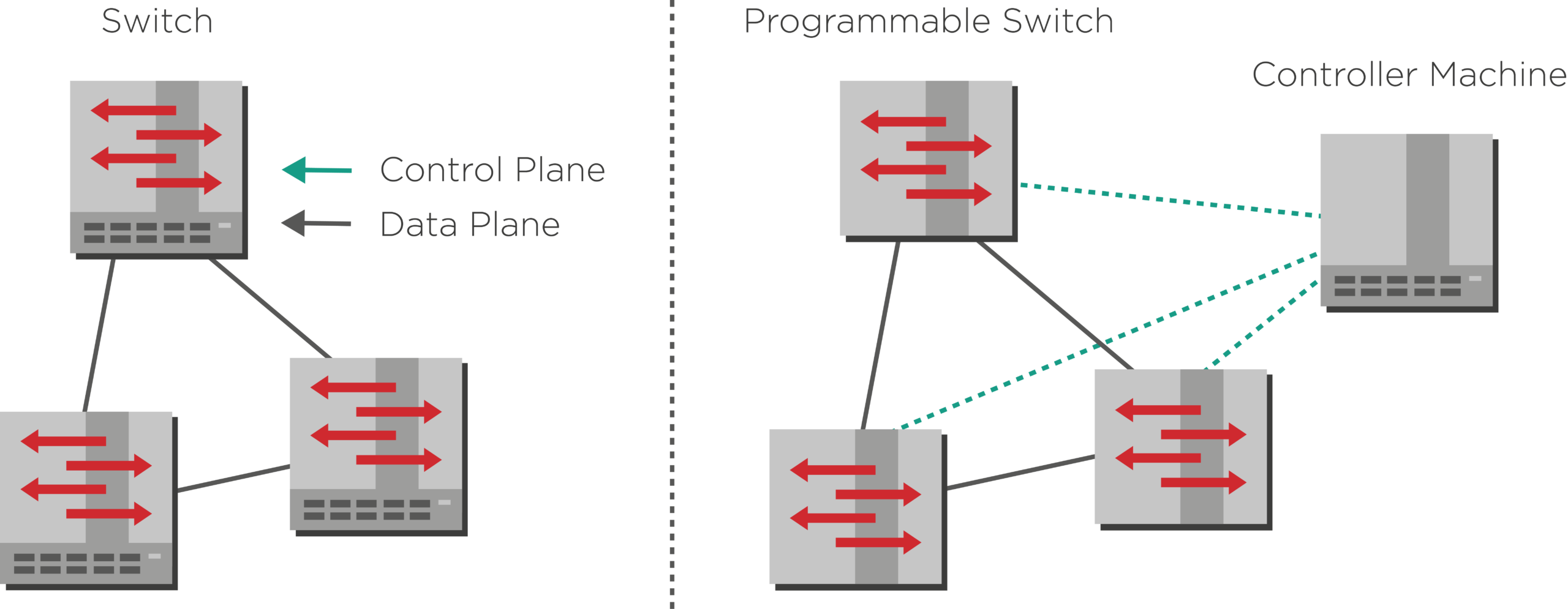

- Software-defined networks (SDN) and network function virtualization (NFV): The increased use of SDNs and NFV requires more cloud software processing.

- Augmented and virtual reality applications (AR/VR): Edge data centers can reduce the streaming latency and improve the performance of AR/VR applications.

However, in this article, we will discuss arguably the most important trend powering the explosive growth of the edge data center sector: artificial intelligence.

The Impact of AI on Edge Data Centers

AI will enhance many of the applications we mentioned before, including real-time analytics, autonomous vehicles, augmented reality, and IoT devices, which require low-latency data processing and immediate decision-making capabilities.

As AI workloads grow, traditional centralized data centers face challenges in handling the sheer volume of data generated at the edge. Due to these challenges, there is increased motivation to move these AI workloads towards the network edge. Edge data centers provide a decentralized solution, distributing computing resources closer to end-users and devices, which reduces the strain on centralized cloud infrastructures.

This proximity to the end user allows for more real-time data processing and decision-making, which is critical for applications that require immediate responses. Industries such as manufacturing, healthcare, retail, and smart cities could benefit from edge AI. For instance, in manufacturing, edge AI can monitor machinery in real-time to predict and prevent failures, enhancing operational efficiency and reducing downtime. In healthcare, edge AI enables real-time patient monitoring, providing immediate alerts to medical staff about critical changes in patient conditions.

The integration of AI at the edge also addresses the growing need for data privacy and security. By processing data locally, sensitive information does not need to be transmitted to centralized cloud servers, reducing the size and risk of data breaches and streamlining compliance with data protection regulations. Moreover, edge AI reduces the bandwidth required for data transfer, as only the necessary information is sent to the cloud, optimizing network resources and reducing costs.

The Value of Edge Data Centers

Several of these applications require lower latencies than before, and centralized cloud computing cannot deliver those data packets quickly enough. As shown in Table 1, a data center on a town or suburb aggregation point could halve the latency compared to a centralized hyperscale data center. Enterprises with their own data center on-premises can reduce latencies by 12 to 30 times compared to hyperscale data centers.

Table 1: Types of Edge Data Centres

| Edge category | Edge type | Data center | Location | Number of DCs per 10M people | Average latency | Size |
|---|---|---|---|---|---|---|
| On-premises edge | On-premises edge | Enterprise site | Businesses | NA | 2-5 ms | 1 rack max |
| Network (Mobile) | Tower edge | Tower | Nationwide | 3000 | 10 ms | 2 racks max |
| Network (Mobile) | Outer edge | Aggregation points | Town | 150 | 30 ms | 2-6 racks |
| Network (Mobile) | Inner edge | Core | Major city | 10 | 40 ms | 10+ racks |
| Regional edge | Regional edge | Regional | Major city | 100 | 50 ms | 100+ racks |
| Not edge | Not edge | Hyperscale | State/national | 1 | 60+ ms | 5000+ racks |
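
The latency ratios quoted in the text can be checked against the table values with a few lines of arithmetic. This sketch treats the hyperscale figure of "60+ ms" as exactly 60 ms, which is an assumption since the table only gives a lower bound; the dictionary keys are illustrative labels.

```python
# Average latencies in milliseconds, taken from the table above.
# Assumption: "60+ ms" for hyperscale is treated as exactly 60 ms.
HYPERSCALE_MS = 60.0

latency_ms = {
    "on-premises edge (best case)": 2.0,
    "on-premises edge (worst case)": 5.0,
    "tower edge": 10.0,
    "outer edge": 30.0,
    "inner edge": 40.0,
    "regional edge": 50.0,
}


def improvement_vs_hyperscale(edge_latency_ms: float) -> float:
    """How many times lower the edge latency is than the hyperscale latency."""
    return HYPERSCALE_MS / edge_latency_ms


improvements = {
    name: improvement_vs_hyperscale(ms) for name, ms in latency_ms.items()
}

for name, factor in improvements.items():
    print(f"{name}: {factor:.1f}x lower latency than hyperscale")
```

With these inputs, the outer edge (town aggregation point) comes out at 2x and the on-premises edge at 12x to 30x, matching the figures in the text.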

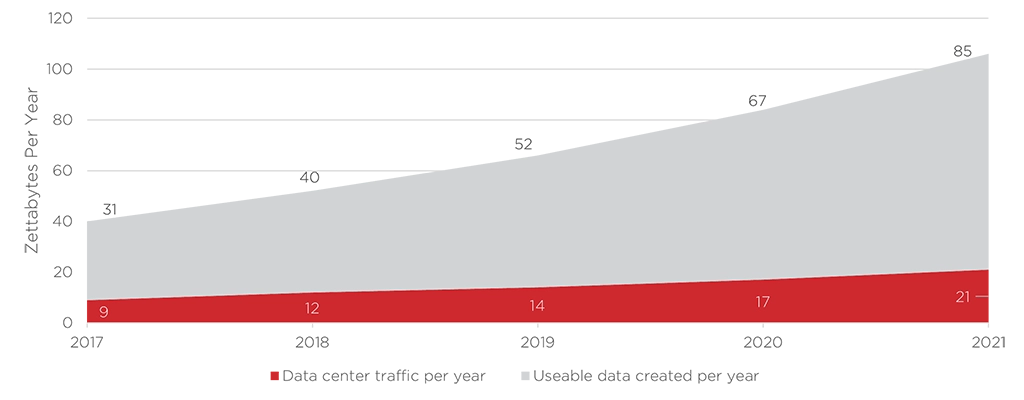

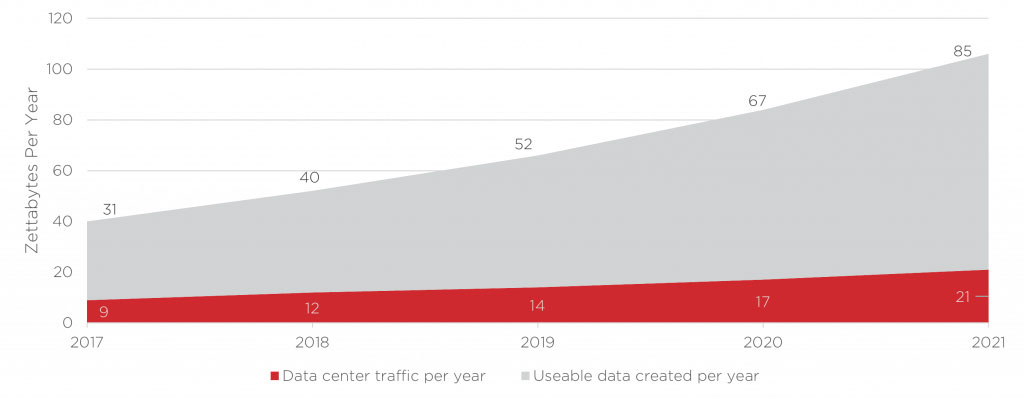

Cisco estimates that 85 zettabytes of useful raw data were created in 2021, but only 21 zettabytes were stored and processed in data centers. Edge data centers can help close this gap. For example, industries or cities can use edge data centers to aggregate all the data from their sensors. Instead of sending all this raw sensor data to the core cloud, the edge cloud can process it locally and turn it into a handful of performance indicators. The edge cloud can then relay these indicators to the core, which requires a much lower bandwidth than sending the raw data.
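
The idea of turning raw sensor data into a handful of indicators before relaying it to the core can be sketched as follows. Everything here is hypothetical: the `summarize` function, the choice of indicators, the alarm threshold, and the synthetic temperature readings are illustrative assumptions, not a description of any specific edge platform.

```python
from statistics import mean


def summarize(readings: list[float], alarm_threshold: float) -> dict[str, float]:
    """Reduce a batch of raw sensor readings to a few performance indicators."""
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alarms": sum(1 for r in readings if r > alarm_threshold),
    }


# Hypothetical batch: 1,000 temperature samples collected at the edge.
raw = [20.0 + (i % 50) * 0.1 for i in range(1000)]

# The edge site computes the indicators locally.
kpis = summarize(raw, alarm_threshold=24.55)

# Only the indicators travel to the core cloud, not the raw samples.
reduction = len(raw) / len(kpis)
print(kpis)
print(f"Values sent upstream reduced by a factor of {reduction:.0f}")
```

In this toy case, four numbers are sent upstream instead of a thousand. Real deployments would choose indicators and batch sizes based on what the core actually needs.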

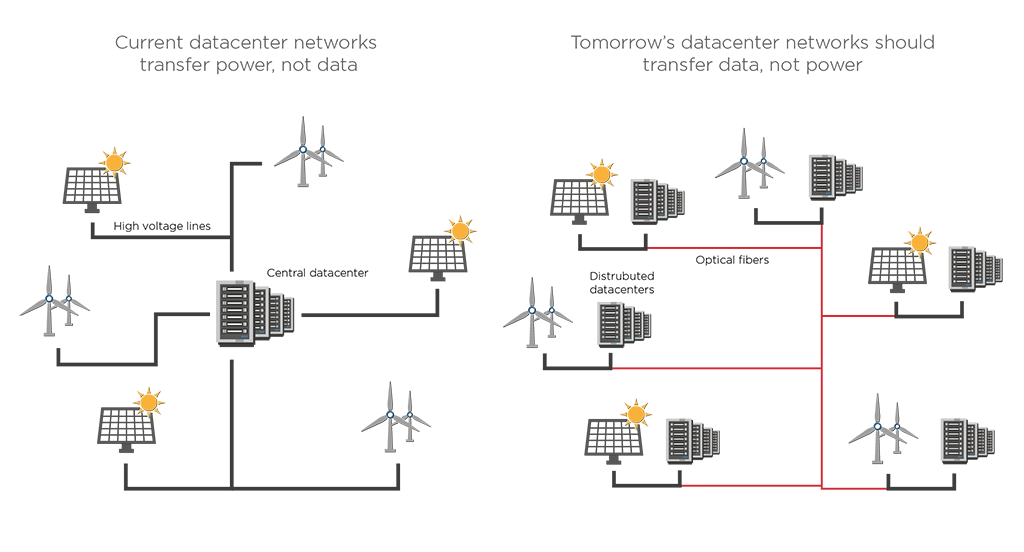

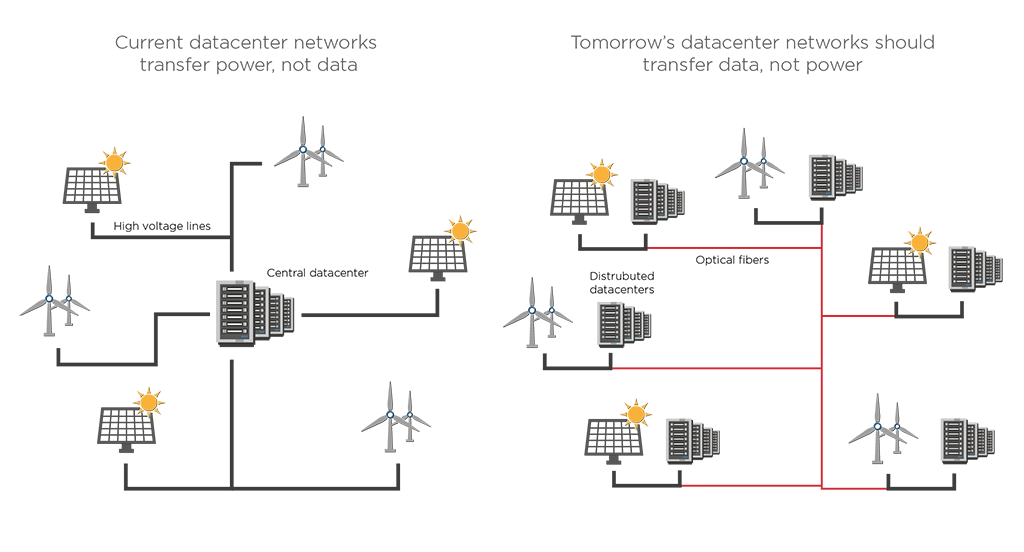

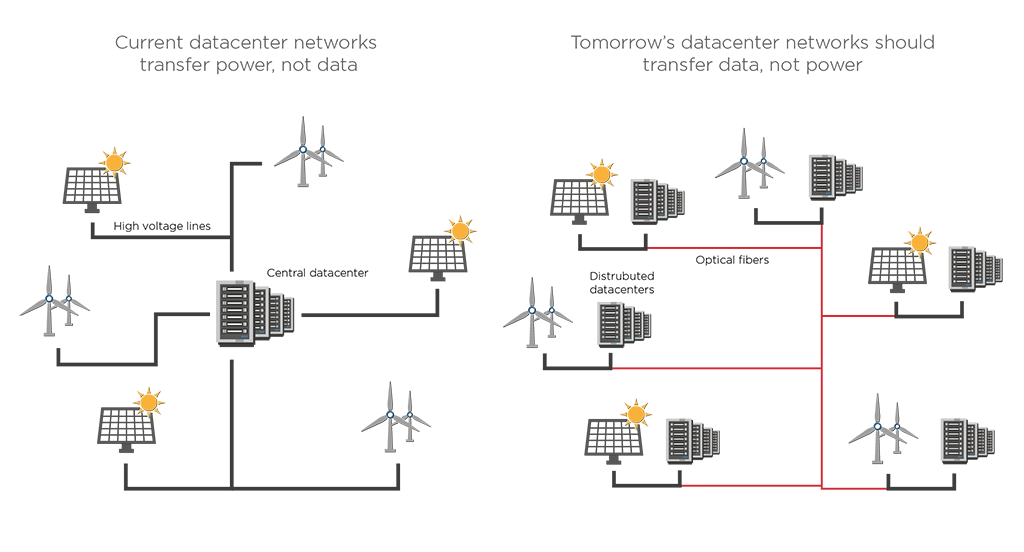

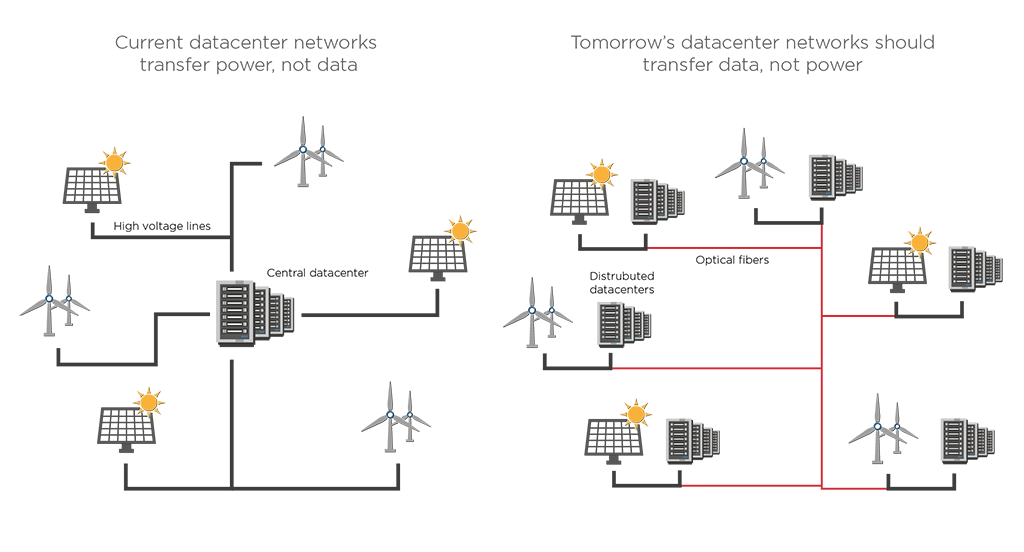

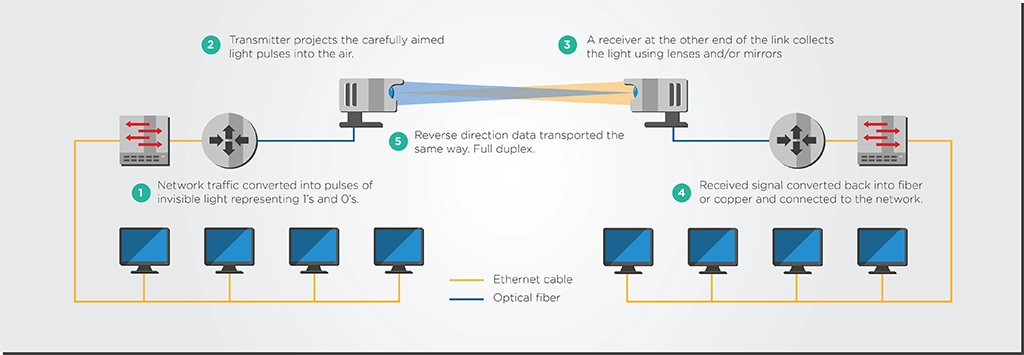

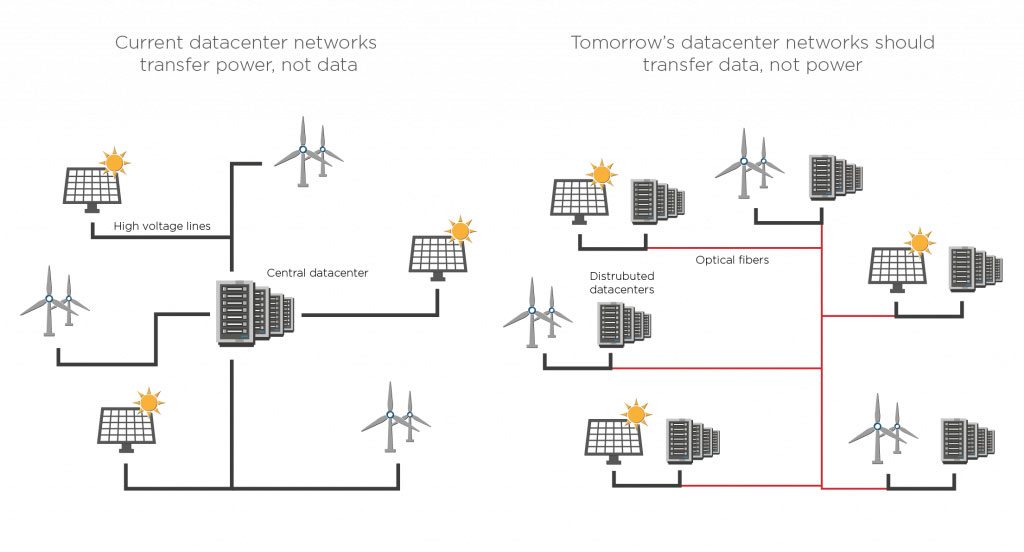

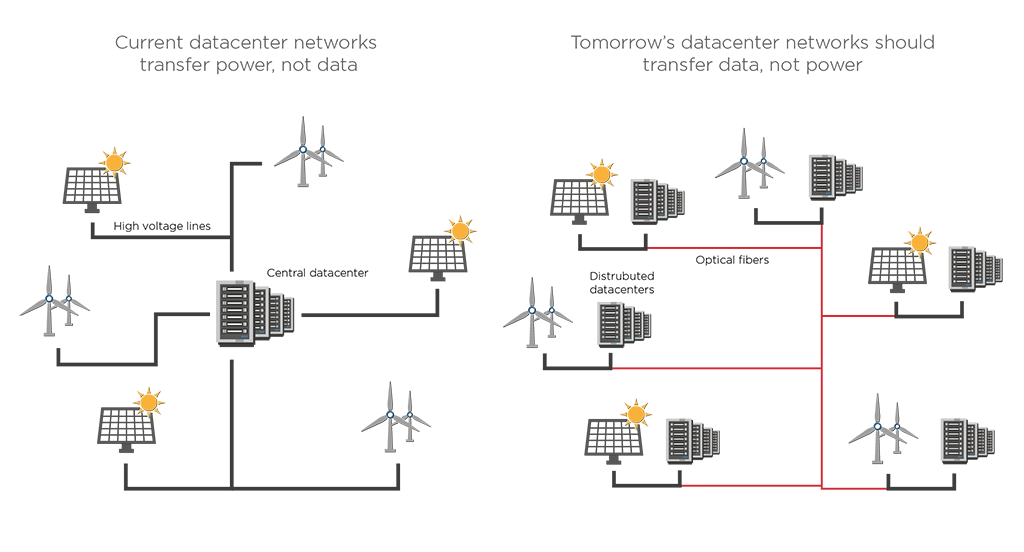

Distributing data centers is also vital for future data center architectures. While centralizing processing in hyperscale data centers made them more energy-efficient, the power grid often limits where new hyperscale data centers can be located. Thus, the industry may have to take a few steps back and decentralize data processing capacity to cope with the strain of data center clusters on power grids. For example, data centers can be relocated to areas where spare power capacity is available, preferably from nearby renewable energy sources. EFFECT Photonics envisions a system of data centers with branches in different geographical areas, where data storage and processing are assigned based on the local and temporal availability of renewable (wind, solar) energy and the total energy demand in the area.
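
One very simple way to picture such an assignment is a greedy placement that sends each workload to the site with the most spare renewable power at that moment. This is only an illustrative sketch under strong assumptions: the site names, power figures, and the `place_jobs` function are hypothetical, and a real scheduler would also weigh latency, data locality, and forecasts of supply and demand.

```python
def place_jobs(
    job_loads_kw: list[float], spare_renewable_kw: dict[str, float]
) -> dict[str, list[float]]:
    """Greedy placement: largest job first, onto the site with most spare power."""
    remaining = dict(spare_renewable_kw)
    placement: dict[str, list[float]] = {site: [] for site in remaining}
    for load in sorted(job_loads_kw, reverse=True):
        # Pick the site that currently has the most spare renewable power.
        site = max(remaining, key=remaining.get)
        if remaining[site] < load:
            raise ValueError(f"no site has {load} kW of spare renewable power")
        placement[site].append(load)
        remaining[site] -= load
    return placement


# Hypothetical snapshot of spare renewable capacity per site, in kW.
spare = {"wind_north": 120.0, "solar_south": 80.0, "grid_city": 30.0}

placement = place_jobs([50.0, 40.0, 30.0, 20.0], spare)
for site, loads in placement.items():
    print(f"{site}: {loads}")
```

Re-running the placement as the snapshot changes (for example, when solar output drops at night) is what would make the assignment follow the temporal availability of energy.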

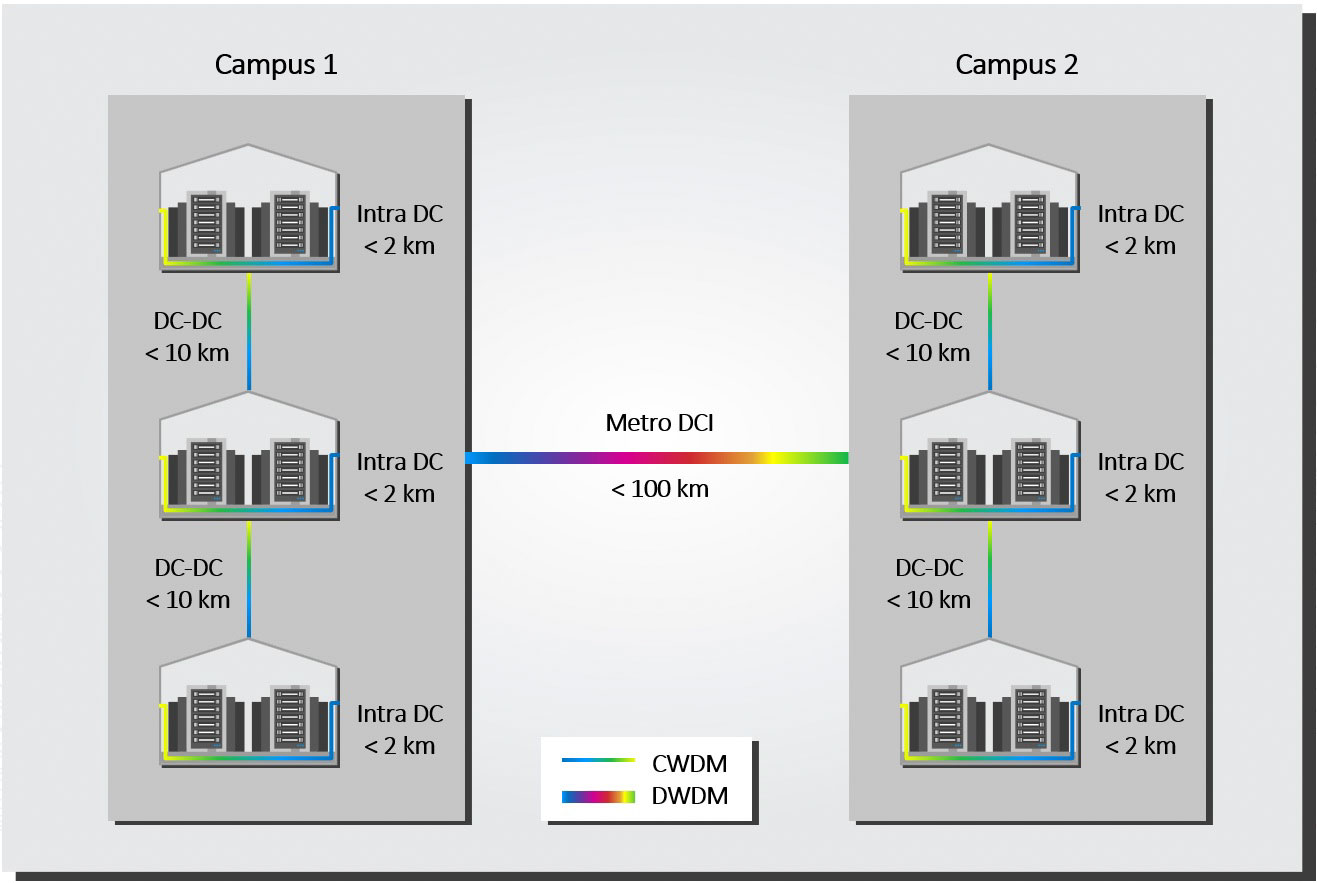

Coherent Technology Simplifies the Scaling of Edge Interconnects

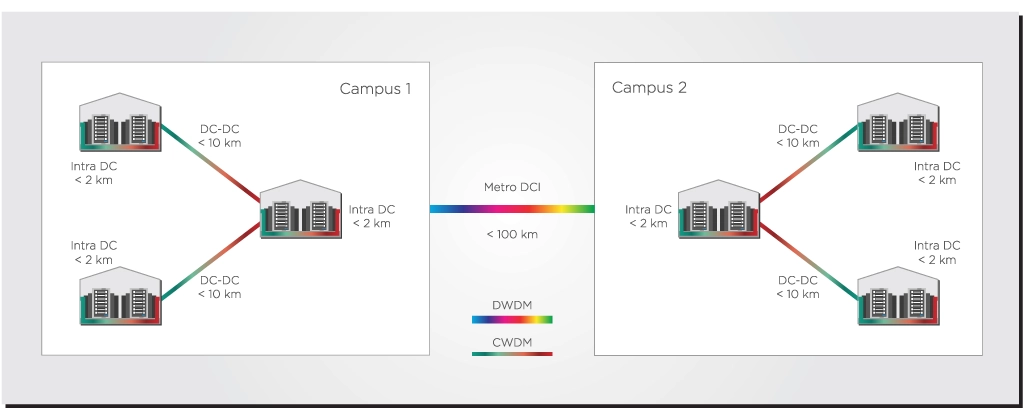

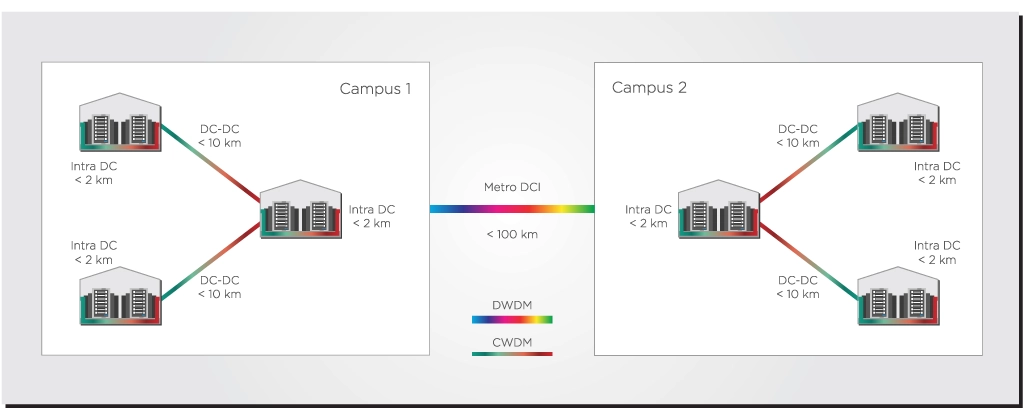

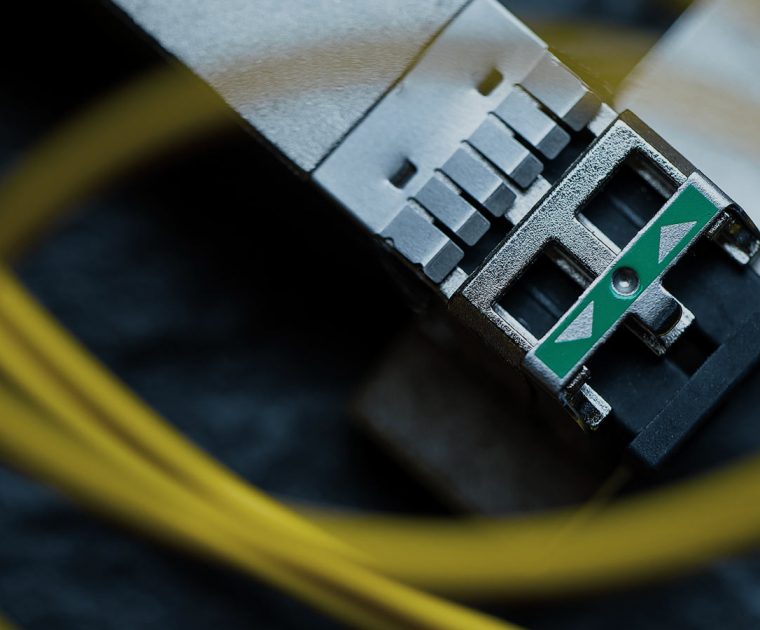

As edge data centers became more common, the issue of how to interconnect them became more prominent. Direct detect technology had been the standard in short-reach data center interconnects. However, reaching the distances greater than 50 km and bandwidths over 100 Gbps needed for modern edge data center interconnects required external amplifiers and dispersion compensators that increased the complexity of network operations.

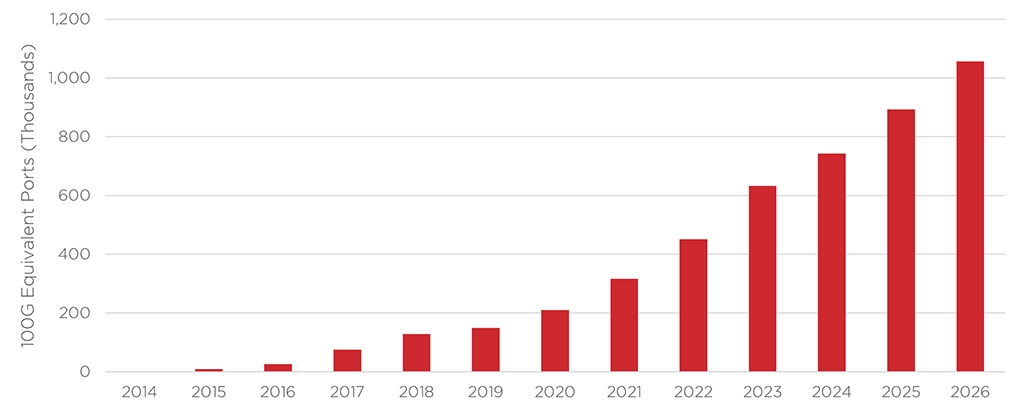

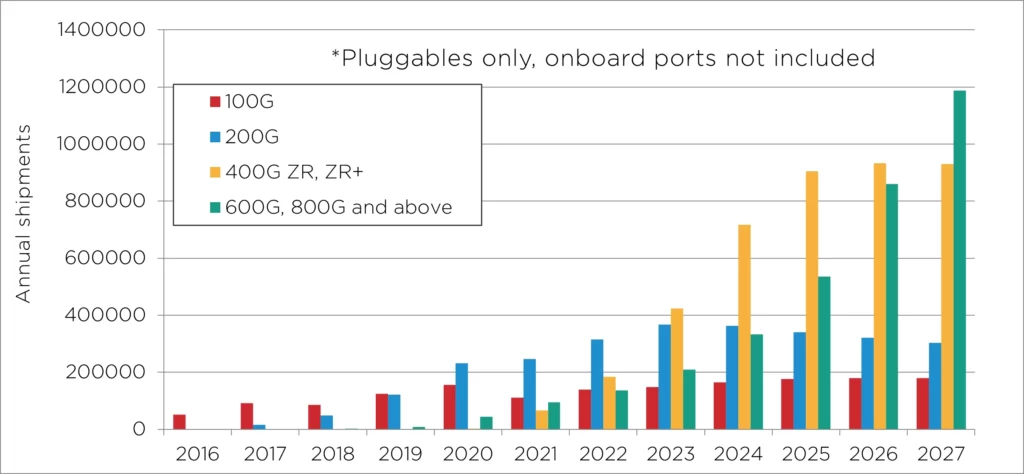

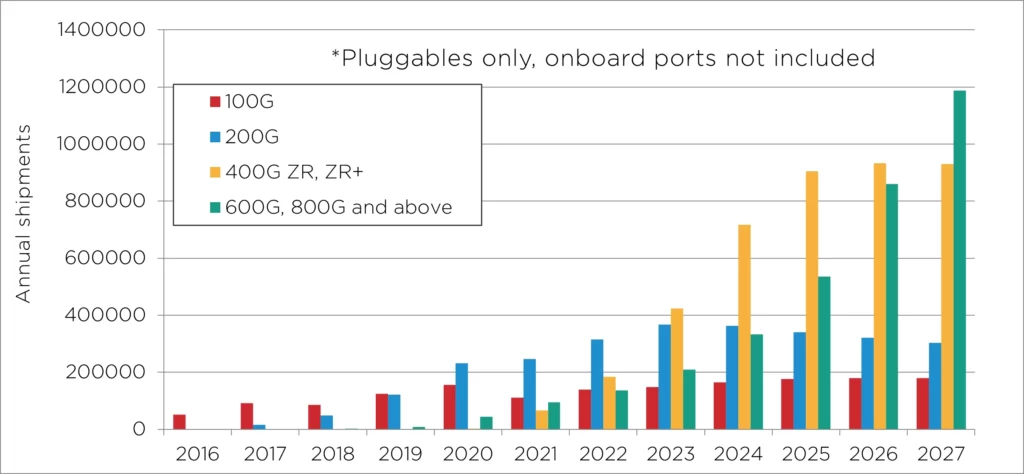

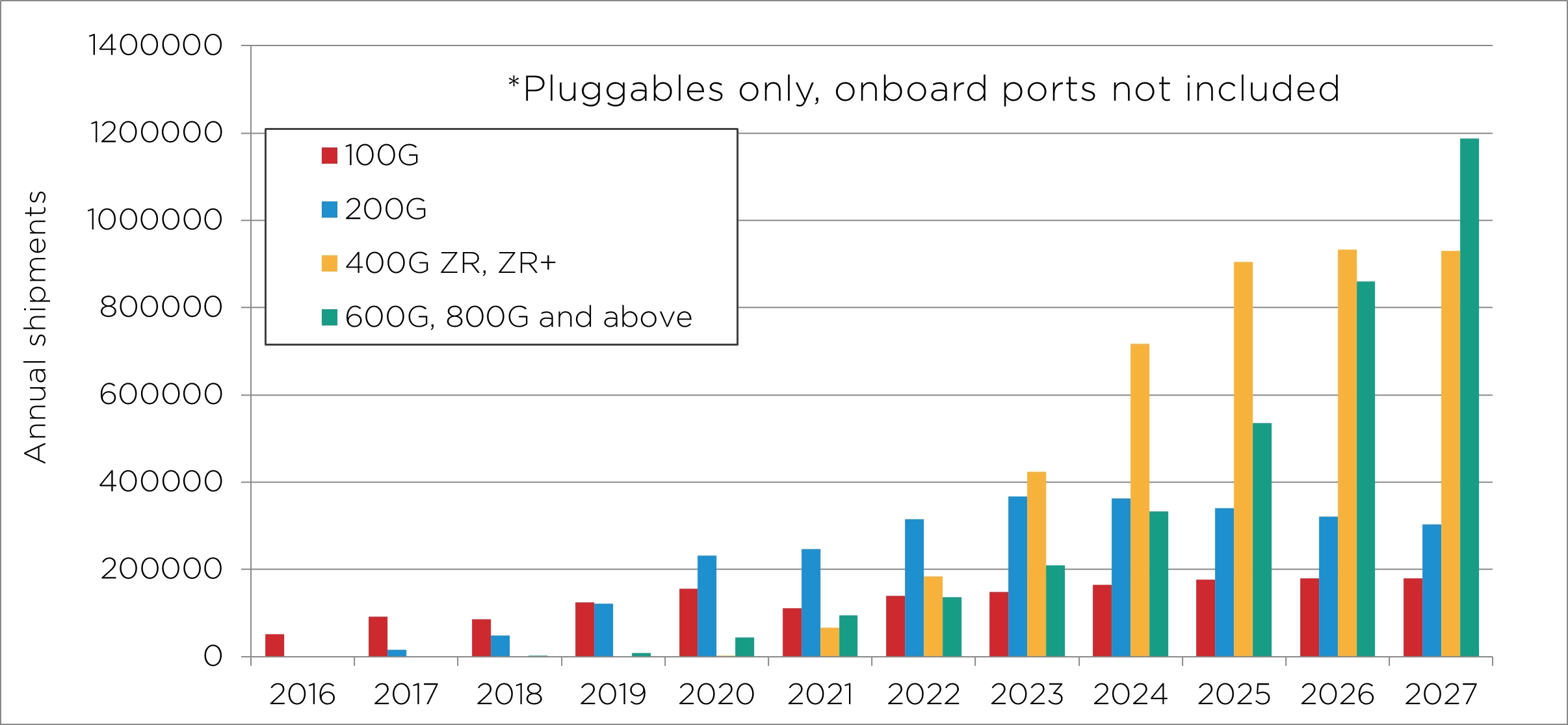

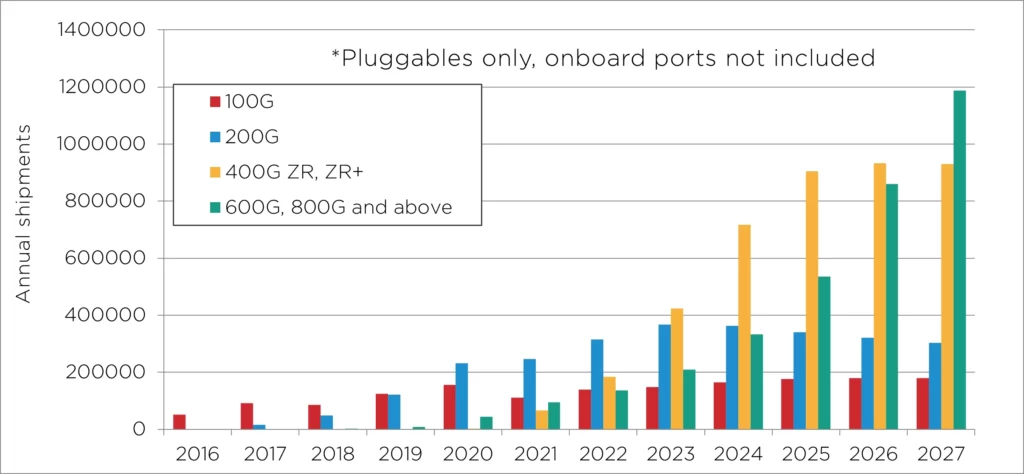

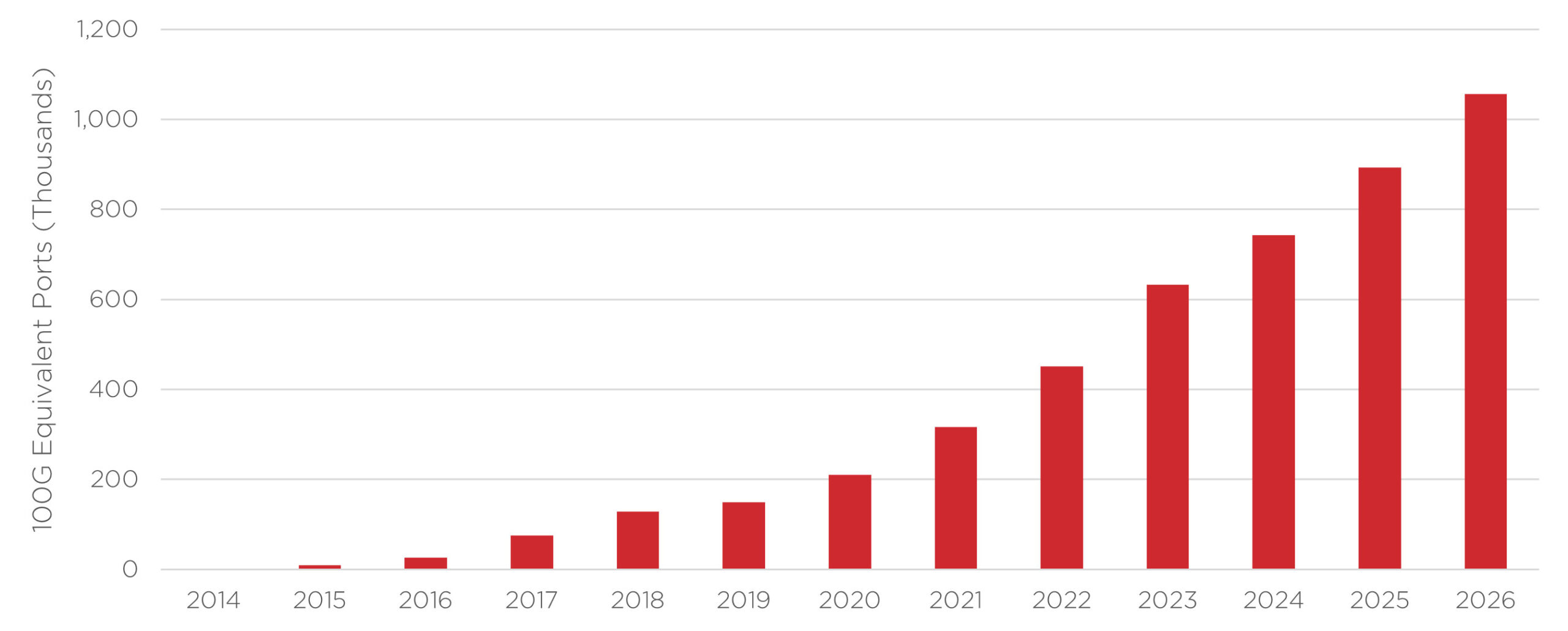

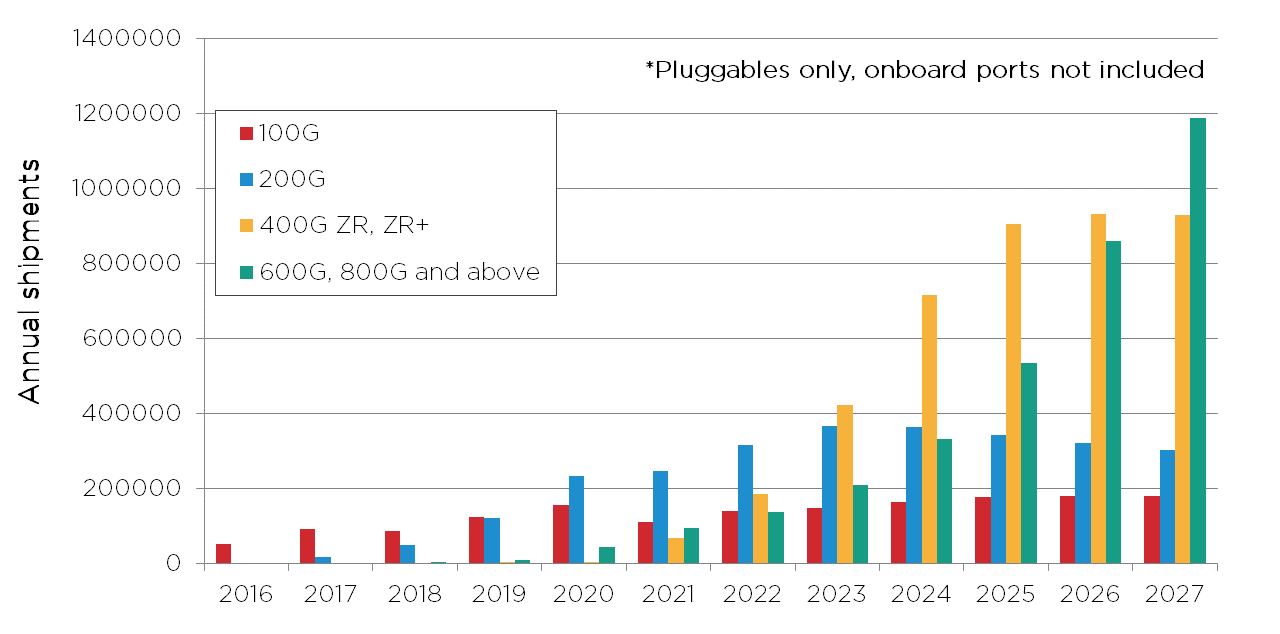

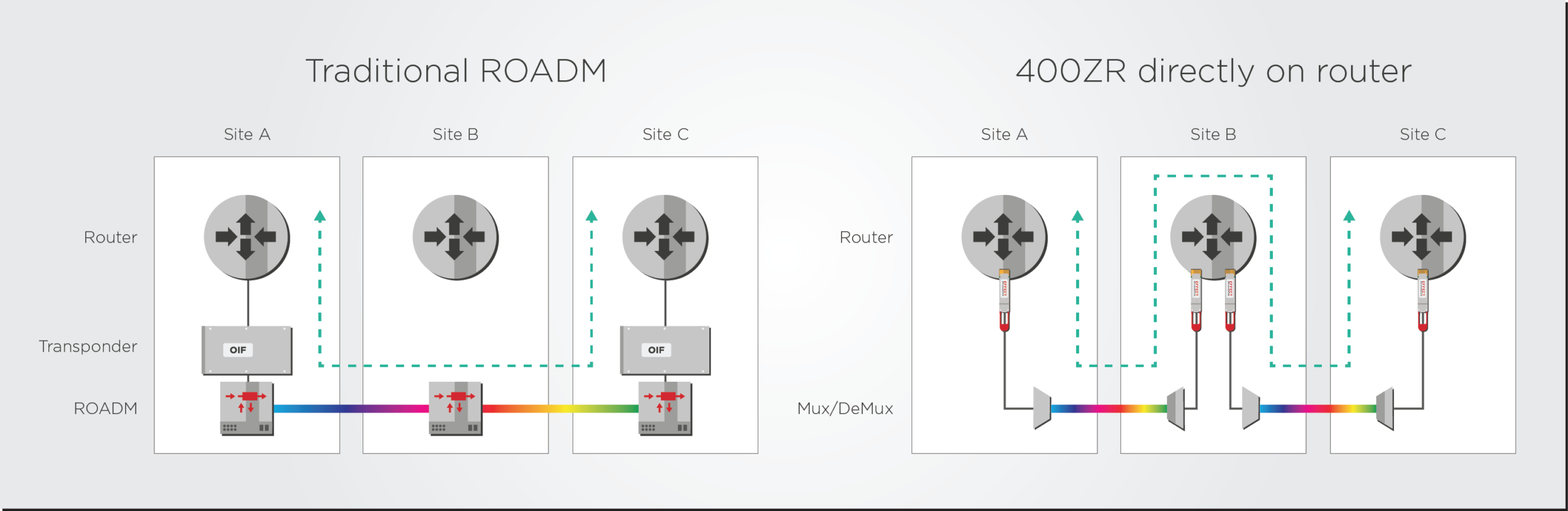

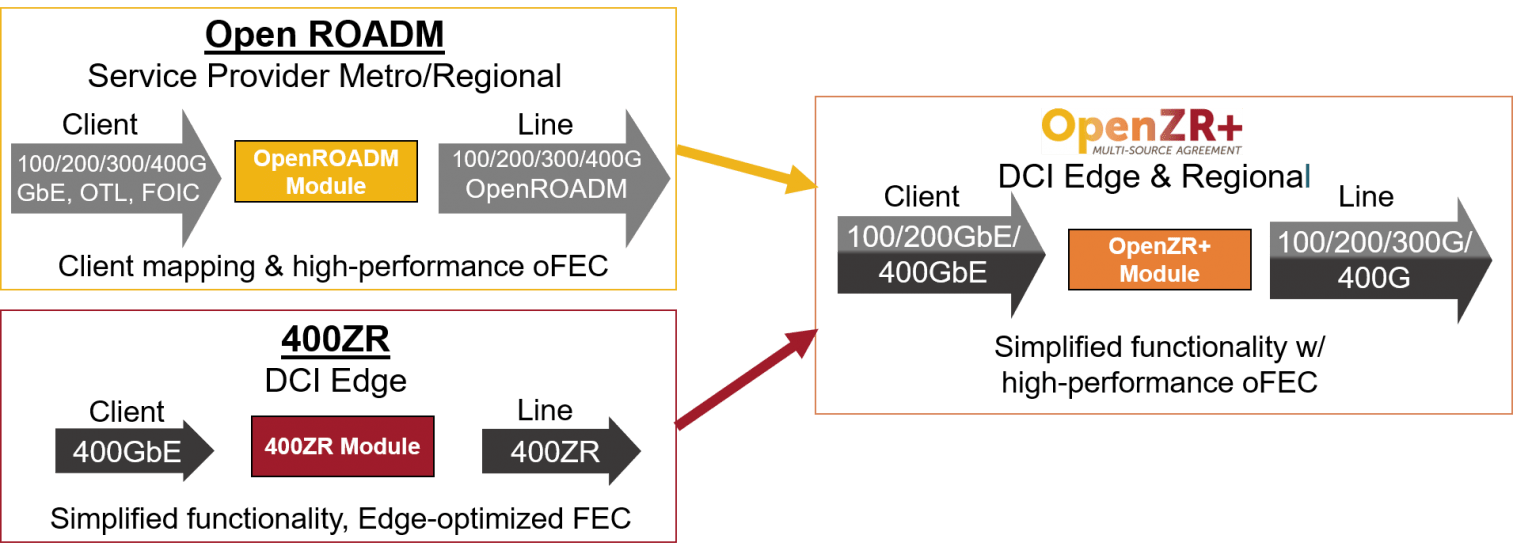

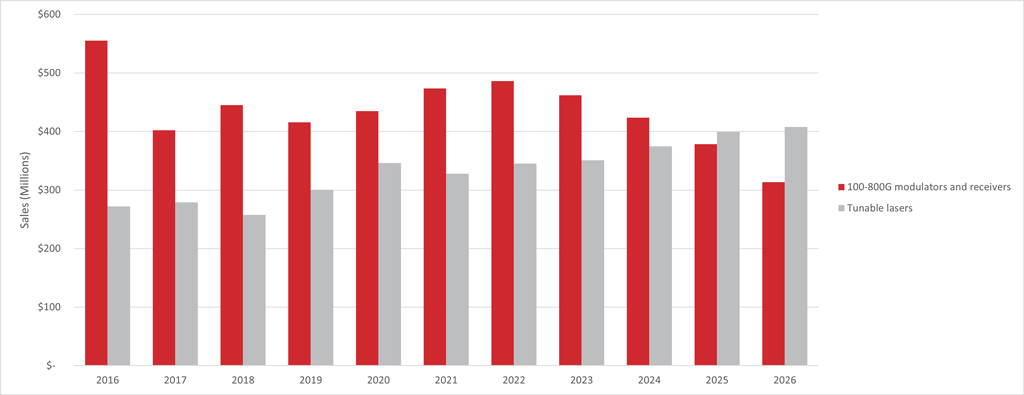

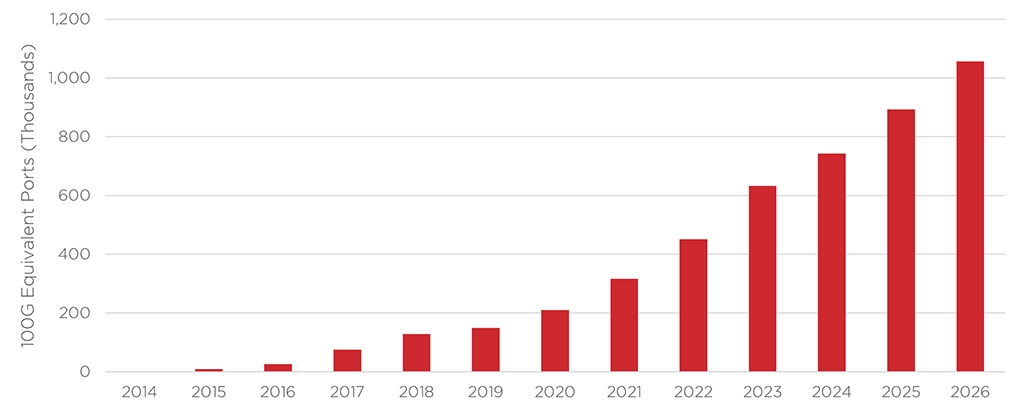

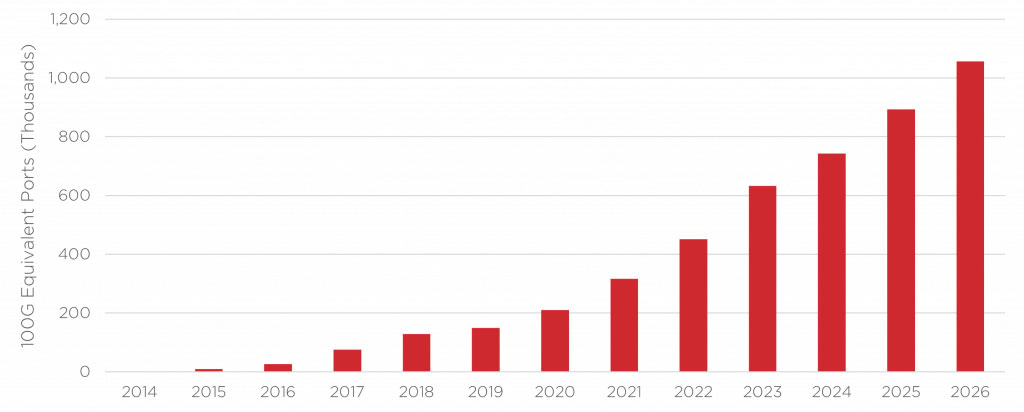

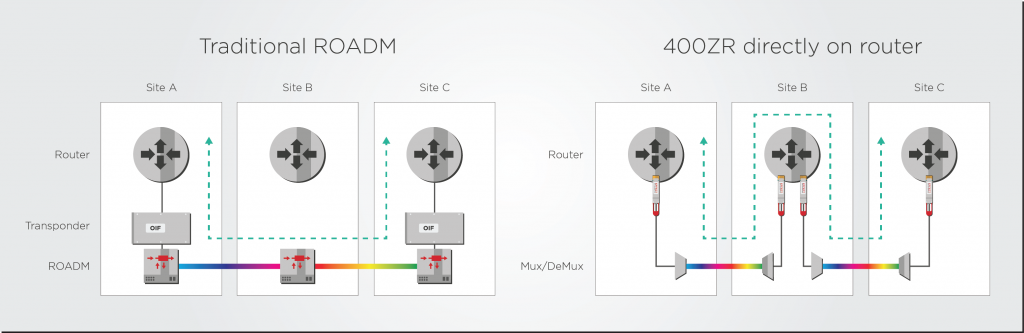

At the same time, advances in electronic and photonic integration allowed longer reach coherent technology to be miniaturized into QSFP-DD and OSFP form factors. This progress allowed the Optical Internetworking Forum (OIF) to create the 400ZR and ZR+ standards for 400G DWDM pluggable modules. With small enough modules to pack a router faceplate densely, the datacom sector could profit from a 400ZR solution for high-capacity data center interconnects of up to 80km. If needed, extended reach 400ZR+ pluggables can cover several hundreds of kilometers. Cignal AI forecasts that 400ZR shipments will dominate in the edge applications, as shown in Figure 3.

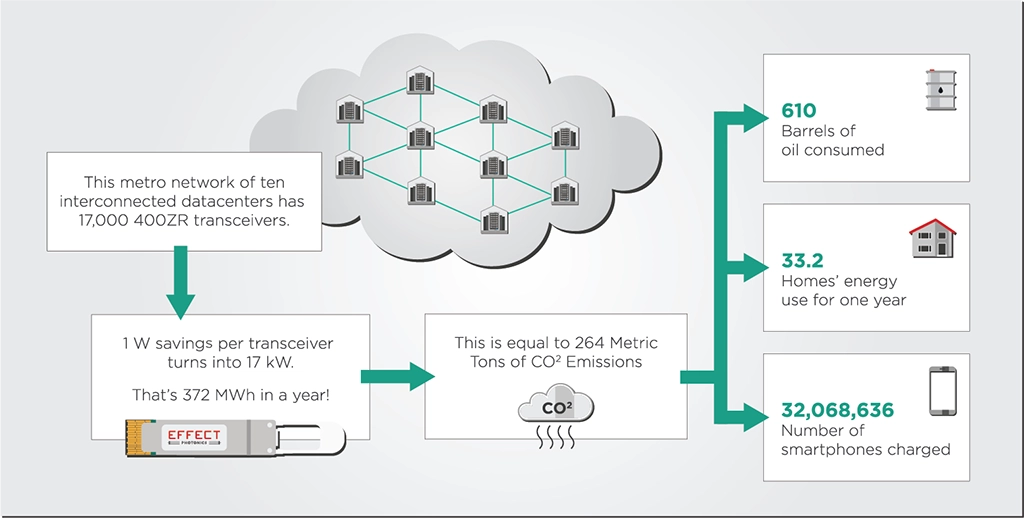

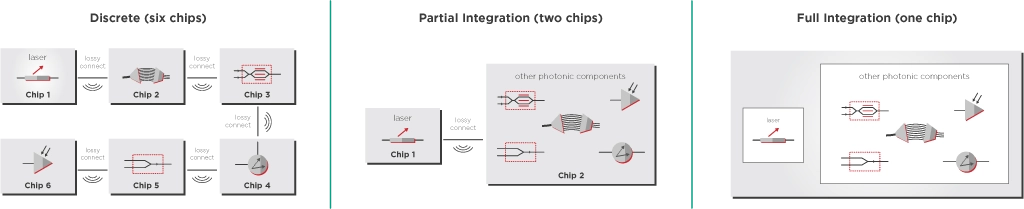

Further improvements in integration can further boost the reach and efficiency of coherent transceivers. For example, by integrating all photonic functions on a single chip, including lasers and optical amplifiers, EFFECT Photonics’ optical System-On-Chip (SoC) technology can achieve higher transmit power levels and longer distances while keeping the smaller QSFP-DD form factor, power consumption, and cost.

Takeaways

Edge computing is becoming increasingly critical for supporting AI-driven applications, such as autonomous vehicles, real-time analytics, and smart city technologies. The proximity of edge data centers to end-users allows for faster data processing, lower latency, and improved data privacy. This shift is driven by the growing need for real-time decision-making capabilities and compliance with data protection regulations. As AI workloads continue to expand, edge data centers provide a decentralized solution that alleviates the pressure on centralized cloud infrastructures and optimizes network resources.

Tags: EFFECT Photonics, Photonics

Leveraging Electronic Ecosystems in Photonics

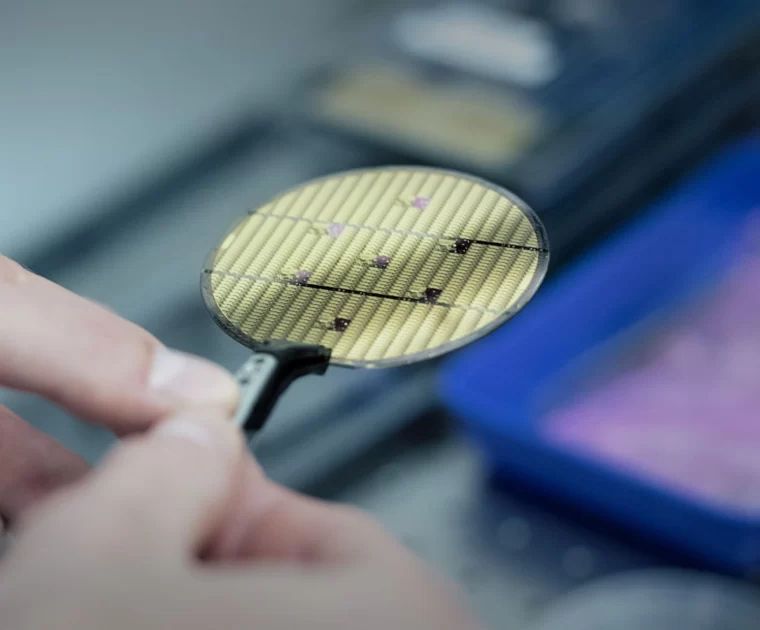

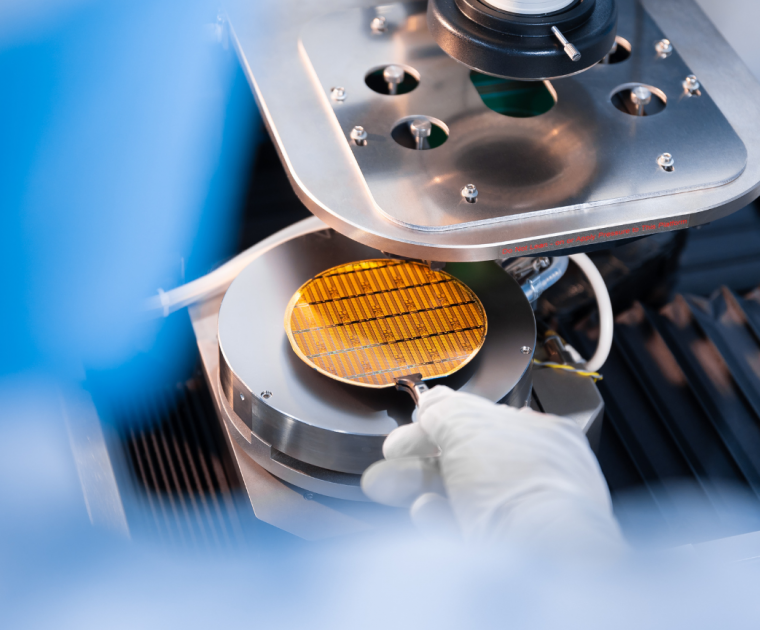

(First published on 2nd November 2022, updated 4th September 2024)

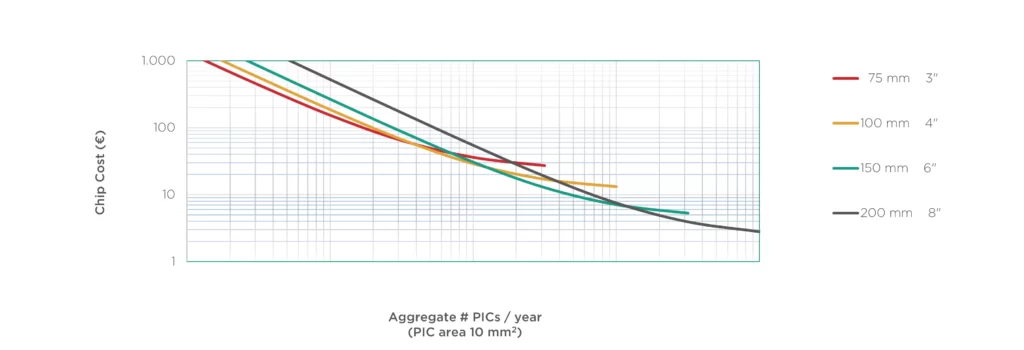

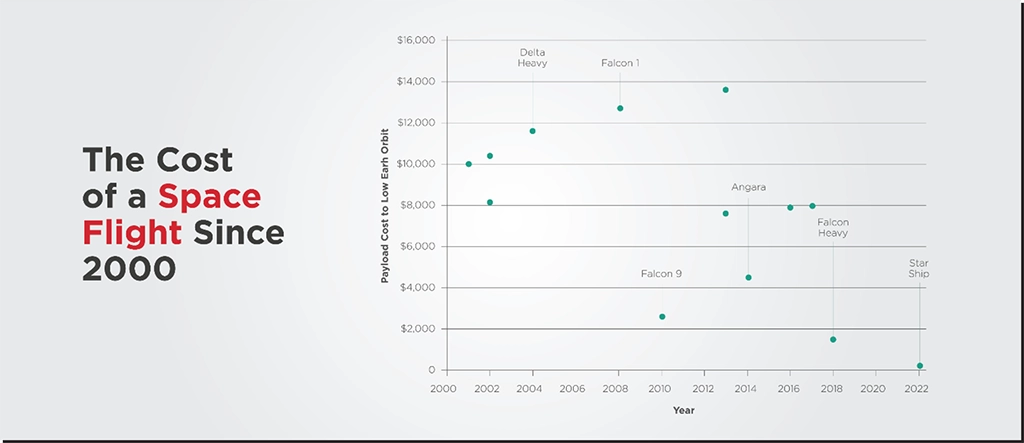

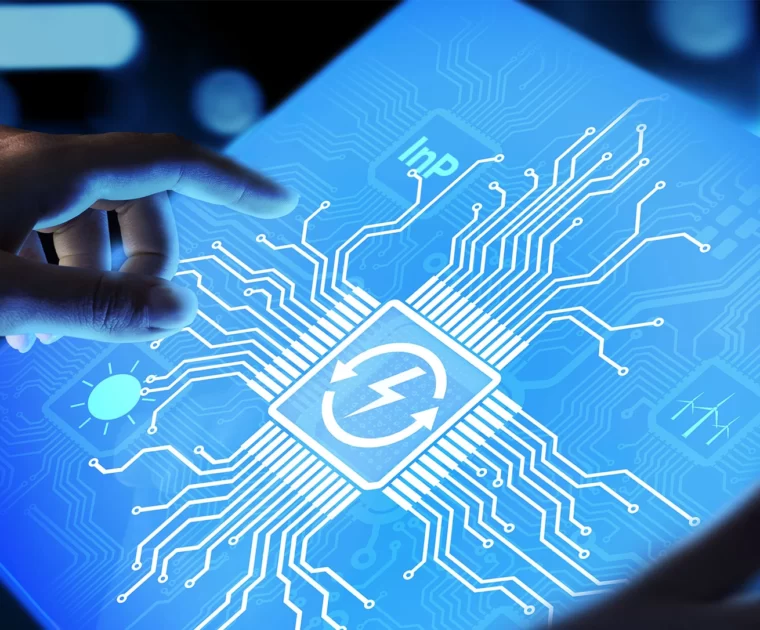

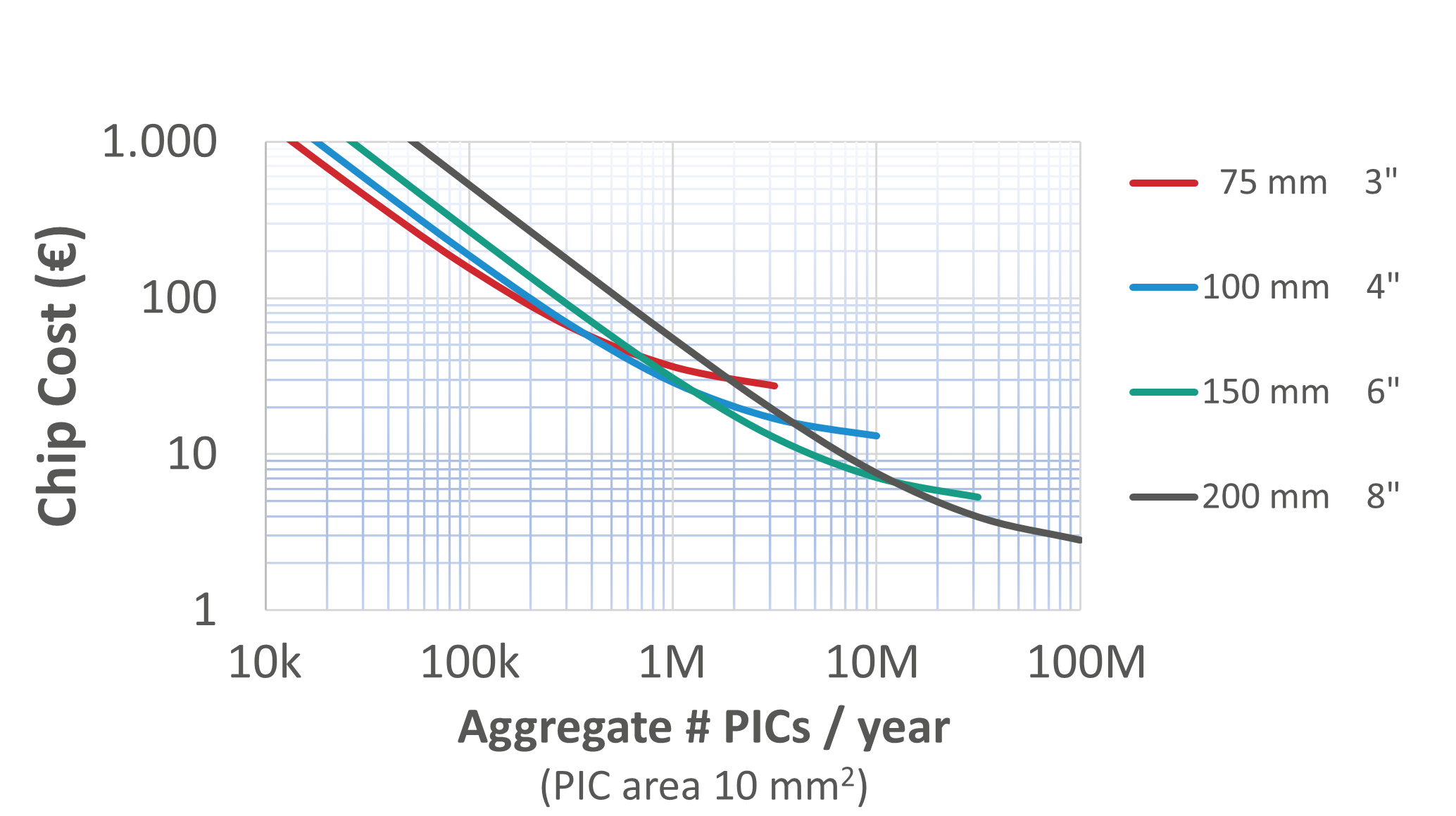

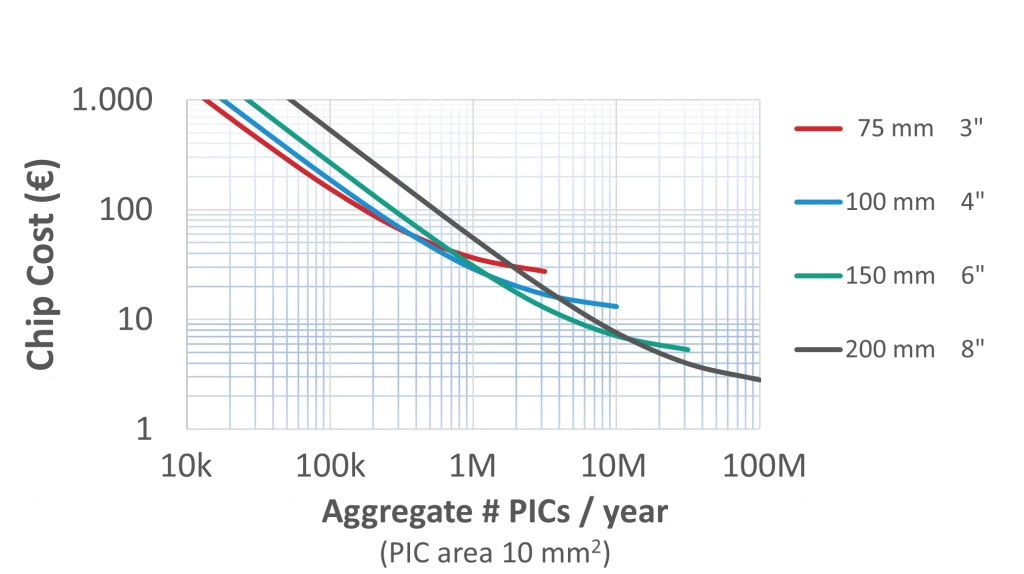

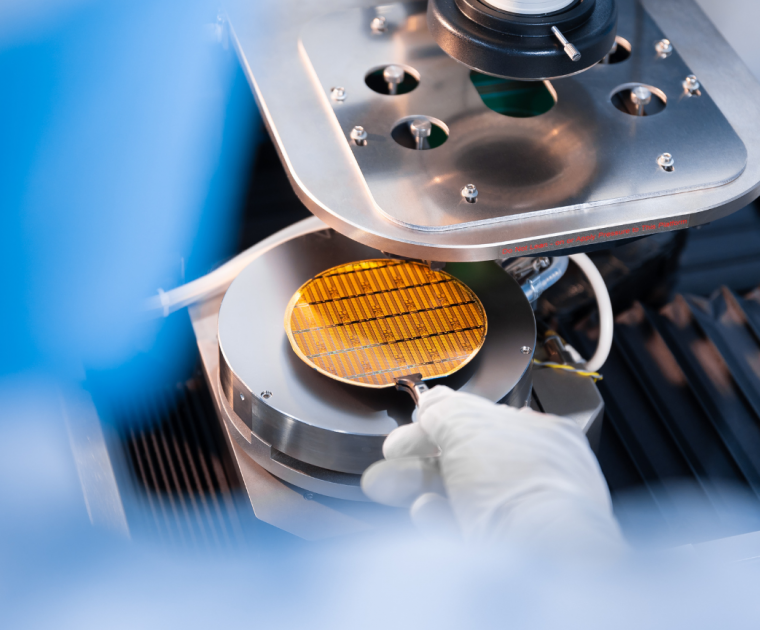

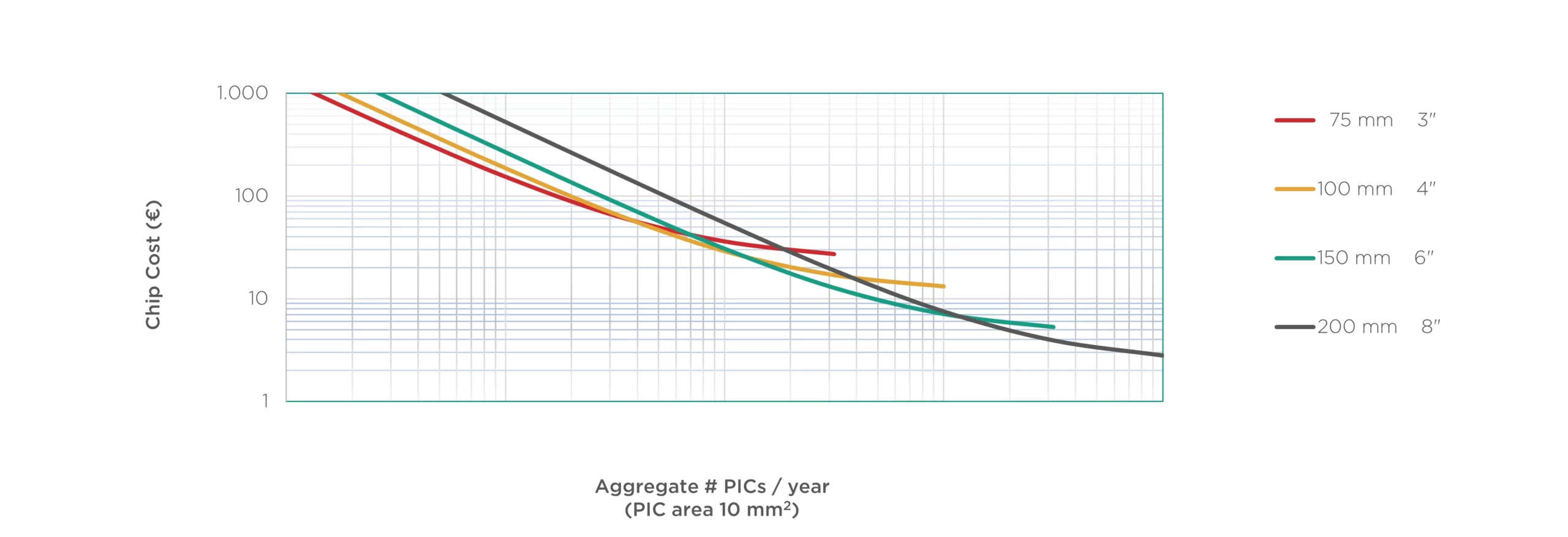

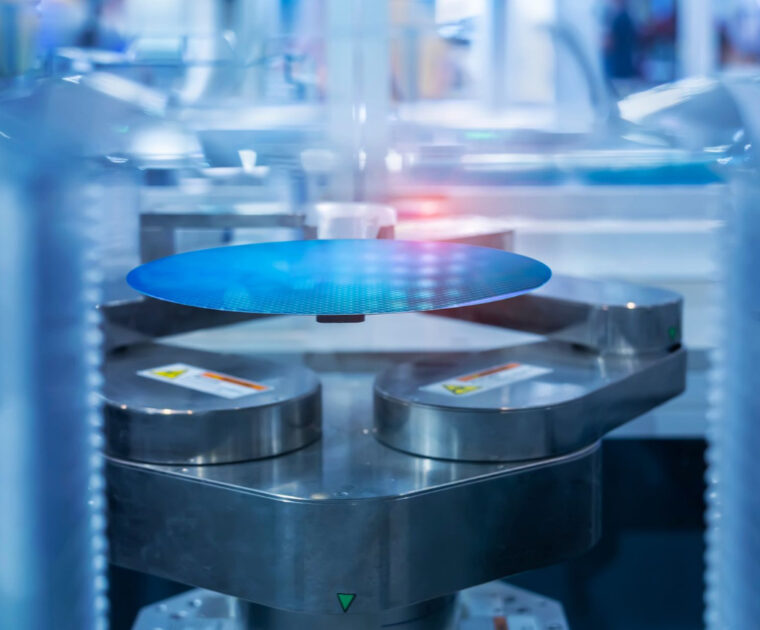

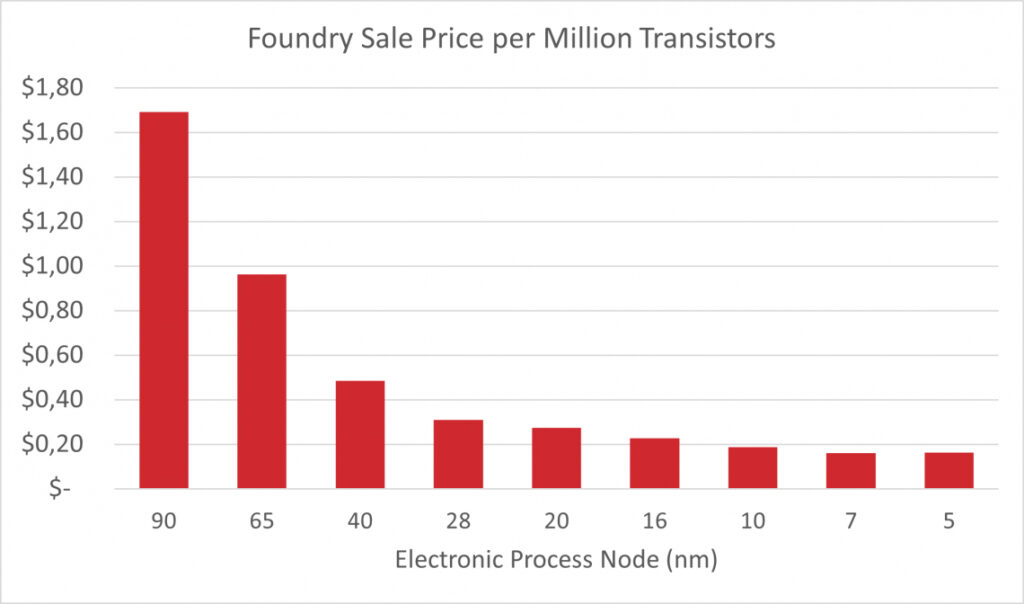

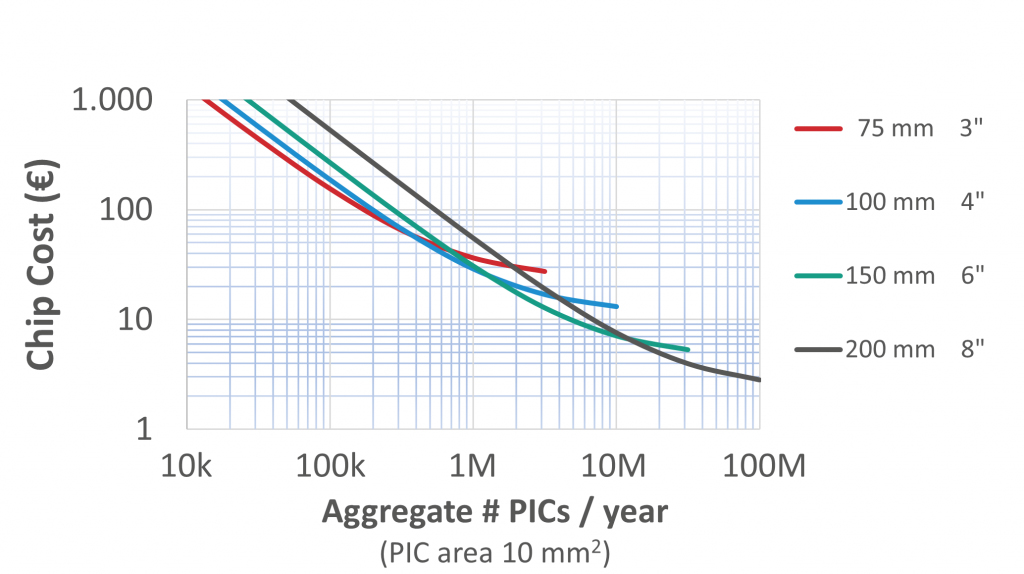

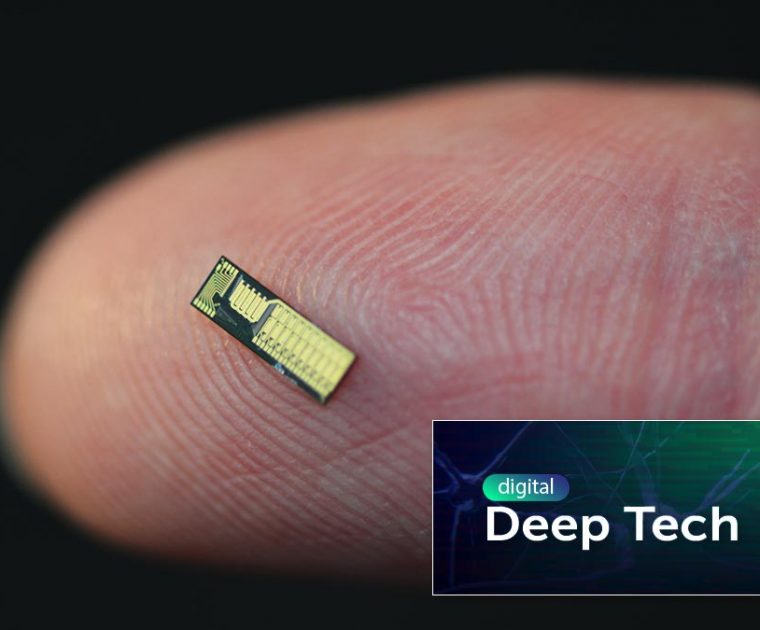

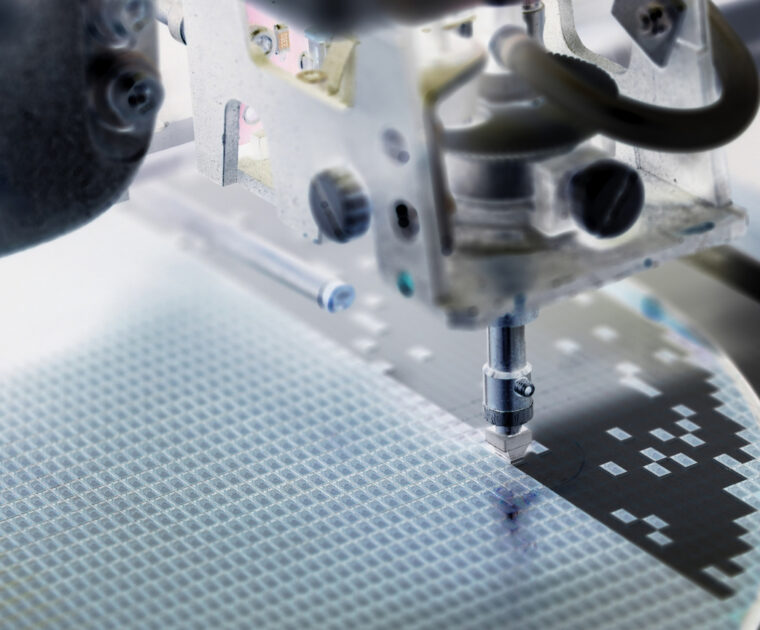

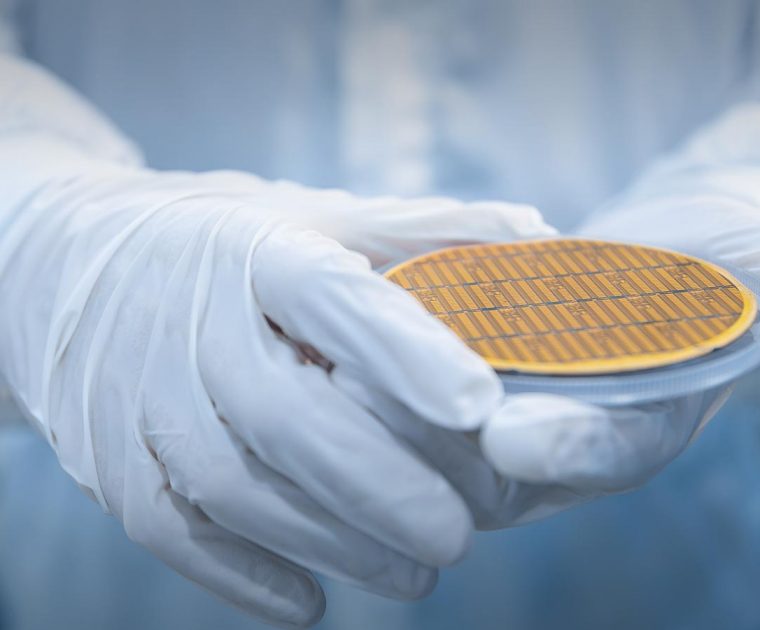

Thanks to wafer-scale technology, electronics have driven down the cost per transistor for many decades. This allowed the world to enjoy chips that became smaller with every generation and provided exponentially more computing power for the same amount of money. This scale-up process is how everyone now has a computer processor in their pocket that is millions of times more powerful than the most advanced computers of the 1960s that landed men on the moon.

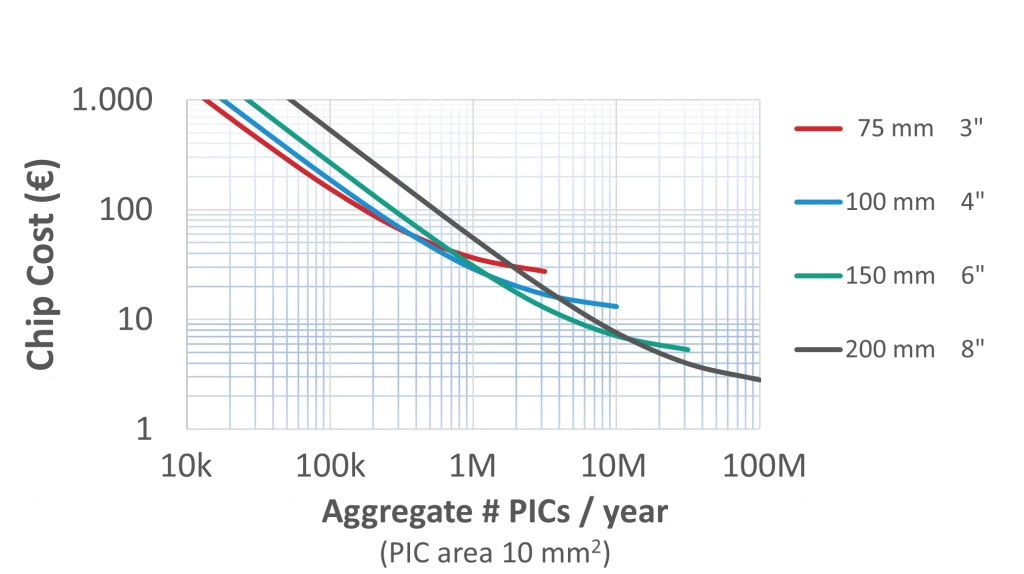

This progress in electronics integration is a key factor that brought down the size and cost of coherent transceivers, packing more bits than ever into smaller areas. However, photonics has struggled to keep up with electronics, with the photonic components dominating the cost of transceivers. If the transceiver cost curve does not continue to decrease, it will be challenging to achieve the goal of making them more accessible across the entire optical network.

To trigger a revolution in the use of photonics worldwide, it needs to be as easy to use as electronics. In the words of our Chief Technology Officer, Tim Koene-Ong:

“We need to buy photonics products from a catalog as we do with electronics, have datasheets that work consistently, be able to solder it to a board and integrate it easily with the rest of the product design flow.”

Tim Koene-Ong, Chief Technology Officer.

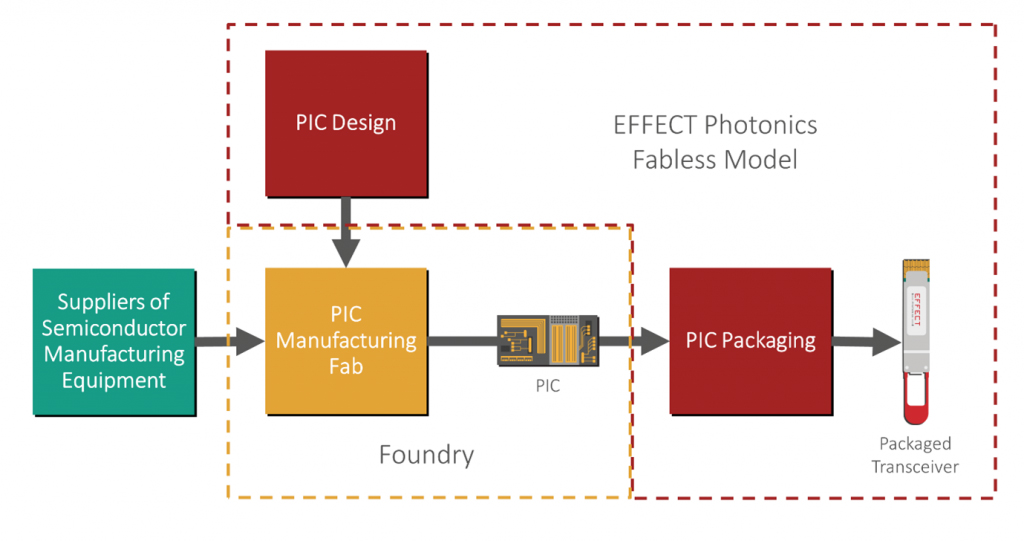

This goal requires photonics manufacturing to leverage existing electronics manufacturing processes and ecosystems. Photonics must embrace fabless models, chips that can survive soldering steps, and electronic packaging and assembly methods.

The Advantages of a Fabless Model

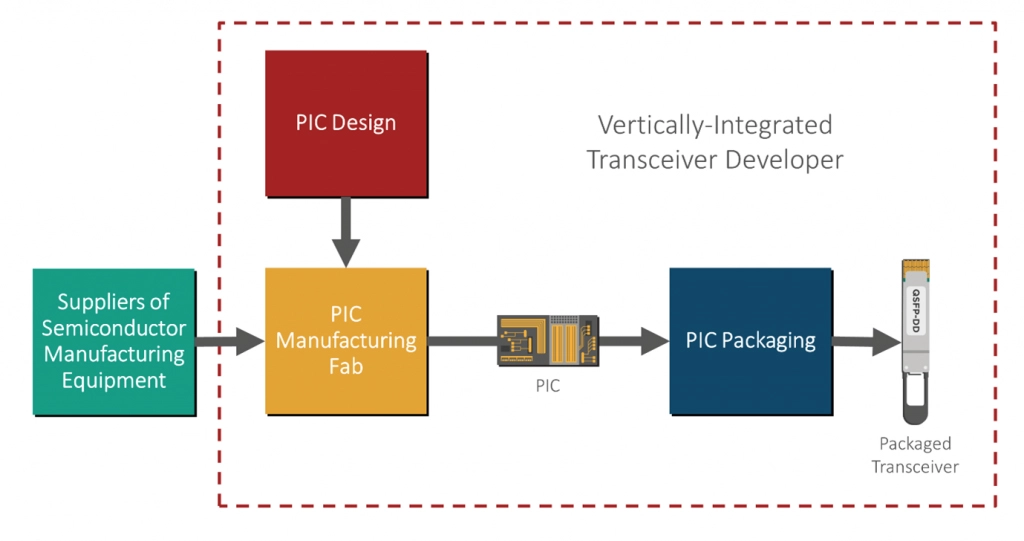

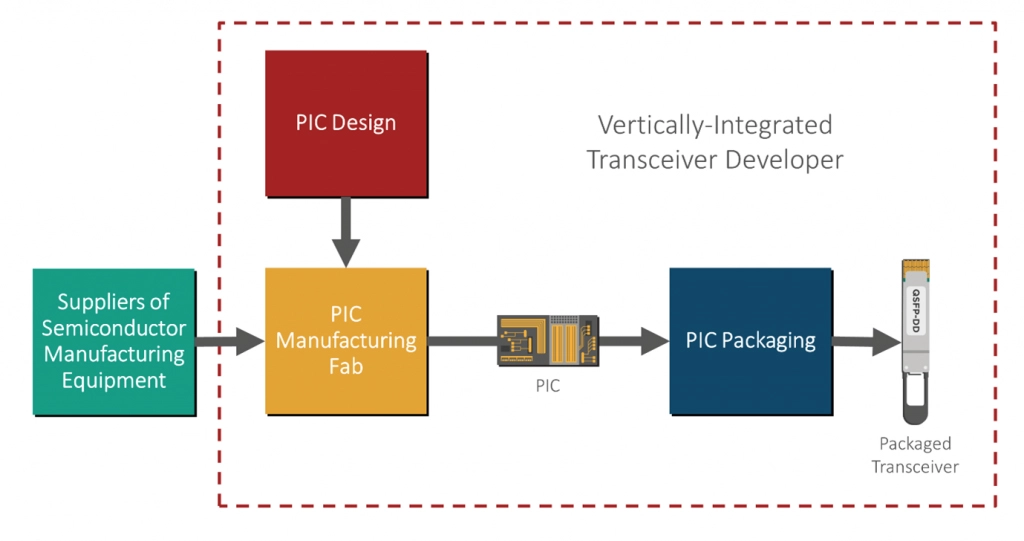

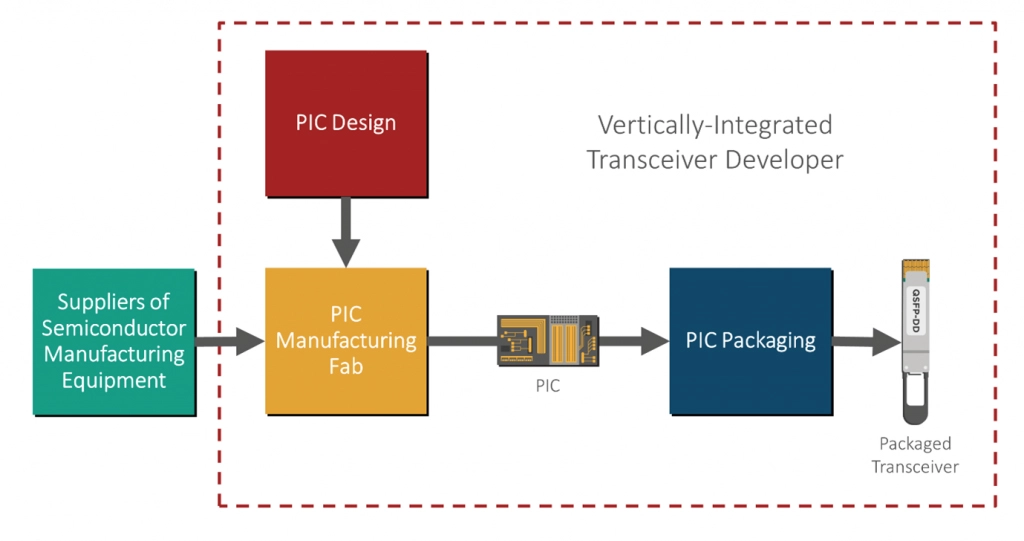

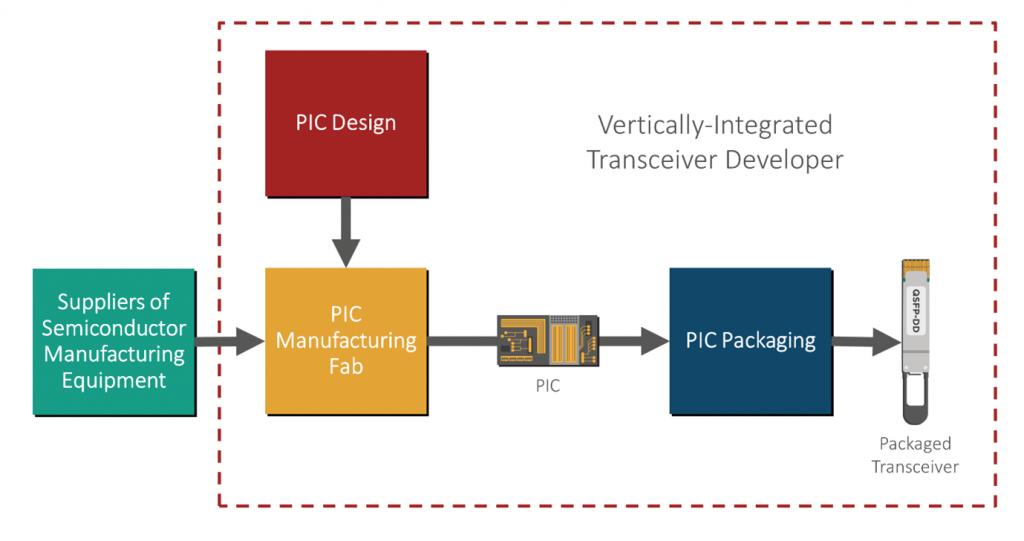

Increasing the volume of photonics manufacturing is a big challenge. Some photonic chip developers manufacture their chips in-house within their fabrication facilities. This approach has substantial advantages, giving component manufacturers complete control over their production process.

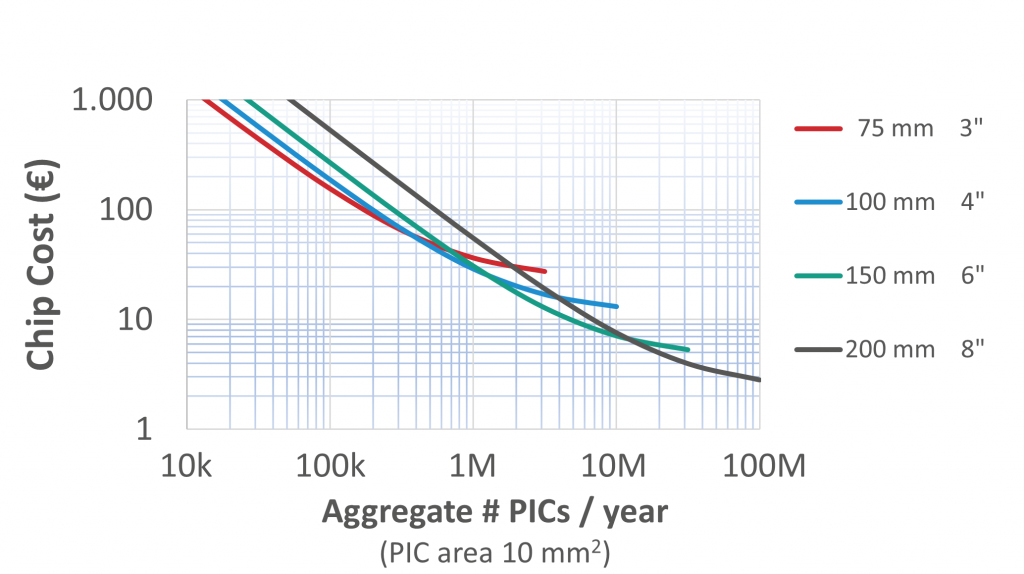

However, this approach has its trade-offs when scaling up. If a vertically integrated chip developer wants to scale up in volume, they must make a hefty capital expenditure (CAPEX) in more equipment and personnel. They must develop new fabrication processes as well as develop and train personnel. Fabs are expensive not only to build but also to operate. Unless they can be kept at nearly full utilization, operating expenses (OPEX) also drain the facility owners’ finances.

Especially in the case of an optical transceiver market that is not as big as that of consumer electronics, it’s hard not to wonder whether that initial investment is cost-effective. For example, LightCounting research data estimates that 173 million optical Ethernet transceivers were sold in 2023, while the International Data Corporation estimates that 1.17 billion smartphones were sold in 2023. The latter figure is almost seven times the number of optical Ethernet transceivers sold.

Electronics manufacturing experienced a similar problem during its boom in the 1970s and 80s, with smaller chip start-ups facing almost insurmountable barriers to market entry because of the massive CAPEX required. Furthermore, the large-scale electronics manufacturing foundries had excess production capacity that drained their OPEX. The large-scale foundries sold that excess capacity to the smaller chip developers, who became fabless. In this scenario, everyone ended up winning. The foundries serviced multiple companies and could run their facilities at full capacity, while the fabless companies could outsource manufacturing and reduce their expenditures.

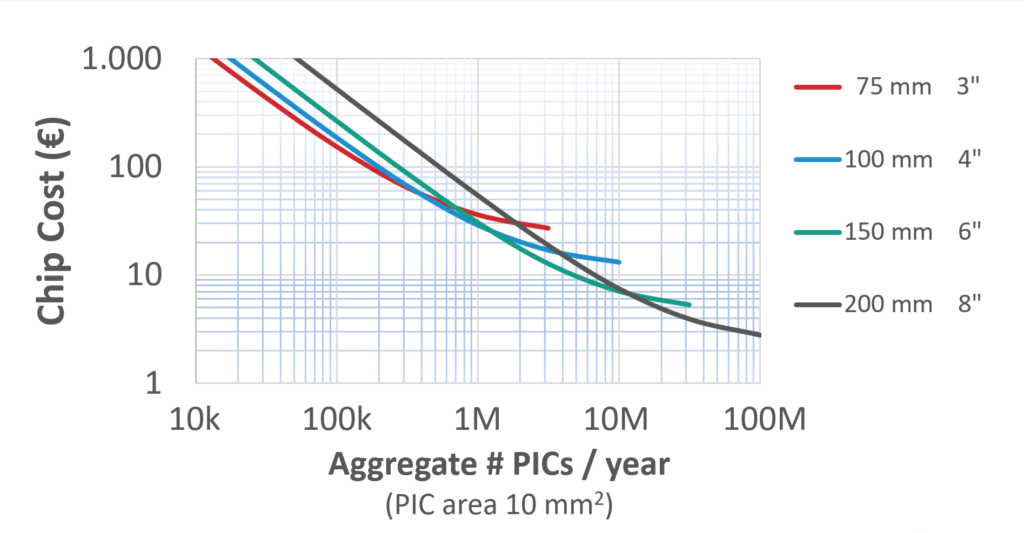

This fabless model, with companies designing and selling the chips but outsourcing the manufacturing, should also be the way for photonics. Instead of going through a more costly, time-consuming process, the troubles of scaling up for photonics developers are outsourced and (from the perspective of the fabless company) become as simple as putting a purchase order in place. Furthermore, the fabless model allows photonics developers to concentrate their R&D resources on the end market. This is the simplest way forward if photonics moves into million-scale volumes.

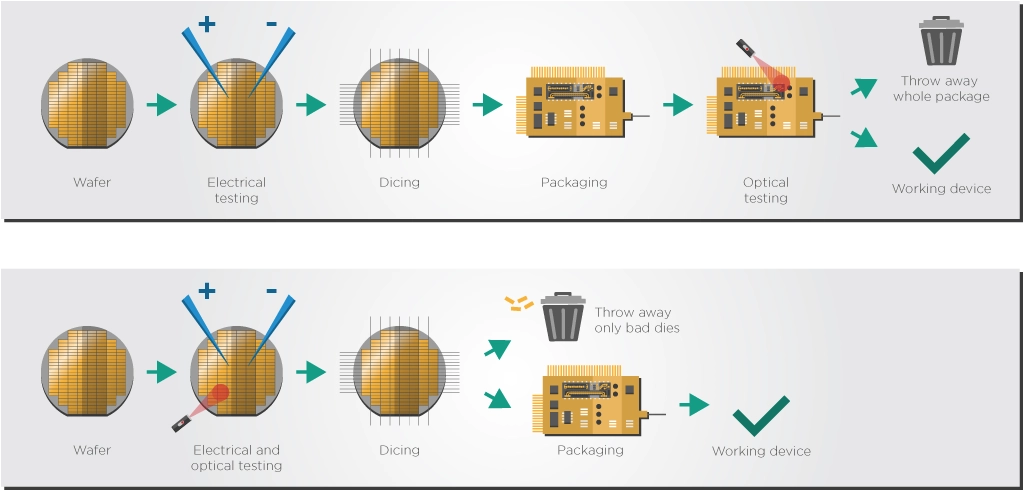

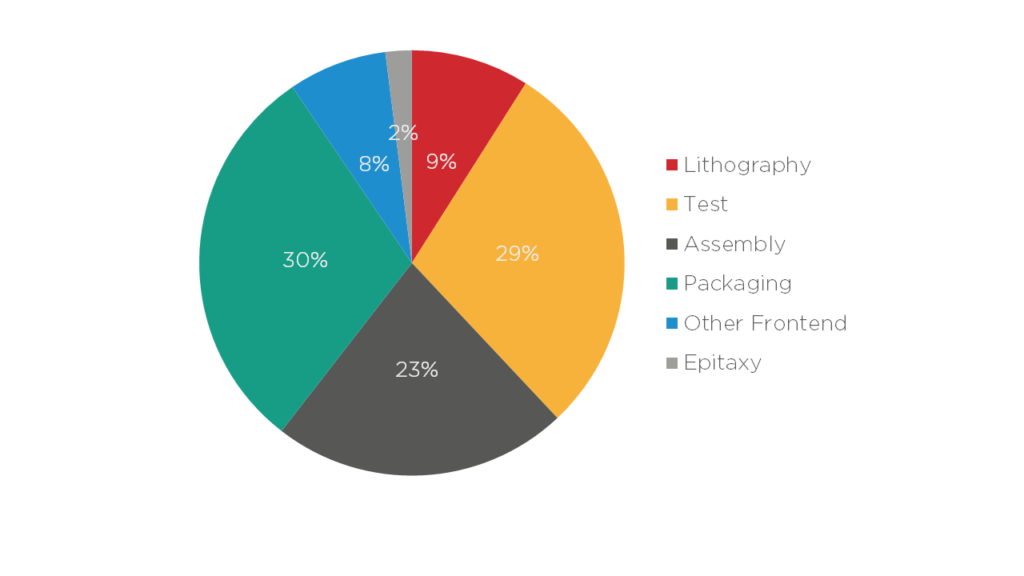

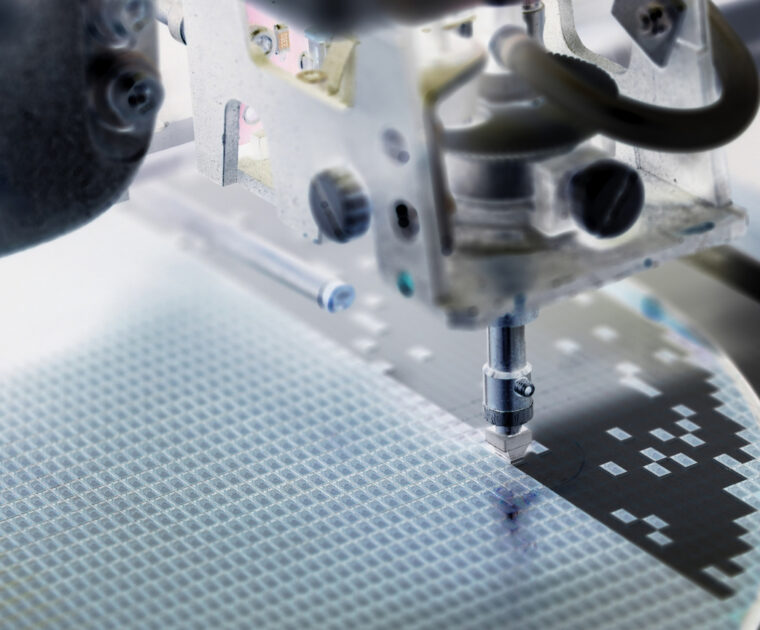

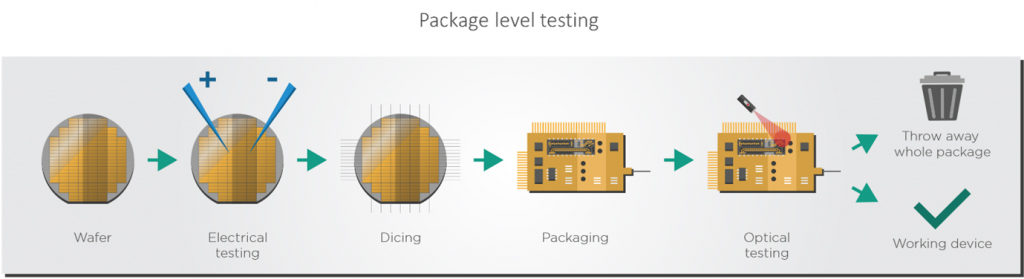

Adopting Electronics-Style Packaging

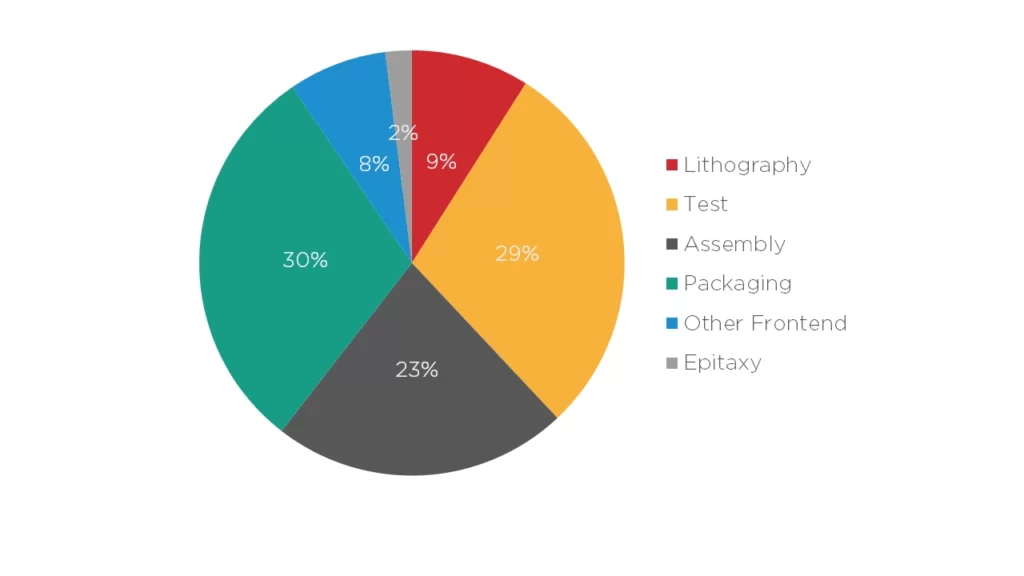

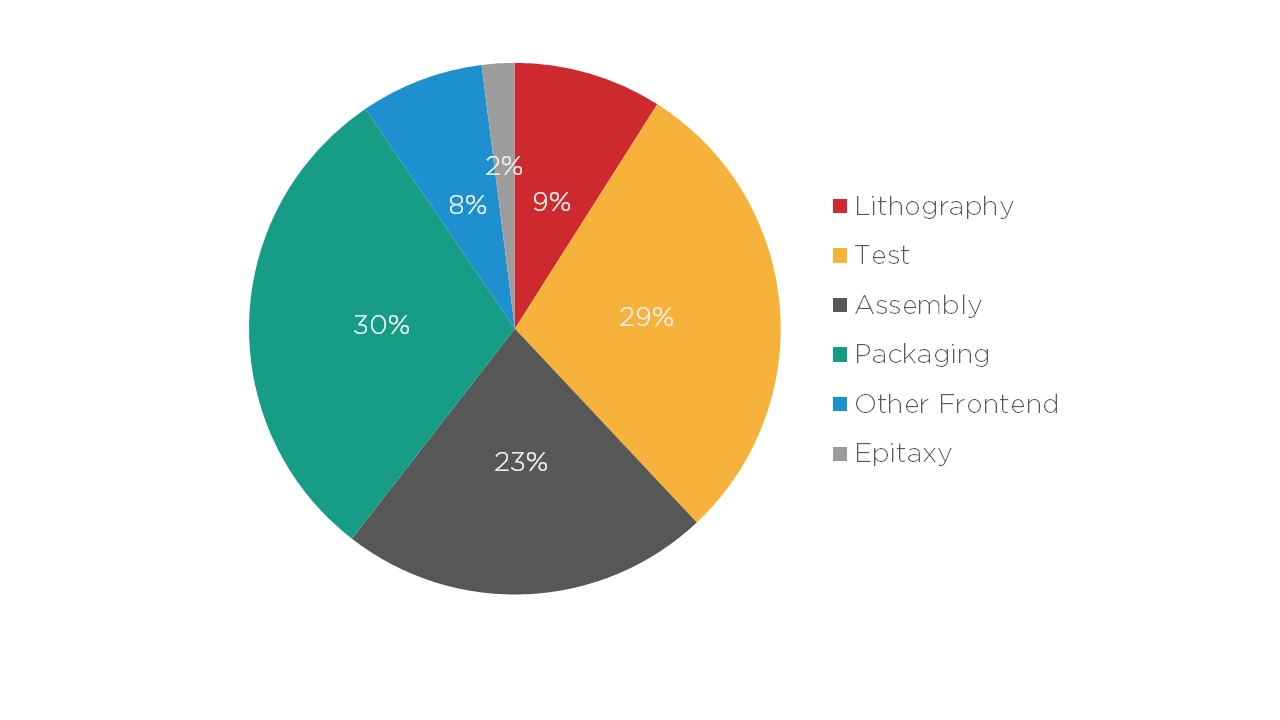

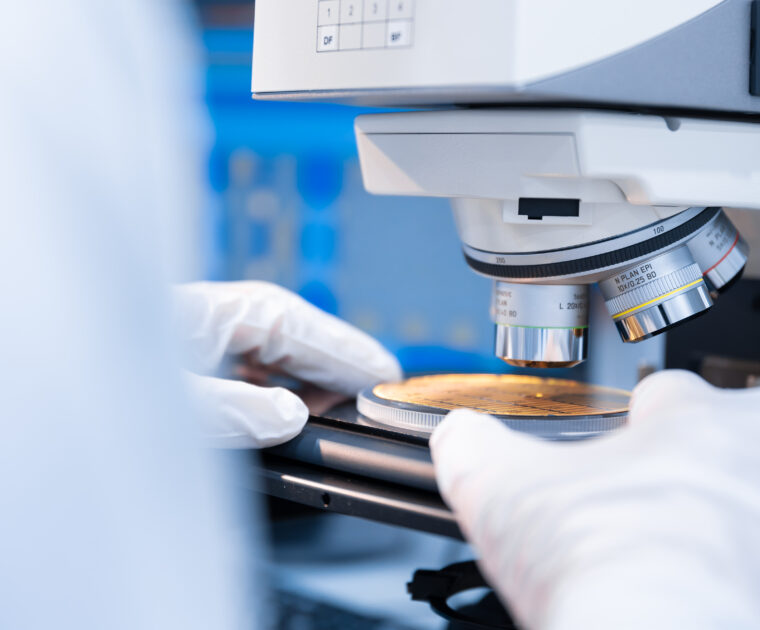

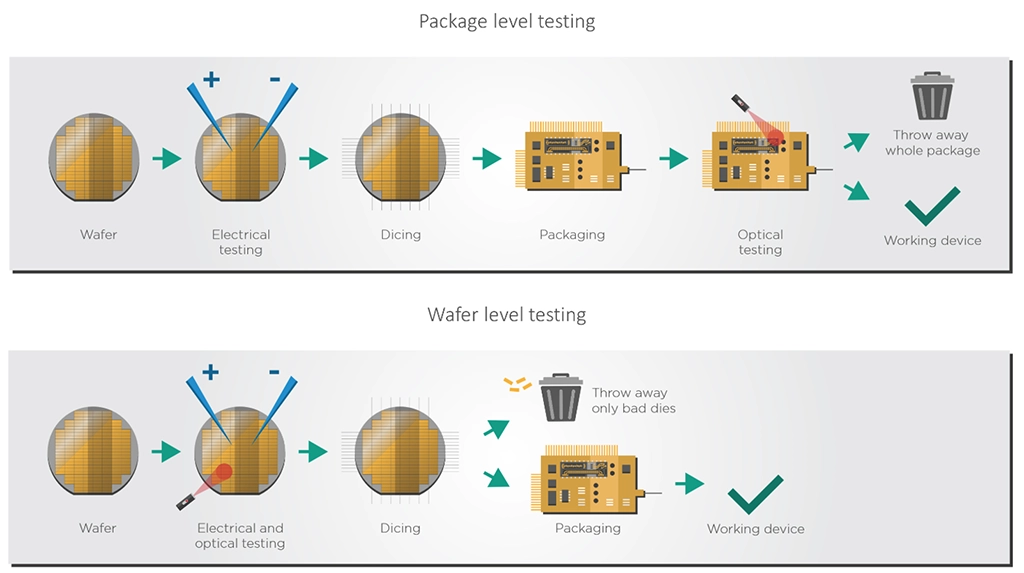

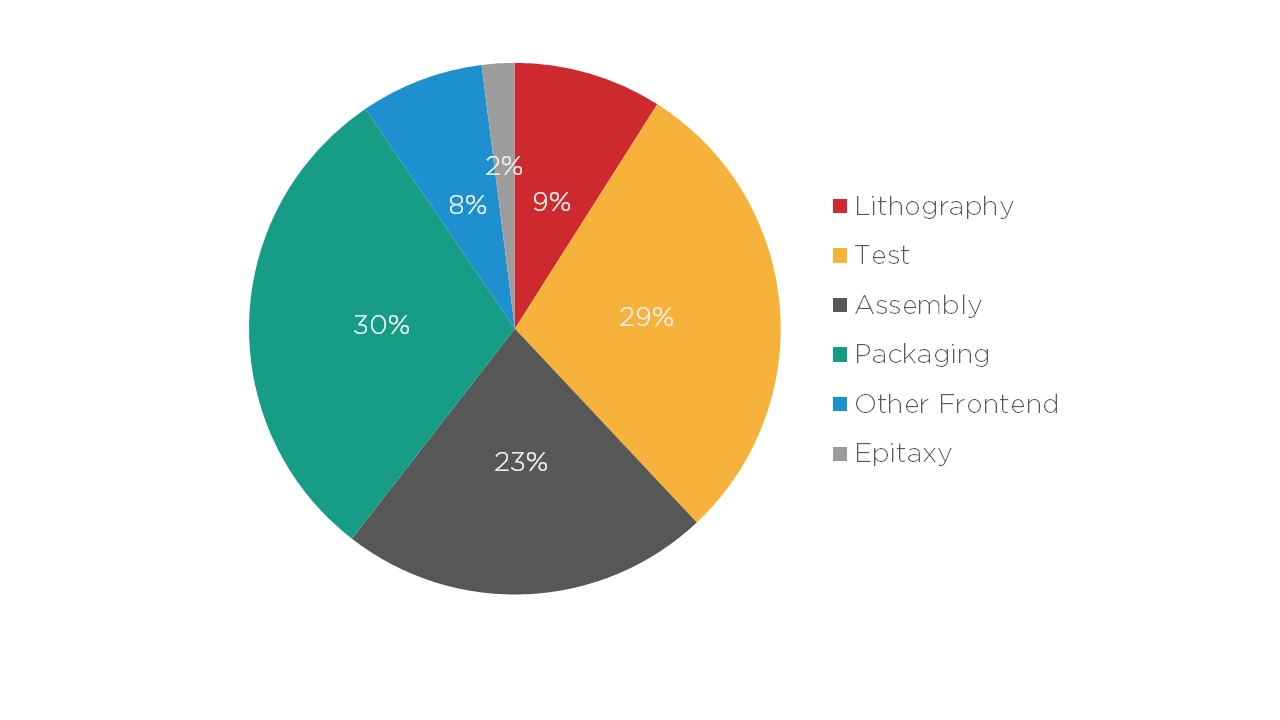

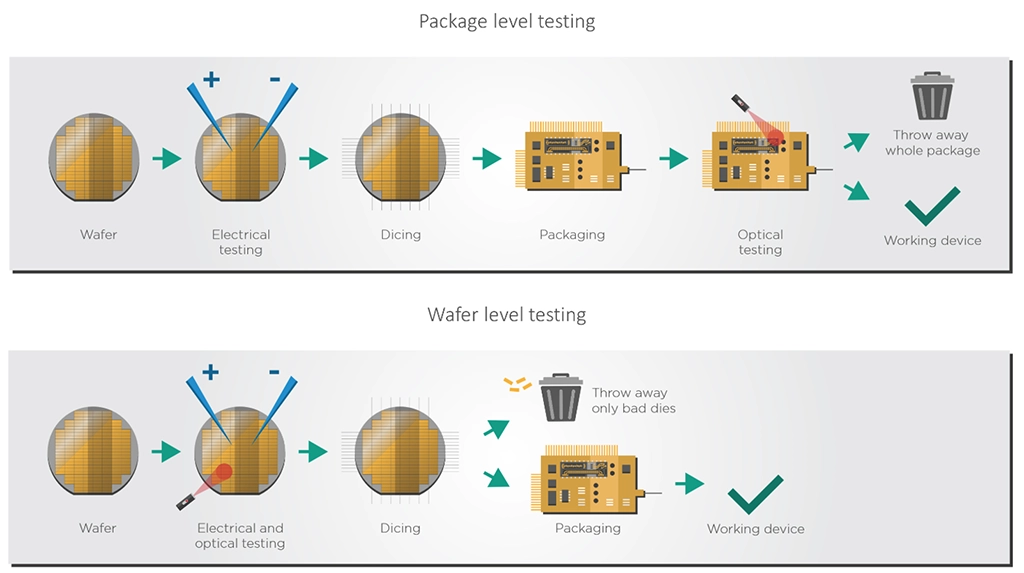

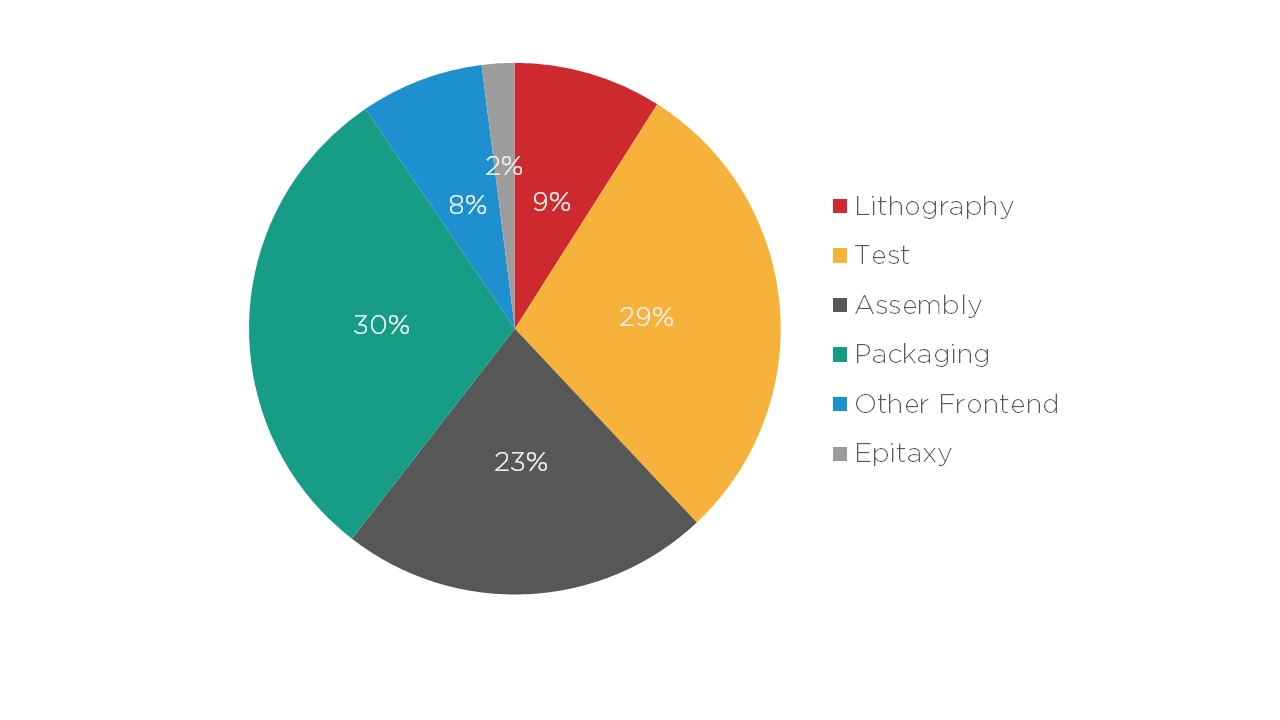

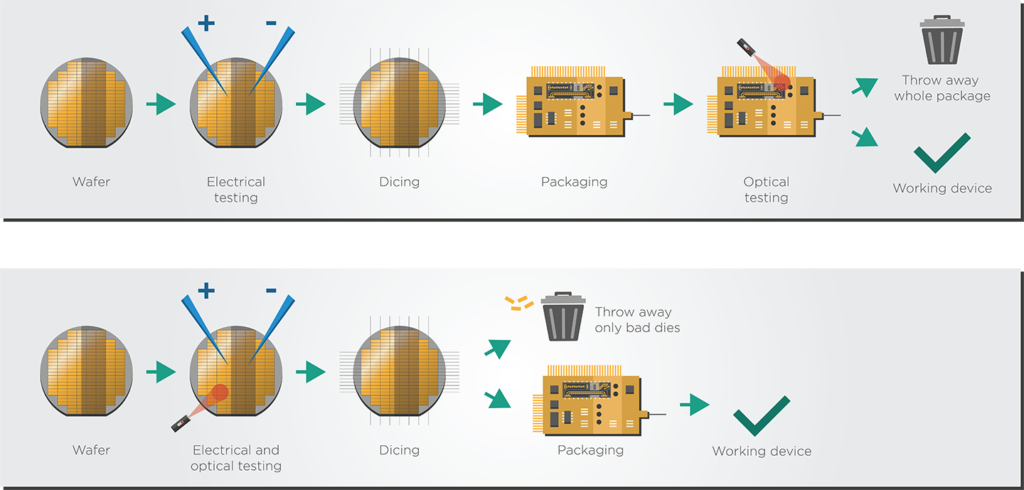

While packaging, assembly, and testing are only a small part of the cost of electronic systems, the reverse happens with photonic integrated circuits (PICs). Researchers at the Technical University of Eindhoven (TU/e) estimate that for most Indium Phosphide (InP) photonics devices, the cost of packaging, assembly, and testing can reach around 80% of the total module cost.

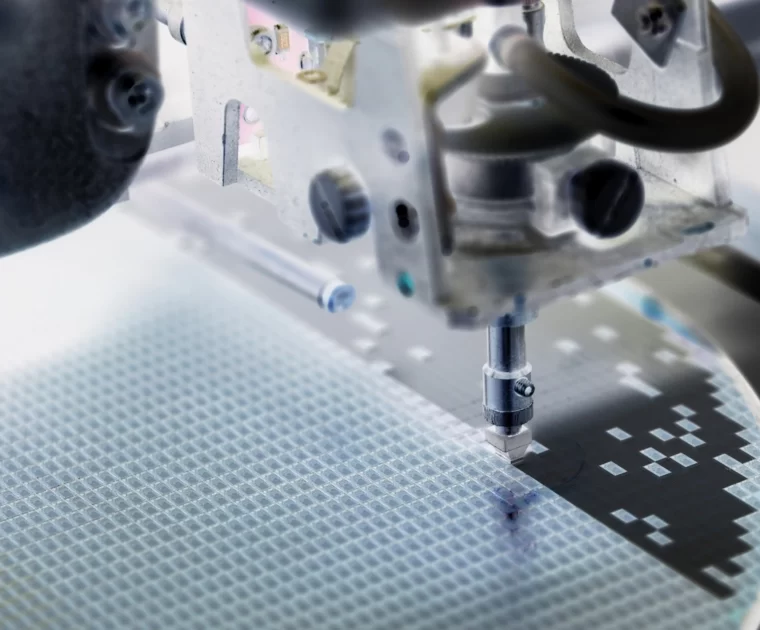

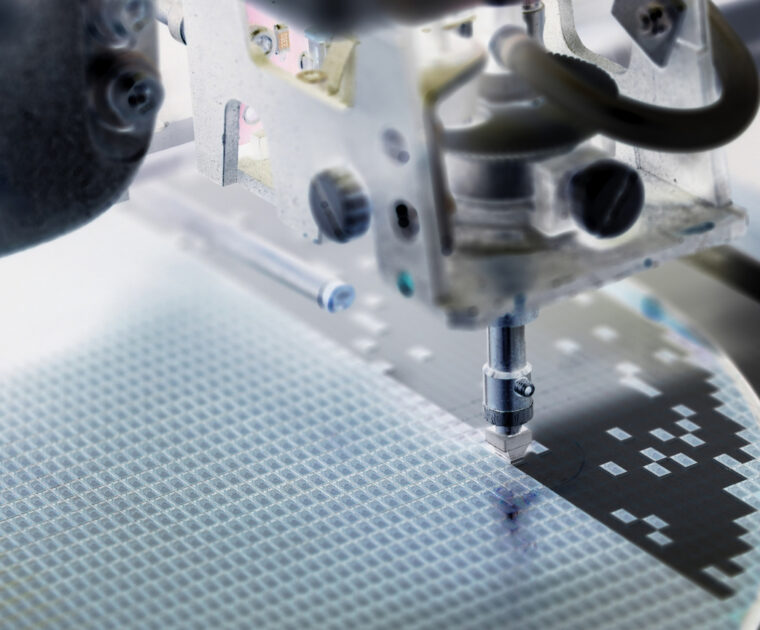

To become more accessible and affordable, the photonics manufacturing chain must become more automated and standardized. The lack of automation makes manufacturing slower and prevents data collection that can be used for process control, optimization, and standardization.

One of the best ways to reach these automation and standardization goals is to learn from electronics packaging, assembly, and testing methods that are already well-known and standardized. After all, building a special production line is much more expensive than modifying an existing production flow.

There are several ways in which photonics packaging, assembly, and testing can be made more affordable and accessible. Below are a few examples:

- Passive alignments: Connecting optical fiber to PICs is one of the most complicated packaging and assembly problems for optical devices. The best alignments are usually achieved via active alignment processes in which feedback from the PIC is used to align the fiber better. Passive alignment processes do not use such feedback. They cannot achieve the best possible alignment but are much more affordable.

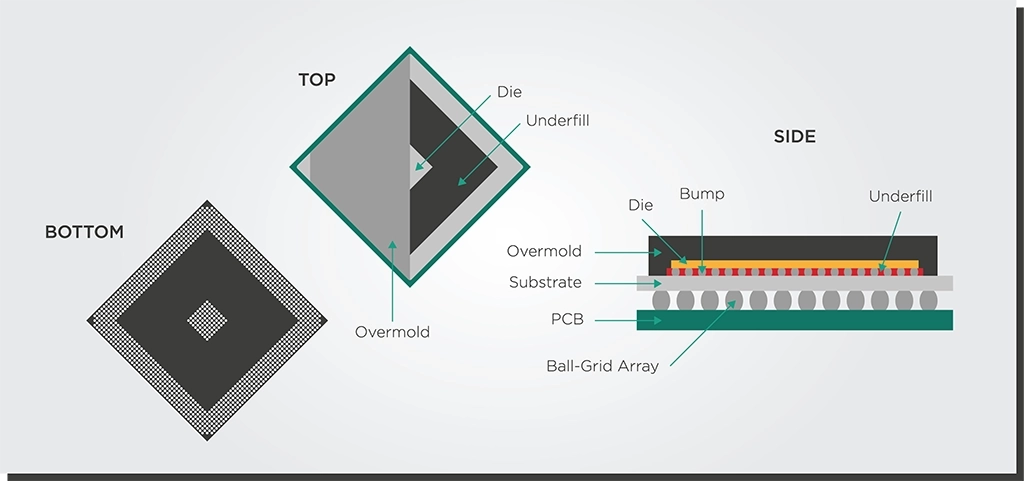

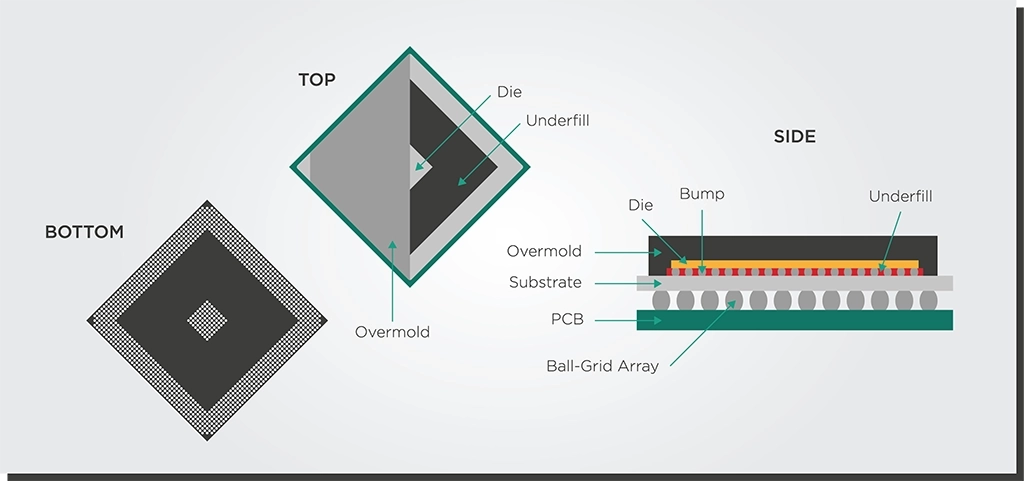

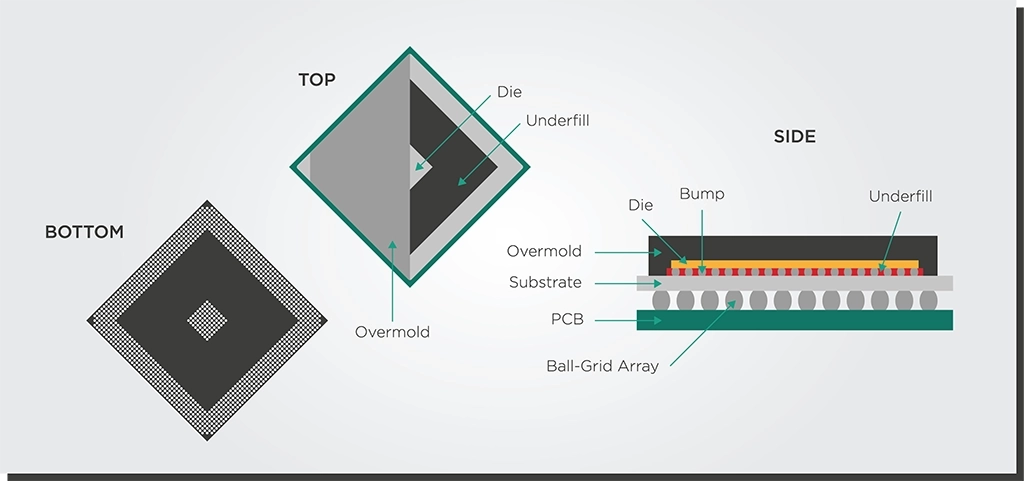

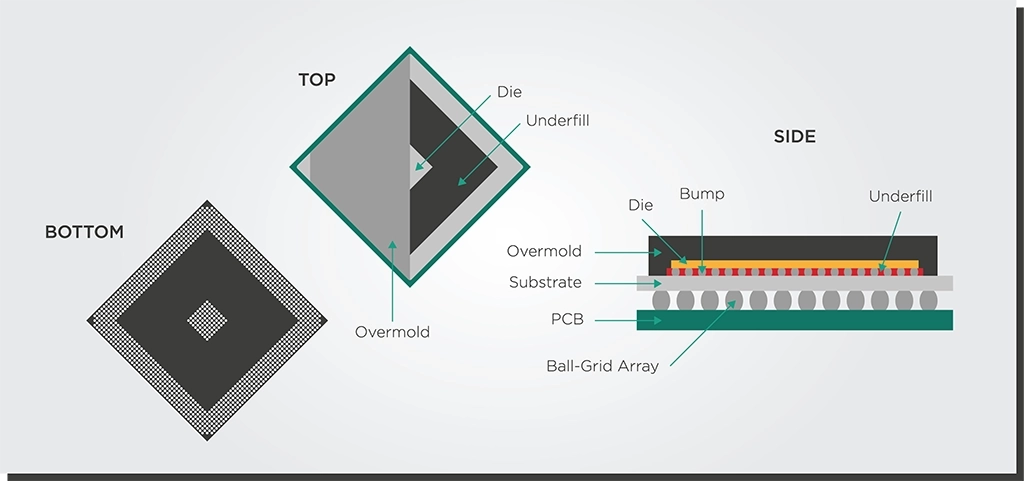

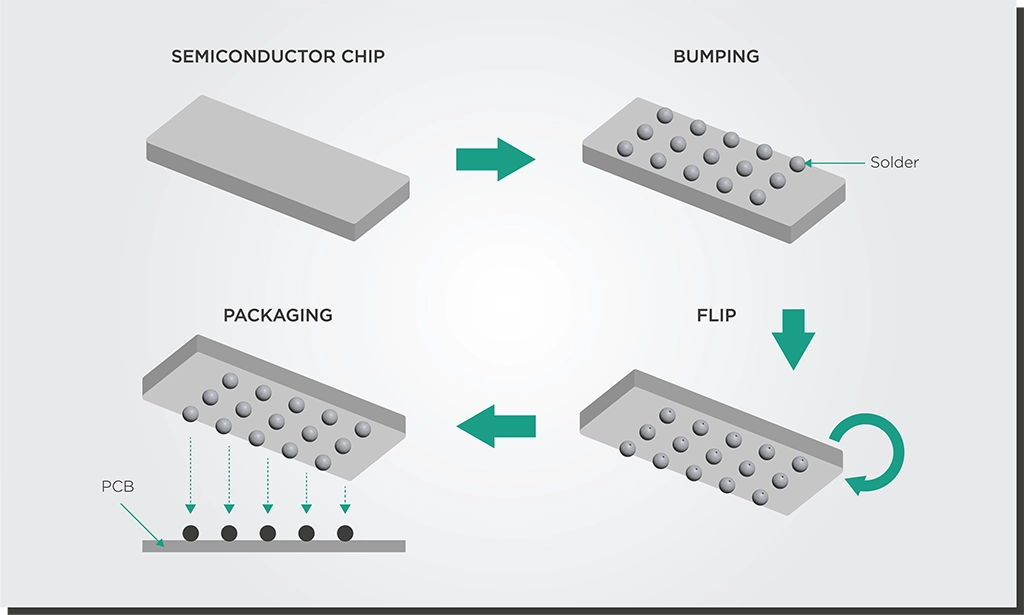

- BGA-style packaging: Ball-grid array packaging has grown popular among electronics manufacturers. It places the chip connections under the chip package, allowing more efficient use of space in circuit boards, a smaller package size, and better soldering.

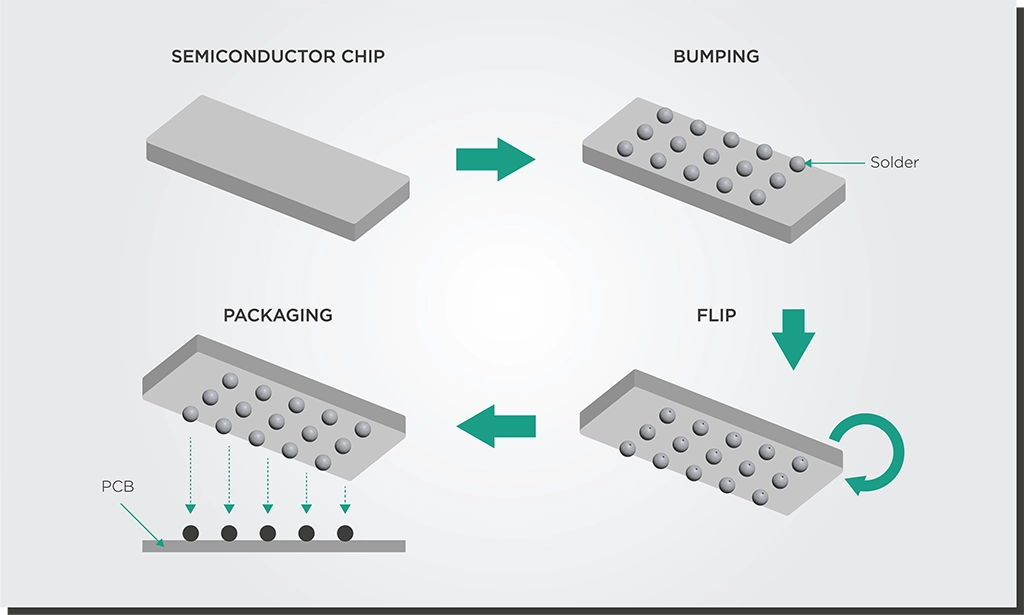

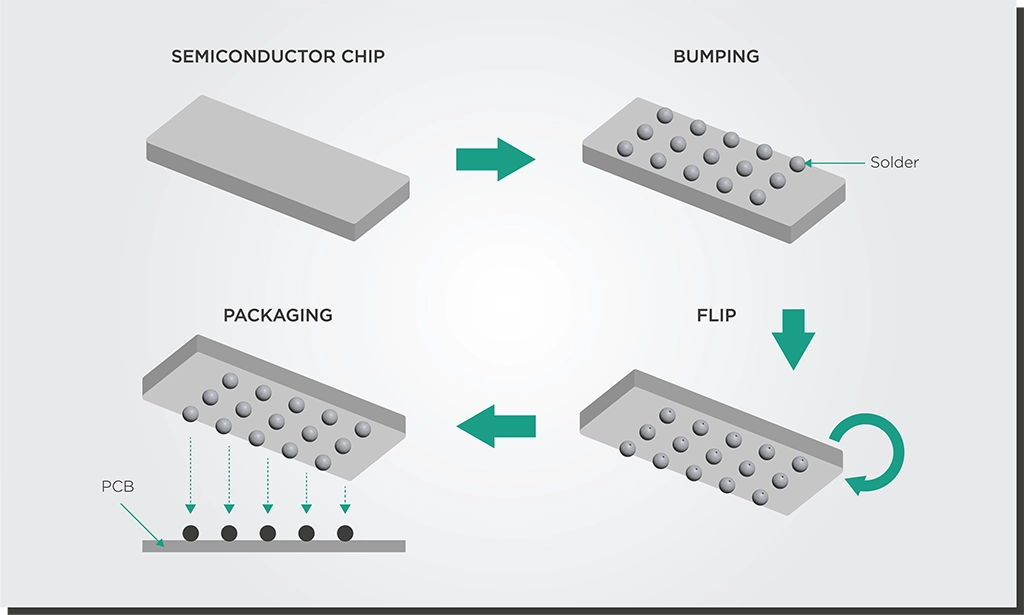

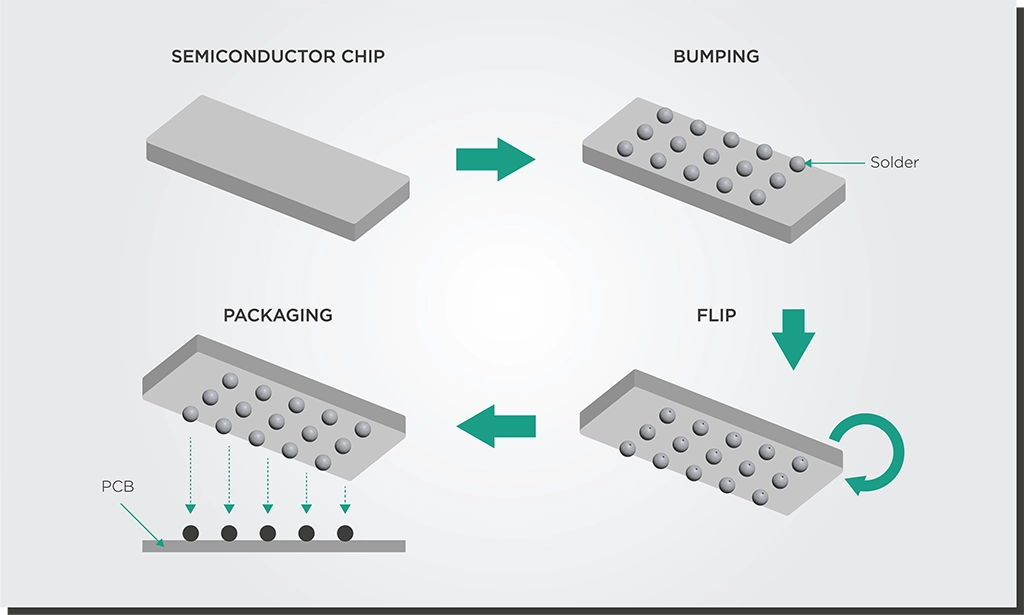

- Flip-chip bonding: A process where solder bumps are deposited on the chip in the final fabrication step. The chip is flipped over and aligned with a circuit board for easier soldering.

These might be novel technologies for photonics developers who have started implementing them in the last five or ten years. However, the electronics industry embraced these technologies 20 or 30 years ago. Making these techniques more widespread will make a massive difference in photonics’ ability to scale up and become as available as electronics.

Making Photonics Chips That Can Survive Soldering

Soldering remains another tricky step for photonics assembly and packaging. Photonics device developers usually custom-order a PIC, then wire- and die-bond it to the electronics. However, some elements in the PIC cannot handle soldering temperatures, making it difficult to solder into an electronics board. Developers often must glue the chip onto the board with a non-standard process that needs additional verification for reliability.

This goes back to the issue of process standardization. Current PICs often use different materials and processes from electronics, such as optical fiber connections and metals for chip interconnects, that cannot survive a standard soldering process.

Adopting BGA-style packaging and flip-chip bonding techniques will make it easier for PICs to survive this soldering process. There is ongoing research and development worldwide, including at EFFECT Photonics, to make fiber coupling and other PIC aspects compatible with these electronic packaging methods.

PICs that can handle being soldered to circuit boards will allow the industry to build optical subassemblies that can be made more readily available in the open market and can go into trains, cars, or airplanes.

Conclusion

Photonics must leverage existing electronics ecosystems and processes to scale up and have a greater global impact. Our Chief Technology Officer, Tim Koene-Ong, explains what this means:

“Photonics technology needs to integrate more electronic functionalities into the same package. It needs to build photonic integration and packaging support that plays by the rules of existing electronic manufacturing ecosystems. It needs to be built on a semiconductor manufacturing process that can produce millions of chips in a month.

“As soon as photonics can achieve these larger production volumes, it can reach price points and improvements in quality and yield closer to those of electronics. When we show the market that photonics can be as easy to use as electronics, that will trigger a revolution in its worldwide use.”

Tim Koene-Ong, Chief Technology Officer.

This vision is one of our guiding lights at EFFECT Photonics, where we aim to develop optical systems that can impact the world in many different applications.

Tags: automotive sector, BGA style packaging, compatible, computing power, cost per mm, efficient, electronic, electronic board, electronics, fabless, Photonics, risk, scale, soldering, transistor, wafer scale

The Internet of Things: Enhanced Connectivity Through Photonics

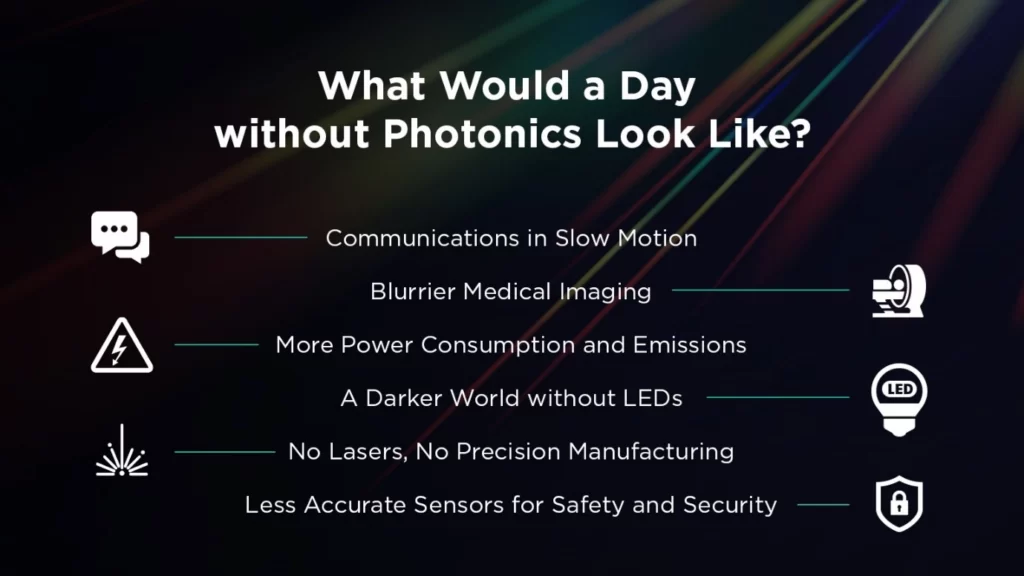

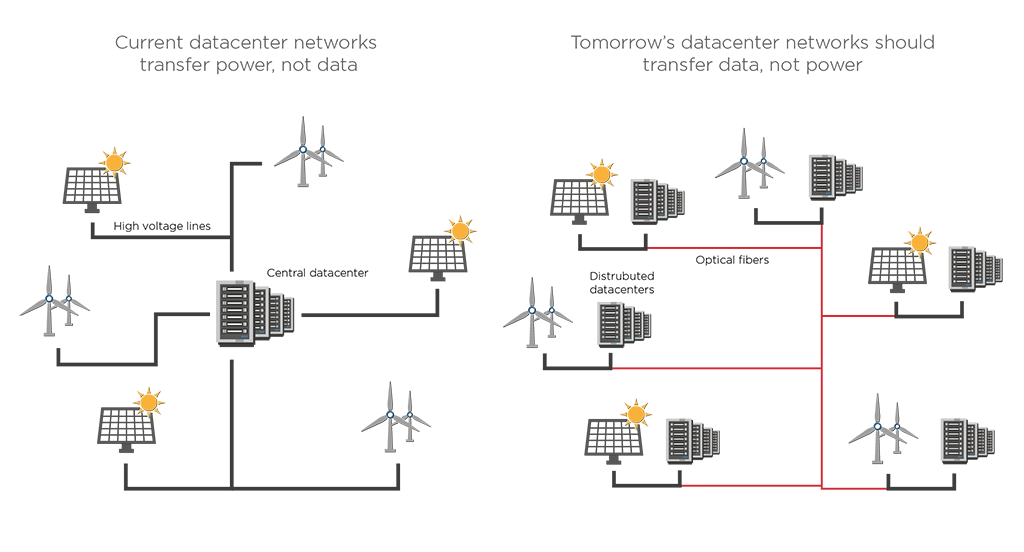

The Internet of Things (IoT) is transforming industries by enabling devices to communicate, collect, and exchange data seamlessly. This interconnected ecosystem relies on robust, high-speed communication technologies to function effectively. Photonics, which involves the use of light to transmit data, plays a critical role in enhancing the connectivity of IoT devices. This article explores the different ways photonics enables IoT, focusing on high-speed data transmission, energy-efficient sensing, and the development of smart, self-powered devices.

High-Speed Data Transmission

The advent of 5G technology, with its promise of ultra-fast speeds and low latency, has enabled many IoT applications, turning mundane devices into smart, interconnected components of broader digital ecosystems. However, this means more devices contribute to the already vast data streams flowing through global networks.

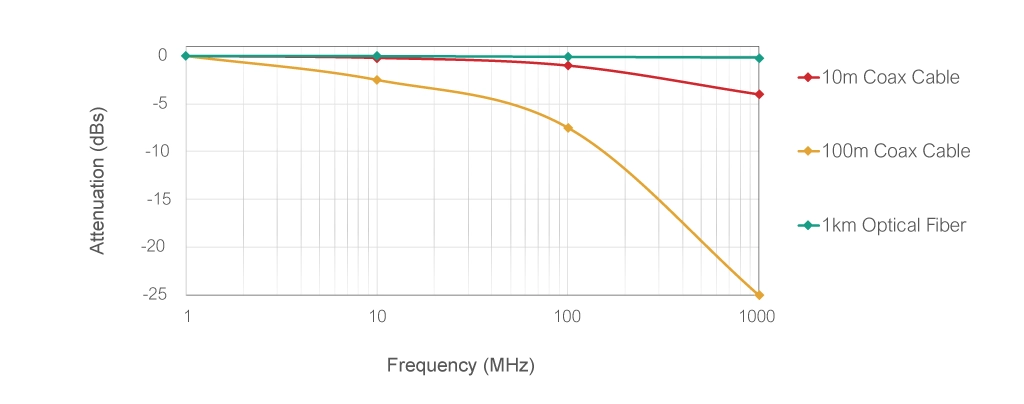

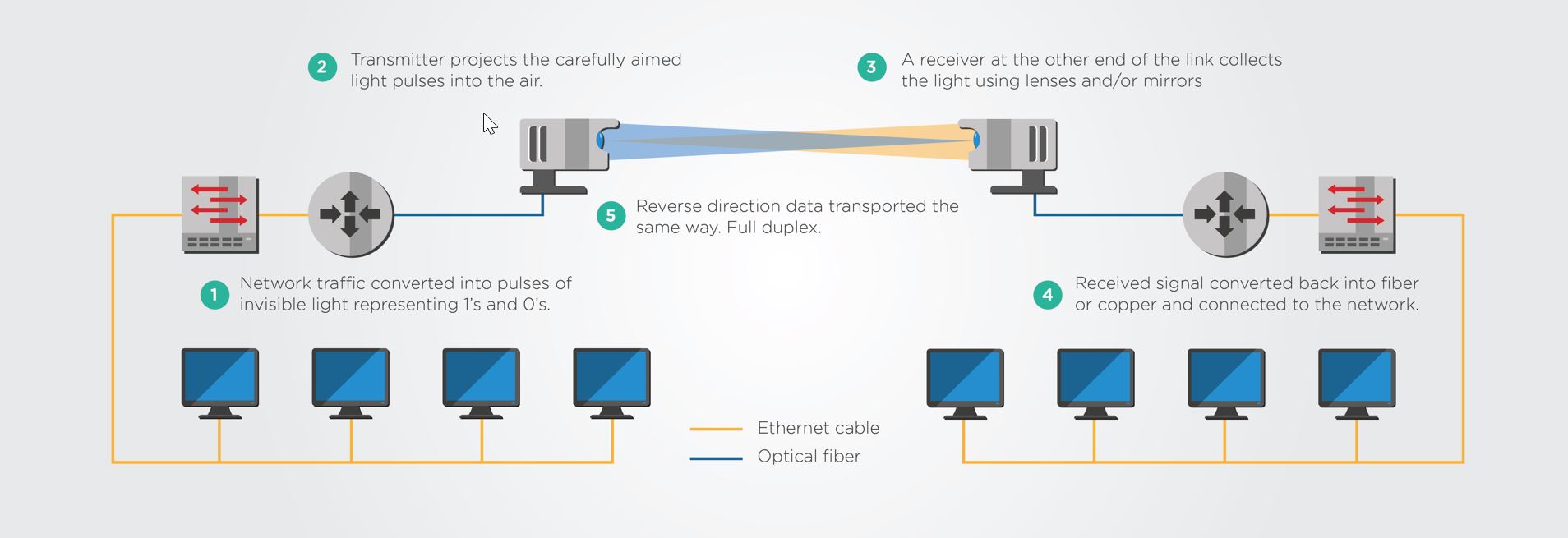

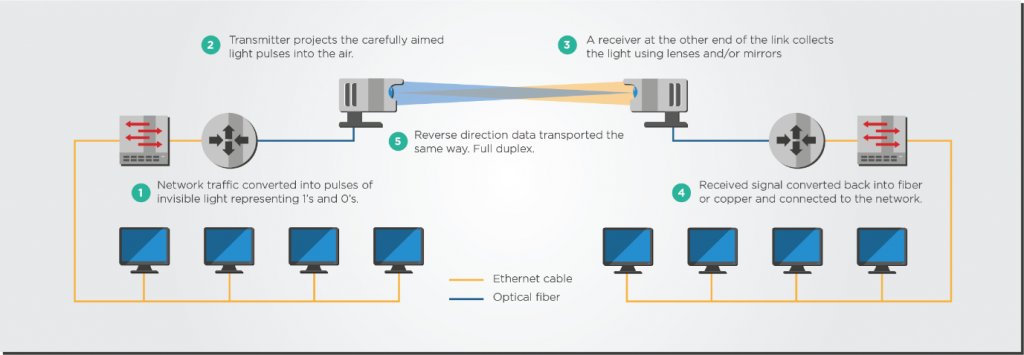

Photonics significantly enhances data transmission speeds in IoT networks, making it possible to handle the massive data volumes generated by connected devices. Optical fibers, which use light to transmit data, offer higher bandwidth and lower latency compared to traditional copper wires. This is essential for applications that require real-time data processing and rapid communication, such as autonomous vehicles, smart grids, and industrial automation.

Energy-Efficient Sensing

Photonics also plays a vital role in the development of energy-efficient sensing technologies for IoT. Photonic sensors offer high sensitivity and accuracy with much lower power consumption. This is particularly important for applications in remote or hard-to-reach locations where replacing batteries is impractical.

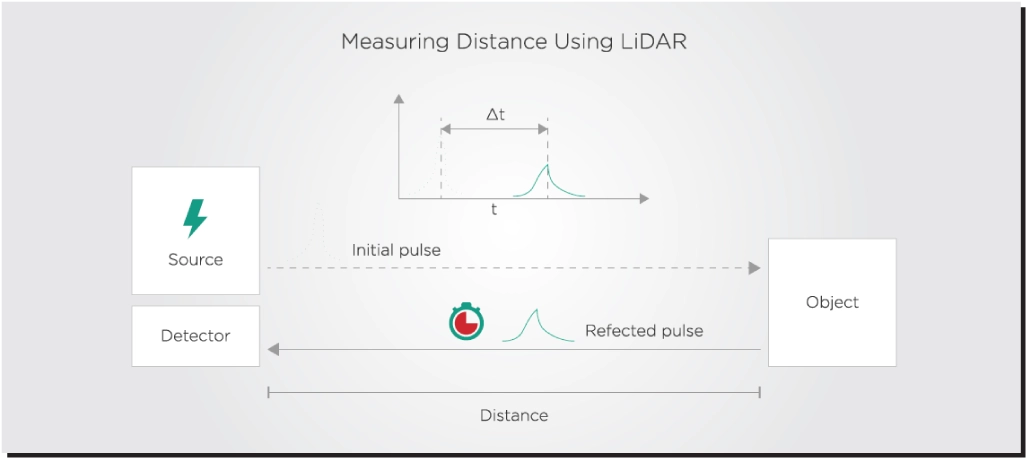

Photonic integrated circuits (PICs) combine multiple optical components on a single chip, enabling faster and more efficient data transmission. For instance, advances in PIC technology have enabled high-performance LiDAR systems, which use laser pulses to create detailed 3D maps of environments. These systems are crucial for applications like autonomous driving, where precise and real-time data is necessary.

Smart, Self-Powered Devices

One of the most exciting developments in photonics for IoT is the creation of self-powered devices. These devices use ambient light to generate the energy needed for their operation, eliminating the need for batteries. This not only reduces maintenance costs but also minimizes environmental impact by decreasing the number of disposable batteries used.

For example, Ambient Photonics is a company that specializes in developing and manufacturing low-light energy harvesting solar cells. The company’s solar cells are thin, efficient, and capable of capturing energy from a wide range of light conditions, including dim indoor settings where traditional solar cells are less effective. Their technology is designed to generate power from low-light environments, such as indoor lighting, which can be used to power various electronic devices without the need for batteries or frequent recharging. This makes them suitable for powering IoT devices, remote sensors, and other small electronics that are often used indoors.

Conclusion

Photonics is enabling the connectivity and functionality of IoT networks. By enabling high-speed data transmission, energy-efficient sensing, and the development of smart, self-powered devices, photonics addresses many of the challenges faced by traditional electronic technologies.

Tags: ambient light, Autonomous Vehicles, connectivity, EFFECT Photonics, energy harvesting, energy-efficient sensing, high-speed data transmission, Internet of Things, IoT, LiDAR systems, OPDs, Optical fibers, OPVs, organic optoelectronics, organic photodetectors, organic photovoltaic cells, photonic integrated circuits, Photonics, PICs, self-powered devices, smart devices, sustainable technology

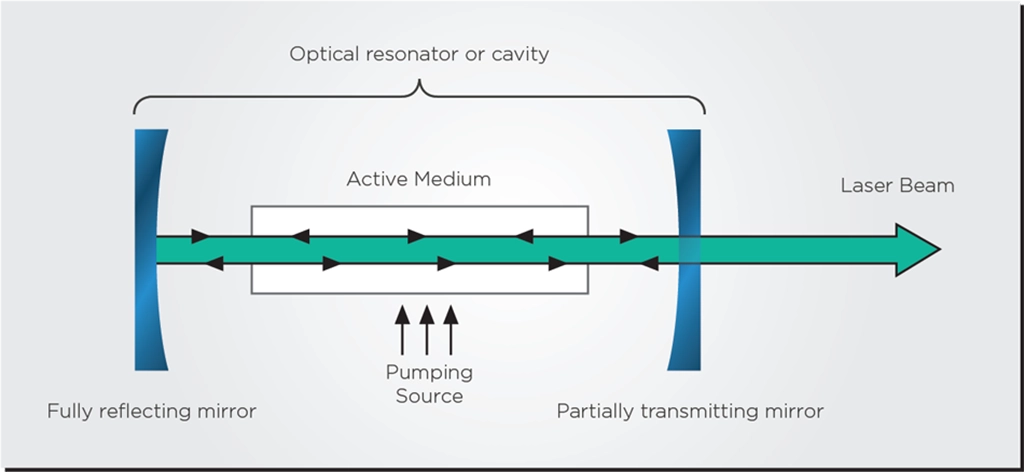

The Lasers Powering AI

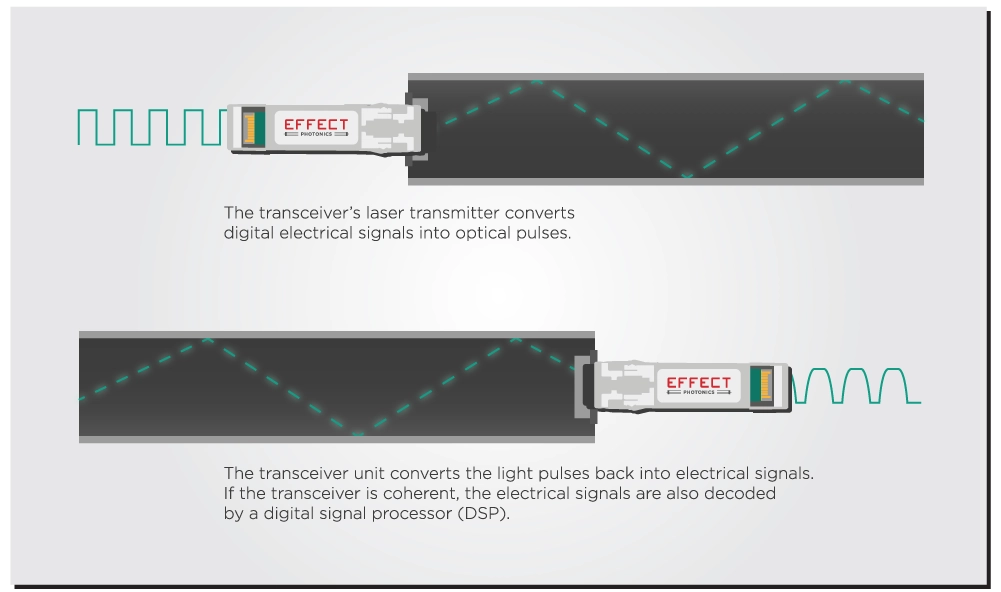

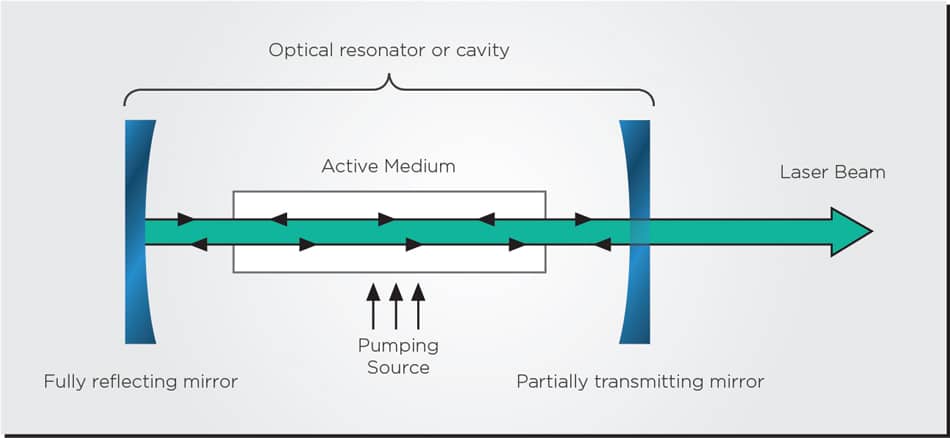

Artificial Intelligence (AI) networks rely on vast amounts of data processed and transferred at incredible speeds to function effectively. This data-intensive nature requires robust infrastructure, with lasers playing a pivotal role. AI networks depend on two primary processes: AI training and AI inference. Training involves feeding large datasets into models to learn and make predictions, while inference uses these trained models to make real-time decisions.

Lasers are crucial in enhancing the efficiency and speed of these processes by enabling high-speed data transfer within data centers and across networks. This article explores the various ways lasers power AI networks, the specific requirements for data center connections, and their broader impact on AI infrastructure.

Requirements for Data Center Connections

The connectivity requirements for data centers supporting AI workloads are stringent. They must handle enormous volumes of data with minimal latency and high reliability. The primary requirements for lasers in these environments include:

High Bandwidth: AI applications, especially those involving large language models and real-time data processing, require interconnects that can support high data rates.

Low Latency: Minimizing latency is crucial for AI inference tasks that require real-time decision-making. Lasers enable faster data transfer compared to traditional electronic interconnects, significantly reducing the time it takes for data to travel between nodes.

Energy Efficiency: AI data centers consume vast amounts of power. Integrated photonics combines optical components on a single chip, reducing power consumption while maintaining high performance.

Scalability: As AI workloads grow, the infrastructure must scale accordingly. Lasers provide the scalability needed to expand data center capabilities without compromising performance.

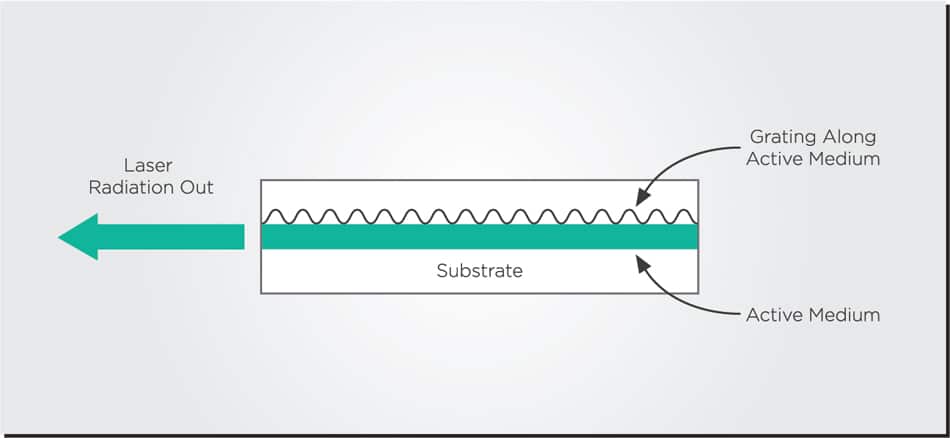

Laser Arrays in Data Center Interconnects

In 2022, Intel Labs demonstrated an eight-wavelength laser array fully integrated on a silicon wafer. Milestones like this are essential for optical transceivers because laser arrays allow for multi-channel transceivers that are more cost-effective when scaling up to higher speeds.

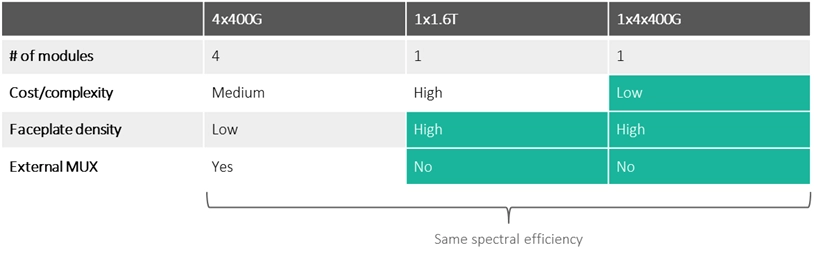

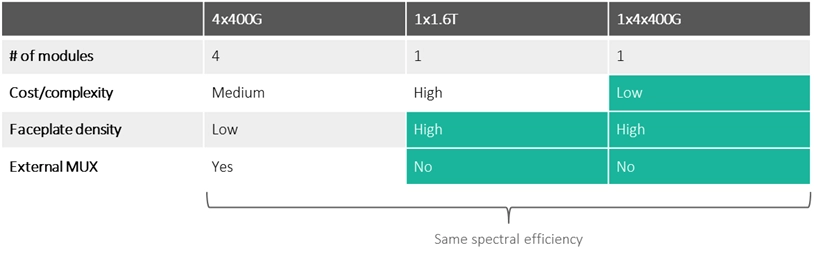

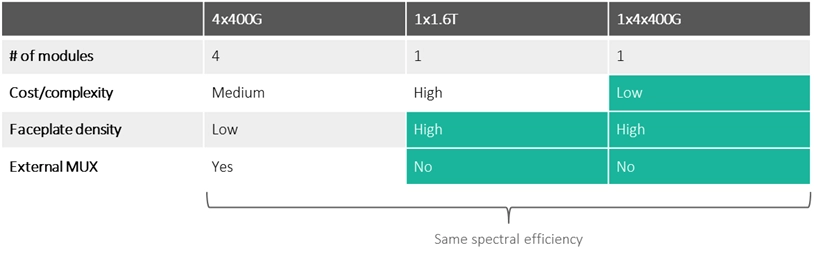

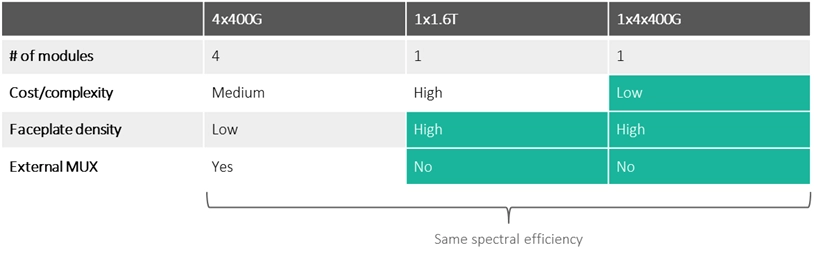

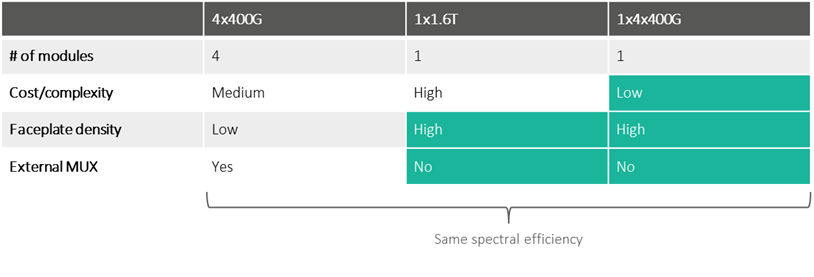

Let’s say we need an intra-DCI link with 1.6 Terabits/s of capacity. There are three ways we could implement it:

Four modules of 400G: This solution uses existing off-the-shelf modules but has the largest footprint. It requires four slots in the router faceplate and an external multiplexer to merge these into a single 1.6T channel.

One module of 1.6T: This solution will not require the external multiplexer and occupies just one plug slot on the router faceplate. However, making a single-channel 1.6T device has the highest complexity and cost.

One module with four internal channels of 400G: A module with an array of four lasers (and thus four different 400G channels) will only require one plug slot on the faceplate while avoiding the complexity and cost of the single-channel 1.6T approach.

Multi-laser array and multi-channel solutions will become increasingly necessary to increase link capacity in coherent systems. They will not need more slots in the router faceplate while simultaneously avoiding the higher cost and complexity of increasing the speed with just a single channel.
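
The trade-off between the three options can be laid out side by side with a small data structure. This is a sketch for illustration only: the `LinkOption` class and its fields are hypothetical, and it captures only the attributes discussed above (faceplate slots, per-channel speed, and the need for an external multiplexer), not cost or power.

```python
from dataclasses import dataclass


@dataclass
class LinkOption:
    name: str
    modules: int              # pluggable modules, one faceplate slot each
    channels_per_module: int  # internal optical channels (lasers) per module
    gbps_per_channel: int     # data rate each channel must reach
    needs_external_mux: bool  # whether an external multiplexer is required

    @property
    def capacity_gbps(self) -> int:
        """Total link capacity across all modules and channels."""
        return self.modules * self.channels_per_module * self.gbps_per_channel


options = [
    LinkOption("Four modules of 400G", 4, 1, 400, True),
    LinkOption("One single-channel 1.6T module", 1, 1, 1600, False),
    LinkOption("One module with four 400G channels", 1, 4, 400, False),
]

for opt in options:
    print(
        f"{opt.name}: {opt.capacity_gbps} Gb/s total, "
        f"{opt.modules} slot(s), {opt.gbps_per_channel}G per channel, "
        f"external mux: {opt.needs_external_mux}"
    )
```

All three reach the same total capacity. The laser-array option is the only one that combines a single faceplate slot with the lower 400G per-channel rate.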

Broader Impact on AI Infrastructure

Beyond data centers, lasers are transforming the broader AI infrastructure by enabling advanced applications and enhancing network efficiency. In the context of edge computing, where data is processed closer to the source, lasers facilitate rapid data transfer and low-latency processing. This is essential for applications like autonomous vehicles, smart cities, and real-time analytics, where immediate data processing is critical.

Lasers also play a significant role in the integration of AI with 5G and future 6G networks. The high-frequency demands of these networks require precise and high-speed optical interconnects, which lasers provide.

Conclusion

Lasers are at the core of modern AI networks, providing the high-speed, low-latency, and energy-efficient interconnects needed to support data-intensive AI workloads. From enhancing data center connectivity to enabling advanced edge computing and network integration, lasers play a pivotal role in powering AI. As AI continues to evolve and expand into new applications, the reliance on laser technology will only grow, driving further innovation and efficiency in AI infrastructure.

Tags: 5G Networks, AI inference, AI networks, AI training, Autonomous Vehicles, bandwidth, data centers, data transfer, Edge computing, EFFECT Photonics, energy efficiency, high-speed connectivity, Indium Phosphide lasers, lasers, Low latency, optical interconnects, photonic processors, Scalability, silicon photonics, Smart Cities, VCSELs

AI at the Network Edge

Artificial Intelligence (AI) can impact several different industries by enhancing efficiency, automation, and data processing capabilities. The network edge is another area where AI can deliver such improvements. Edge computing, combined with AI, enables data processing closer to the source of data generation, leading to reduced latency, improved real-time data analytics, and enhanced security. This article delves into the potential of AI at the network edge, exploring its applications, training and inference processes, and future impact.

The Potential of AI at the Network Edge

According to market research, the global market for edge computing technologies is projected to grow from $46.3 billion in 2022 to $124.7 billion by 2027.

AI at the network edge involves deploying AI models and algorithms closer to where data is generated, such as in IoT devices, sensors, and local servers. This proximity allows for real-time data processing and decision-making, which is critical for applications that require immediate responses. Industries such as manufacturing, healthcare, retail, and smart cities are prime beneficiaries of edge AI. For instance, in manufacturing, edge AI can monitor machinery in real-time to predict and prevent failures, enhancing operational efficiency and reducing downtime. In healthcare, edge AI enables real-time patient monitoring, providing immediate alerts to medical staff about critical changes in patient conditions.

The integration of AI at the edge also addresses the growing need for data privacy and security. By processing data locally, sensitive information does not need to be transmitted to centralized cloud servers, reducing the risk of data breaches and ensuring compliance with data protection regulations. Moreover, edge AI reduces the bandwidth required for data transfer, as only the necessary information is sent to the cloud, optimizing network resources and reducing costs.

Training and Inference at the Edge

Training AI models involves feeding large datasets into algorithms to enable them to learn patterns and make predictions. Traditionally, this process requires significant computational power and is often performed in centralized data centers. However, advancements in edge computing and model optimization techniques have made it possible to train AI models at the edge.

One of the key techniques for enabling AI training at the edge is model optimization. This includes methods such as pruning, quantization, and low-rank adaptation, which reduce the size and complexity of AI models with little loss in performance. Pruning involves removing less important neurons or layers from a neural network, while quantization reduces the precision of the model’s weights, making it more efficient in terms of memory and computational requirements. Low-rank adaptation keeps the pre-trained weights frozen and trains only a small set of additional low-rank parameters, which is particularly useful for fine-tuning pre-trained models on specific tasks.
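As a rough illustration of two of these techniques, the sketch below applies magnitude pruning and symmetric 8-bit quantization to a small list of weights. It is a simplification under stated assumptions: it prunes individual weights rather than whole neurons or layers, the function names and weight values are invented for the example, and real frameworks use more elaborate schemes.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * fraction)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric 8-bit quantization: return integer codes and the scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


# Made-up weights, for illustration only.
weights = [0.82, -0.03, 0.41, 0.002, -0.67, 0.09, -0.25, 0.01]

pruned = prune_by_magnitude(weights, 0.5)   # drop the smallest half
codes, scale = quantize_int8(pruned)        # store as 8-bit integers
restored = dequantize(codes, scale)         # approximate reconstruction

print(pruned)
print(codes)
```

After pruning, half of the weights are zero, and each remaining weight is stored as a small integer whose reconstruction error is at most half the quantization step.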

Inference, the process of making predictions using a trained AI model, is especially critical at the edge. It requires lower computational power compared to training and can be optimized for low-latency and energy-efficient operations. Edge devices equipped with AI inference capabilities can analyze data in real-time and provide immediate feedback. For example, in retail, edge AI can facilitate frictionless checkout experiences by instantly recognizing and processing items, while in smart cities, it can manage traffic and enhance public safety by analyzing real-time data from surveillance cameras and sensors.

The Role of Pluggables in the Network Edge

Optical transceivers are crucial in developing better AI systems by facilitating the rapid, reliable data transmission these systems need to do their jobs. High-speed, high-bandwidth connections are essential to interconnect data centers and supercomputers that host AI systems and allow them to analyze a massive volume of data.

In addition, optical transceivers are essential for facilitating the development of artificial intelligence-based edge computing, which entails relocating compute resources to the network’s periphery. This is essential for facilitating the quick processing of data from Internet-of-Things (IoT) devices like sensors and cameras, which helps minimize latency and increase reaction times.

Pluggables that fit this new AI era must be fast, smart, and adapt to multiple use cases and conditions. They will relay monitoring data back to the AI management layer in the central office. The AI management layer can then program transceiver interfaces from this telemetry data to change parameters and optimize the network.

Takeaways

By bringing AI closer to the source of data generation, edge computing enables real-time analytics, reduces latency, enhances data privacy, and optimizes network resources. Edge AI can foster innovation in areas such as autonomous vehicles, where real-time data processing is crucial for safe navigation and decision-making. In the healthcare sector, edge AI will enable more sophisticated patient monitoring systems, capable of diagnosing and responding to medical emergencies instantly. Moreover, edge AI will play a role in mobile networks, providing the necessary infrastructure to handle the massive amounts of data generated by connected devices.

Tags: AI edge, AI models, AI network, bandwidth optimization, data generation, data privacy, Edge computing, EFFECTPhotonics, future impact, Healthcare, inference, IoT devices, local servers, Manufacturing, model optimization, operational efficiency, real-time analytics, real-time data processing, reduced latency, security, sensors, Smart Cities, training, transformative power

How Photonics Enables AI Networks

Artificial Intelligence (AI) networks have revolutionized various industries by enabling tasks such as image recognition, natural language processing, and autonomous driving. Central to the functioning of AI networks are two processes: AI training and AI inference. AI training involves feeding large datasets into algorithms to learn patterns and make predictions, typically requiring significant computational resources. AI inference, on the other hand, is the process of using trained models to make predictions on new data, which requires efficient and fast computation. As the demand for AI capabilities grows, the need for robust, high-speed, and energy-efficient interconnects within data centers and between network nodes becomes critical. This is where photonics comes into play, offering significant advantages over traditional electronic methods.

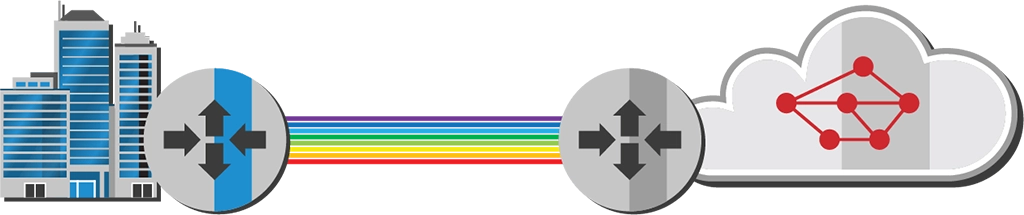

Enhancing Data Center Interconnects

Data centers are the backbone of AI networks, housing the vast computational resources needed for both training and inference tasks. As AI models become more complex, the data traffic within and between data centers increases exponentially. Traditional electronic interconnects face limitations in terms of bandwidth and power efficiency. Photonics, using light to transmit data, offers a solution to these challenges.

Photonics enables the integration of optical components like lasers, modulators, and detectors on a single chip. This technology allows for high-speed data transfer with significantly lower power consumption compared to electronic interconnects. These advancements are crucial for handling the data-intensive nature of AI workloads.

Enabling High-Speed AI Training and Inference

AI training requires the processing of vast amounts of data, often necessitating the use of distributed computing resources across multiple data centers. Photonic interconnects facilitate this by providing ultra-high bandwidth connections, which are essential for the rapid movement of data between computational nodes. The high-speed data transfer capabilities of photonics reduce latency and improve the overall efficiency of AI training processes.

This high transfer speed and capacity also play a critical role in AI inference, particularly in scenarios where real-time processing and high throughput are essential. For example, in a network featuring autonomous vehicles, AI inference must process data from sensors and cameras in real-time to make immediate decisions. For other ways in which photonics plays a role in autonomous vehicles, please read our article on LIDAR and photonics.

Into Network Edge Applications

The network edge refers to the point where data is generated and collected, such as IoT devices, sensors, and local servers. Deploying AI capabilities at the network edge allows for real-time data processing and decision-making, reducing the need to send data back to centralized data centers. This approach not only reduces latency but also enhances data privacy and security by keeping sensitive information local.

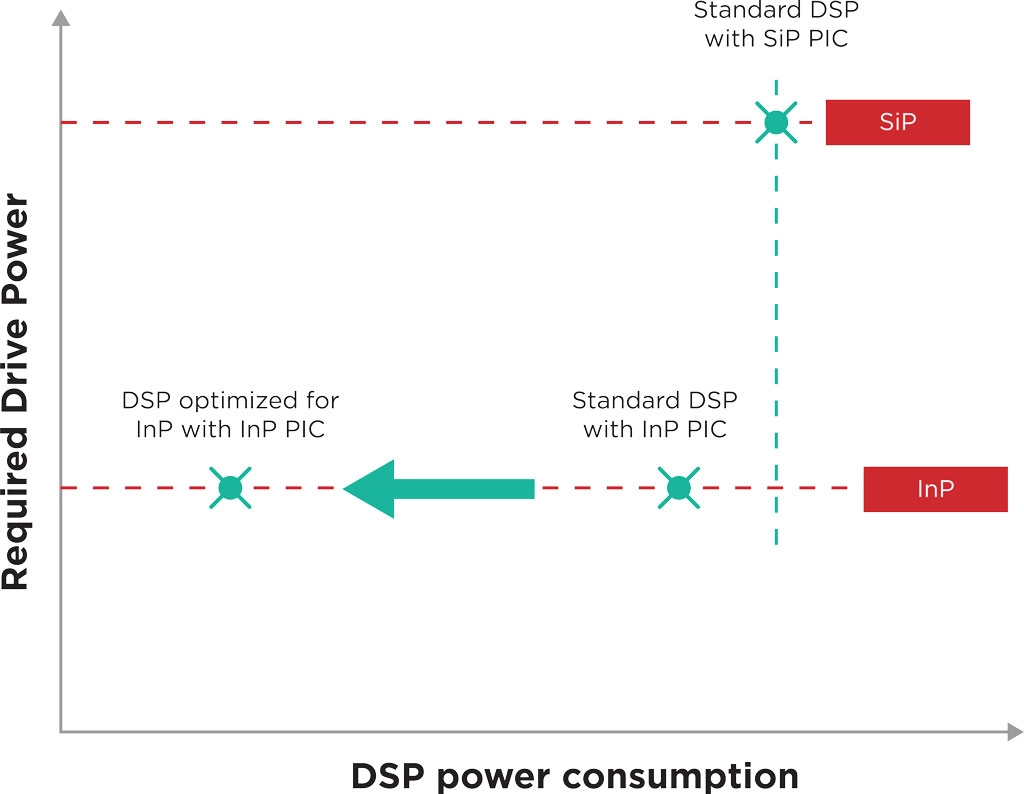

Photonics enables edge AI by providing the necessary high-speed, low-power interconnects required for efficient data processing at the edge. For some use cases, the network edge could benefit from upgrading its existing direct detect or grey links to 100G DWDM coherent. However, the industry needs more affordable and power-efficient transceivers and DSPs specifically designed for coherent 100G transmission in edge and access networks. By realizing DSPs co-designed with the optics, adjusted for reduced power consumption, and industrially hardened, the network edge will have coherent DSP and transceiver products adapted to its needs. This is a path EFFECT Photonics believes strongly in, and we talk more about it in one of our previous articles.

Conclusion

Photonics is transforming the landscape of AI networks by providing high-speed, energy-efficient interconnects that enhance data center performance, enable faster AI training and inference, and support real-time processing at the network edge. As AI continues to evolve and expand into new applications, the role of photonics will become increasingly critical in addressing the challenges of bandwidth, latency, and power consumption. By leveraging the unique properties of light, photonics offers a path to more efficient and scalable AI networks, driving innovation and enabling new possibilities across various industries.

Tags: AI inference, AI networks, AI training, autonomous driving, bandwidth, computational resources, Data center, detectors, edge AI, EFFECT Photonics, energy efficient, high-speed interconnects, lasers, latency, modulators, optical components, photonic processors, Photonics, power consumption, real-time processing, silicon photonics

What Do AI Networks Need From Optical Pluggables?

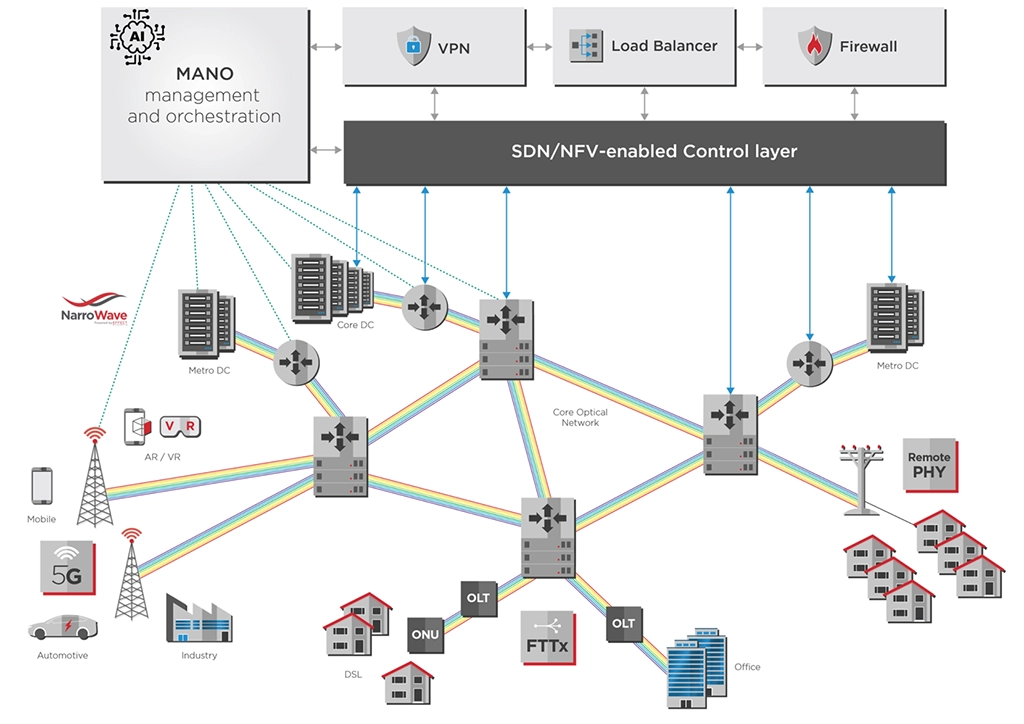

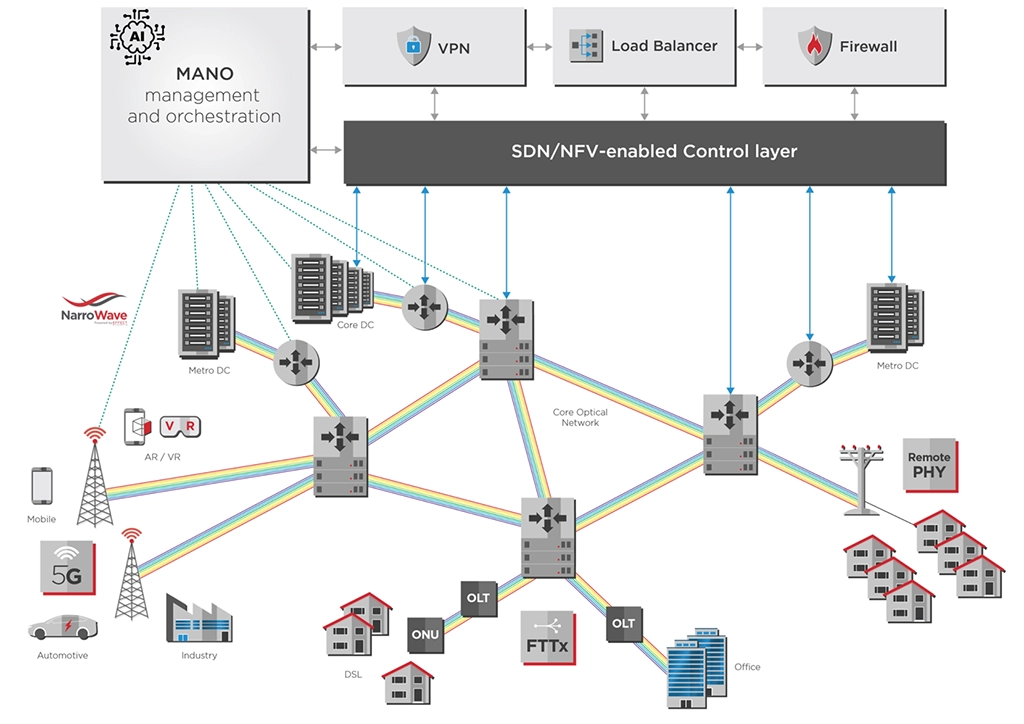

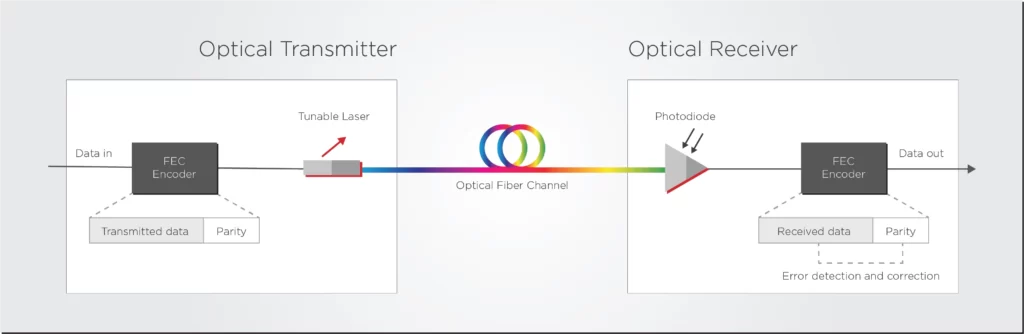

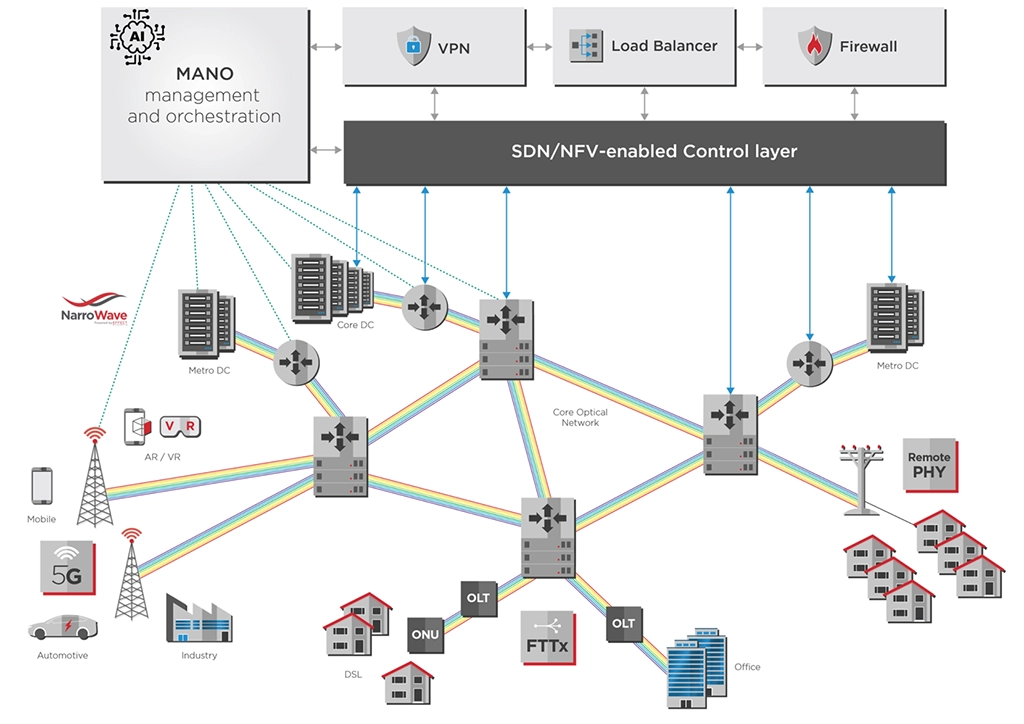

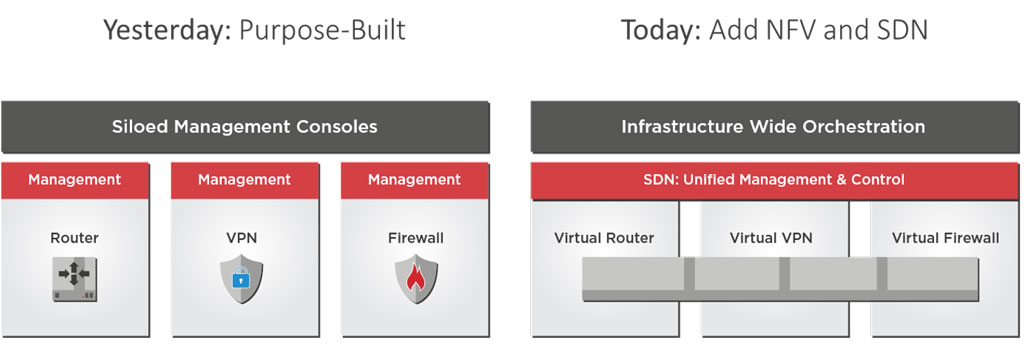

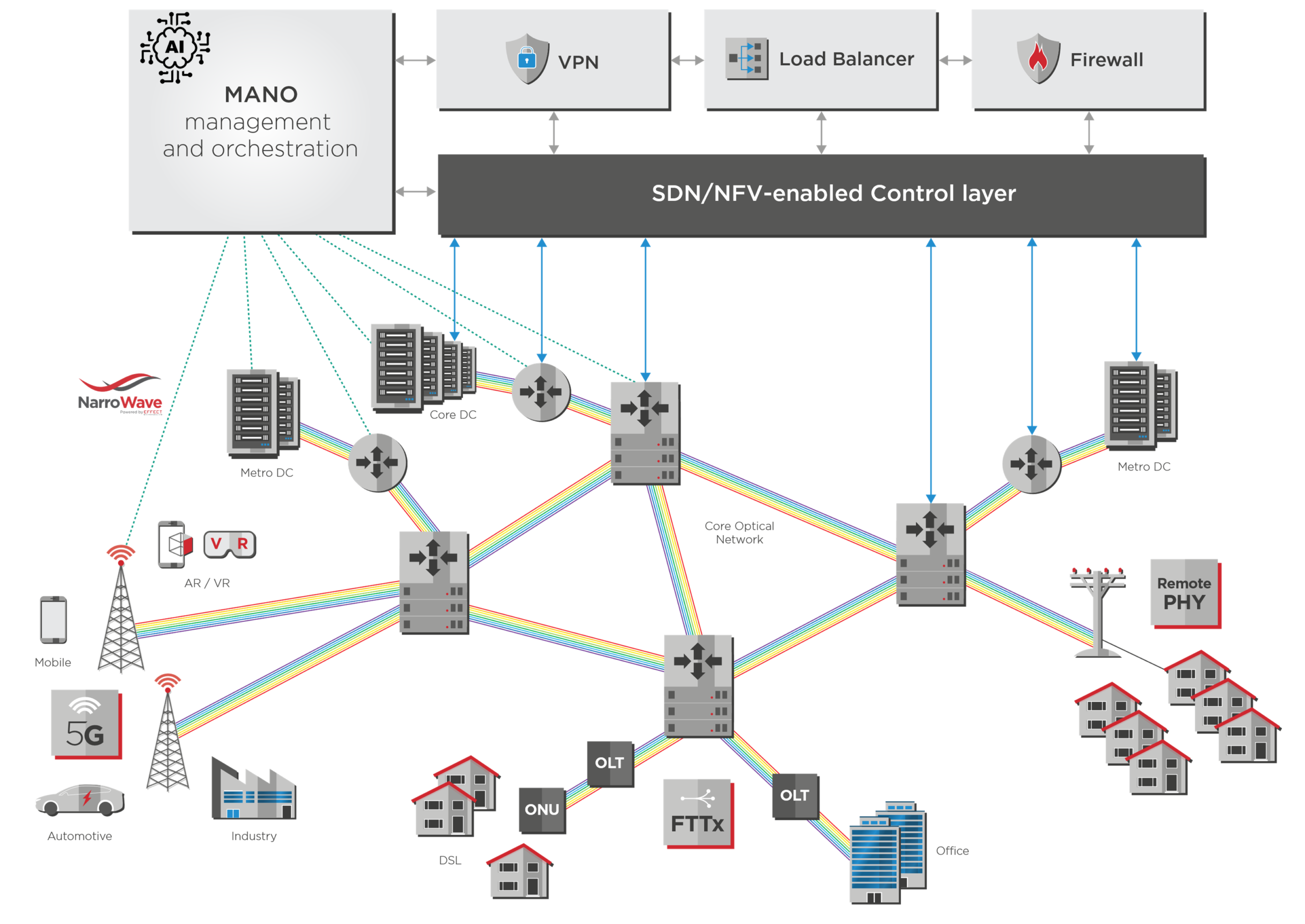

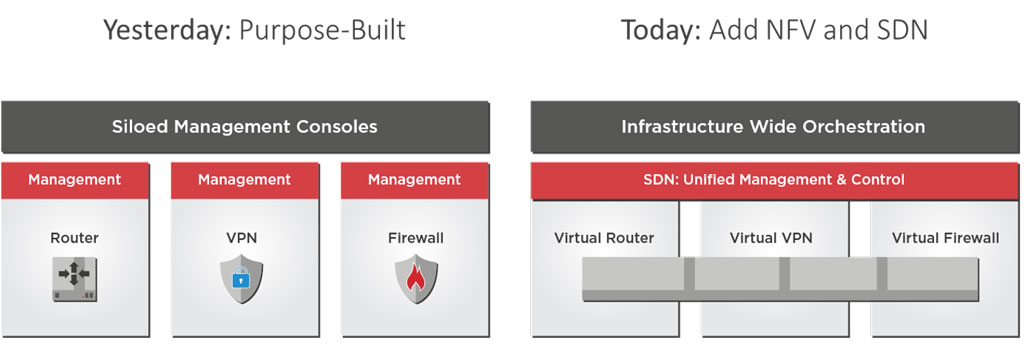

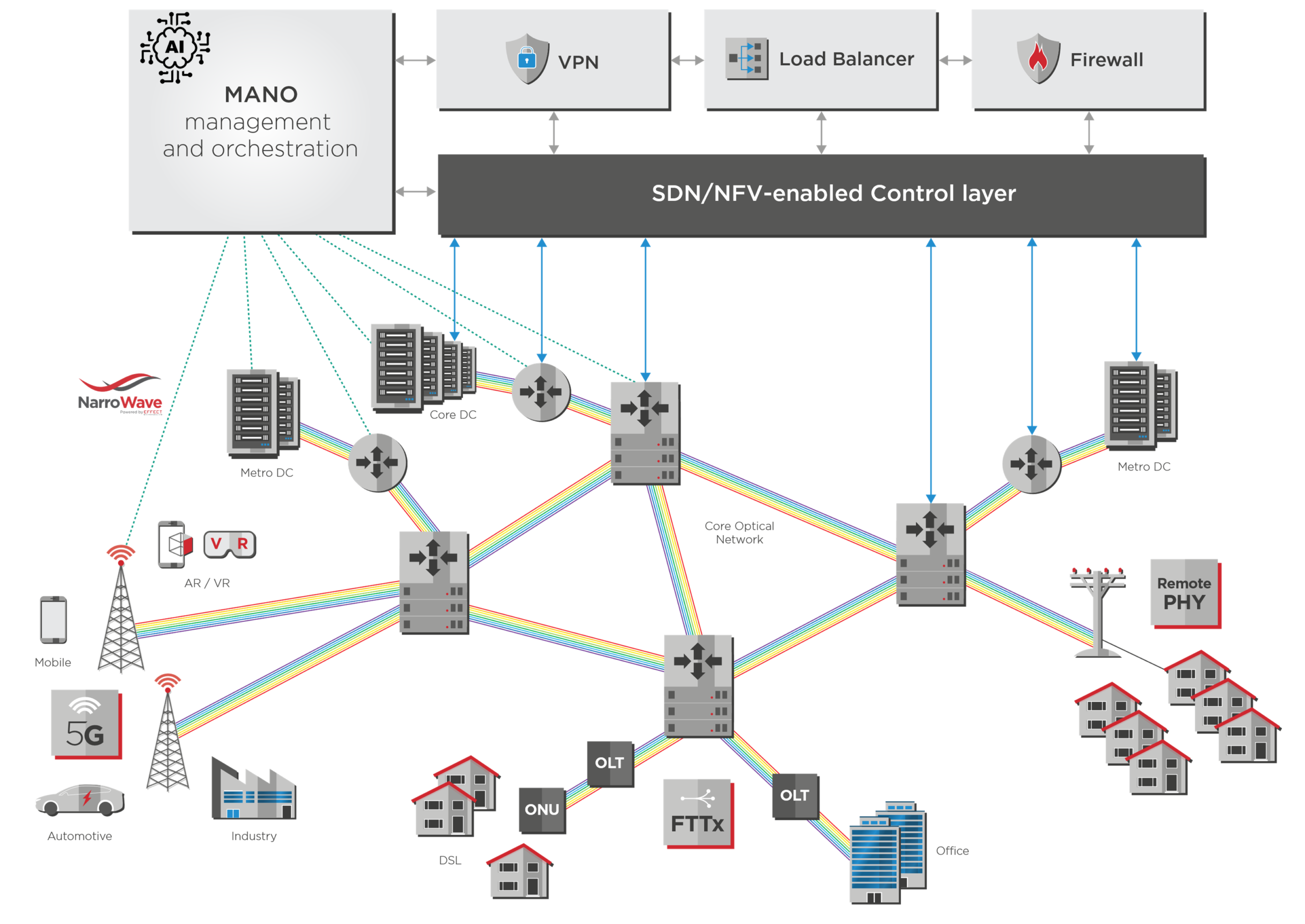

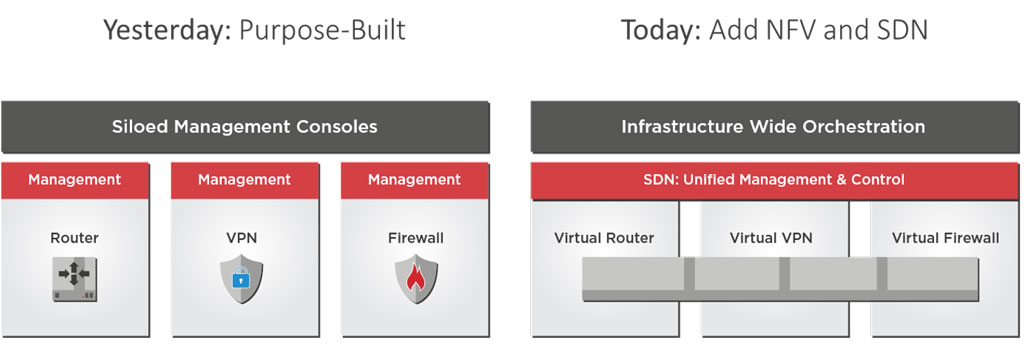

Artificial intelligence (AI) will have a significant role in making optical networks more scalable, affordable, and sustainable. It can gather information from devices across the optical network to identify patterns and make decisions independently without human input. By synergizing with other technologies, such as network function virtualization (NFV), AI can become a centralized management and orchestration network layer. Such a setup can fully automate network provisioning, diagnostics, and management, as shown in the diagram below.

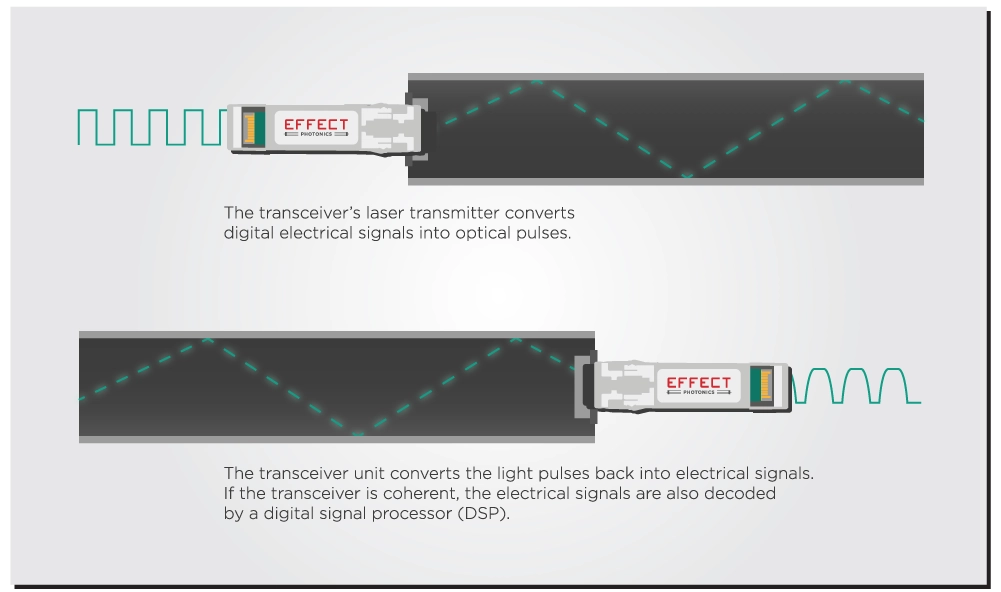

However, artificial intelligence and machine learning algorithms are data-hungry. To work optimally, they need information from all network layers and ever-faster data centers to process it quickly. Pluggable optical transceivers thus need to become smarter, relaying more information back to the AI central unit, and faster, enabling increased AI processing.

The Need for Faster Transceivers

Optical transceivers are crucial in developing better AI systems by facilitating the rapid, reliable data transmission these systems need to do their jobs. High-speed, high-bandwidth connections are essential to interconnect data centers and supercomputers that host AI systems and allow them to analyze a massive volume of data.

In addition, optical transceivers are essential for facilitating the development of artificial intelligence-based edge computing, which entails relocating compute resources to the network’s periphery. This is essential for facilitating the quick processing of data from Internet-of-Things (IoT) devices like sensors and cameras, which helps minimize latency and increase reaction times.

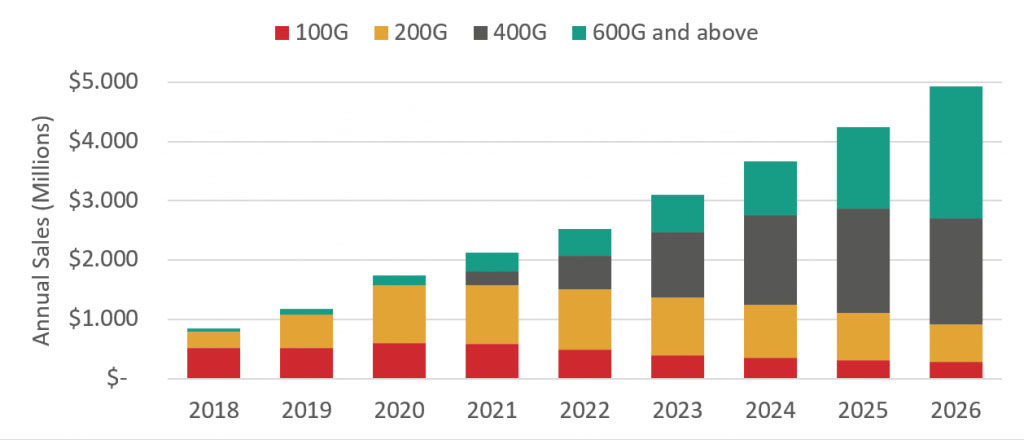

400 Gbps links are becoming the standard across data center interconnects, but providers are already considering the next steps. LightCounting forecasts significant growth in the shipments of dense-wavelength division multiplexing (DWDM) ports with data rates of 600G, 800G, and beyond in the next five years. We discuss these solutions in greater detail in our article about the roadmap to 800G and beyond.

The Need for Telemetry Data

Mobile networks now and in the future will consist of a massive number of devices, software applications, and technologies. Self-managed, zero-touch automated networks will be required to handle all these new devices and use cases. Realizing this full network automation requires two vital components.

- Artificial intelligence and machine learning algorithms for comprehensive network automation: For instance, AI in network management can drastically cut the energy usage of future telecom networks.

- Sensor and control data flow across all network model layers, including the physical layer: As networks grow in size and complexity, the management and orchestration (MANO) software needs more degrees of freedom and dials to turn.

These goals require smart optical equipment and components that provide comprehensive telemetry data about their status and the fiber they are connected to. The AI-controlled centralized management and orchestration layer can then use this data for remote management and diagnostics. We discuss this topic further in our previous article on remote provisioning, diagnostics, and management.

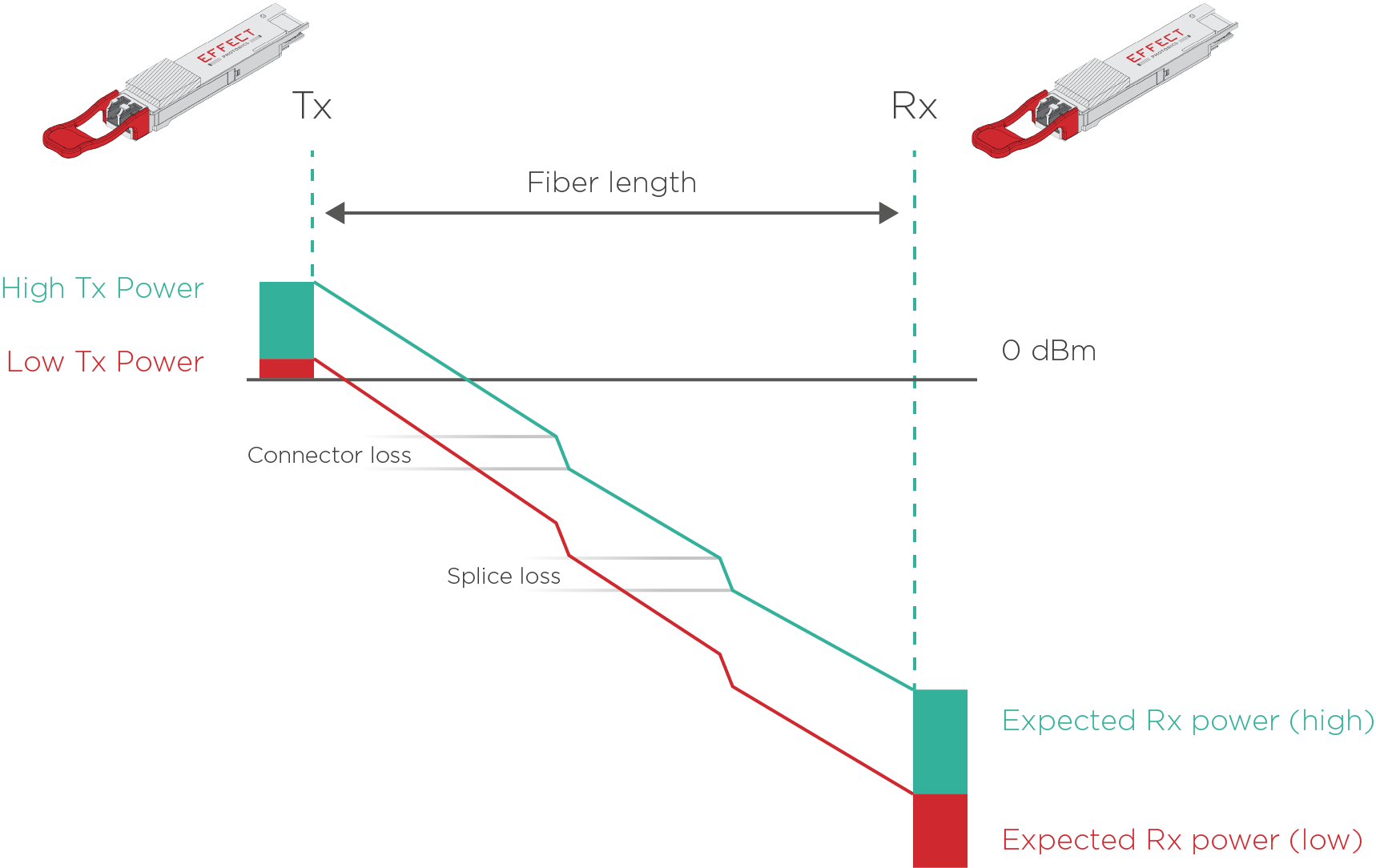

For example, a smart optical transceiver that fits this centralized AI-management model should relay data to the AI controller about fiber conditions. Such monitoring is not just limited to finding major faults or cuts in the fiber but also smaller degradations or delays in the fiber that stem from age, increased stress in the link due to increased traffic, and nonlinear optical effects. A transceiver that could relay all this data allows the AI controller to make better decisions about how to route traffic through the network.

A Smart Transceiver to Rule All Network Links

After relaying data to the AI management system, a smart pluggable transceiver must also switch parameters to adapt to different use cases and instructions given by the controller.

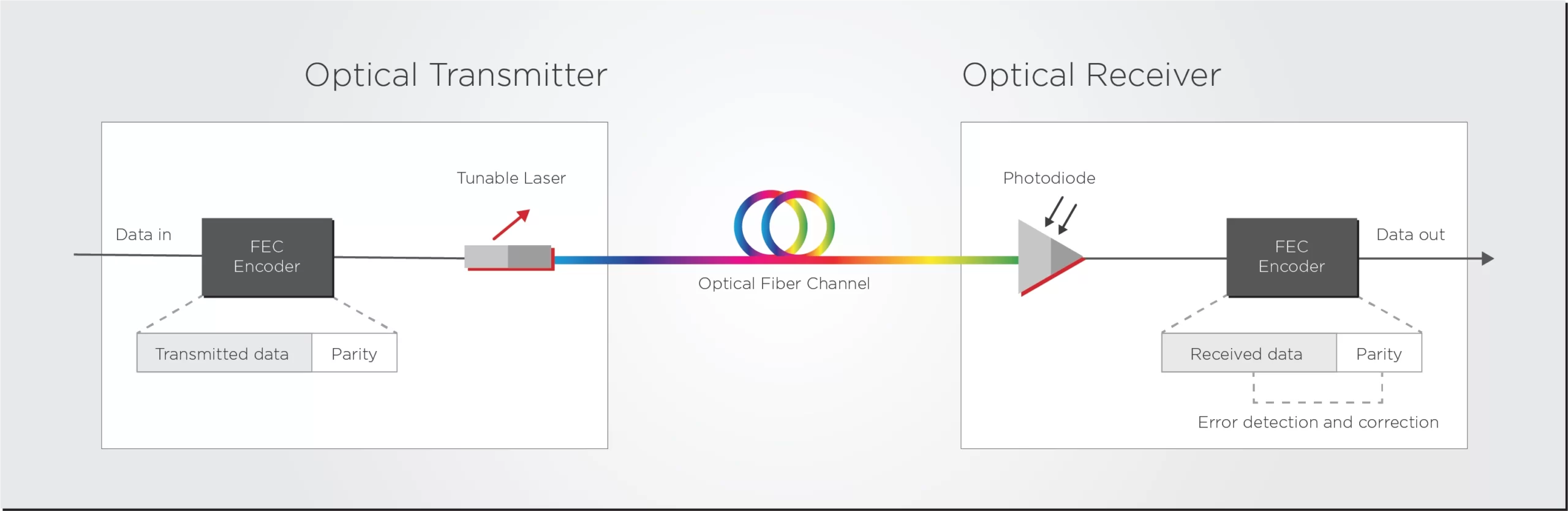

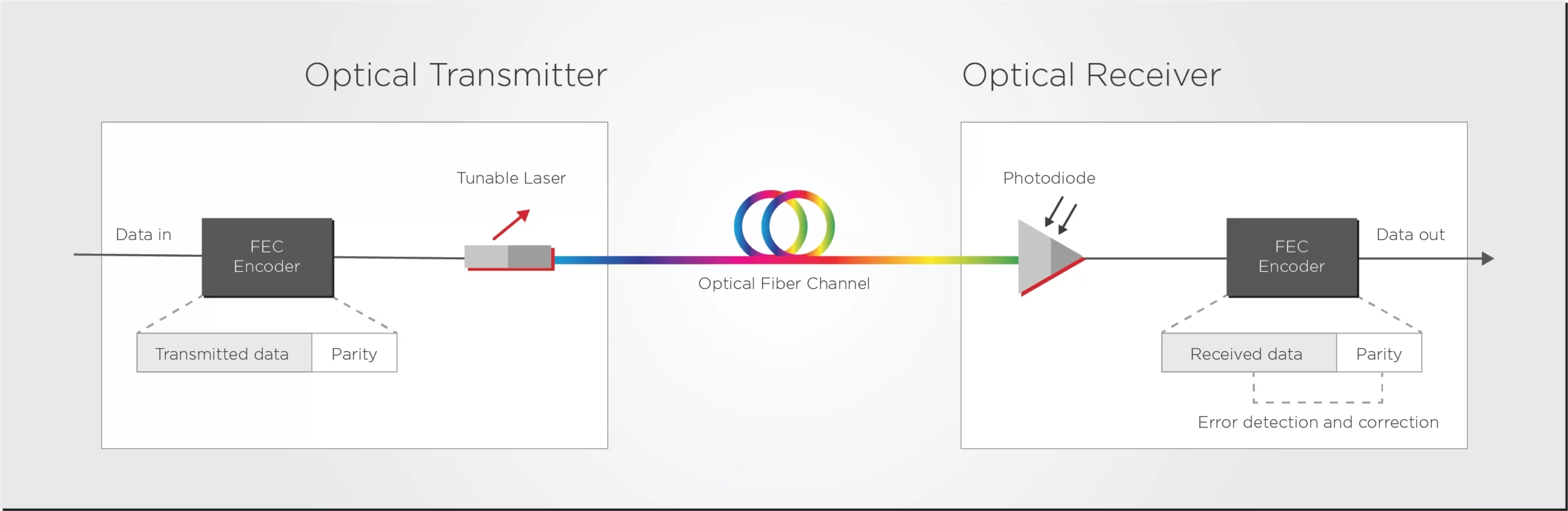

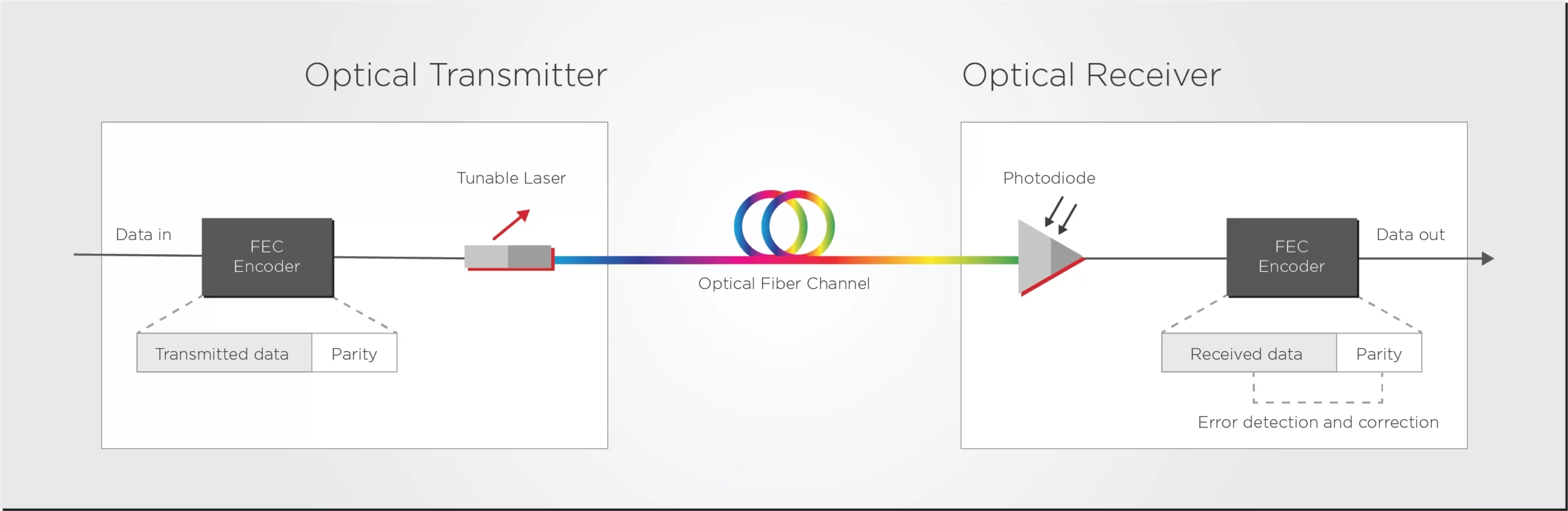

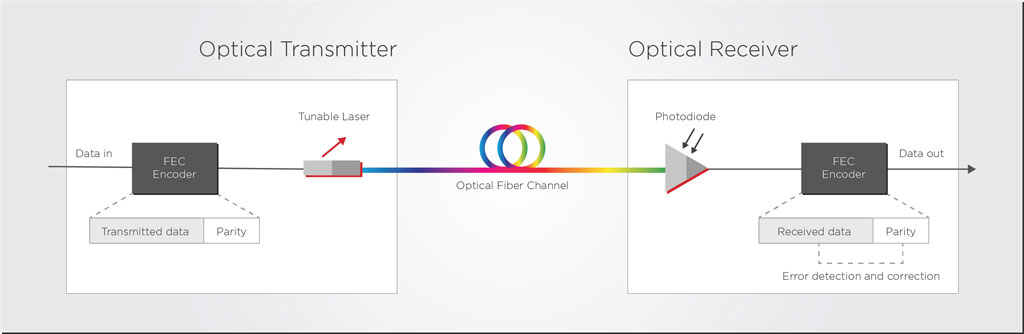

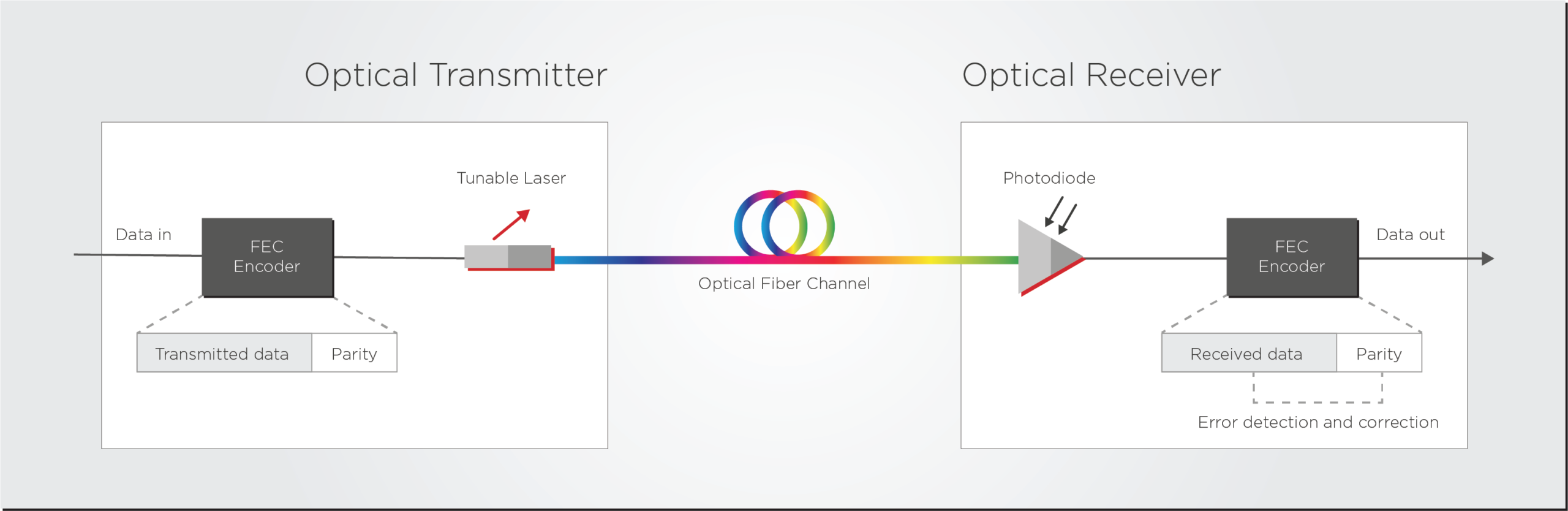

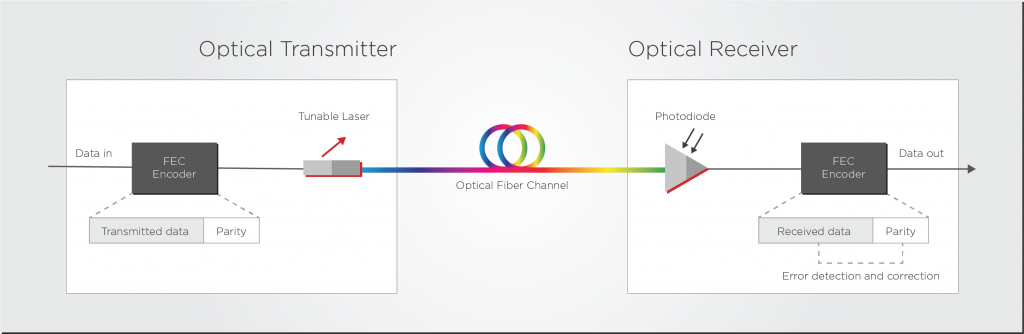

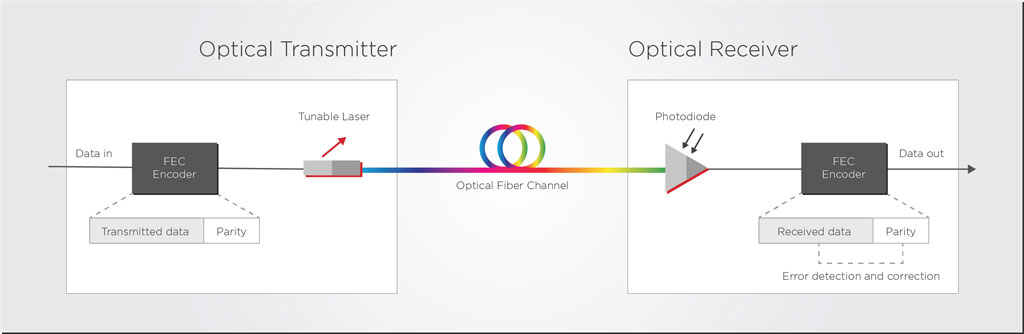

Let’s look at an example of forward error correction (FEC). FEC makes the coherent link much more tolerant to noise than a direct detect system and enables much longer reach and higher capacity. In other words, FEC algorithms allow the DSP to enhance the link performance without changing the hardware. This enhancement is analogous to imaging cameras: image processing algorithms allow the lenses inside your phone camera to produce a higher-quality image.

A smart transceiver and DSP could switch among different FEC algorithms to adapt to network performance and use cases. Consider the case of upgrading a long metro link of 650 km running at 100 Gbps with open FEC. The operator needs to increase that link capacity to 400 Gbps, but open FEC could struggle to provide the necessary link performance. However, if the transceiver can be remotely reconfigured to use a proprietary FEC standard, it will be able to handle the upgraded link.

Reconfigurable transceivers can also be beneficial to auto-configure links to deal with specific network conditions, especially in brownfield links. Let’s return to the fiber monitoring subject we discussed in the previous section. A transceiver can change its modulation scheme or lower the power of its semiconductor optical amplifier (SOA) if telemetry data indicates a good quality fiber. Conversely, if the fiber quality is poor, the transceiver can transmit with a more limited modulation scheme or higher power to reduce bit errors. If the smart pluggable detects that the fiber length is relatively short, the laser transmitter power or the DSP power consumption could be scaled down to save energy.
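The decision logic described above can be sketched as a simple rule set. Everything concrete in this example is an assumption made for illustration: the telemetry fields, the OSNR and length thresholds, and the modulation names are hypothetical and do not describe any real transceiver interface or EFFECT Photonics product.

```python
from dataclasses import dataclass


@dataclass
class FiberTelemetry:
    """Hypothetical telemetry reported by a smart pluggable."""
    osnr_db: float     # assumed link-quality metric
    length_km: float   # estimated fiber length


@dataclass
class TransceiverConfig:
    """Hypothetical settings an AI controller could push to the module."""
    modulation: str
    soa_power: str         # "low" or "high"
    low_power_mode: bool   # scale down laser/DSP power on short links


# Illustrative thresholds only; real values depend on the link design.
GOOD_OSNR_DB = 20.0
SHORT_LINK_KM = 10.0


def choose_config(telemetry: FiberTelemetry) -> TransceiverConfig:
    good_fiber = telemetry.osnr_db >= GOOD_OSNR_DB
    return TransceiverConfig(
        # Good fiber: higher-order modulation and lower SOA power.
        # Poor fiber: more robust modulation and higher power.
        modulation="16QAM" if good_fiber else "QPSK",
        soa_power="low" if good_fiber else "high",
        # Short fiber: save energy by scaling down transmitter/DSP power.
        low_power_mode=telemetry.length_km <= SHORT_LINK_KM,
    )


print(choose_config(FiberTelemetry(osnr_db=25.0, length_km=5.0)))
print(choose_config(FiberTelemetry(osnr_db=14.0, length_km=80.0)))
```

In practice an AI management layer would learn or tune such rules from network-wide telemetry rather than rely on fixed thresholds.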

Takeaways

Optical networks will need artificial intelligence and machine learning to scale more efficiently and affordably to handle the increased traffic and connected devices. Conversely, AI systems will also need faster pluggables than before to acquire data and make decisions more quickly. Pluggables that fit this new AI era must be fast, smart, and adapt to multiple use cases and conditions. They will need to scale up to speeds beyond 400G and relay monitoring data back to the AI management layer in the central office. The AI management layer can then program transceiver interfaces from this telemetry data to change parameters and optimize the network.

Tags: 400 Gbps links, affordable networks, AI networks, centralized management, data-hungry algorithms, Dense wavelength division multiplexing, digital signal processing, EFFECT Photonics, Fiber Monitoring, forward error correction, high-speed transceivers, mobile networks, Network automation, Network Function Virtualization, network orchestration, network scalability, optical pluggables, Reconfigurable transceivers, smart optical equipment, sustainable networks, telemetry data

What Do Coherent Access Pluggables Need?

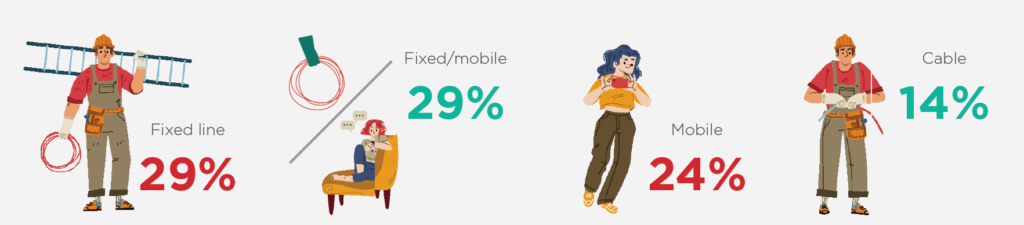

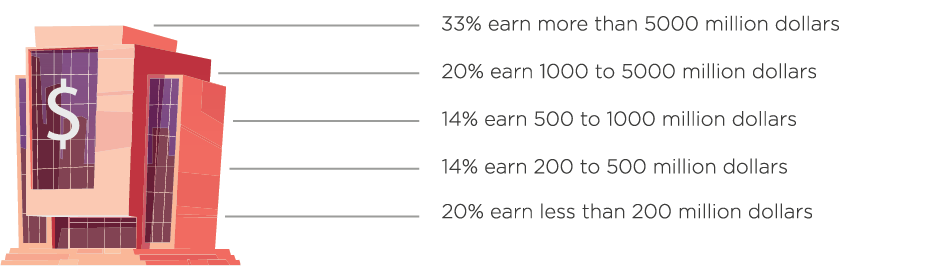

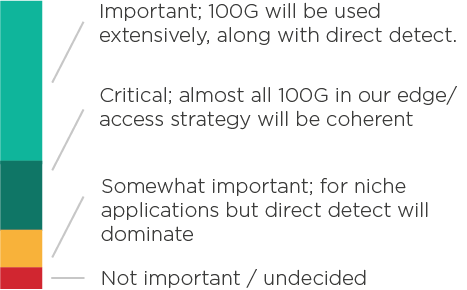

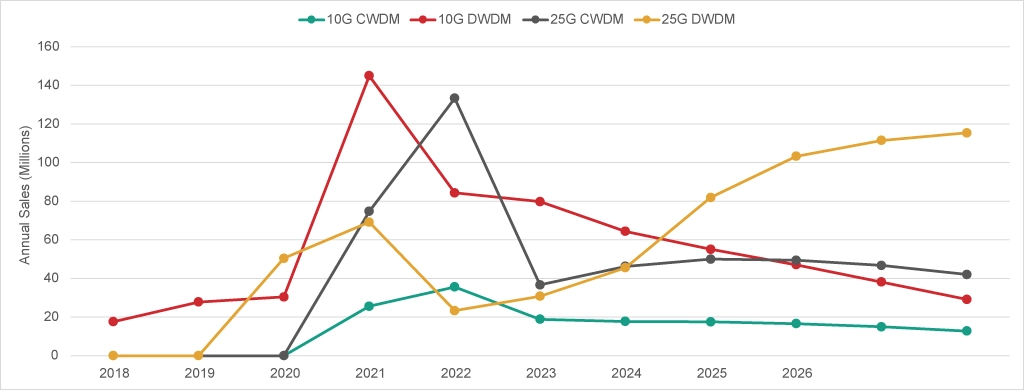

Given the success of 400ZR pluggable coherent solutions in the market, discussions in the telecom sector about a future beyond 400G pluggables have often focused on 800G solutions and 800ZR. However, there is also increasing excitement about “downscaling” to 100G coherent products for applications in the network edge. The industry is labeling these pluggables as 100ZR.

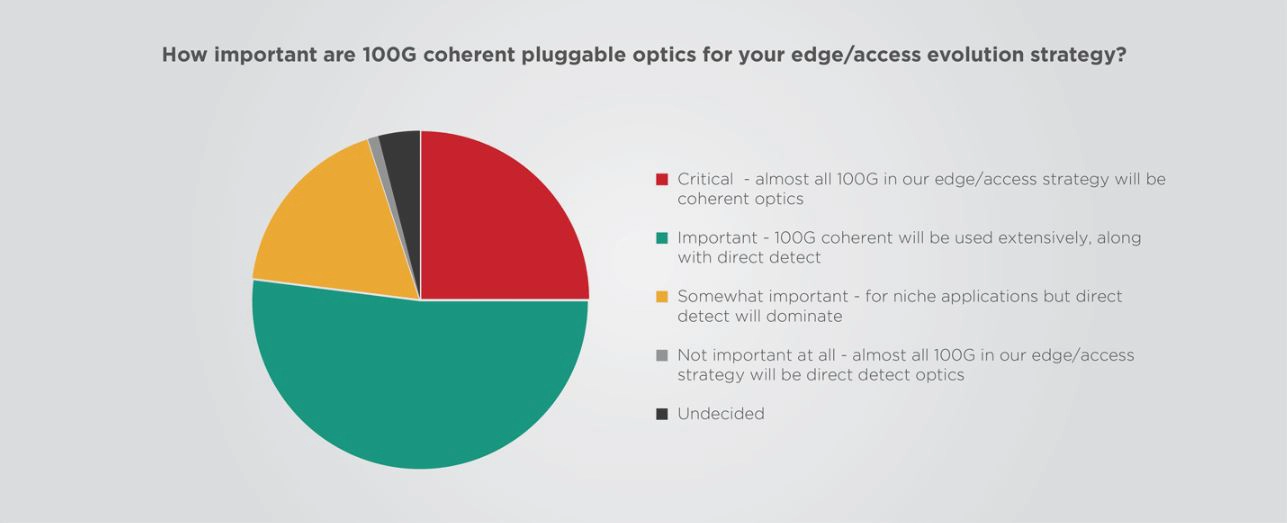

A recently released Heavy Reading survey revealed that over 75% of operators surveyed believe that 100G coherent pluggable optics will be used extensively in their edge and access evolution strategy. In response to this interest from operators, several vendors are keenly jumping on board the 100ZR train by announcing their development projects: Acacia, Coherent/ADVA, Marvell/InnoLight, and Marvell/OE Solutions.

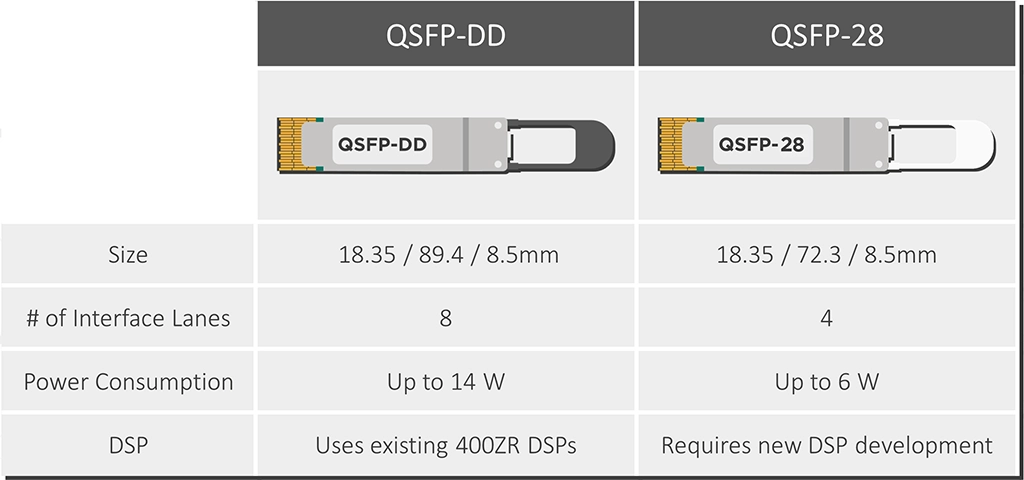

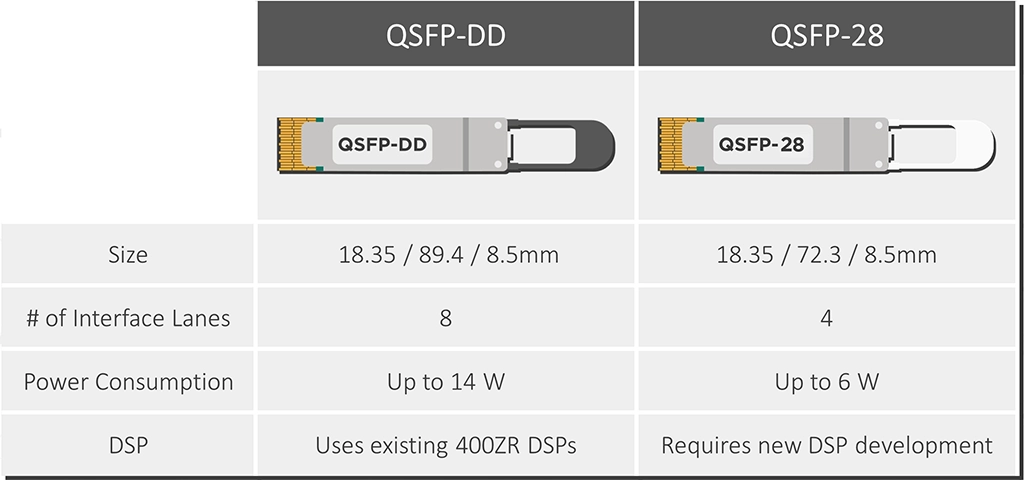

This growing interest in 100ZR and its expanding use cases are also changing how industry analysts view the potential of the 100ZR market. Last February, Cignal AI released a report on 100ZR, which stated that the viability of new low-power solutions in the QSFP28 form factor enabled use cases in access networks, thus doubling the size of their 100ZR shipment forecasts.

“The access market needs a simple, pluggable, low-cost upgrade to the 10G DWDM optics that it has been using for years. 100ZR is that upgrade. As access networks migrate from 1G solutions to 10G solutions, 100ZR will be a critical enabling technology.”

– Scott Wilkinson, Lead Analyst for Optical Components at Cignal AI.

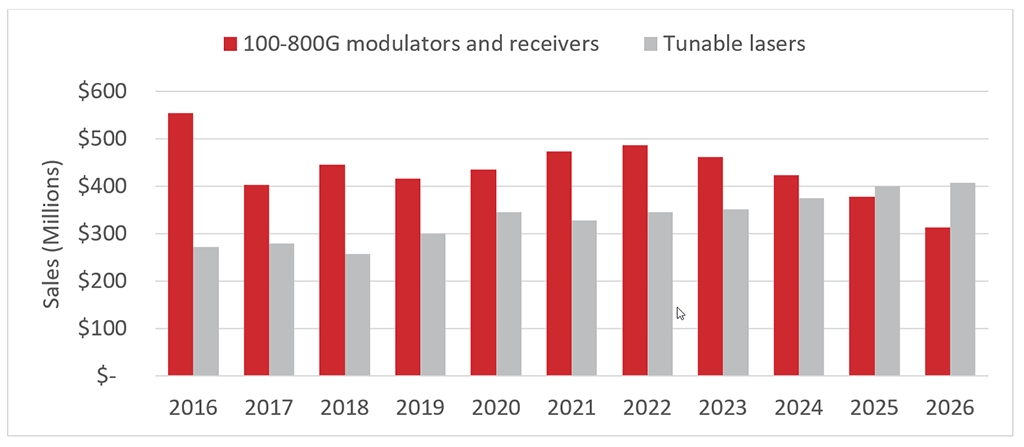

The 100ZR market can expand even further, however. Access networks are heavily price-conscious, and the lower the prices of 100ZR pluggables become, the more widely they will be adopted. Reaching such a goal requires a vibrant 100ZR ecosystem with multiple suppliers that can provide lasers, digital signal processors (DSPs), and full transceiver solutions that address the access market’s needs and price targets.

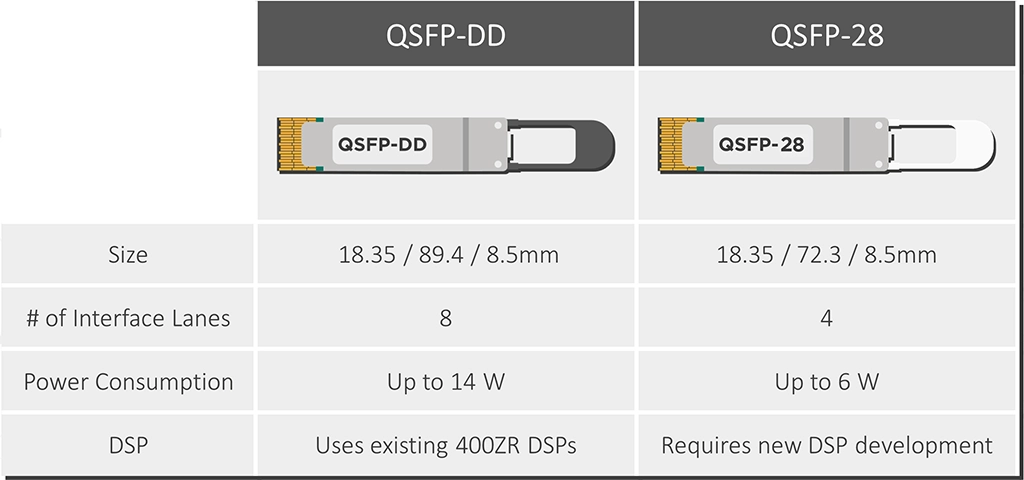

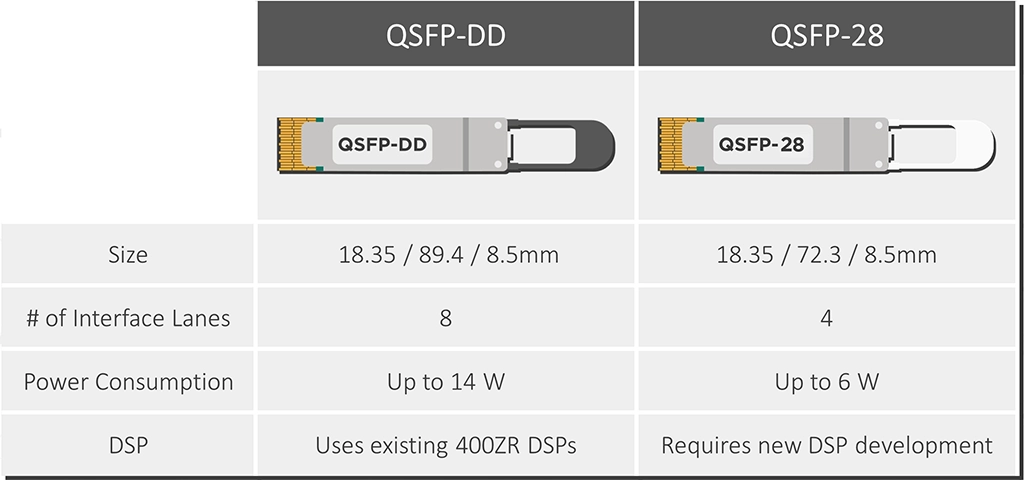

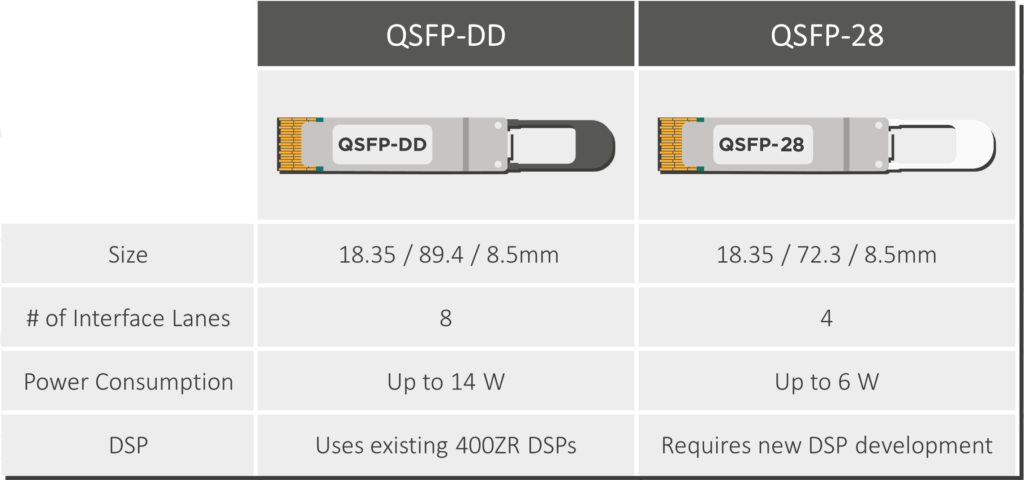

The Need for Lower Power

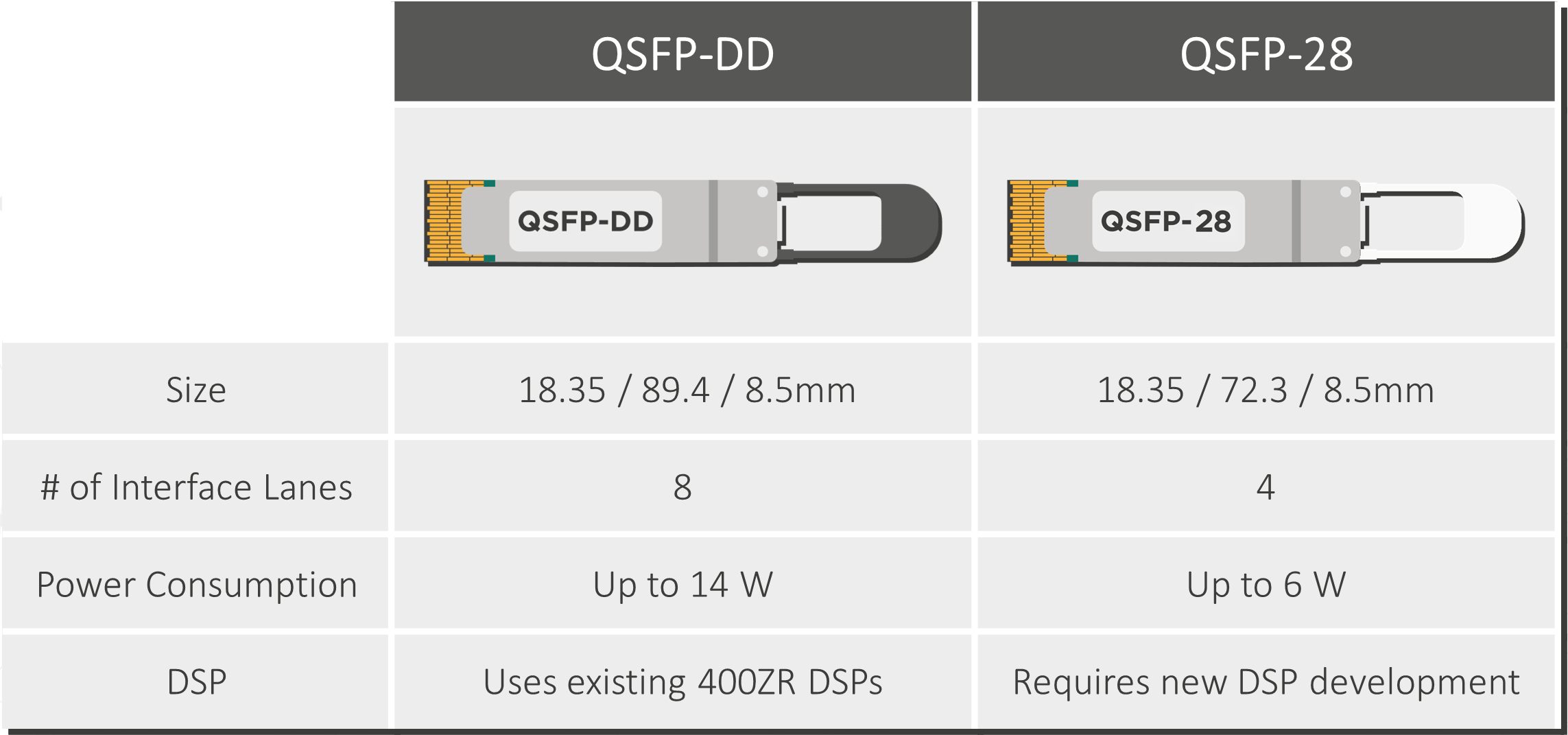

Unlike data centers and the network core, access network equipment lives in uncontrolled environments with limited cooling capabilities. Therefore, every extra watt of pluggable power consumption will impact how vendors and operators design their cabinets and equipment. QSFP-DD modules forced operators and equipment vendors to use larger cooling components (heatsinks and fans), meaning that each module would need more space to cool appropriately. The increased need for cabinet real estate makes these modules more costly to deploy in the access domain.

These struggles are a major reason why QSFP28 form factor solutions are becoming increasingly attractive in the 100ZR domain. Their power consumption (up to 6 watts) is lower than that of QSFP-DD form factors (up to 15 watts), which allows them to be stacked more densely in access network equipment rooms. In addition, QSFP28 modules are compatible with existing access network equipment, which often features QSFP28 slots.
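A quick calculation shows how the power difference translates into density. The 6 W and 15 W figures come from the paragraph above; the 60 W cabinet budget is a hypothetical number chosen only to make the arithmetic concrete.

```python
QSFP28_MAX_WATTS = 6        # upper bound quoted in the text
QSFP_DD_MAX_WATTS = 15      # upper bound quoted in the text
CABINET_BUDGET_WATTS = 60   # hypothetical power budget, for illustration


def max_modules(budget_watts, watts_per_module):
    """Modules that fit within a power budget at worst-case draw."""
    return budget_watts // watts_per_module


qsfp28_count = max_modules(CABINET_BUDGET_WATTS, QSFP28_MAX_WATTS)
qsfp_dd_count = max_modules(CABINET_BUDGET_WATTS, QSFP_DD_MAX_WATTS)

print(f"QSFP28 modules within budget:  {qsfp28_count}")
print(f"QSFP-DD modules within budget: {qsfp_dd_count}")
```

Under this assumed budget, the cabinet can host more than twice as many QSFP28 modules as QSFP-DD modules.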

The Need to Overcome Laser and DSP Bottlenecks

Even though QSFP28 modules are better at addressing the power concerns of the access domain, some obstacles prevent their wider availability.

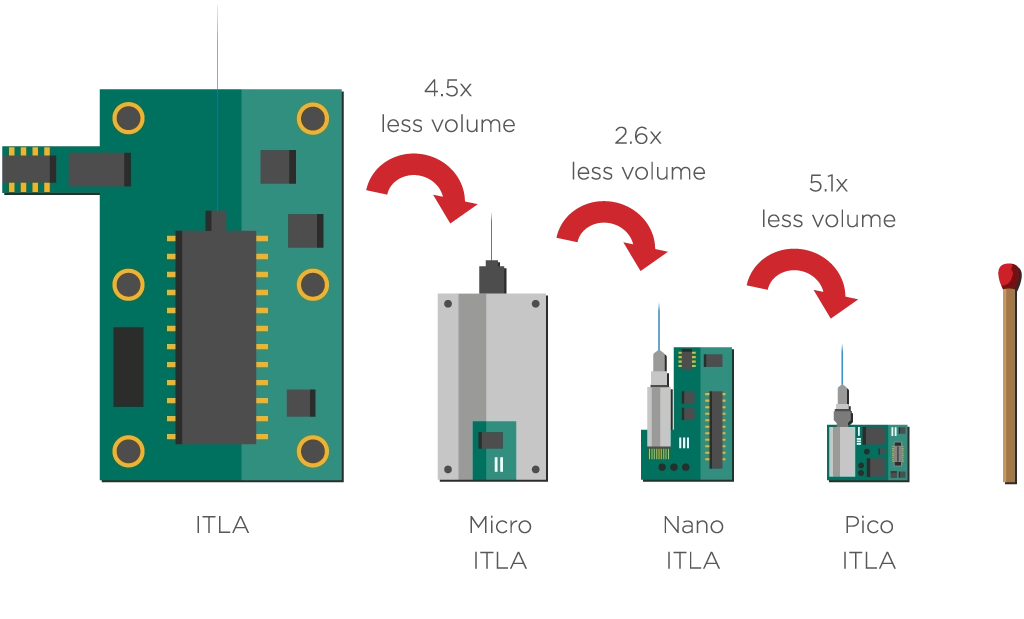

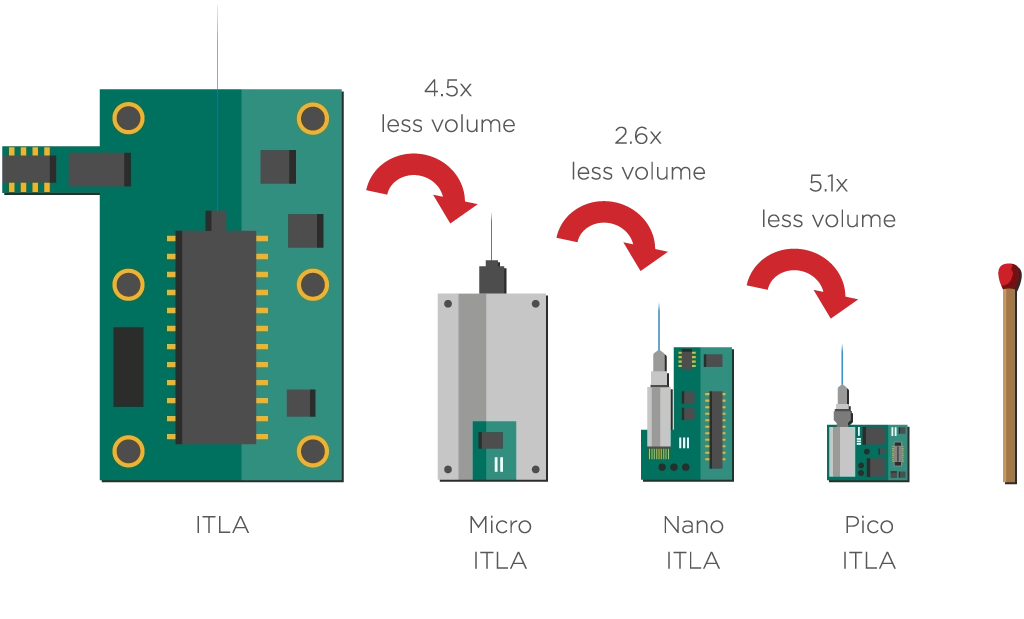

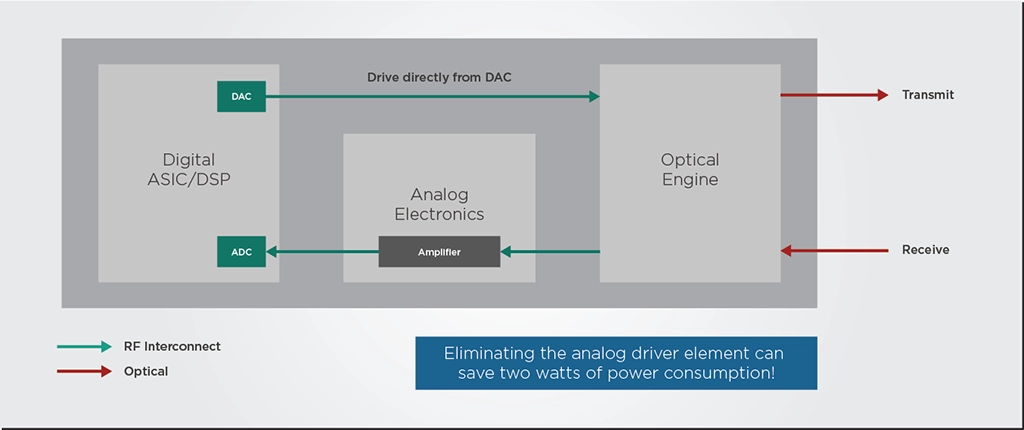

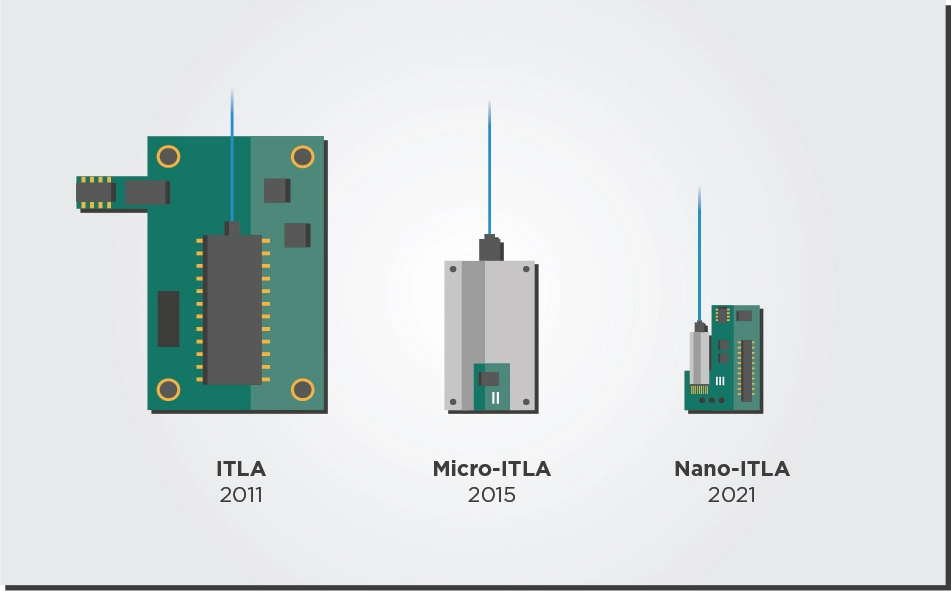

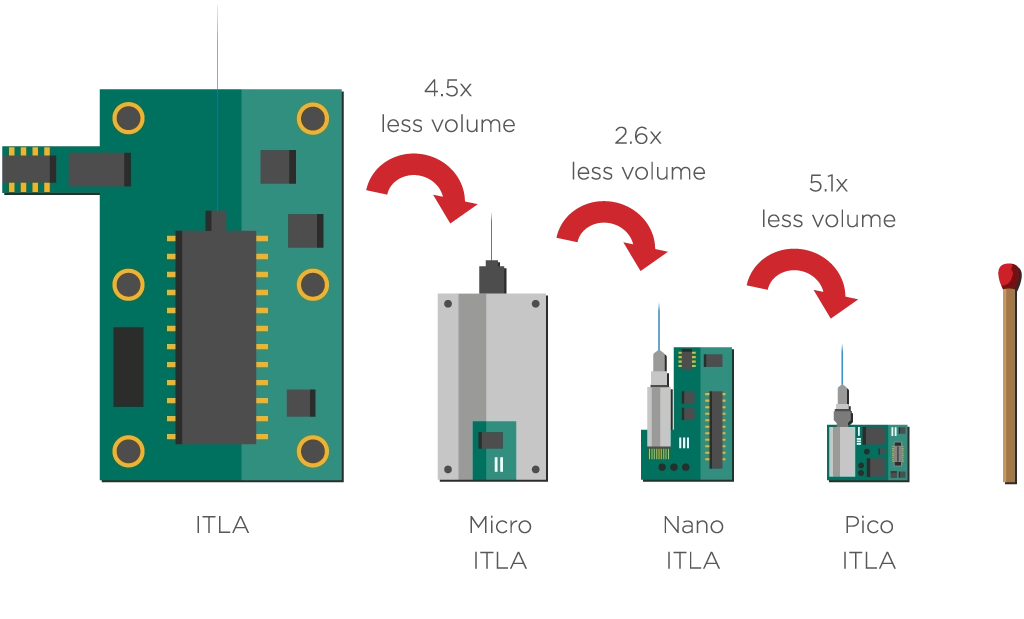

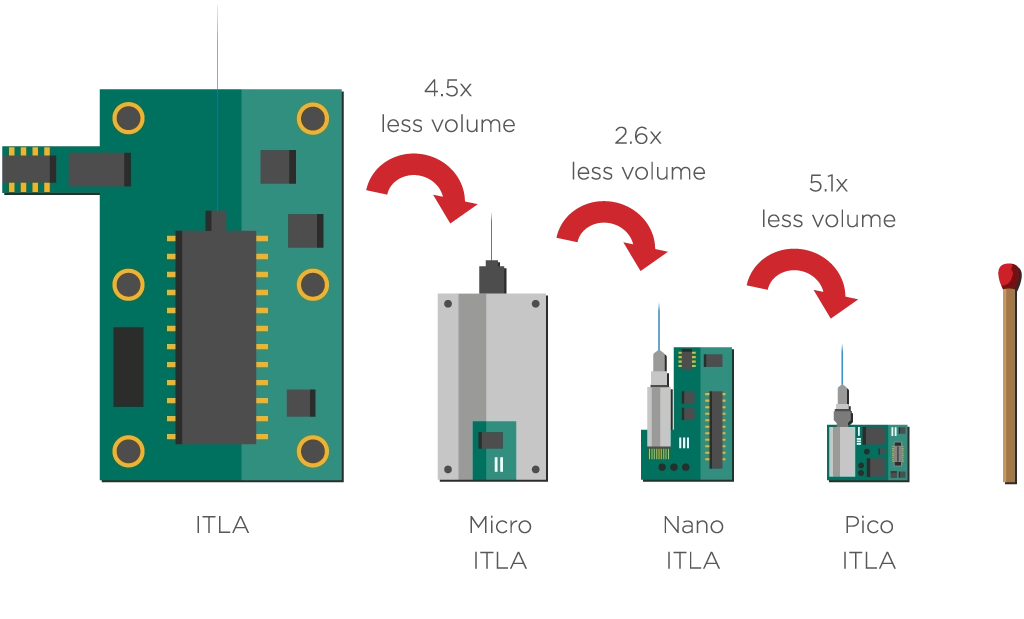

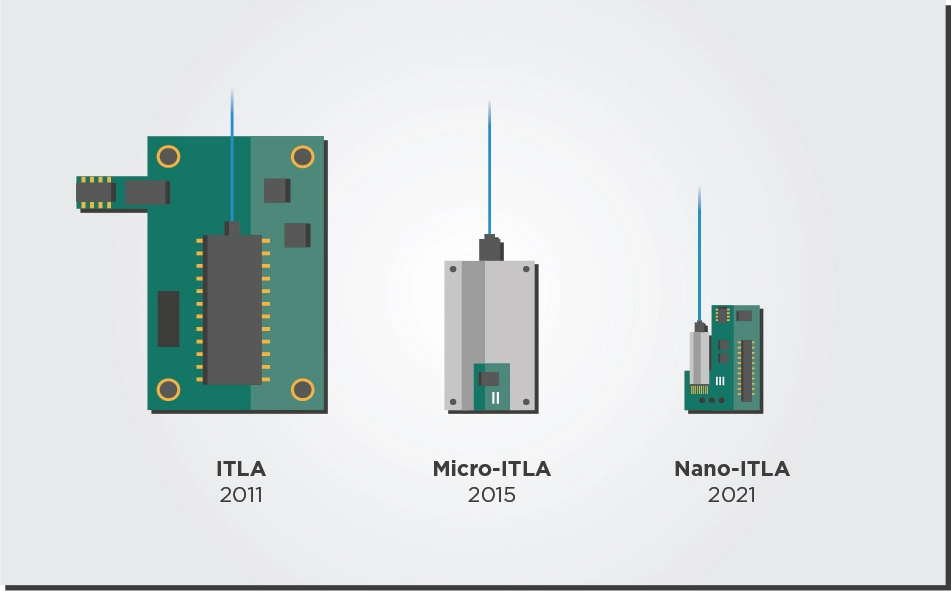

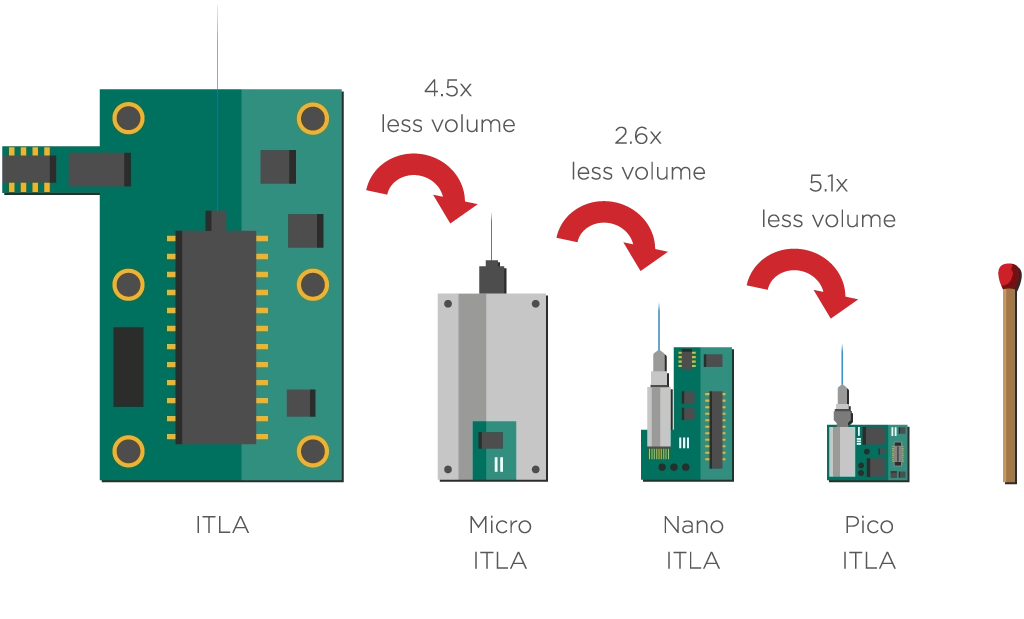

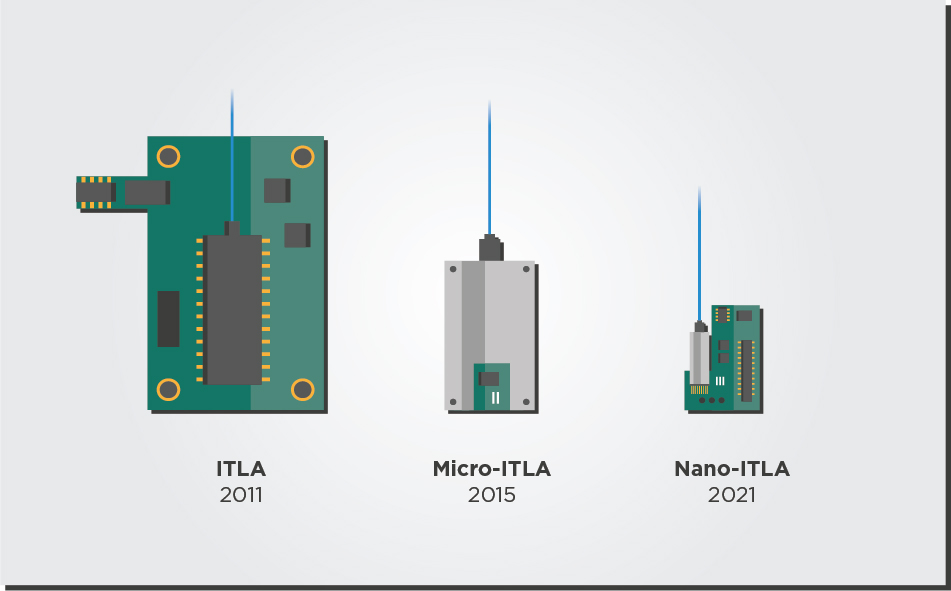

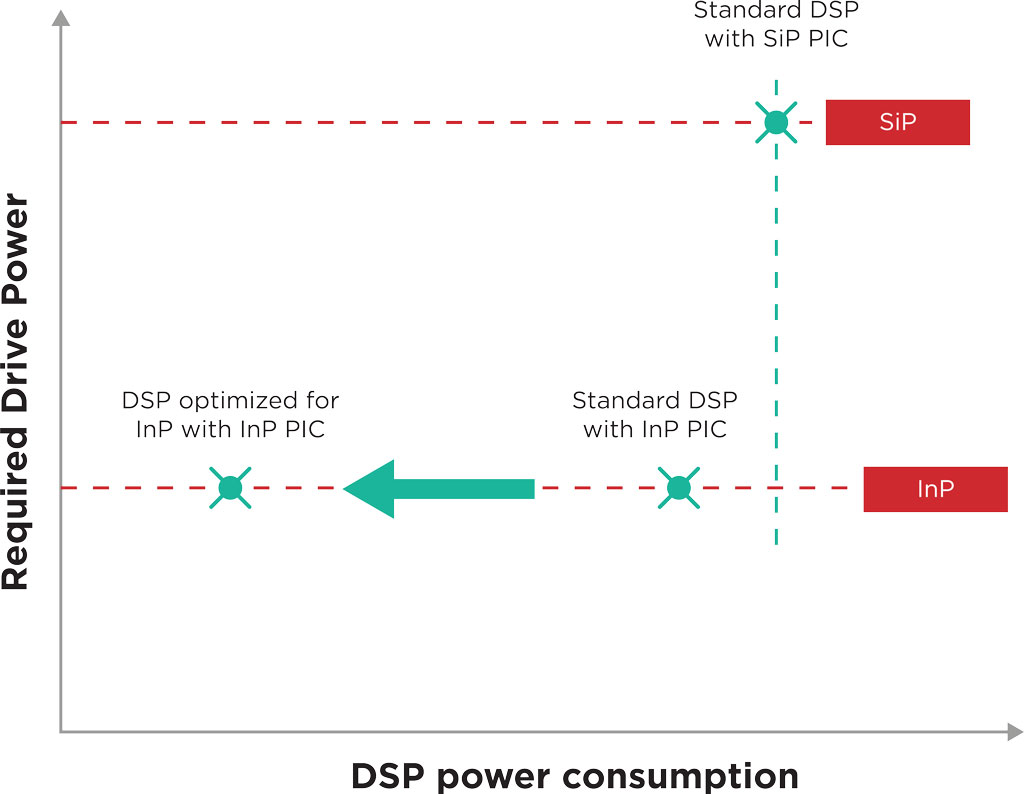

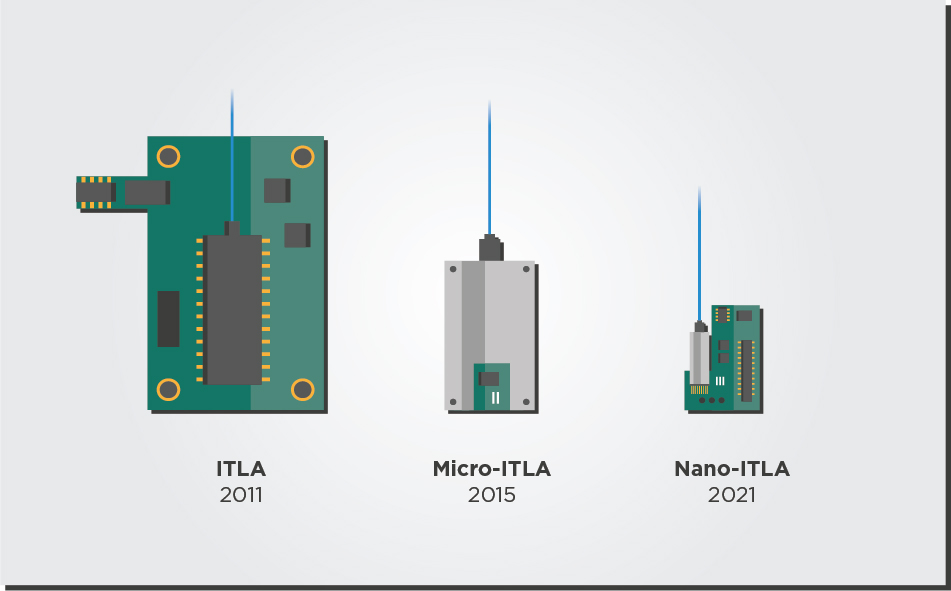

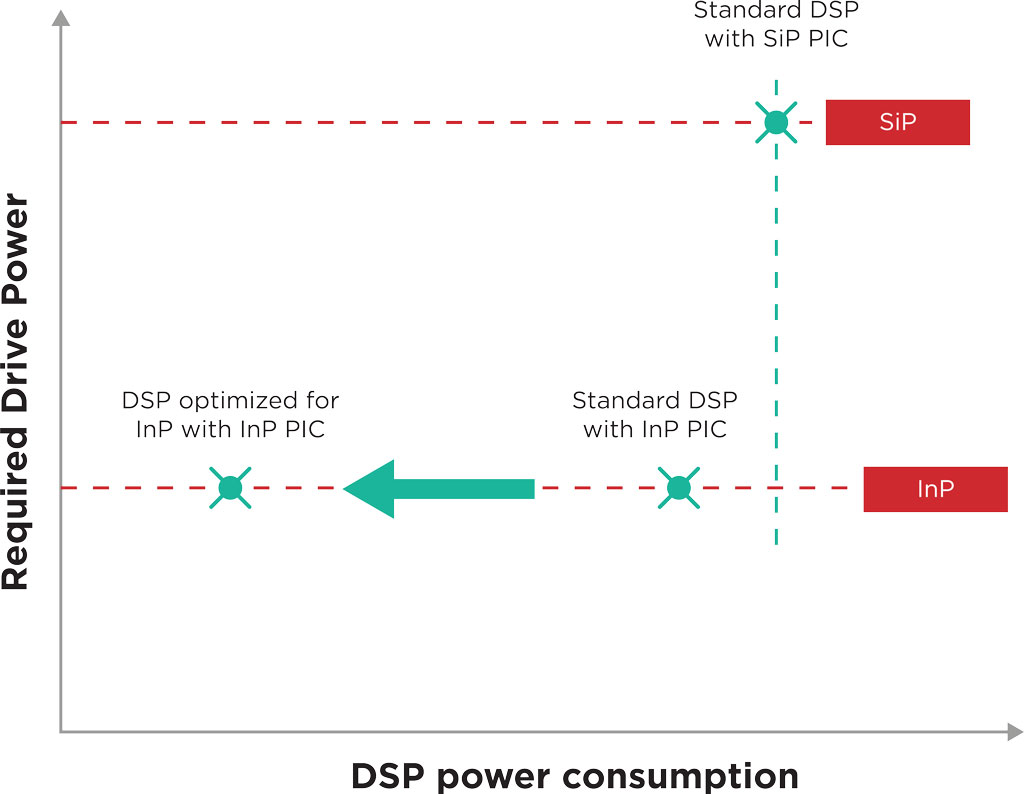

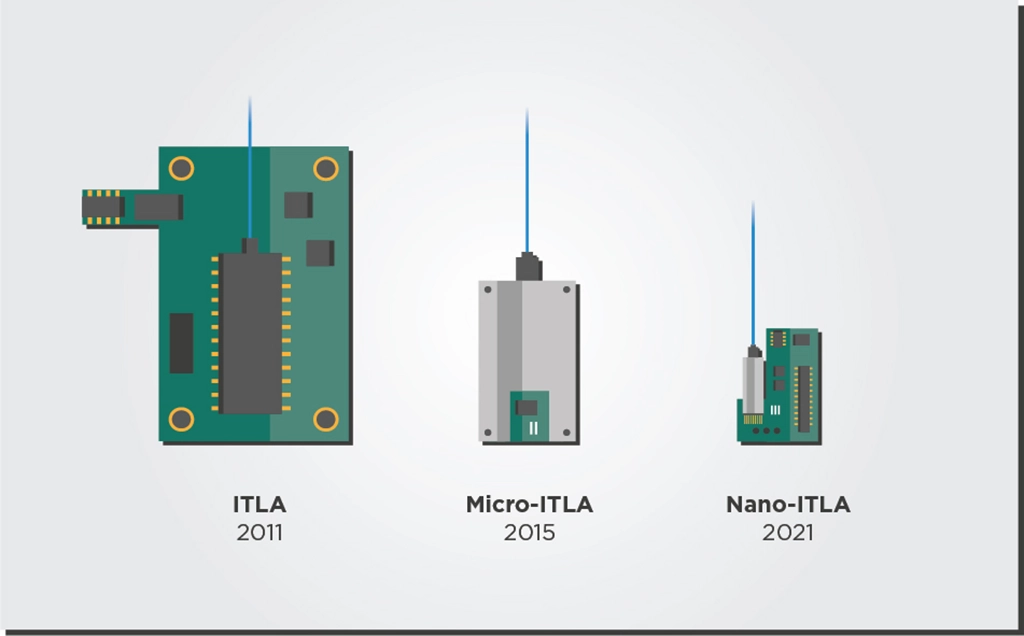

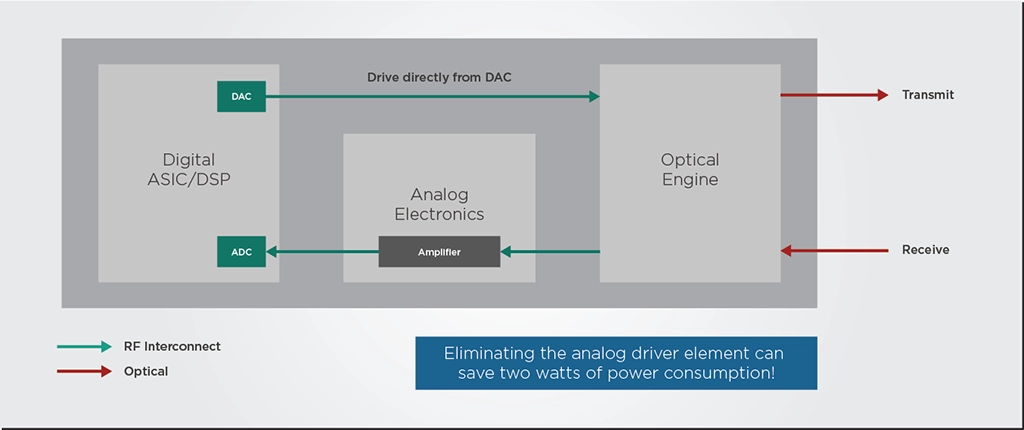

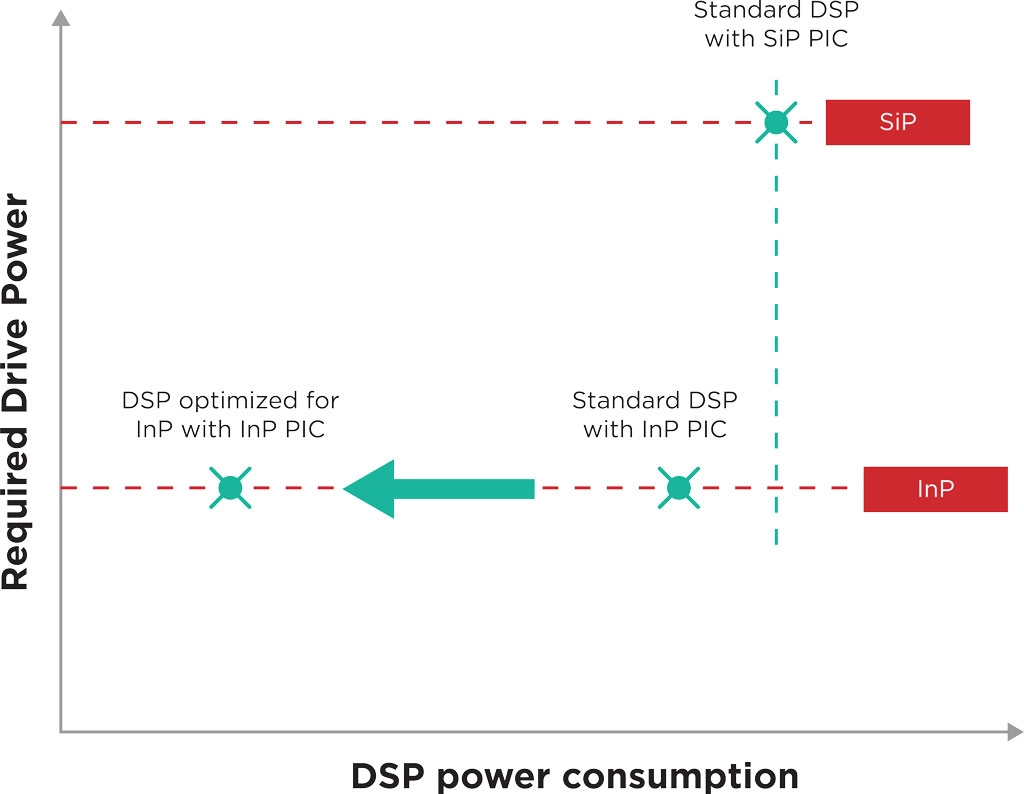

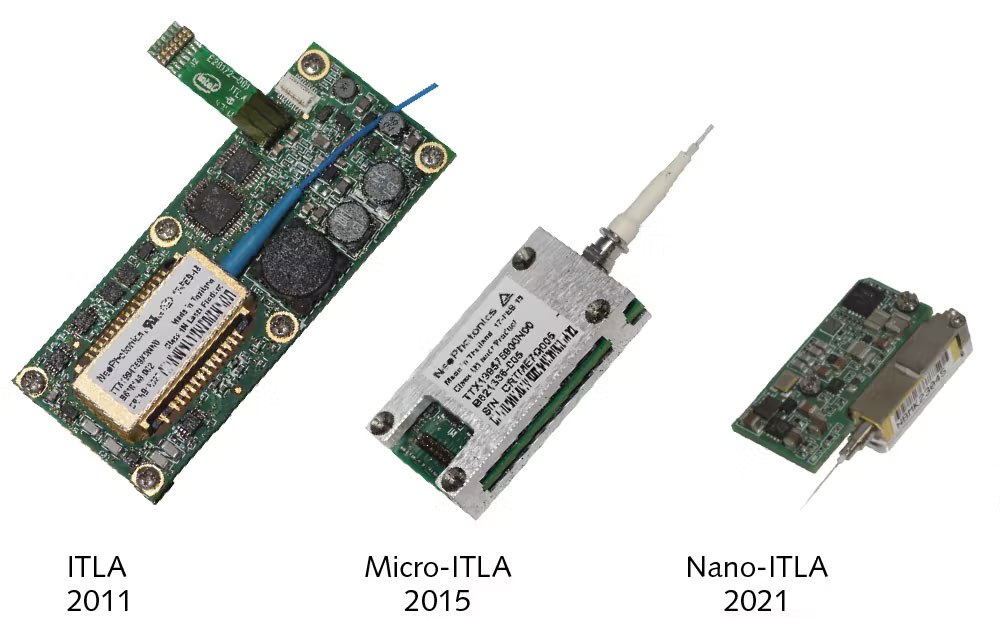

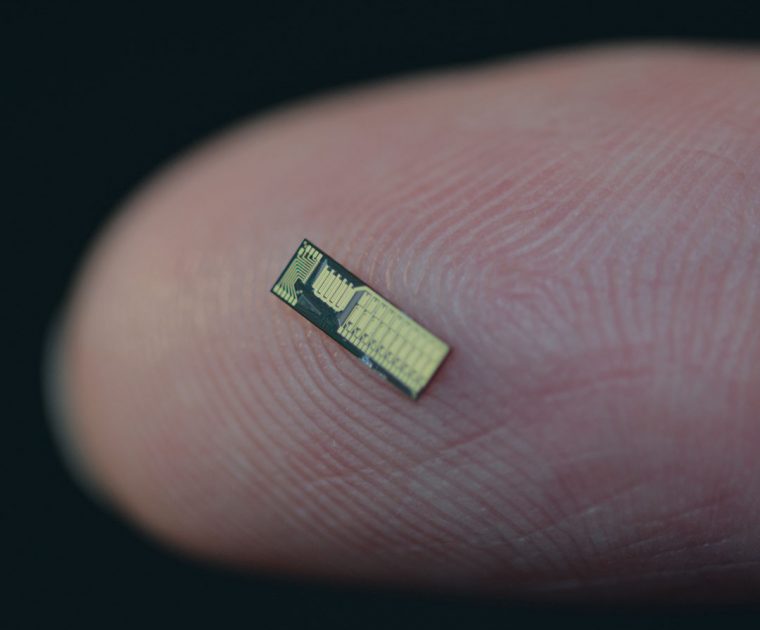

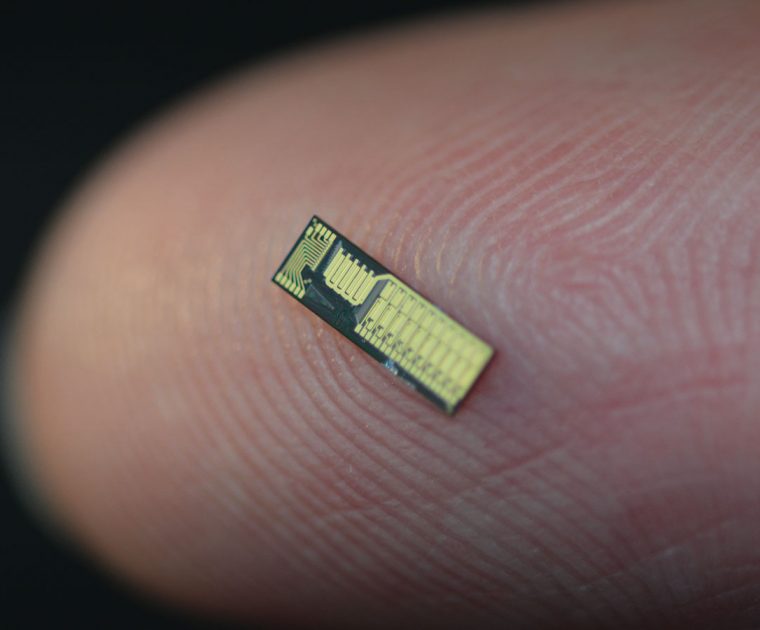

Since QSFP28 pluggables require lower power consumption and slightly smaller footprints, they also need new laser and digital signal processor (DSP) solutions. The industry cannot simply incorporate the same lasers and DSPs used for 400ZR devices. This is why EFFECT Photonics is developing a pico tunable laser assembly (pTLA) and a 100G DSP that will best fit 100ZR solutions in the QSFP28 form factor.

However, a 100ZR industry with only one or two laser and DSP suppliers will struggle to scale up and make these solutions more widely accessible. The 400ZR market provides a good example of the benefits of a vibrant ecosystem. This larger vendor ecosystem helps 400ZR production scale up in volume and satisfy a rapidly growing market.

The Need for Standards and Interoperability

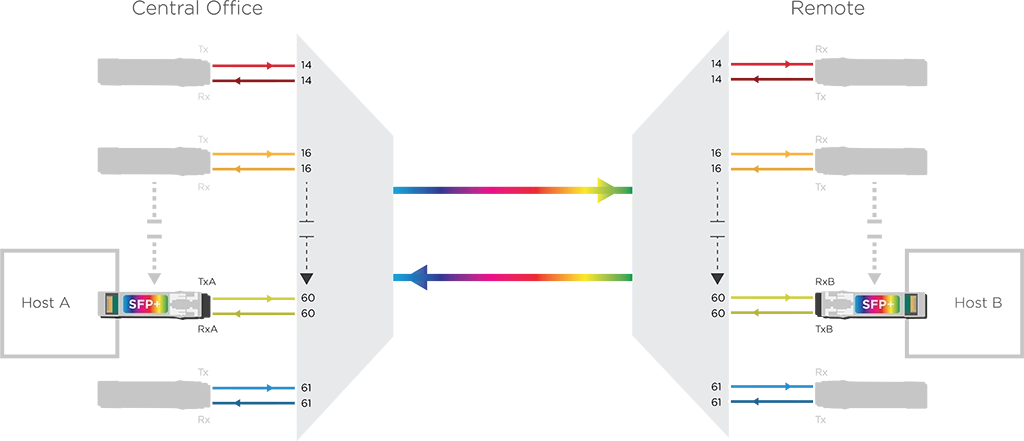

Another reason 400ZR solutions became so widespread is their standardization and interoperability. Previously, the 400G space was more fragmented, and pluggables from different vendors could not operate with each other, forcing operators to use a single vendor for their entire network deployment.

Eventually, datacom and telecom providers approached their suppliers and the Optical Internetworking Forum (OIF) about the need to develop an interoperable 400G coherent solution that addressed their needs. These discussions and technology development led the OIF to publish the 400ZR implementation agreement in 2020. This standardization and interoperability effort enabled the explosive growth of the 400G market.

100ZR solutions must follow a similar path to reach a larger market. If telecom and datacom operators want more widespread and affordable 100ZR solutions, more of them will have to join the push for 100ZR standardization and interoperability. This includes standards not just for the power consumption and line interfaces but also for management and control interfaces, enabling more widespread use of remote provisioning and diagnostics. These efforts will make 100ZR devices easier to implement across access networks, whether in standards-compatible modes for interoperability or in high-performance modes that use proprietary features.

Takeaways

The demand from access network operators for 100ZR solutions is there, but it has yet to fully materialize in the industry forecasts because, right now, there is not enough supply of viable 100ZR solutions that can address their targets. So in a way, further growth of the 100ZR market is a self-fulfilling prophecy: the more suppliers and operators support 100ZR, the easier it is to scale up the supply and meet the price and power targets of access networks, expanding the potential market. Instead of one or two vendors fighting for control of a smaller 100ZR pie, having multiple vendors and standardization efforts will increase the supply, significantly increasing the size of the pie and benefiting everyone’s bottom line.

Therefore, EFFECT Photonics believes in the vision of a 100ZR ecosystem where multiple vendors can provide affordable laser, DSP, and complete transceiver solutions tailored to network edge use cases. Meanwhile, if network operators push towards greater standardization and interoperability, 100ZR solutions can become even more widespread and easy to use.

Tags: 100G Coherent Products, 100ZR, 400ZR, 800G Solutions, 800ZR, Acacia, access networks, Cignal AI report, Coherent/ADVA, DSPs, DWDM optics, Heavy Reading survey, lasers, Marvell/InnoLight, Marvell/OE Solutions, operators, power consumption, QSFP-DD form factors, QSFP28 form factor, Scott Wilkinson, vendors

Reducing the Cost per Bit with Coherent Technology

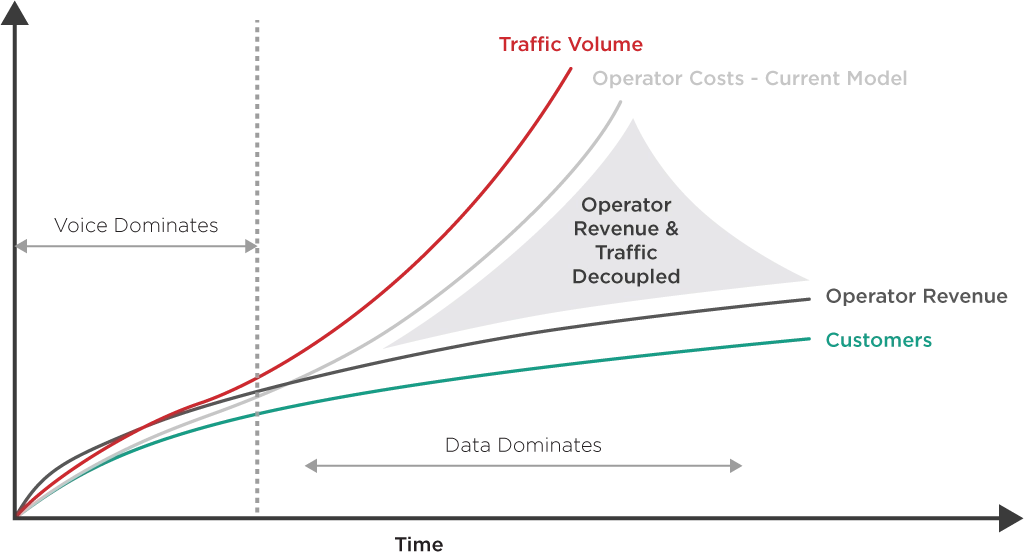

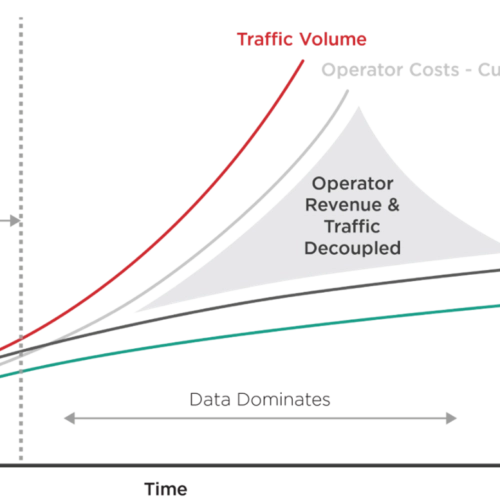

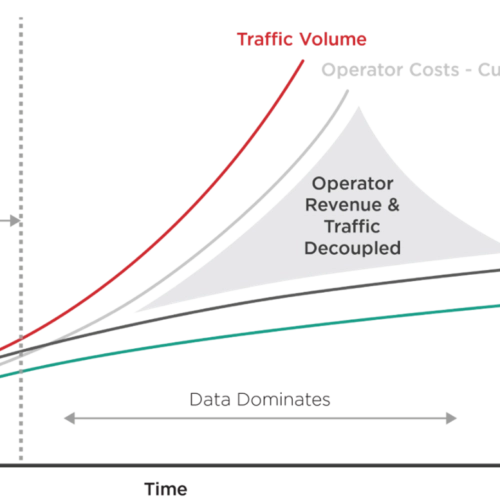

The cost per bit is a metric directly impacting network operators’ economic viability and competitive positioning. It represents the expense of transmitting a single bit of information across a network, encompassing infrastructure, operations, and maintenance costs. Lower costs per bit enable providers to offer more data-intensive services at competitive prices, attracting more customers and increasing revenue. Additionally, optimizing this metric helps achieve higher efficiency and sustainability in network operations.

Different segments of a telecommunication network—core, metro, and access—prioritize the cost per bit differently due to their distinct roles and technical requirements. The core network, which connects major cities and data centers, handles high data volumes over long distances. Economies of scale play a significant role here, as reducing the cost per bit is crucial for maintaining profitability on the vast amount of data transmitted. This segment typically invests in high-capacity, longer-haul technologies that, while expensive, reduce the cost per bit through enhanced efficiency and higher data throughput.

Conversely, edge networks face different challenges and priorities. They must prioritize flexibility and adaptability to handle varying traffic loads efficiently. Reducing the cost per bit involves deploying technologies that can scale quickly and cost-effectively. The access segment of edge networks, which brings connectivity directly to end-users, focuses on maximizing coverage and reliability. Here, the cost per bit needs to be managed against the need for extensive physical infrastructure that reaches individual customers.

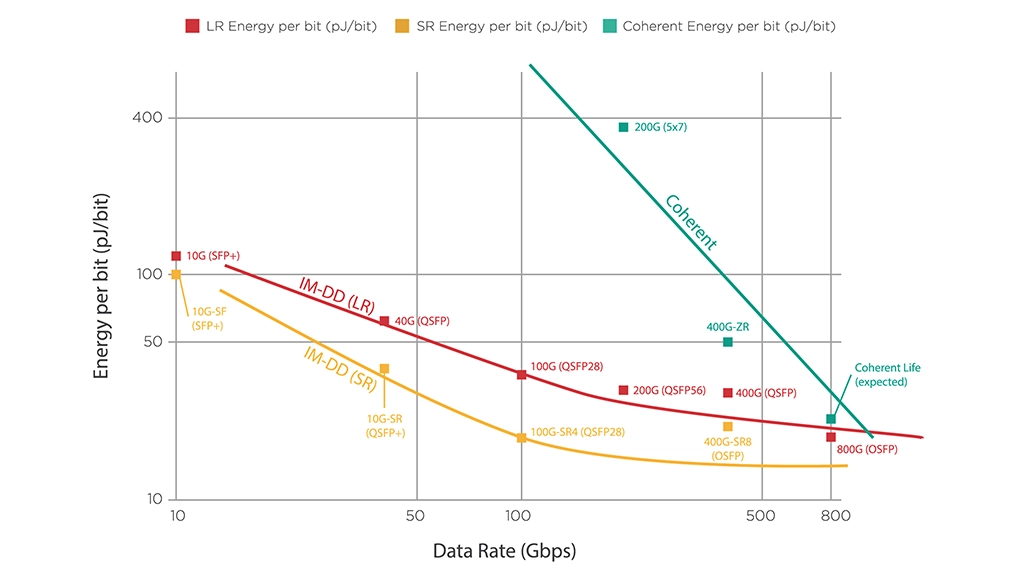

Coherent technology is often seen as more expensive than direct detection (IM-DD), but in this article, we will explore some ways in which the initial investment in coherent technology can help networks reduce their cost per bit.

Coherent Increases Transmission Reach

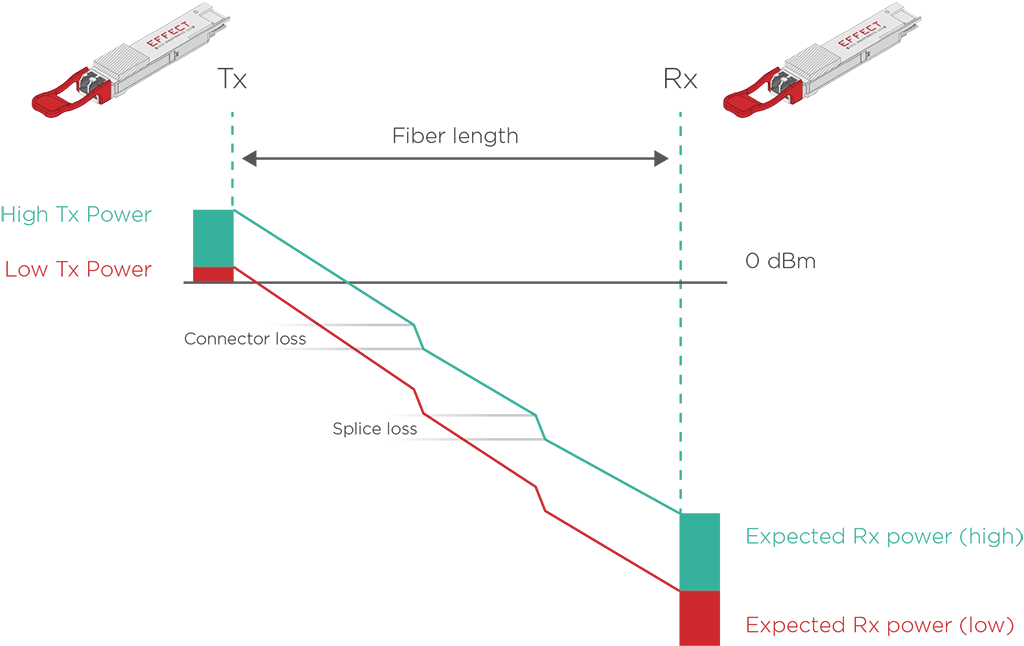

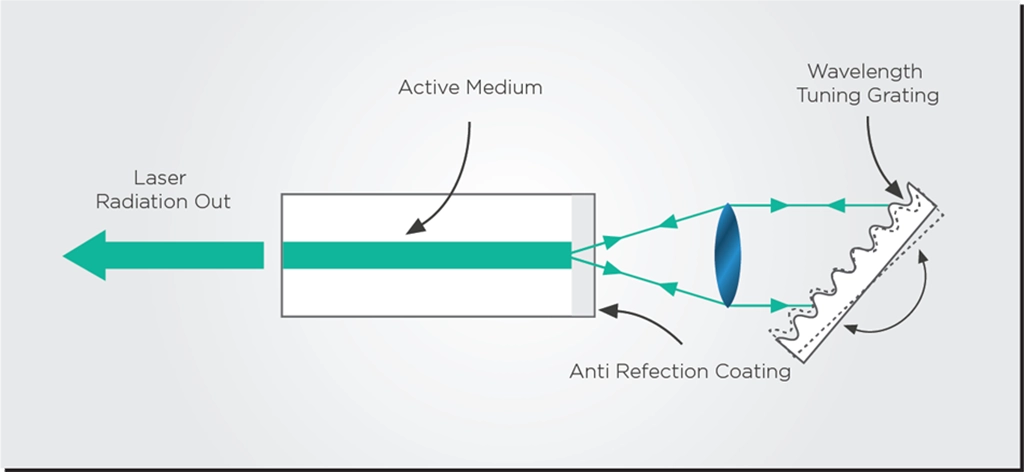

The quality of the light signal degrades when traveling through an optical fiber by a process called dispersion. The same phenomenon happens when a prism splits white light into several colors. The fiber also adds other distortions due to nonlinear optical effects.

These effects get worse as the input power of the light signal increases, leading to a trade-off. You might want more power to transmit over longer distances, but the nonlinear distortions also become larger, which defeats the purpose of using more power.

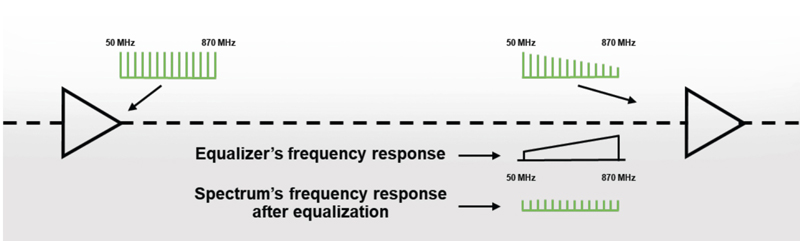

Coherent systems use sophisticated digital signal processing (DSP) technologies to automatically compensate for signal impairments, including chromatic and polarization mode dispersion. Coherent receivers are also highly sensitive, allowing them to detect signals over longer distances with higher fidelity than is possible with IM-DD systems. The dispersion compensation and increased sensitivity reduce the number of regenerative repeaters and other physical modules needed to boost the signal over longer distances.

Fewer repeaters mean lower energy consumption, reduced maintenance, and fewer operational disruptions, all contributing to a lower cost per bit. Additionally, the ability to transmit over extended distances without degradation in signal quality allows for more straightforward network architectures with longer point-to-point connections, simplifying the overall network design and further reducing costs.

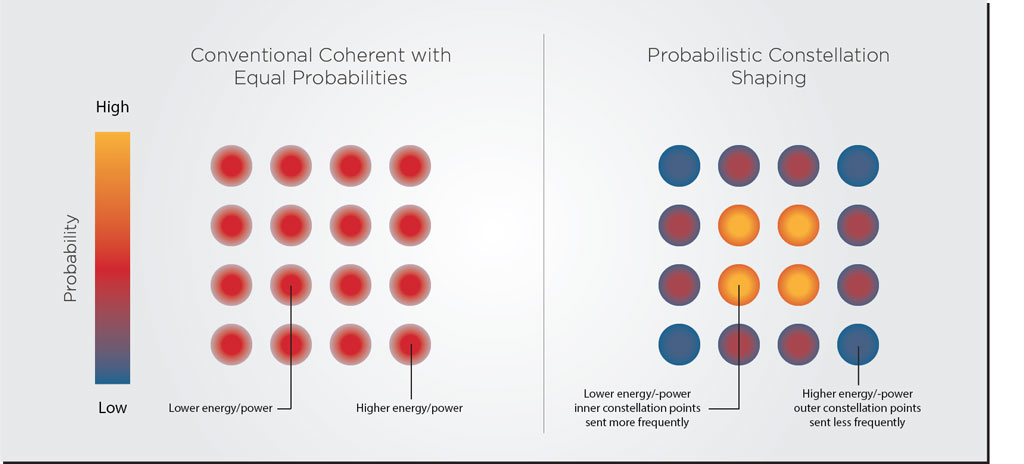

Coherent Increases Transmission Efficiency

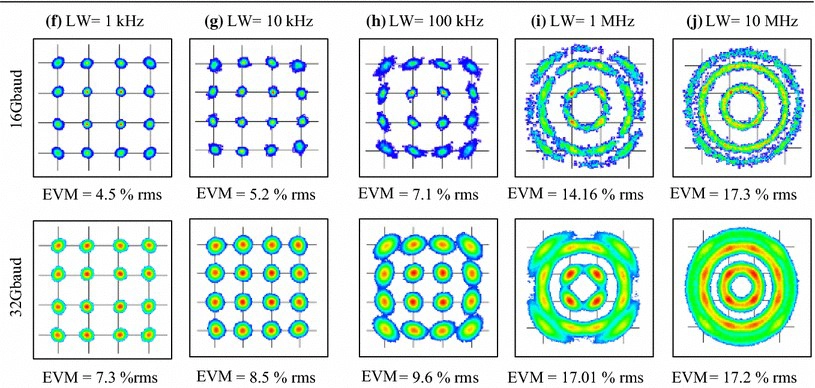

Coherent systems use complex modulation formats that encode data on a light wave’s amplitude, phase, and polarization rather than just its intensity. By encoding multiple bits per symbol, these systems can transmit more data over a single wavelength than IM-DD, which primarily uses intensity-only modulation. This effectively increases the data-carrying capacity of a single fiber.
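The gain from encoding multiple bits per symbol can be made concrete with a short calculation. This is a minimal sketch: the function names and the 30 GBd symbol rate are assumptions chosen for illustration, and the result is the raw line rate before FEC and framing overhead.

```python
import math


def bits_per_symbol(constellation_points, polarizations=1):
    """Bits carried by one symbol: log2(points) per polarization."""
    return round(math.log2(constellation_points)) * polarizations


def raw_rate_gbps(symbol_rate_gbaud, constellation_points, polarizations=1):
    """Raw line rate in Gbps, before FEC and framing overhead."""
    return symbol_rate_gbaud * bits_per_symbol(constellation_points, polarizations)


SYMBOL_RATE_GBAUD = 30  # illustrative symbol rate, not a product spec

# IM-DD with on-off keying: 2 intensity levels, one polarization.
print("IM-DD OOK:", raw_rate_gbps(SYMBOL_RATE_GBAUD, 2), "Gbps")
# Coherent dual-polarization QPSK: 4 points on each of 2 polarizations.
print("DP-QPSK:  ", raw_rate_gbps(SYMBOL_RATE_GBAUD, 4, 2), "Gbps")
# Coherent dual-polarization 16QAM: 16 points on each of 2 polarizations.
print("DP-16QAM: ", raw_rate_gbps(SYMBOL_RATE_GBAUD, 16, 2), "Gbps")
```

At the same symbol rate, dual-polarization QPSK carries four times as many bits per symbol as on-off keying, and dual-polarization 16QAM carries eight times as many.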

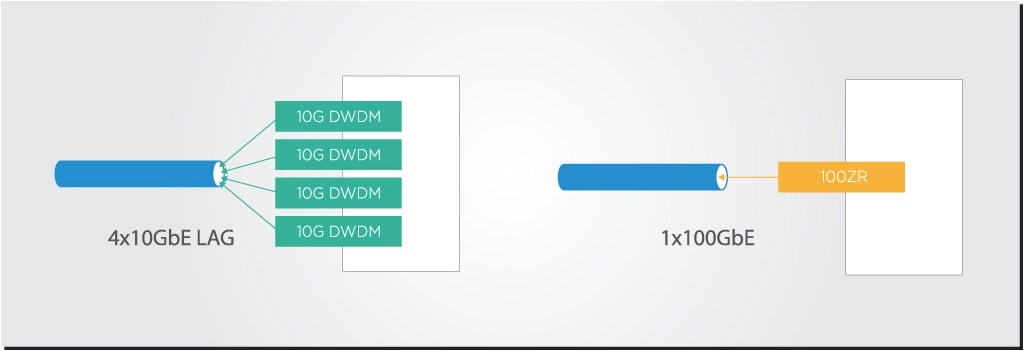

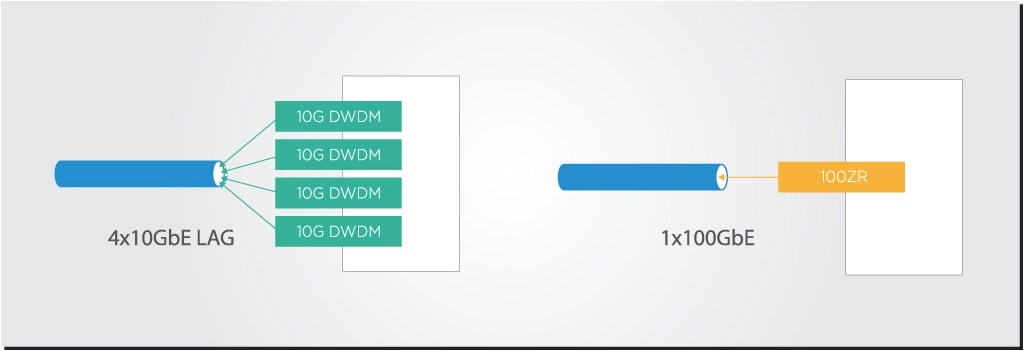

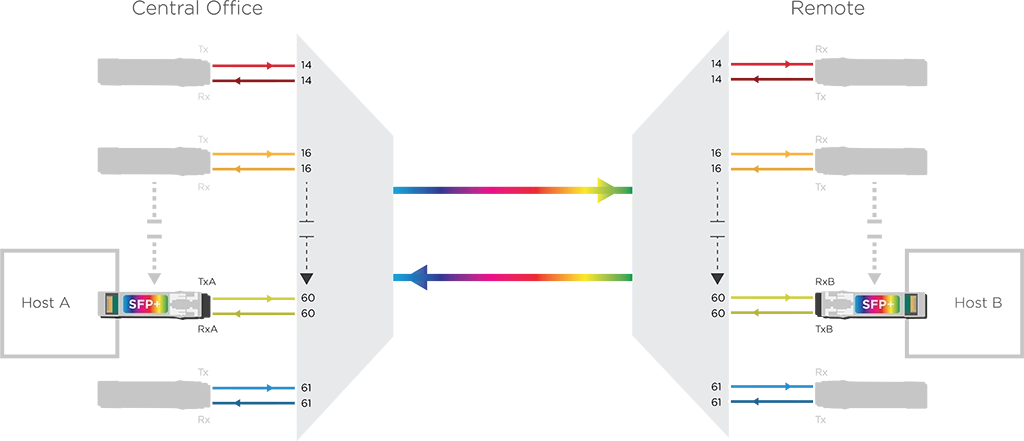

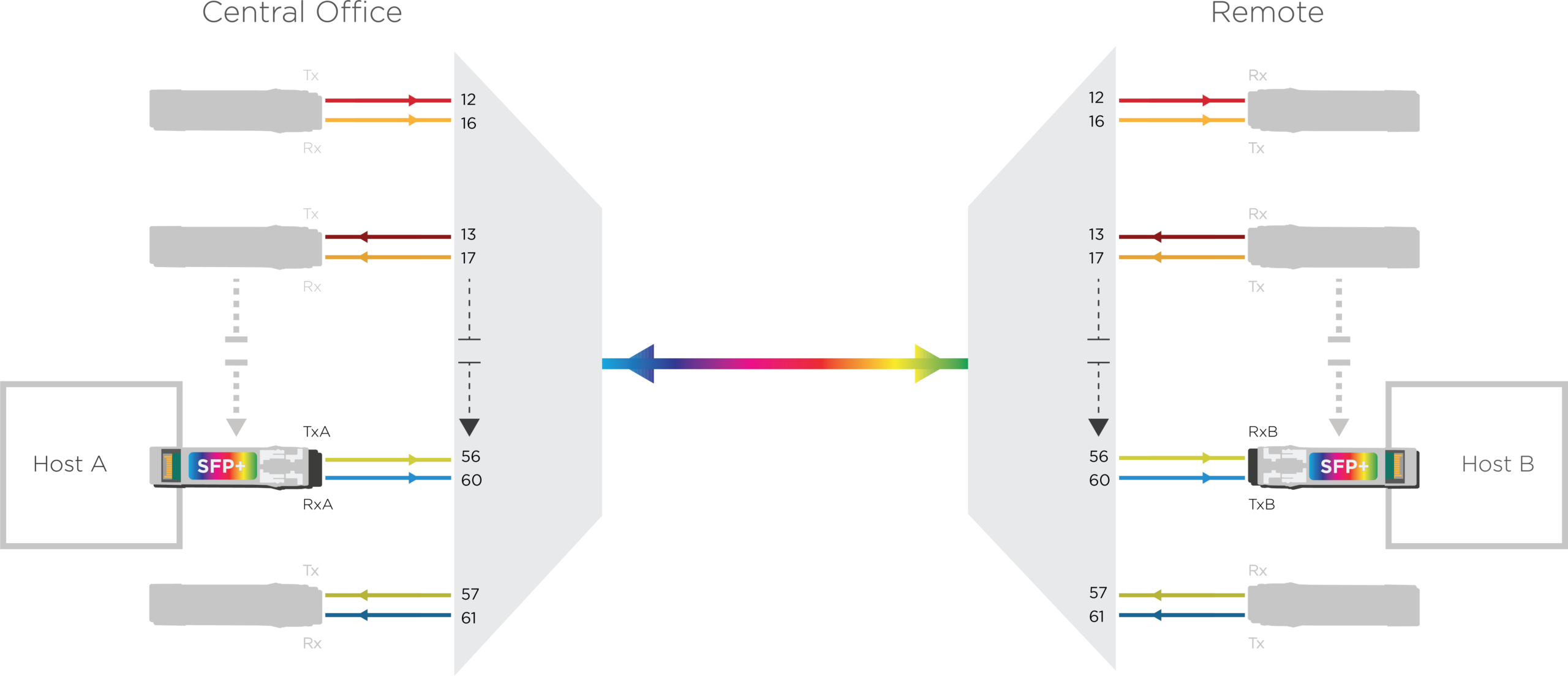

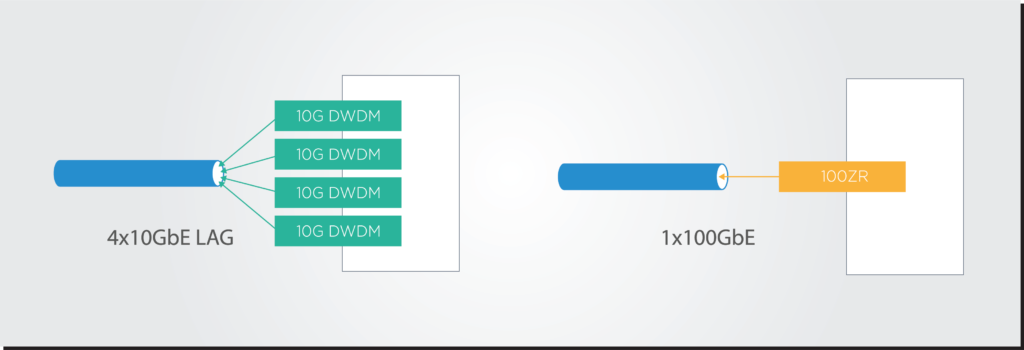

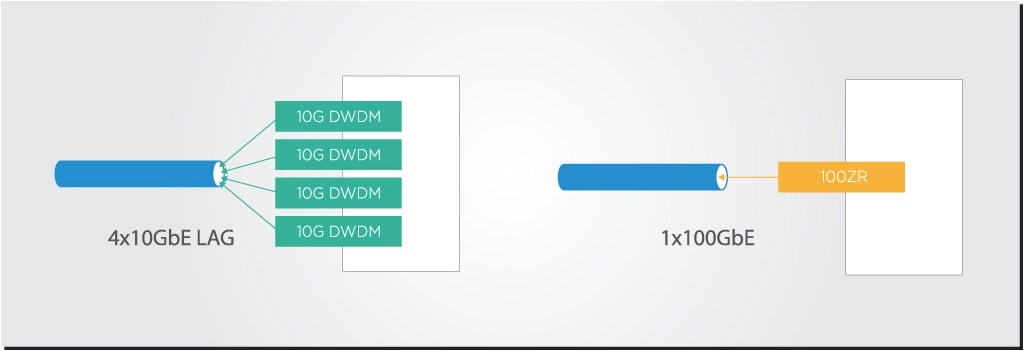

Such efficiency can allow a single coherent channel to replace the work of several IM-DD channels. In our previous article, we provided an example of a single 100Gbps coherent channel replacing the link aggregation of four 10Gbps IM-DD channels found in some access and aggregation network architectures.

This substitution would replace eight SFP+ transceivers with just two coherent 100G transceivers, simplifying network configuration and operation. It more than doubles the capacity of 4x10Gbps link aggregation, allowing the network to handle more data traffic while reducing the required physical infrastructure, effectively reducing the cost per bit.
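The numbers in this substitution follow from simple counting, since each point-to-point link needs one transceiver at each end. The helper below is a hypothetical sketch of that arithmetic.

```python
def link_summary(parallel_links, gbps_per_link):
    """Transceiver count and total capacity for a set of parallel links."""
    return {
        "transceivers": parallel_links * 2,  # one transceiver per link end
        "capacity_gbps": parallel_links * gbps_per_link,
    }


imdd = link_summary(parallel_links=4, gbps_per_link=10)       # 4 x 10G LAG
coherent = link_summary(parallel_links=1, gbps_per_link=100)  # 1 x 100G

capacity_gain = coherent["capacity_gbps"] / imdd["capacity_gbps"]

print("IM-DD 4x10G:  ", imdd)
print("Coherent 100G:", coherent)
print("Capacity gain:", capacity_gain)
```

The coherent link uses a quarter of the transceivers while providing 2.5 times the capacity.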

You can consult the recent Cignal AI report on 100ZR technologies to gain further insight into this link aggregation upgrade’s potential market and reach.

The Synergy with WDM Technology

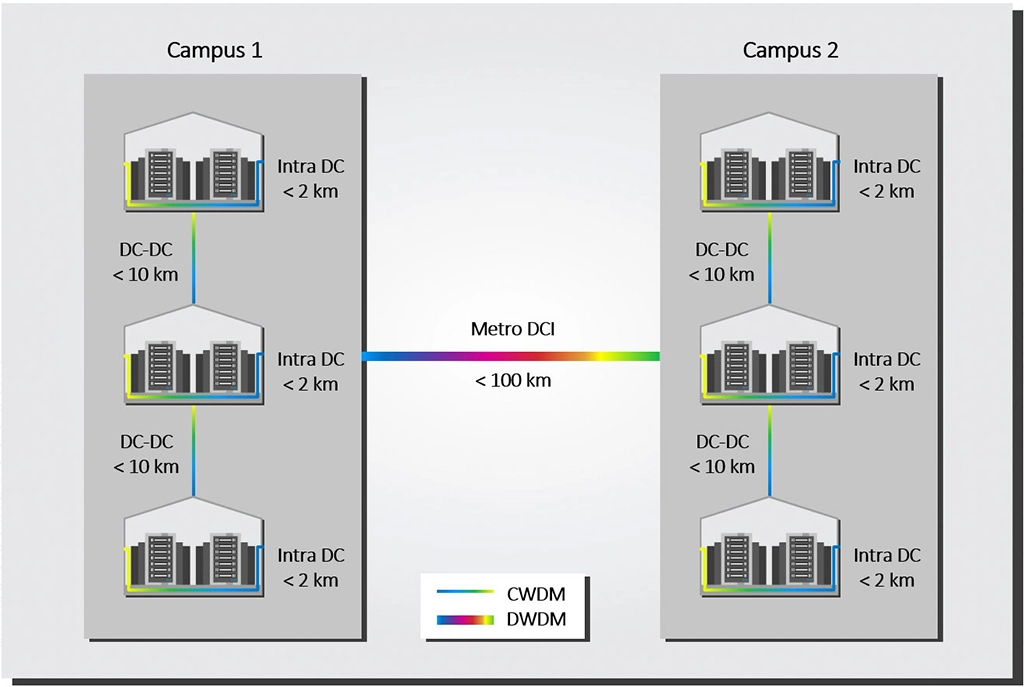

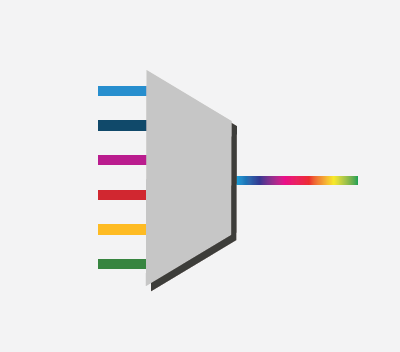

Dense Wavelength Division Multiplexing (DWDM) is an optical technology that dramatically increases the amount of data transmitted over existing fiber networks. Data from various signals are separated, encoded on different wavelengths, and put together (multiplexed) in a single optical fiber.

The wavelengths are separated again and reconverted into the original digital signals at the receiving end. In other words, DWDM allows different data streams to be sent simultaneously over a single optical fiber without requiring the expensive installation of new fiber cables. In a way, it’s like adding more lanes to the information highway without building new roads!

The expansion in data volume that DWDM affords is clear when compared with other optical methods. A standard transceiver, often called a grey transceiver, is a single-channel device: each fiber has a single laser source. With grey optics, you can transmit 10 Gbps. Coarse Wavelength Division Multiplexing (CWDM) has multiple channels, although far fewer than DWDM. For example, a 4-channel CWDM system at 10 Gbps per channel can transmit 40 Gbps. DWDM can accommodate up to 100 channels. At 10 Gbps per channel, that capacity amounts to 1 Tbps, or one trillion bps: 100 times more data than grey optics and 25 times more than 4-channel CWDM.
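The comparison above is simple multiplication, and a minimal sketch makes the underlying assumption explicit: every channel is taken to carry 10 Gbps, which is what the grey, CWDM, and DWDM figures in the text imply. The variable names are illustrative.

```python
PER_CHANNEL_GBPS = 10  # assumption: each wavelength carries 10 Gbps

# Number of wavelengths per fiber for each approach, as given in the text
channels = {
    "grey": 1,
    "CWDM (4-channel)": 4,
    "DWDM (100-channel)": 100,
}

capacity_gbps = {name: n * PER_CHANNEL_GBPS for name, n in channels.items()}

for name, gbps in capacity_gbps.items():
    ratio = gbps / capacity_gbps["grey"]
    print(f"{name}: {gbps} Gbps ({ratio:.0f}x grey optics)")
```

With higher per-channel rates, the per-fiber totals scale accordingly, while the ratios between the three approaches stay the same as long as all channels run at the same rate.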

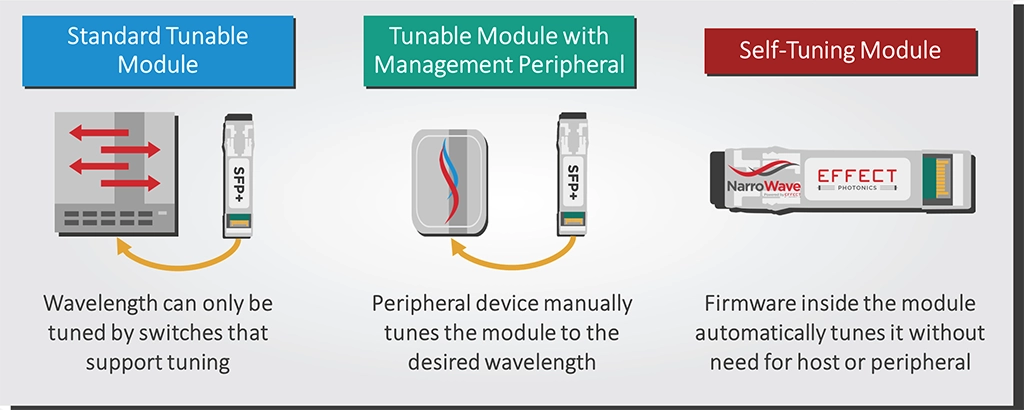

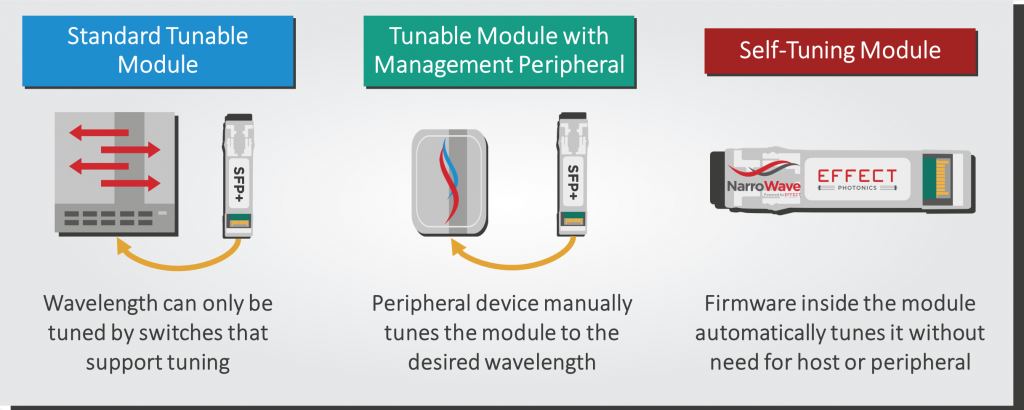

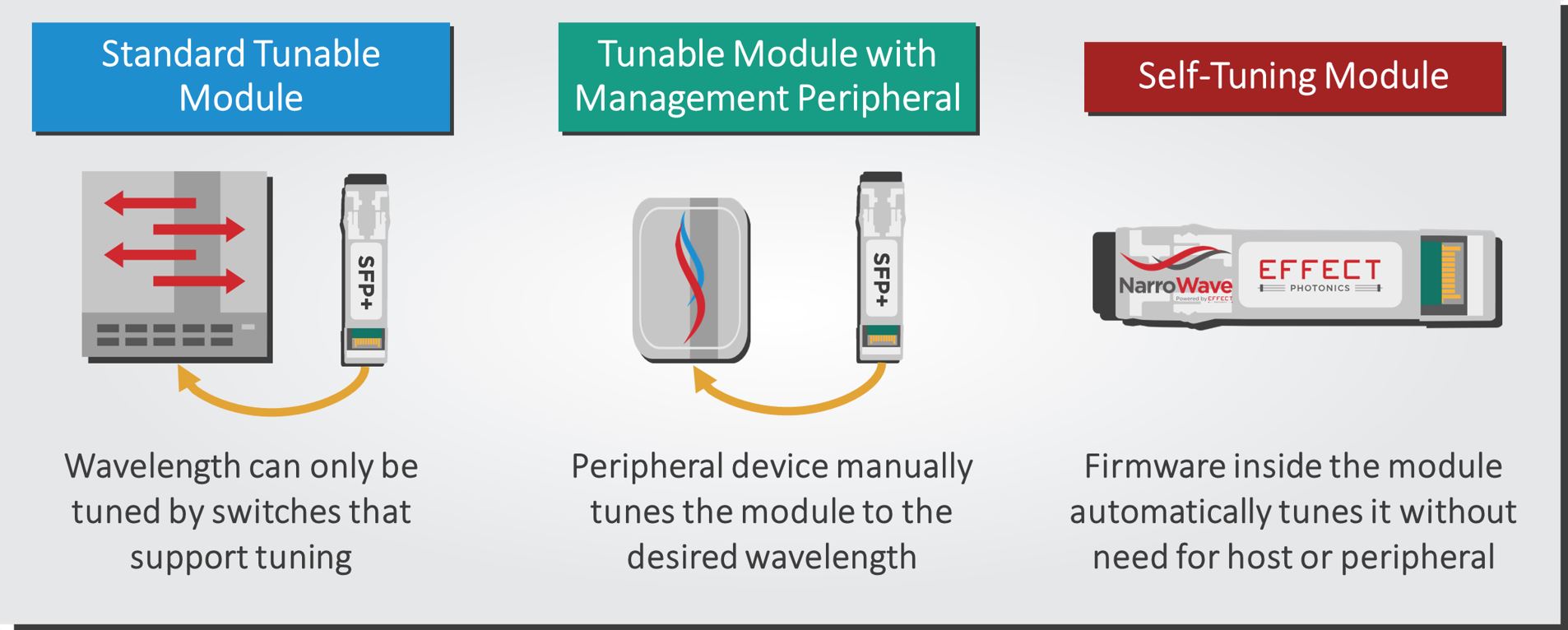

While the upgrade to DWDM requires some initial investment in new tunable transceivers, the technology ultimately reduces the cost per bit transmitted in the network. Demand in access networks will continue to grow as we move toward IoT and 5G, and DWDM will be vital to scaling cost-effectively. Self-tuning modules have also helped further reduce the expenses associated with tunable transceivers.

Takeaways

Coherent systems minimize the need for frequent signal regeneration by compensating for signal dispersion and extending signal reach. This simplifies network architecture and contributes to a lower cost per bit. Additionally, by employing complex modulation techniques, coherent technology maximizes the data capacity per wavelength, potentially replacing multiple IM-DD systems with a single coherent channel. This can further streamline network operations and reduce expenses.

The synergy of coherent technology with Dense Wavelength Division Multiplexing (DWDM) can multiply the data throughput of existing fiber infrastructures without requiring new infrastructure installations. Overall, while coherent technology involves a higher upfront investment compared to IM-DD systems, it can lower the cost per bit by enhancing the efficiency, reach, and capacity of data transmission.

Tags: capacity, channels, Coherent technology, cost per bit, data, dispersion, DWDM, EFFECT Photonics, efficiency, fiber, IM-DD, infrastructure, modulation, multiplexing, network, operations, reach, signal, systems, technology, transmission

What Goes Into Power Per Bit

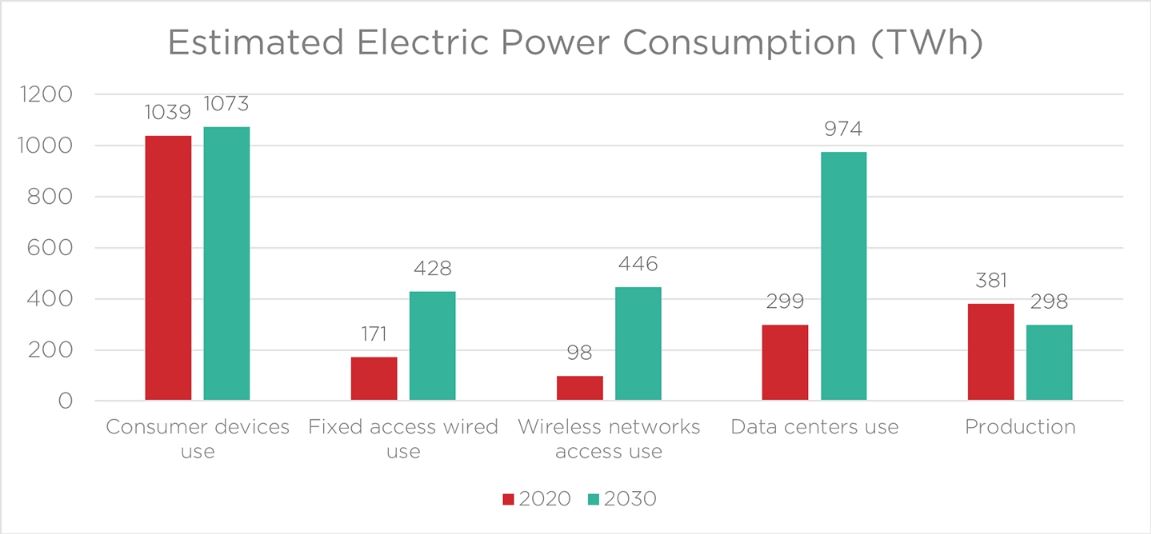

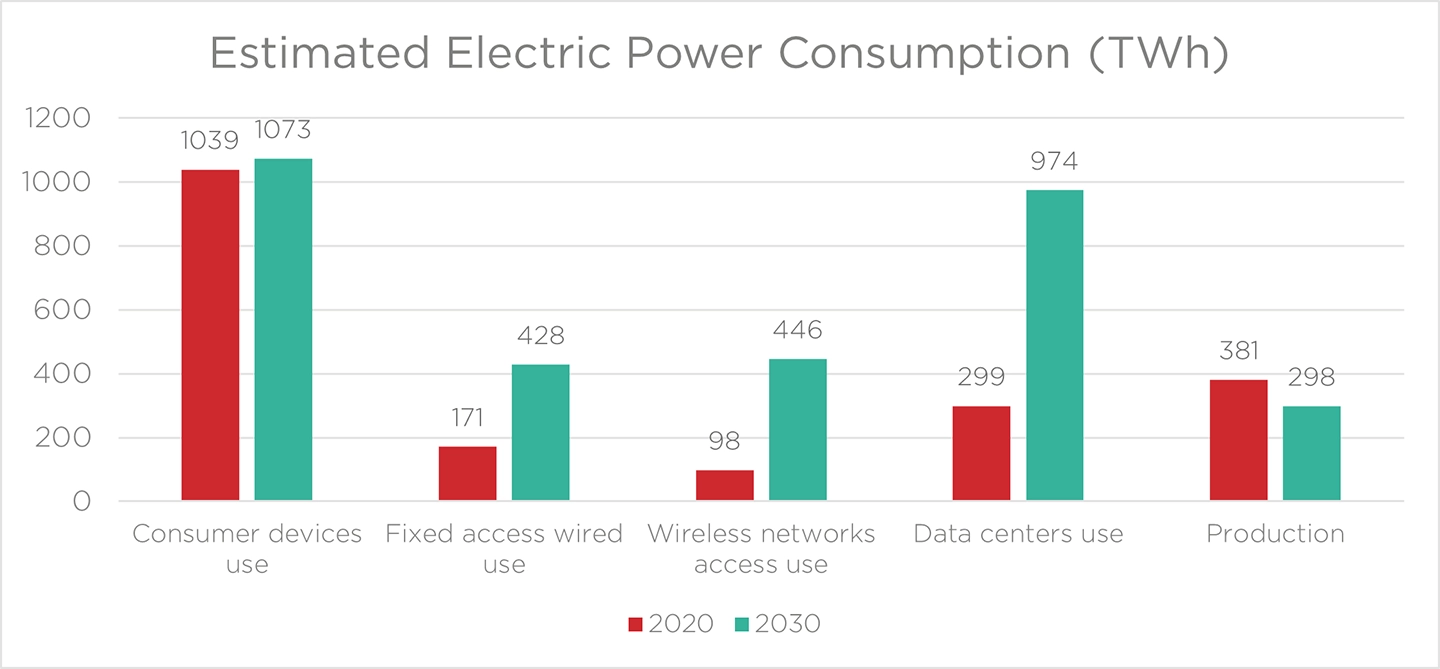

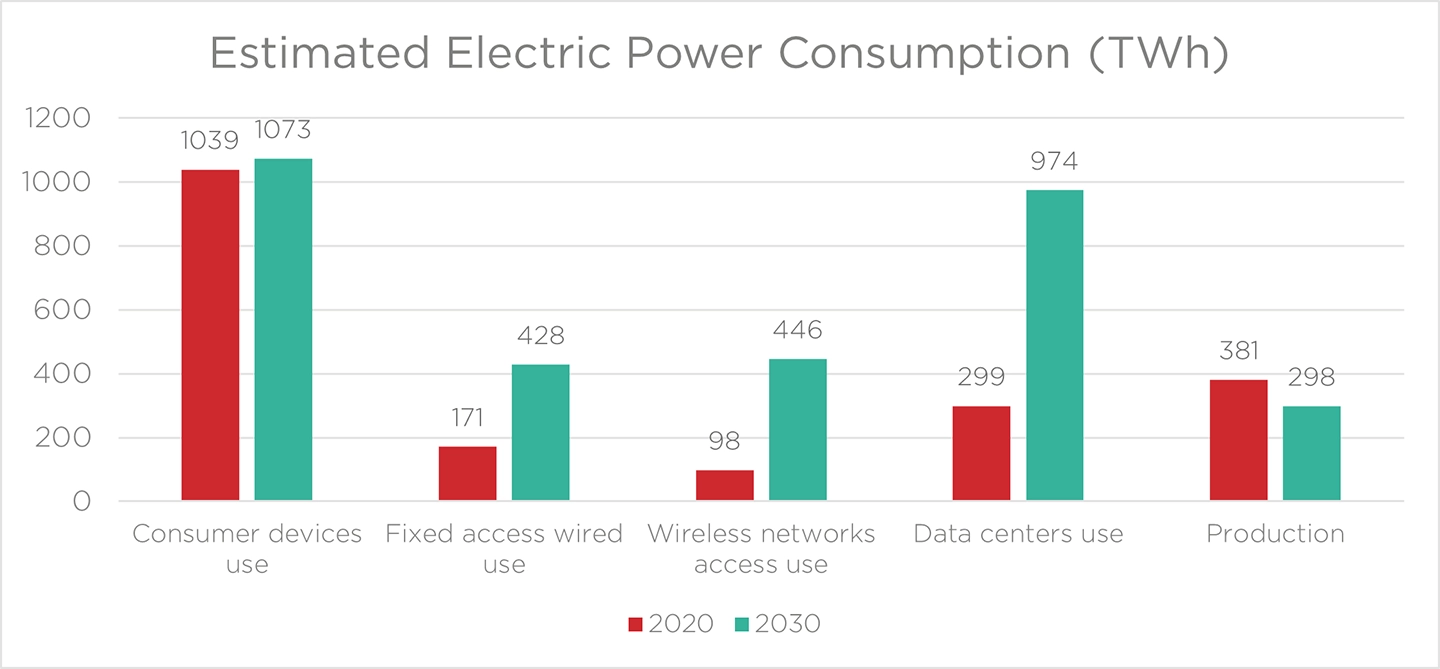

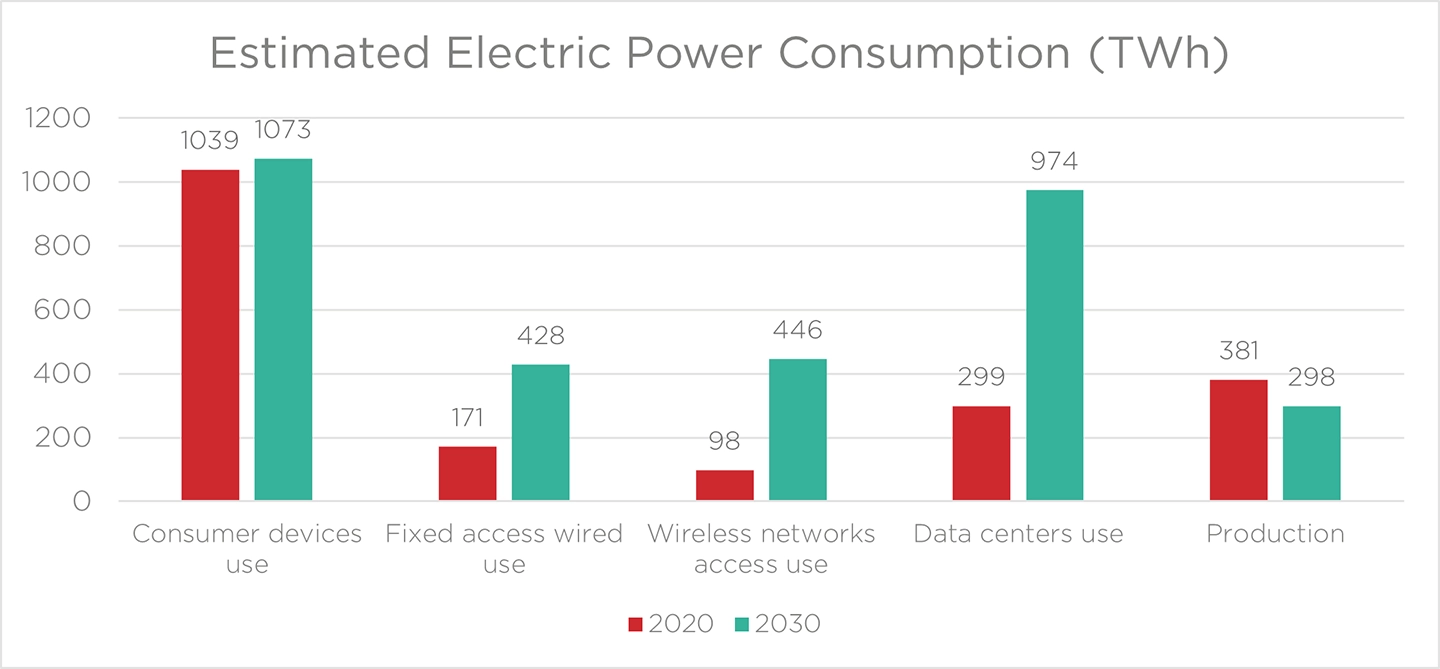

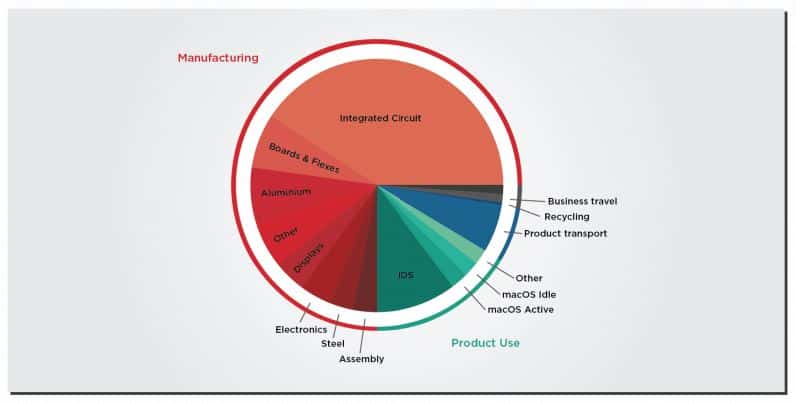

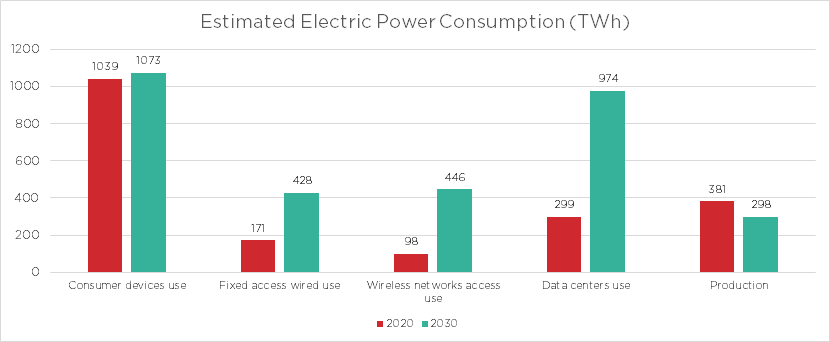

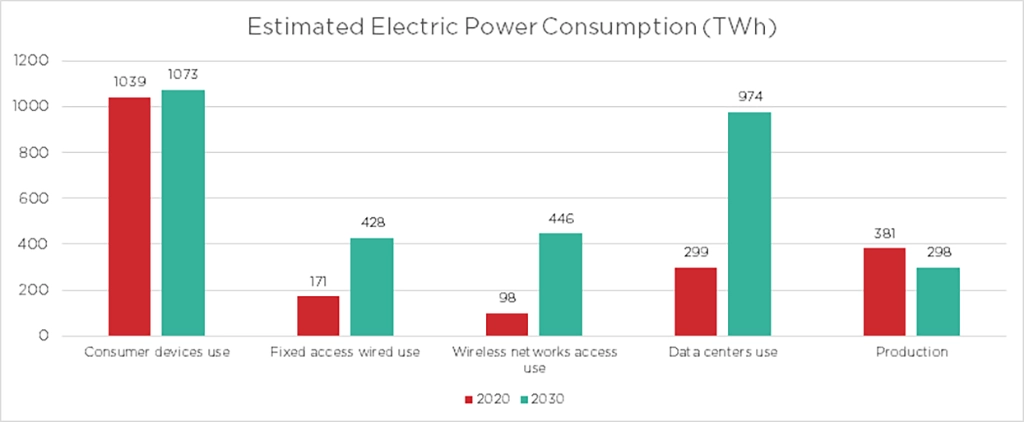

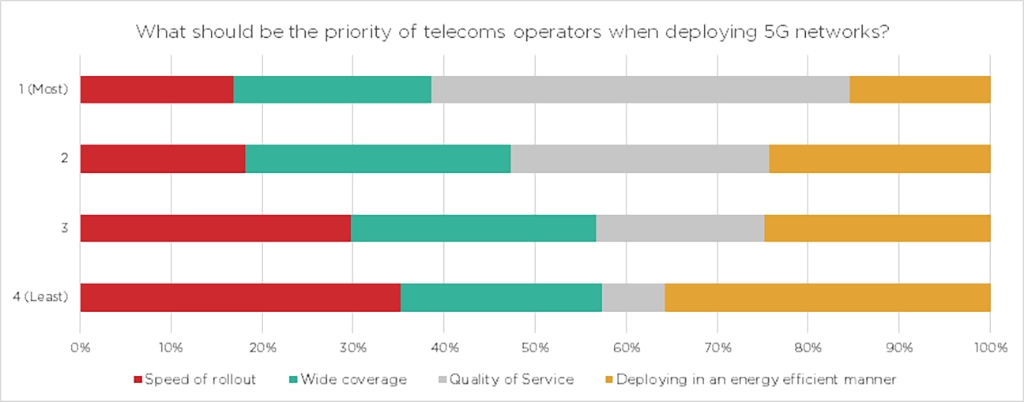

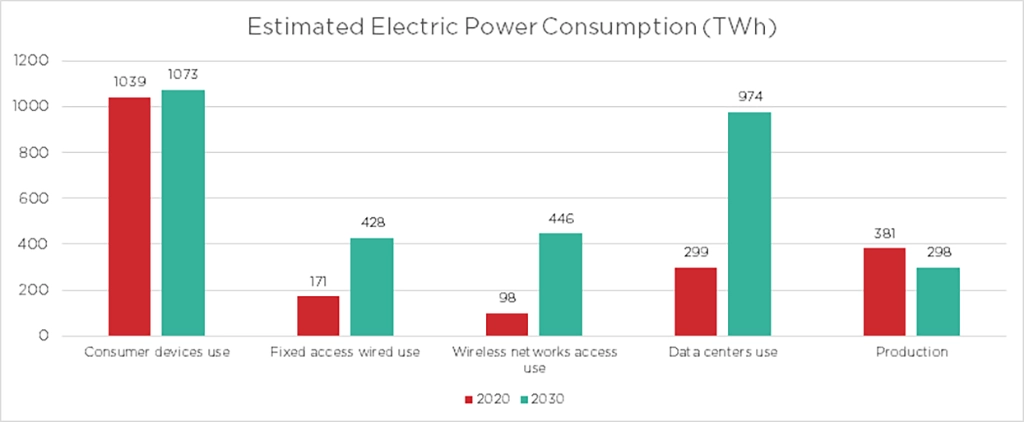

In the information and communication technology (ICT) sector, the exponential increase in data traffic makes it difficult to keep emissions down and contribute to the energy transition. A 2020 study by Huawei estimates that the power consumption of the data center sector will increase threefold in the next ten years. Meanwhile, wireless access networks are expected to increase their power consumption even faster, more than quadrupling between 2020 and 2030.

These issues affect both the environment and the bottom lines of communications companies, which must commit increasingly larger percentages of their operating expenditure to cooling solutions.

As we explained in our previous articles, photonics and transceiver integration will play a key role in addressing these issues and making the ICT sector greener. EFFECT Photonics also believes that the transition of optical access networks to coherent 100G technology can help reduce power consumption.

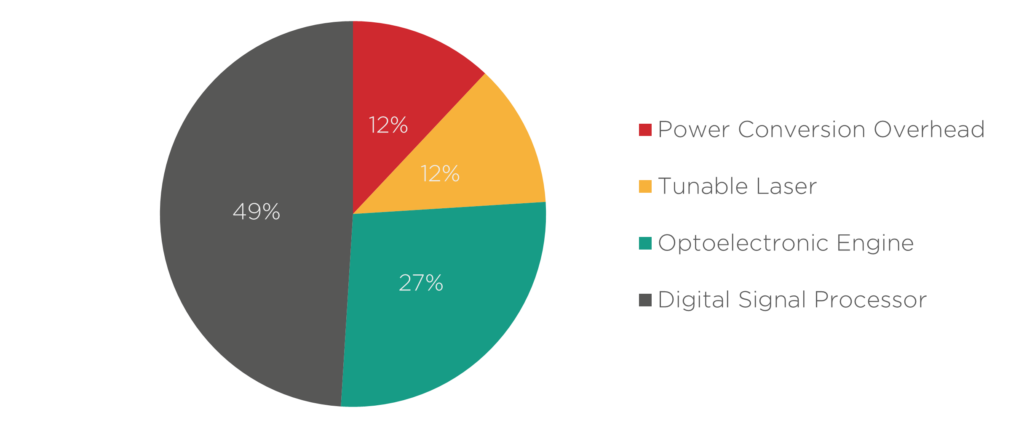

This insight might sound counterintuitive at first, since a coherent transceiver will normally consume more than twice the power of a direct detect one due to its digital signal processor (DSP). However, by replacing the aggregation of multiple direct detect links with a single coherent link, and by skipping the upgrade to 56Gbps and going directly to 100Gbps, optical networks can save energy, materials, and operational expenditures such as truck rolls.

The Impact of Streamlining Link Aggregation

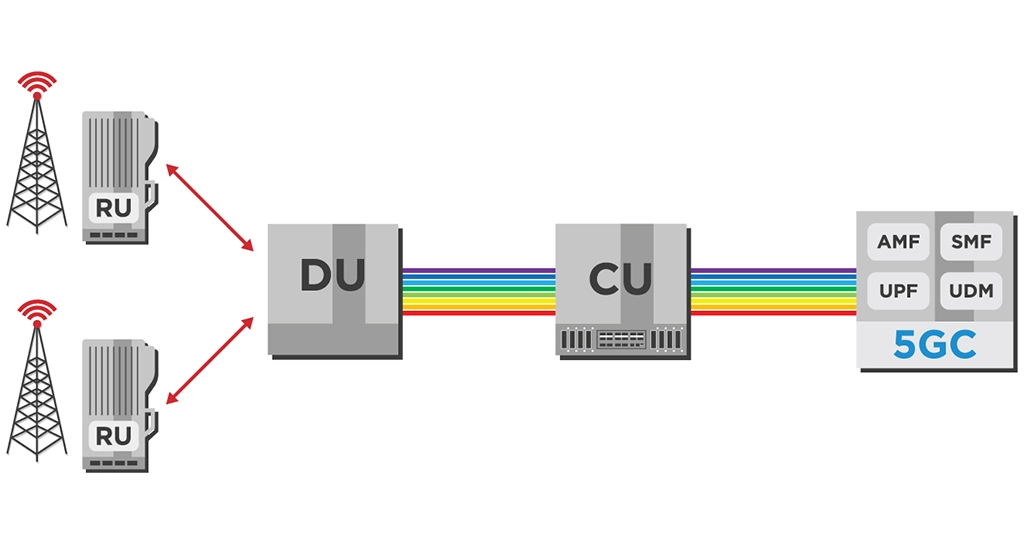

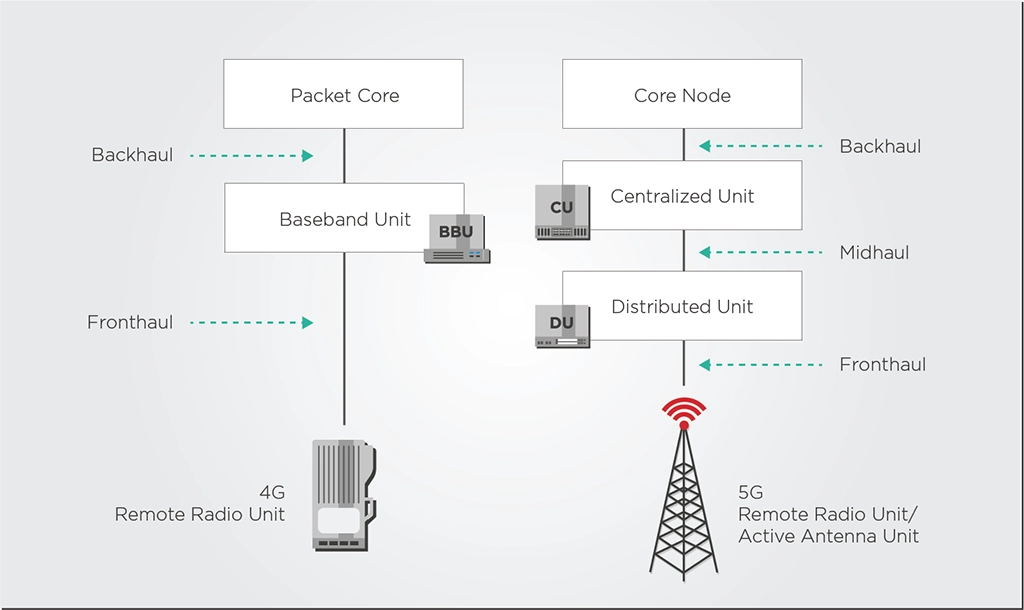

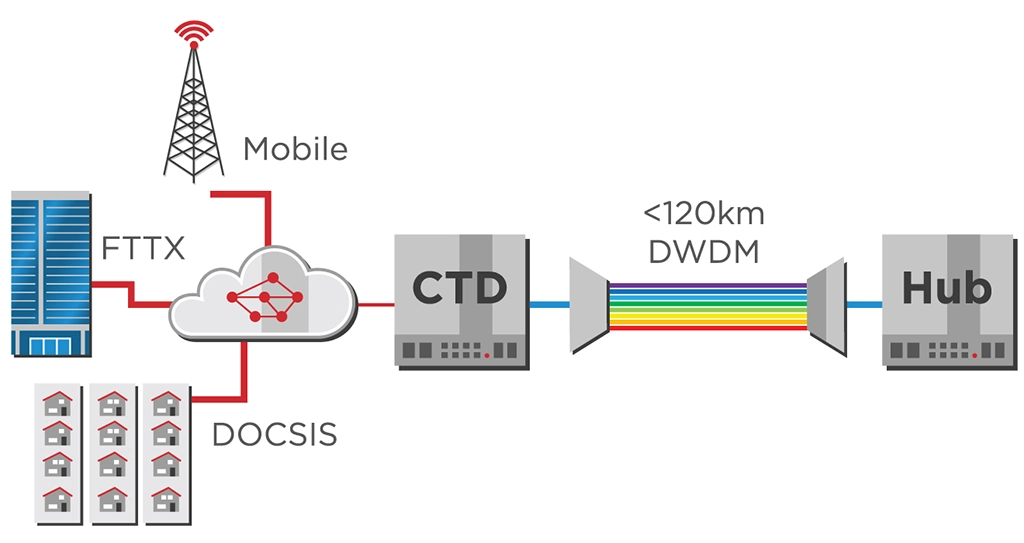

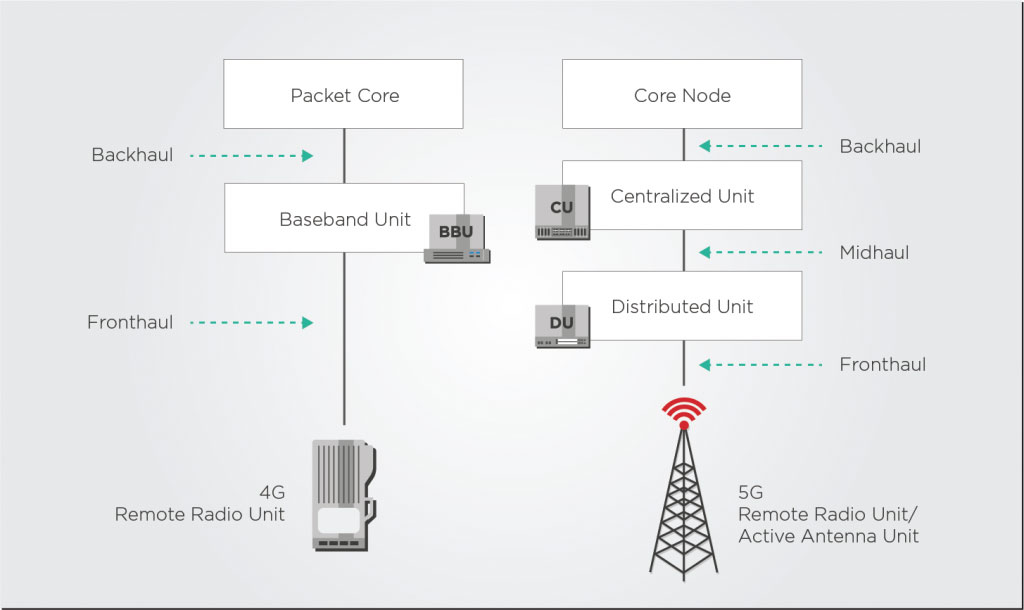

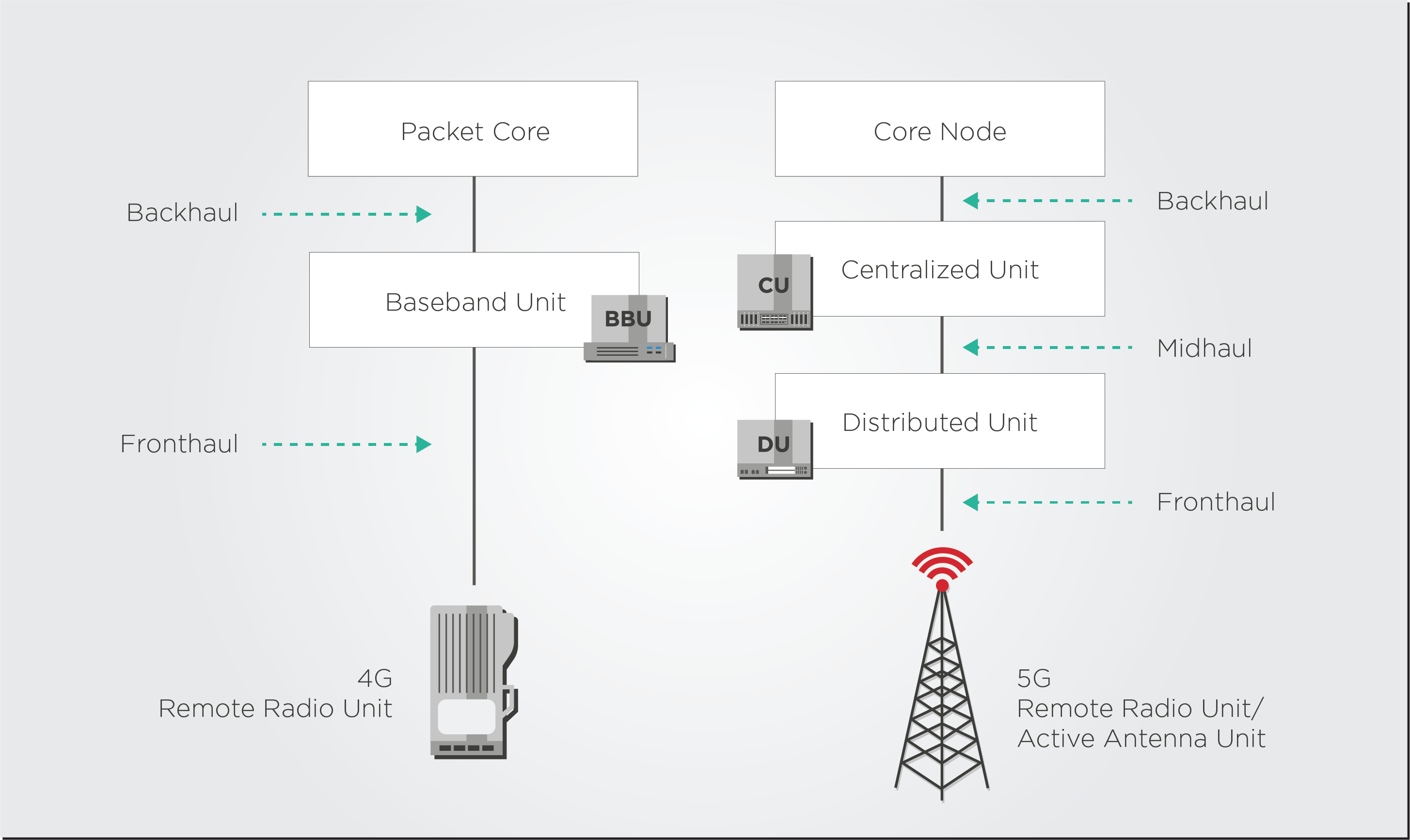

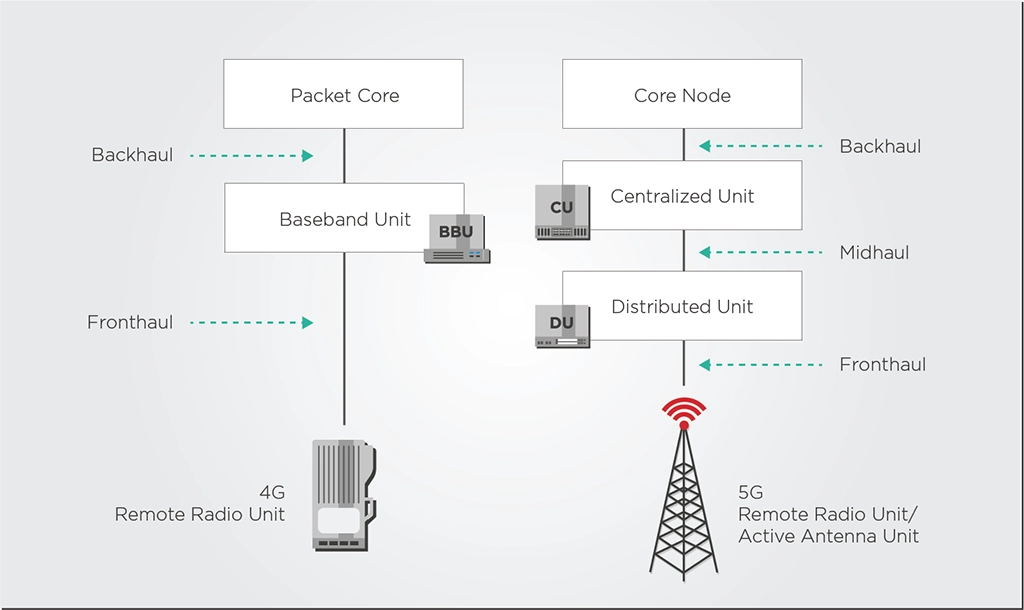

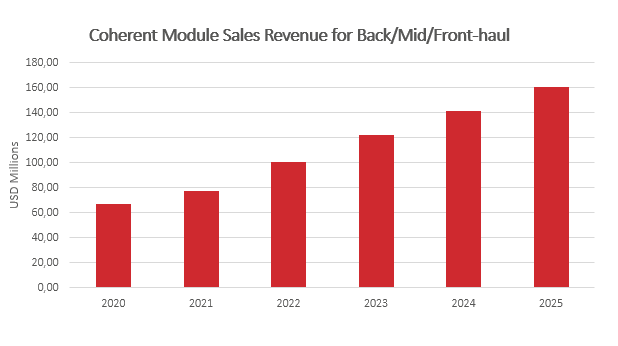

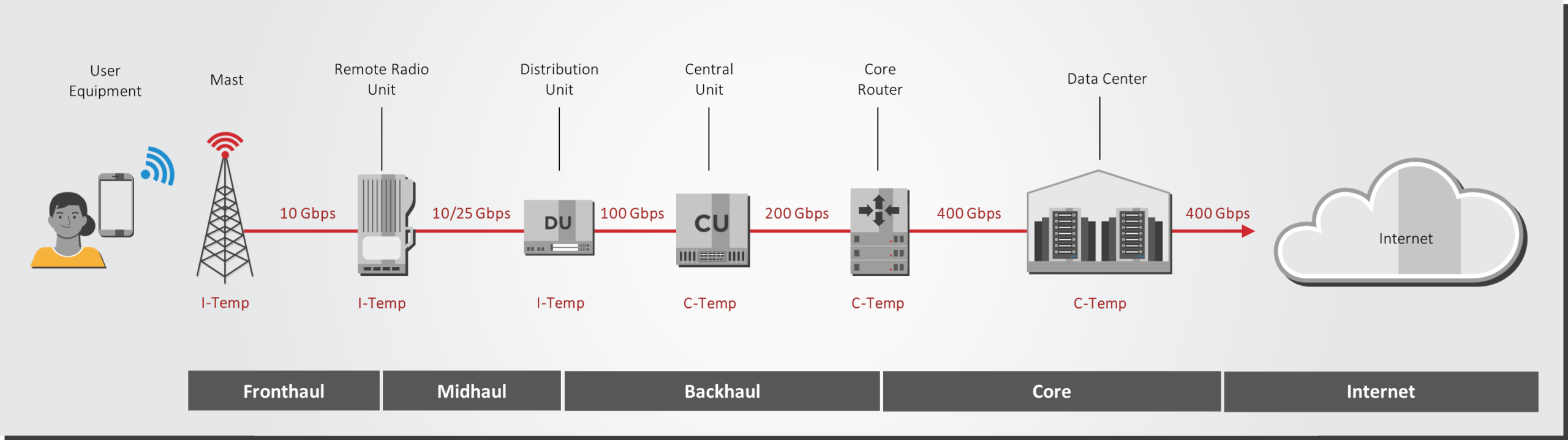

The advanced stages of 5G deployment will require operators to cost-effectively scale fiber capacity in their fronthaul networks using more 10G DWDM SFP+ solutions and 25G SFP28 transceivers. This upgrade will pressure the aggregation segments of mobile backhaul and midhaul, which typically rely on link aggregation of multiple 10G DWDM links into a higher bandwidth group (e.g., 4x10G).

On the side of cable optical networks, the long-awaited migration to 10G Passive Optical Networks (10G PON) is happening and will also require the aggregation of multiple 10G links in optical line terminals (OLTs) and Converged Cable Access Platforms (CCAPs).

This type of link aggregation involves splitting larger traffic streams and can be intricate to integrate within an access ring. Furthermore, it carries an environmental impact.

A single 100G coherent pluggable consumes a maximum of six watts of power, which is significantly more than the two watts of a 10G SFP+ pluggable. However, aggregating four 10G links would require a total of eight SFP+ pluggables (one at each end of each of the four links) for a total maximum power consumption of 16 watts. Replacing this link aggregation with a single 100G coherent link would swap the eight SFP+ transceivers for just two coherent transceivers with a total power consumption of 12 watts. And on top of that reduced total power consumption, a single 100G coherent link more than doubles the capacity of aggregating those four 10G links.
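The arithmetic behind this comparison can be sketched as follows, using only the maximum power figures quoted above. The `Link` class and its field names are hypothetical, and the calculation counts transceiver power only, not the power drawn by host equipment.

```python
from dataclasses import dataclass

@dataclass
class Link:
    name: str
    transceivers: int      # pluggables across both ends of the link(s)
    max_watts_each: float  # maximum power per pluggable, from the text
    capacity_gbps: float   # aggregate capacity in one direction

    @property
    def total_watts(self) -> float:
        return self.transceivers * self.max_watts_each

    @property
    def watts_per_gbps(self) -> float:
        return self.total_watts / self.capacity_gbps

lag = Link("4x10G link aggregation", transceivers=8, max_watts_each=2.0, capacity_gbps=40.0)
coherent = Link("1x100G coherent", transceivers=2, max_watts_each=6.0, capacity_gbps=100.0)

for link in (lag, coherent):
    print(f"{link.name}: {link.total_watts:.0f} W total, "
          f"{link.watts_per_gbps:.2f} W per Gbps")
```

Under these figures, the coherent link draws less total power (12 W versus 16 W) and, because it carries 2.5 times the capacity, its power per Gbps is less than a third of the aggregated links' (0.12 versus 0.40 W per Gbps).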

Adopting a single 100G uplink also diminishes the need for such link aggregation, simplifying network configuration and operations. To gain further insight into the potential market and reach of this link aggregation upgrade, it is recommended to consult the recent Cignal AI report on 100ZR technologies.

The Environmental Advantage of Leaping to 100G

While conventional wisdom may suggest a step-by-step progression from 28G midhaul and backhaul network links to 56G and then to 100G, it’s important to remember that each round of network upgrade carries an environmental impact.

Let’s look at an example. As per the European 5G observatory, a country like the Netherlands has deployed 12,858 5G base stations. There are several thousand mid- and backhaul links connecting groups of these base stations to the 5G core networks. Every time these networks require an upgrade to accommodate increasing capacity, tens of thousands of pluggable transceivers must be replaced nationwide. This upgrade entails a substantial capital investment as well as resources and materials.

A leap from 28G mid- and backhaul links directly to coherent 100G future-proofs operators’ networks for the next ten years. It also saves the economic and environmental cost of manufacturing, buying, and installing tens of thousands of 56G plugs across mobile network deployments. It’s a strategic choice that avoids the redundancy and excess resource utilization associated with two consecutive upgrades, allowing for a more streamlined and sustainable deployment.

Streamlining Operations with 100G ZR

Beyond the environmental considerations and capital expenditure, the operational issues and expenses of new upgrades cannot be overlooked. Each successive generation of upgrades necessitates many truck rolls and other operational expenditures, which can be both costly and resource-intensive.

Each truck roll involves a number of costs:

- Staff time (labor cost)

- Staff safety (especially in poor weather conditions)

- Staff opportunity cost (what complicated work could have been done instead of driving?)

- Fuel consumption (gasoline/petrol)

- Truck wear and tear

By directly upgrading from 25G to 100G, telecom operators can bypass an entire cycle of logistical and operational complexities, resulting in substantial savings in both time and resources.

This streamlined approach not only accelerates the transition toward higher speeds but also frees up resources that can be redirected toward other critical aspects of network optimization and sustainability initiatives.

Conclusion

In the midst of the energy transition, the ICT sector must also contribute toward a more sustainable and environmentally responsible future. While it might initially seem counterintuitive, upgrading to 100G coherent pluggables can help streamline optical access network architectures, reducing the number of pluggables required and their associated power consumption. Furthermore, upgrading these access network mid- and backhaul links directly to 100G leads to future-proofed networks that will not require financially and environmentally costly upgrades for the next decade.

As the ecosystem for QSFP28 100ZR solutions expands, production will scale up, making these solutions more widely accessible and affordable. This, in turn, will unlock new use cases within access networks.

Tags: 100G coherent transceivers, 100ZR technology, 10G DWDM SFP+ solutions, 5G network power savings, coherent 100G technology, data center power usage, digital signal processor power, EFFECT Photonics, energy transition in ICT, energy-efficient technology, environmental impact of ICT, green ICT solutions, ICT power consumption, link aggregation optimization, Optical Access Networks, photonics in ICT, power per bit efficiency, reducing ICT emissions, sustainable technology upgrades, telecom power consumption, transceiver integration

Integrating Line Card Performance and Functions Into a Pluggable

Article first published 12th October 2021, updated 3rd July 2024.

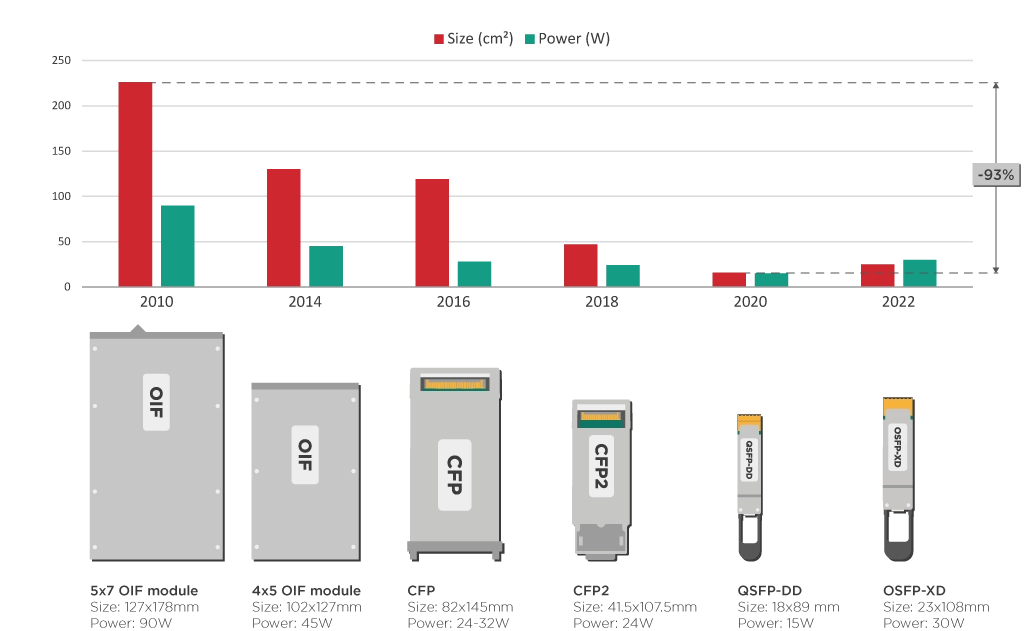

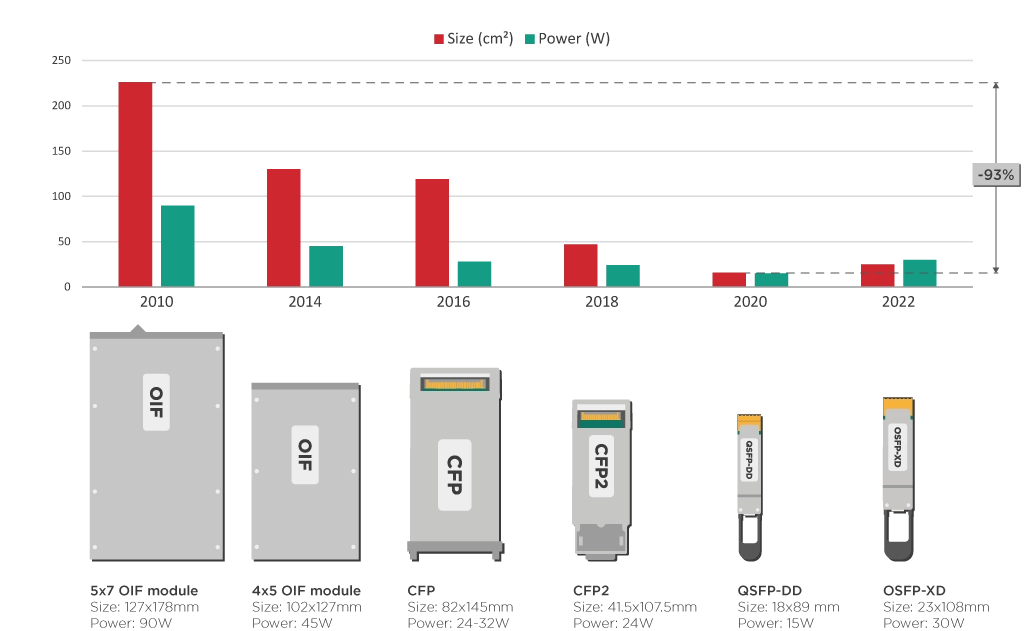

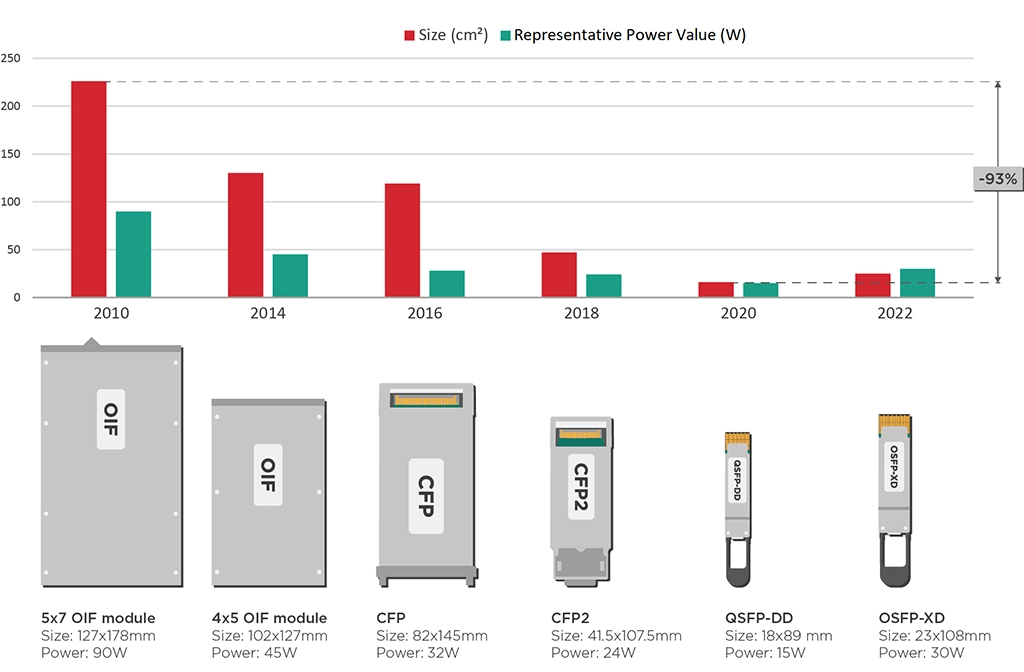

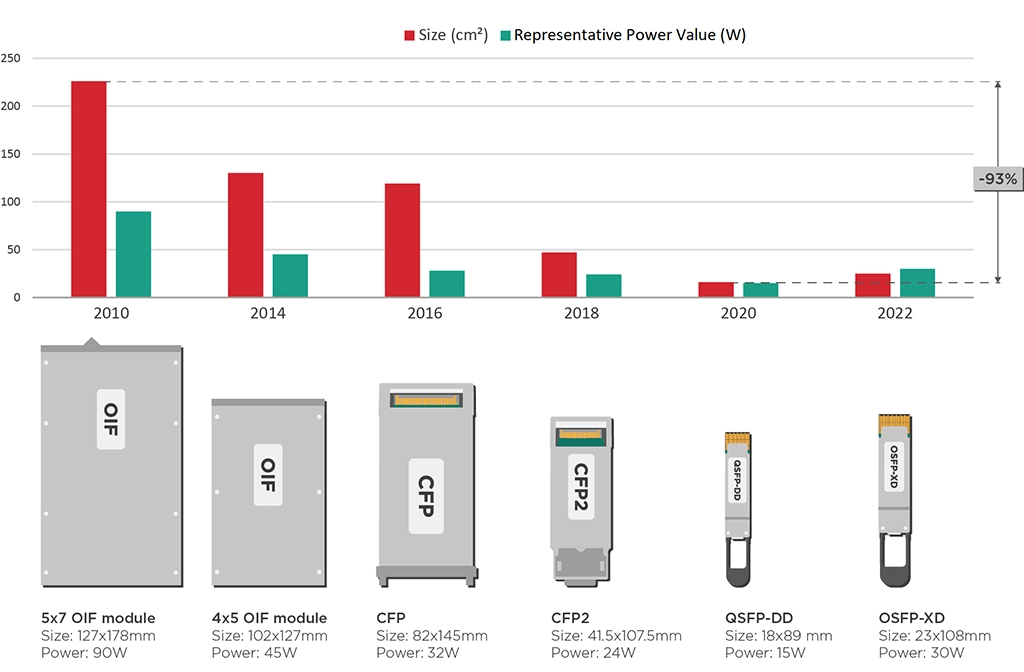

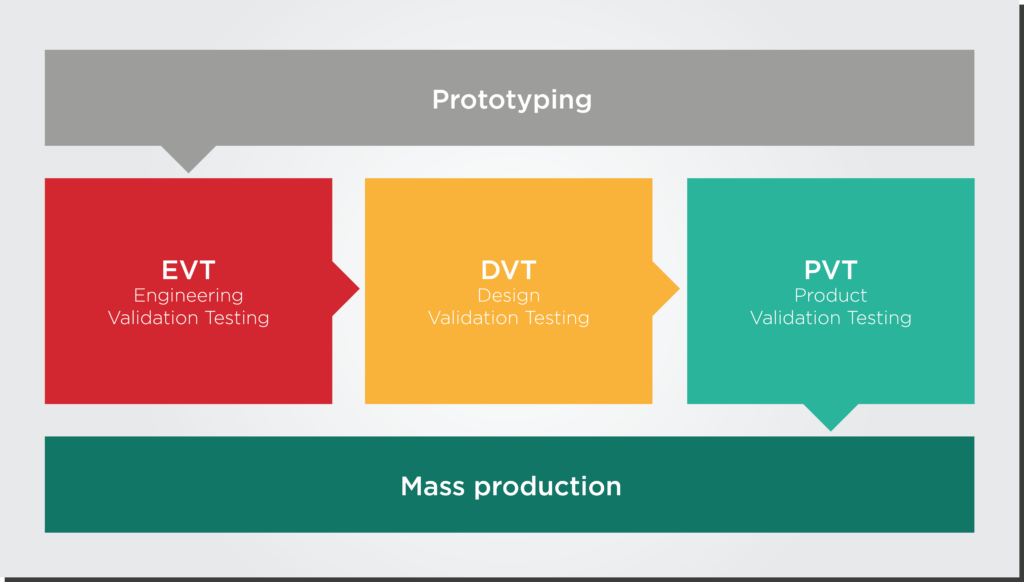

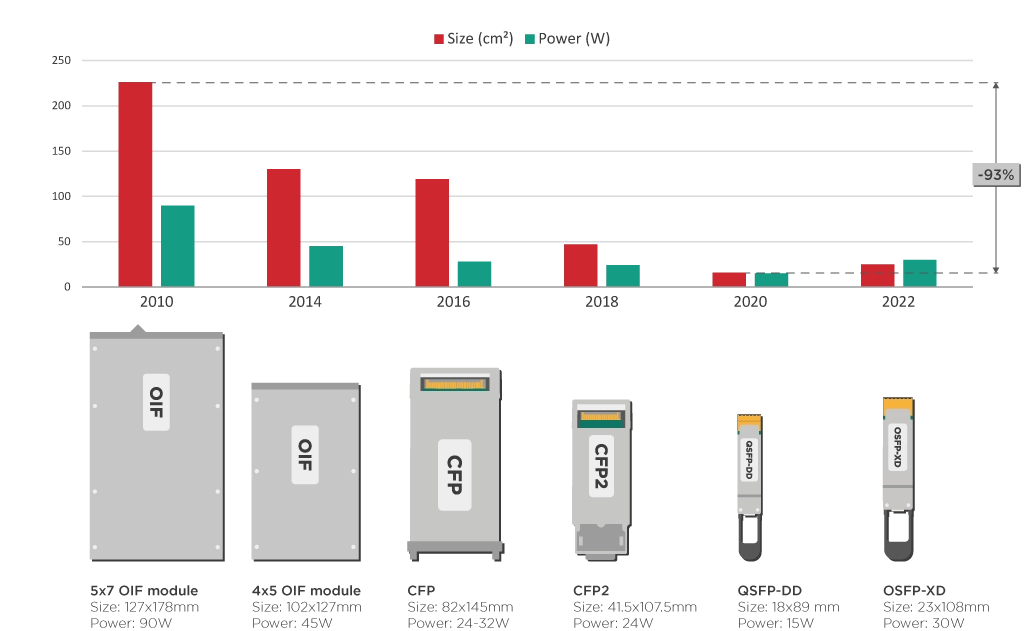

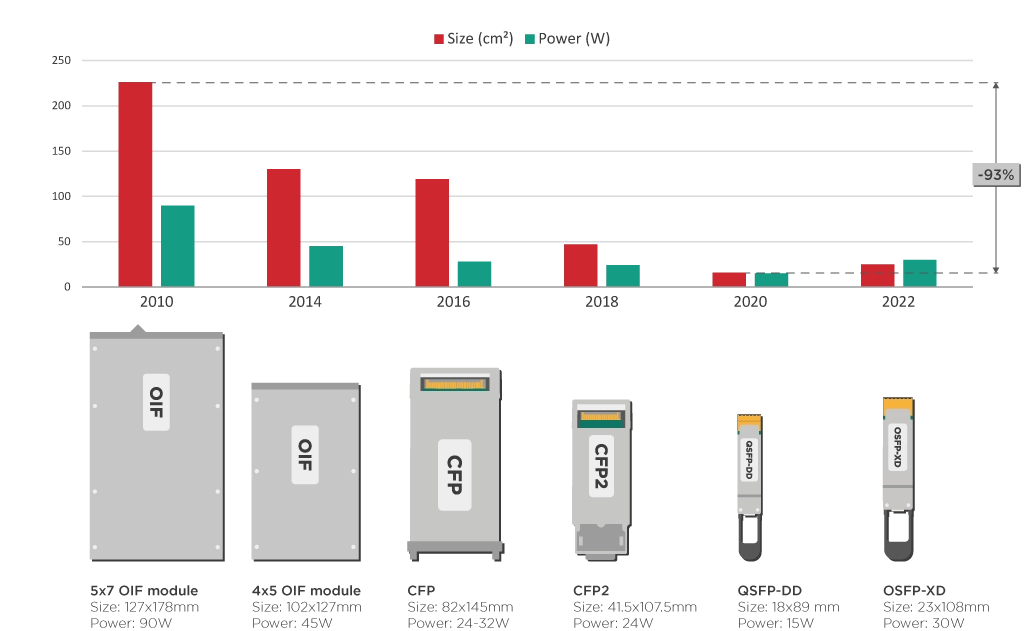

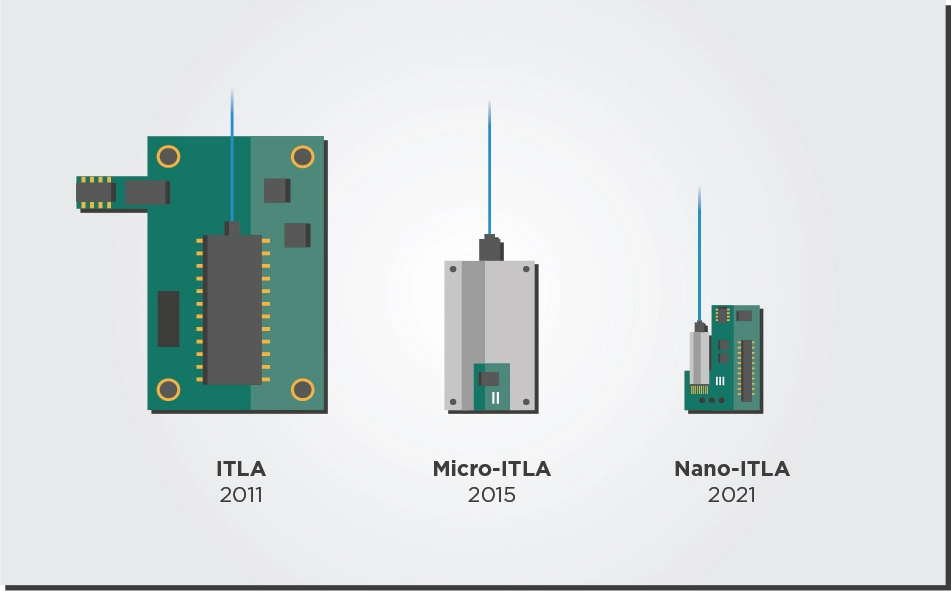

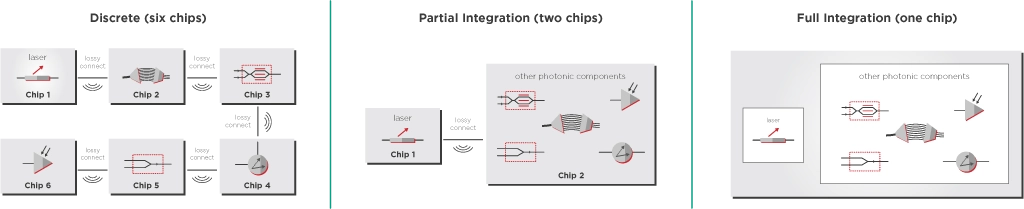

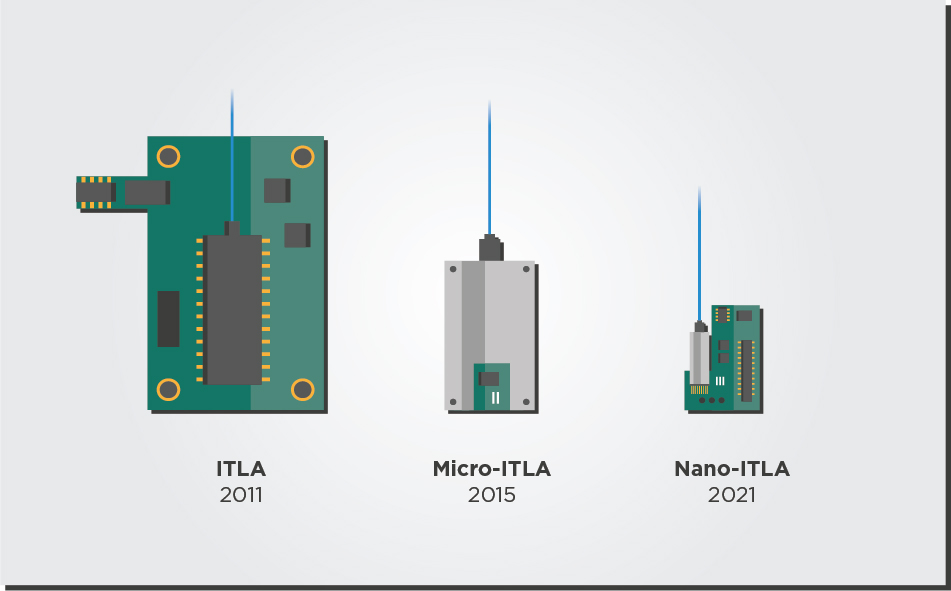

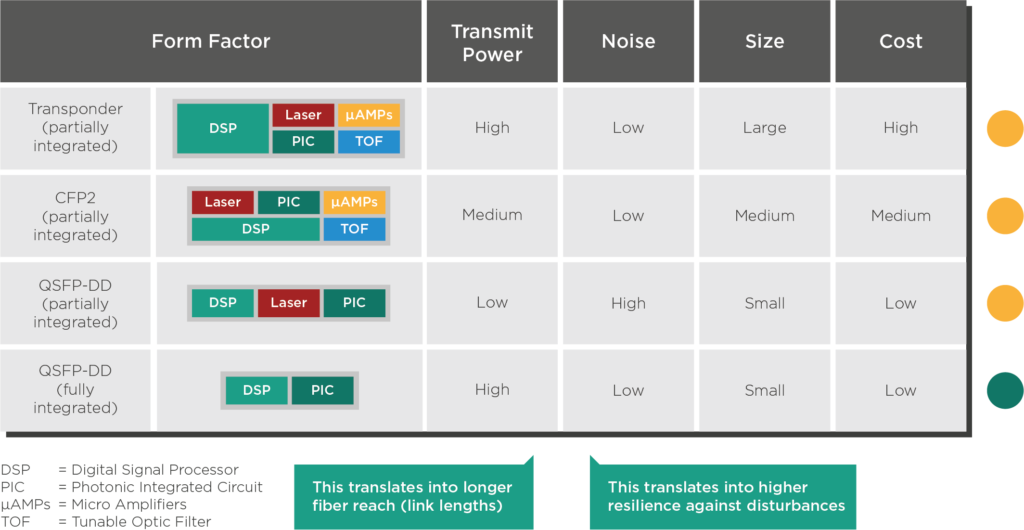

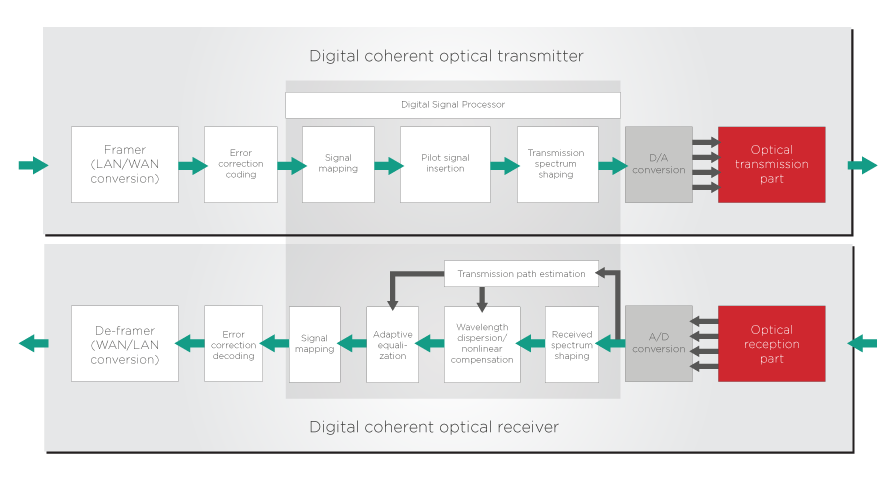

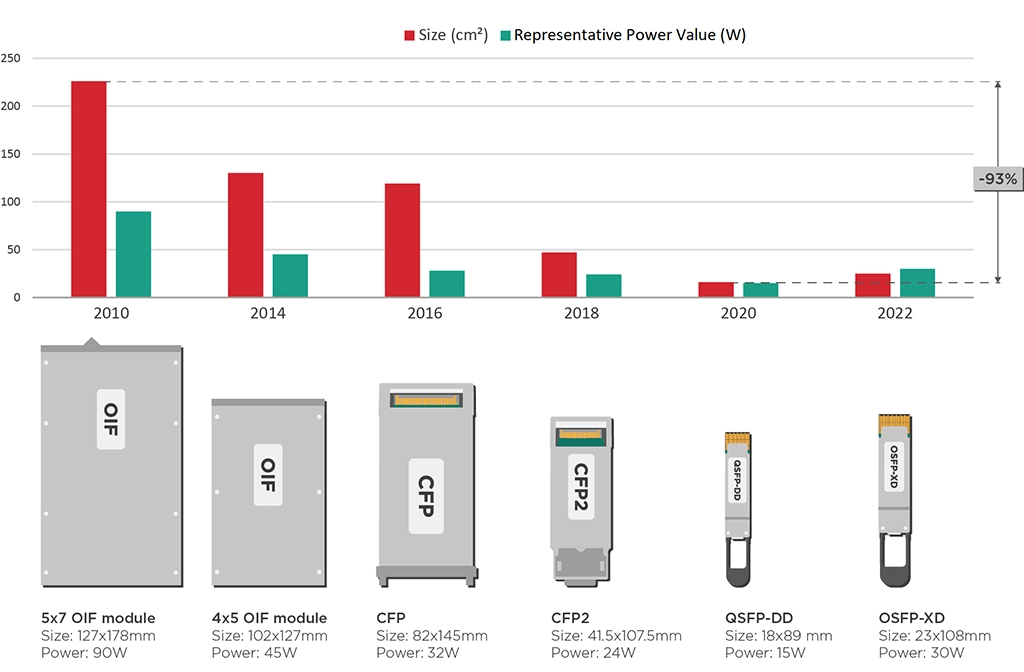

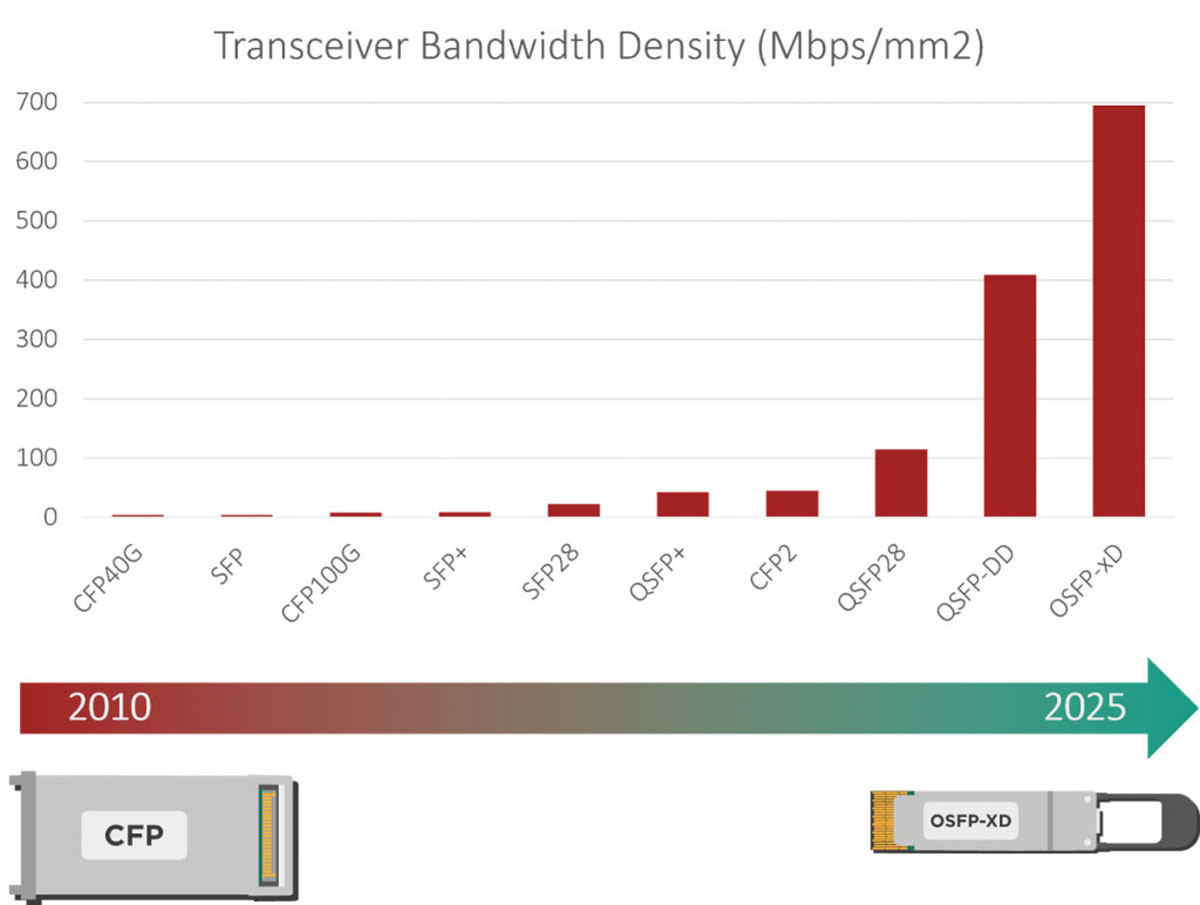

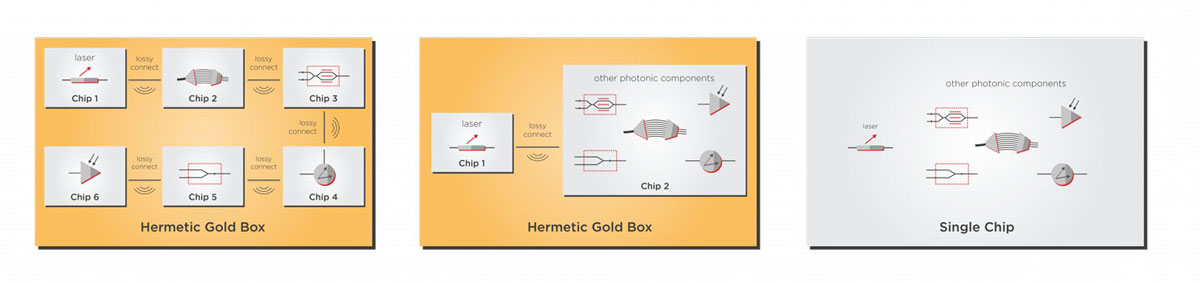

The optical transceiver market is expected to double in size by 2025, and coherent optical technology has come a long way over the past decade to become more accessible and thus have a greater impact on this market. When Nortel (later Ciena) introduced the first commercial coherent transponder in 2008, the device was a bulky, expensive line card with discrete components distributed on multiple circuit boards.

As time went by, coherent devices got smaller and consumed less power. By 2018, most coherent line card transponder functions could be miniaturized into CFP2 transceiver modules that were the size of a pack of cards and could plug into modules with pluggable line sides. QSFP modules followed a couple of years later, and they were essentially the size of a large USB stick and could be plugged directly into routers. They were a great fit for network operators who wanted to take advantage of the lower power consumption and cost, field replaceability, vendor interoperability, and pay-as-you-grow features.

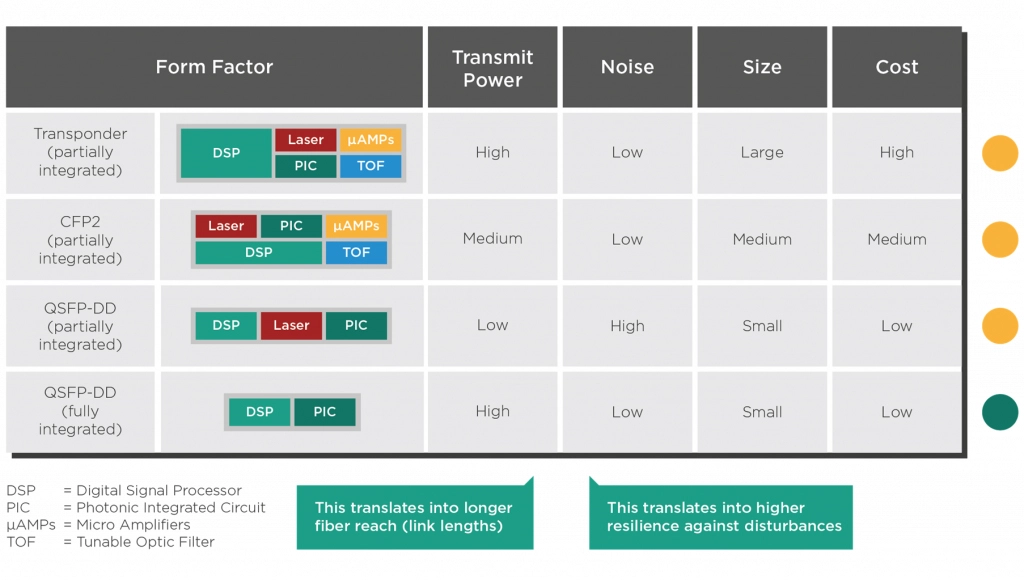

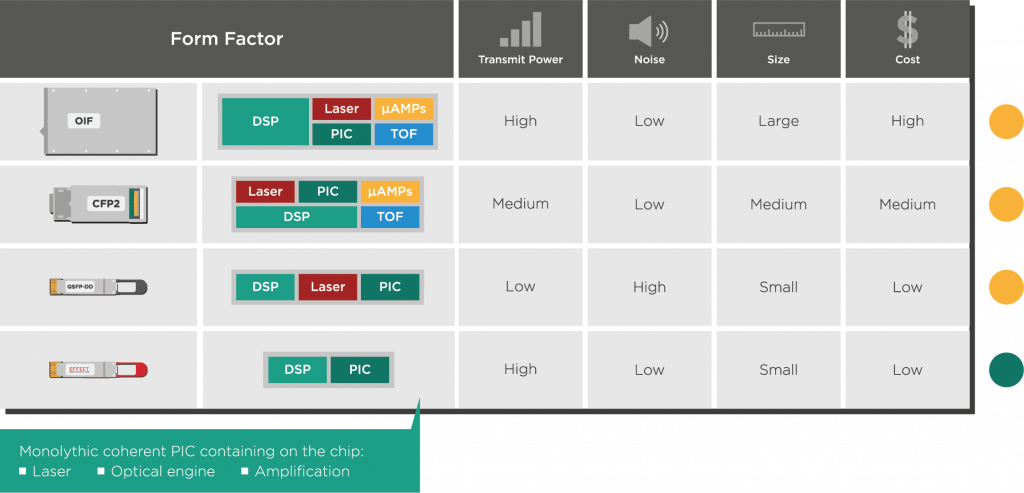

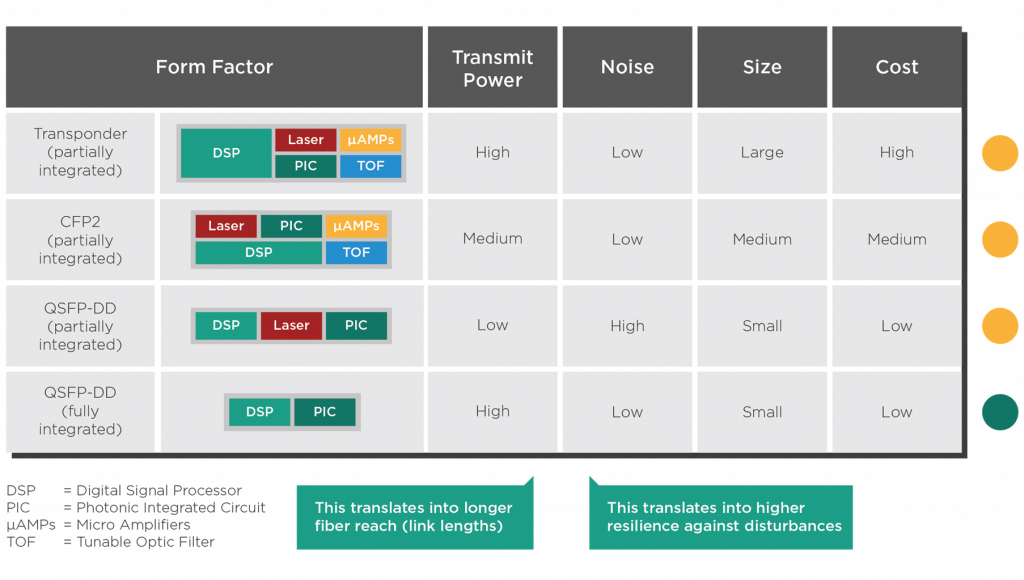

Despite the onset of pluggables, the big, proprietary line card optical engines have still played a role in the market by focusing on delivering best-in-class optical performance. The low-noise, high-power signals they produce have the longest reach for optical links and have wider compatibility with the ROADM multiplexers used in metro and long-haul networks. The smaller CFP2 modules produce, at best, roughly half the laser power of the line card modules, which limits their reach. Meanwhile, even smaller QSFP form factors cannot fit optical amplifier components, so their transmit power and reach are much more limited than even a CFP2 module.

All in all, the trade-offs were clear: go for proprietary line card transponders if you want best-in-class performance and longest reach, and go for CFP2 or QSFP transceivers if you want a smaller footprint and lower power consumption. This trade-off, however, limits the more widespread adoption of coherent technology. For example, mobile network operators need high performance, a smaller footprint, and lower power consumption all at once so that their metro and access networks can meet the rising demands for 5G data.

So what if we told you the current paradigm of line card transponders versus pluggable transceivers is outdated? Recent improvements in electronic and photonic integration have squeezed more performance and functions into smaller form factors, allowing pluggable devices to almost catch up to line cards.

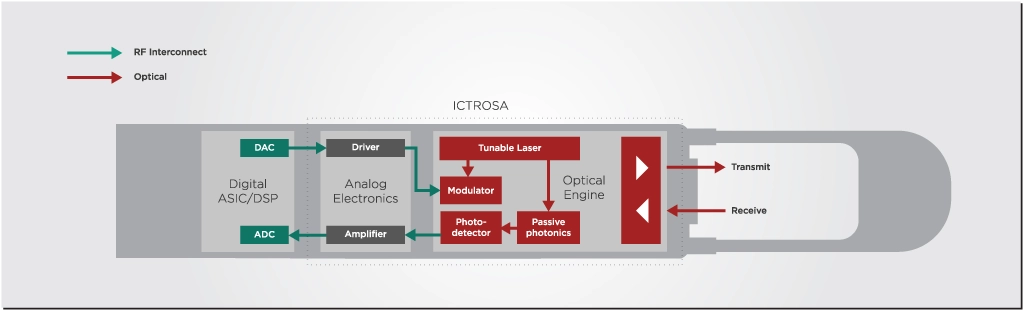

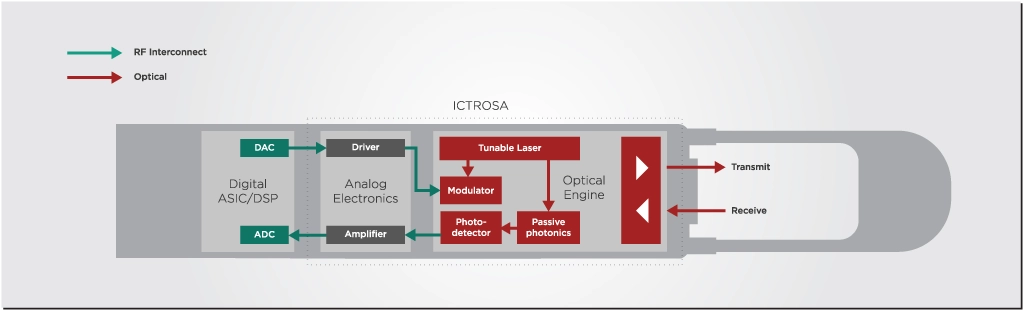

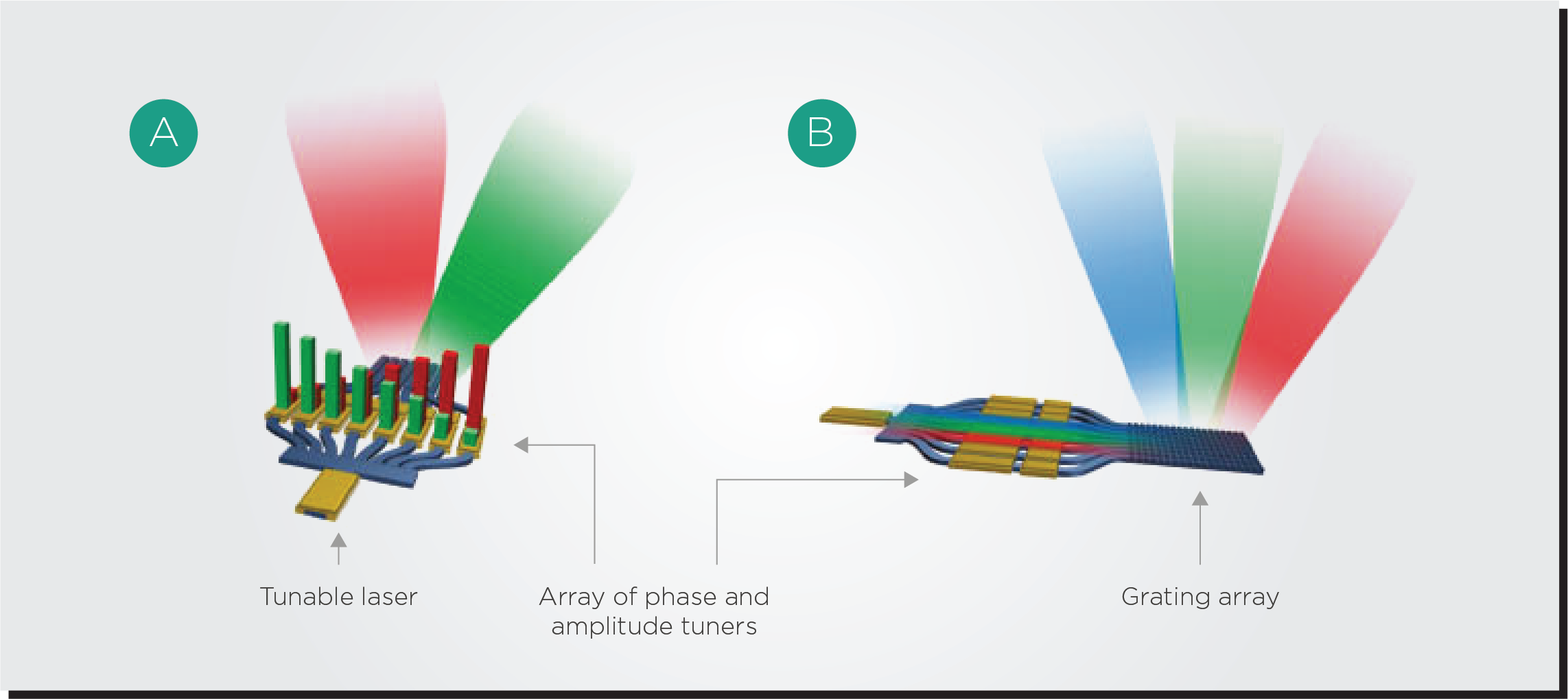

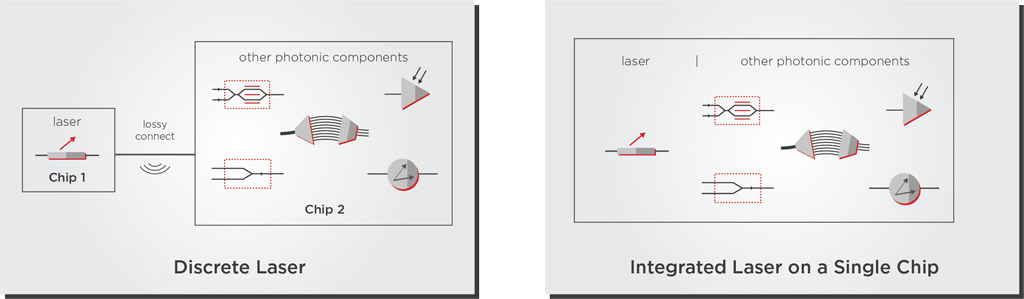

Integration Enables Line Card Performance in a Pluggable Form Factor

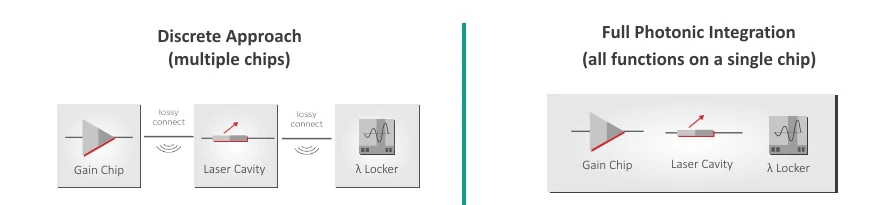

The advances in photonic integration change the game and can enable high performance and transmit power in the smallest pluggable transceiver form factors. By integrating all photonic functions on a single chip, including lasers and optical amplifiers, pluggable transceiver modules can achieve transmit power levels closer to those of line card transponder modules while still keeping the smaller QSFP router pluggable form factor, power consumption, and cost.

Full photonic integration increases the transmit power further by minimizing optical losses: it uses more efficient optical modulators, incurs fewer coupling losses than silicon, and places the laser on the same chip as the rest of the optical components.

Modern ASICs Can Fit Electronics Functions in a Pluggable Form Factor

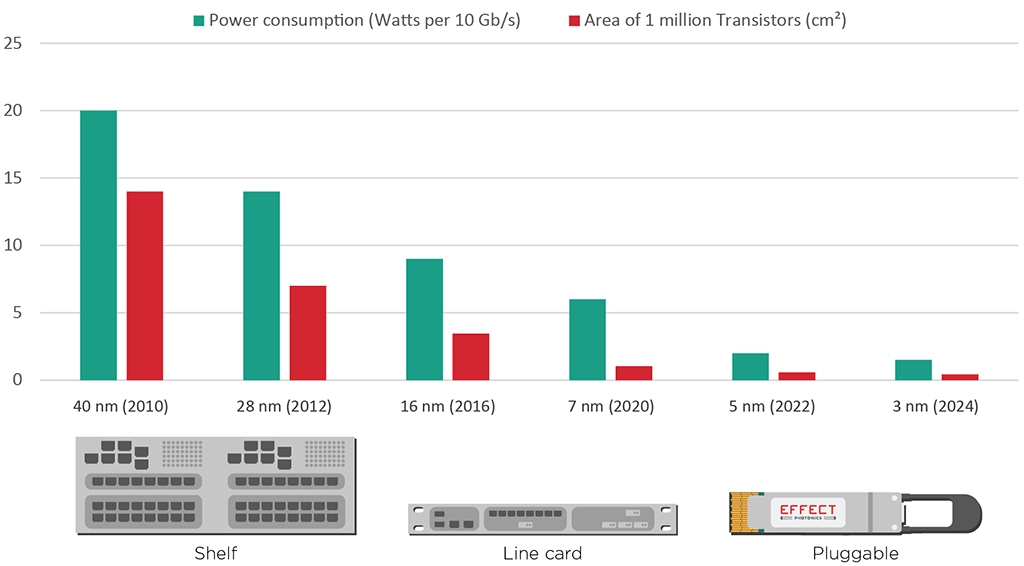

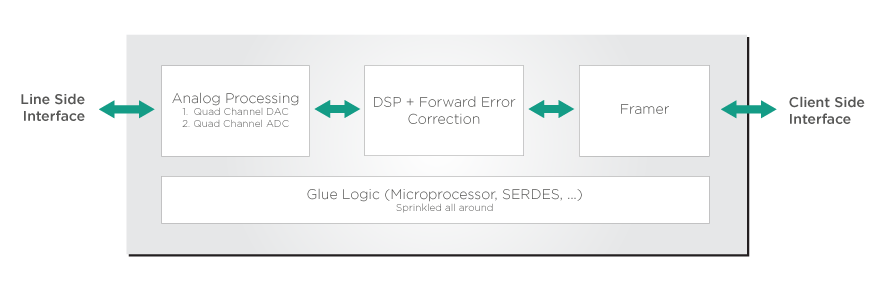

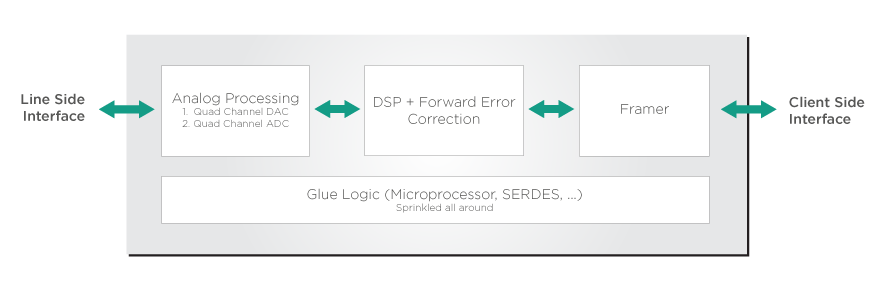

As important as optical performance is, though, pluggable transceivers also needed improvements on the electronic side. Traditionally, line card systems not only had better optical performance but also broader and more advanced electronic functionalities, such as digital signal processing (DSP), advanced forward error correction (FEC), encryption, and advanced modulation schemes. These features are usually implemented on electronic application-specific integrated circuits (ASICs).

ASICs benefit from the same CMOS process improvements that drive progress in consumer electronics. Each new CMOS process generation can fit more transistors into a single chip. Ten years ago, an ASIC for line cards had tens of millions of transistors, while the 7nm ASIC technology used in modern pluggables has more than five billion transistors. This progress in transistor density allows ASICs to integrate more electronic functions than ever into a single chip while still making the chip smaller. Previously, every function—signal processing, analog/digital conversion, error correction, multiplexing, encryption—required a separate ASIC, but now they can all be consolidated on a single chip that fits in a pluggable transceiver.

This increase in transistor density and integration also leads to massive gains in power efficiency and performance. For example, modern transceivers using 7nm ASICs have decreased their power consumption by 50% compared to the previous generation using 16nm ASICs while delivering roughly a 30% increase in bandwidth and baud rates. By 2022, ASICs in pluggables will benefit from a newer 5nm CMOS process, enabling further improvements in transistor density, power consumption, and speed.
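To see what these two figures mean together, the back-of-the-envelope sketch below combines them into a relative energy per bit. It assumes that the 50% power reduction and the 30% bandwidth increase apply to the same pair of transceiver generations and that throughput scales with bandwidth; the variable names are illustrative.

```python
# Figures quoted in the text, expressed relative to the 16nm generation
relative_power = 0.50      # 7nm transceiver draws 50% of the power
relative_bandwidth = 1.30  # 7nm transceiver delivers roughly 30% more bandwidth

# Energy per bit scales as power divided by throughput
relative_energy_per_bit = relative_power / relative_bandwidth
reduction_percent = (1 - relative_energy_per_bit) * 100

print(f"Relative energy per bit: {relative_energy_per_bit:.2f}")
print(f"Reduction versus 16nm: about {reduction_percent:.0f}%")
```

Under these assumptions, the energy spent per transmitted bit falls to a little under 40% of the previous generation's, a larger improvement than the power figure alone suggests.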

Electronic Integration Enables Line-Card System Management in a Pluggable Form Factor

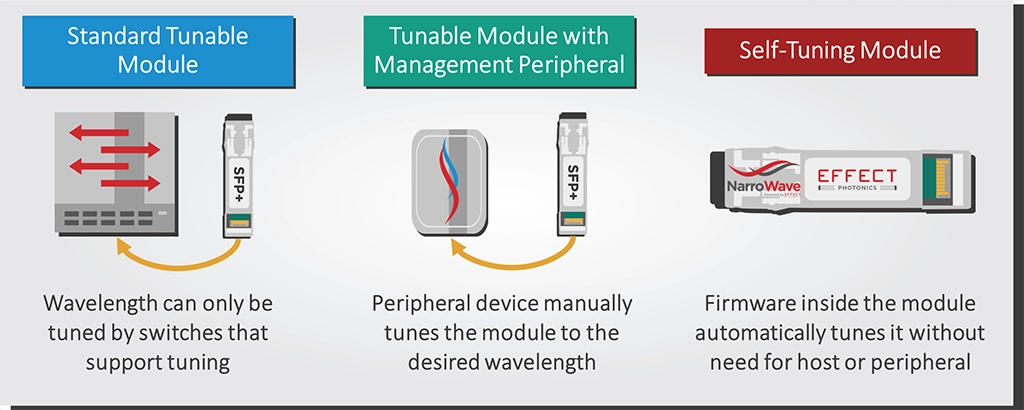

The advancements in CMOS technology also enable the integration of system-level functions into a pluggable transceiver. Previously, functions such as in-band network management and security, remote management, autotuneability, or topology awareness had to live on the shelf controller or in the line card interface, but that’s not the case anymore. Thanks to the advances in electronic integration, we are closer than ever to achieving a full, open transponder on a pluggable that operates as part of the optical network. These programmable, pluggable transceivers provide more flexibility than ever to manage access networks.

For example, the pluggable transceiver could run in a mode that prioritizes high performance or one that prioritizes low power consumption by using simpler and less power-hungry signal processing and error correction features. Therefore, these pluggables could provide high-end performance in the smallest form factor or low and mid-range performance at lower power consumption than embedded line card transponders.

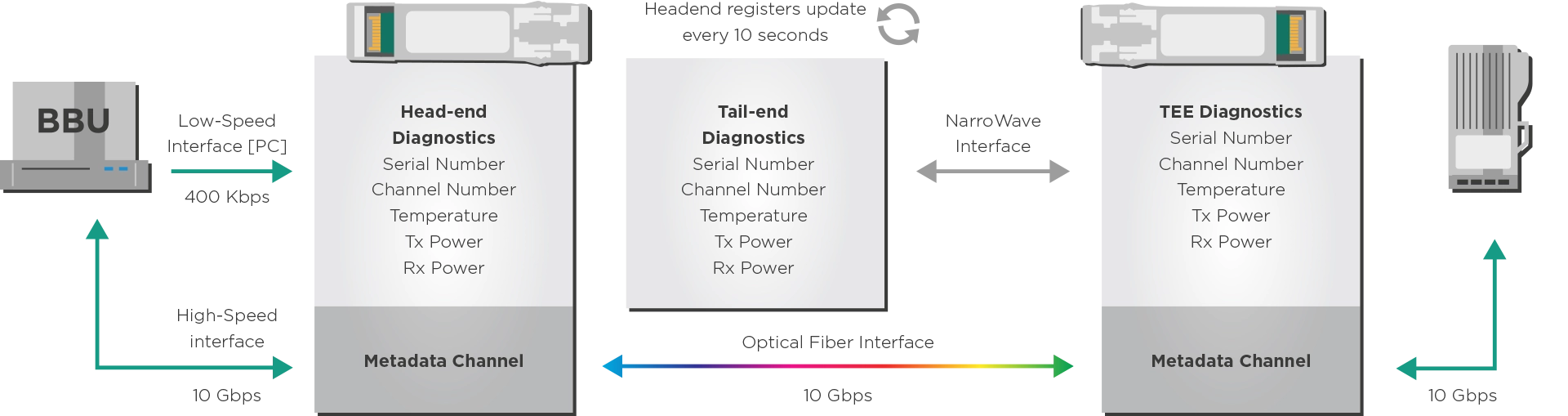

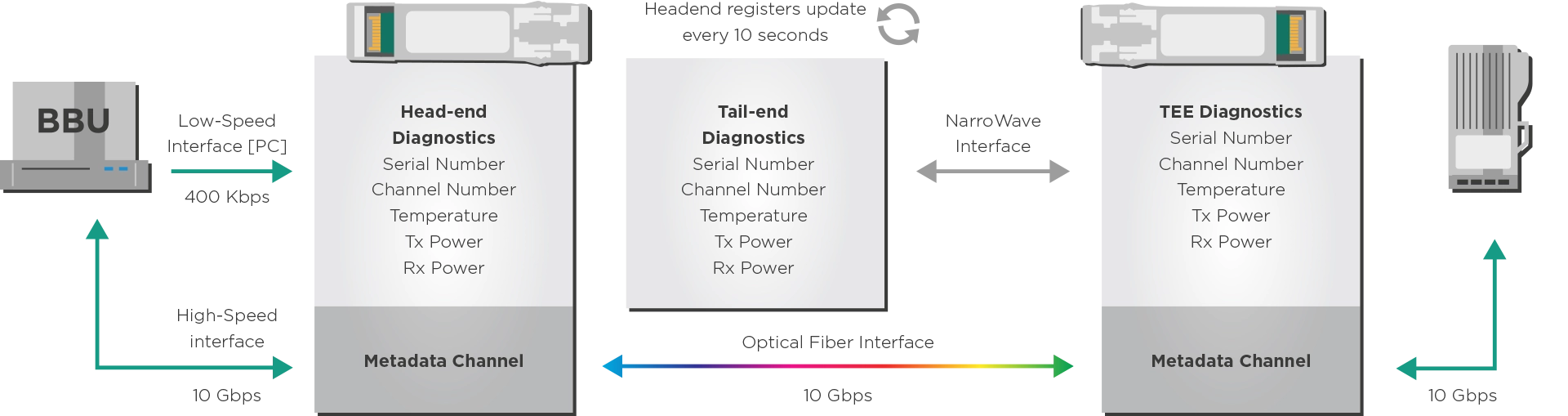

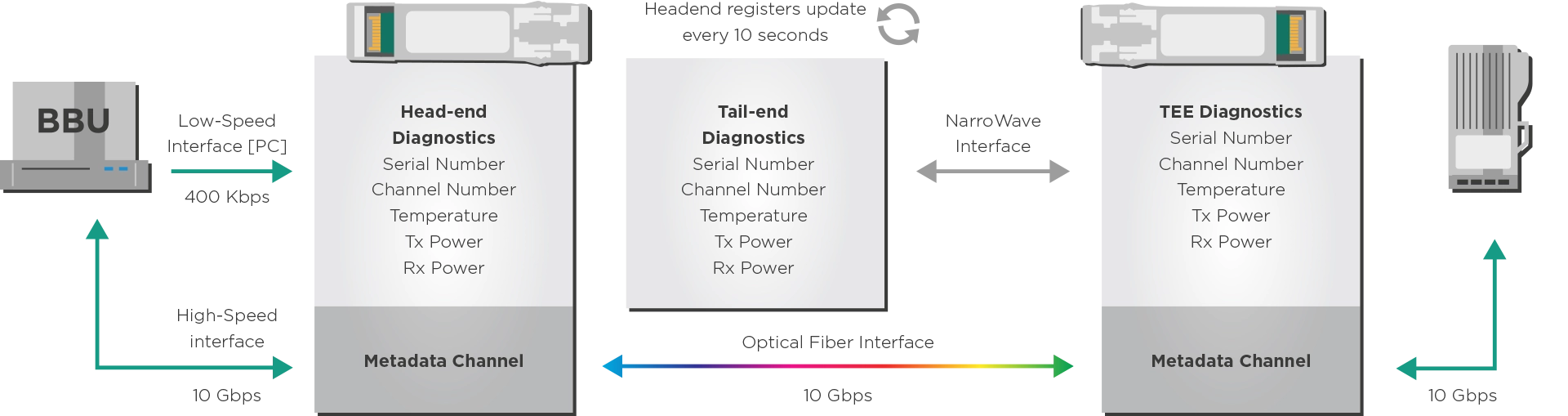

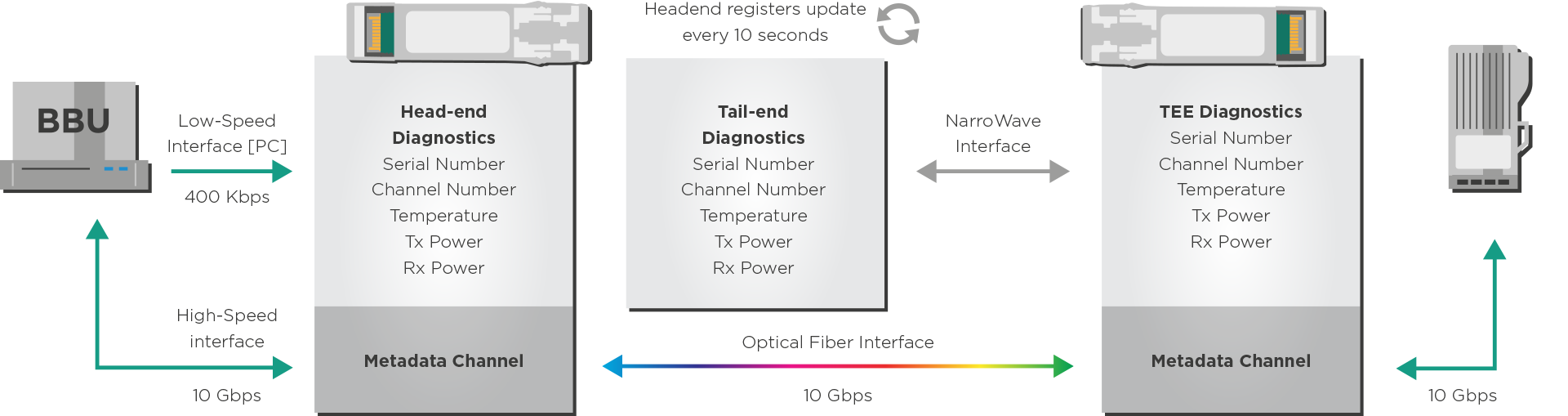

EFFECT Photonics has already started implementing these system-management features in its products. For example, our direct-detect SFP+ transceiver modules feature NarroWave technology, which allows customers to monitor and control remote SFP+ modules from the central office without making any hardware or software changes in the field. NarroWave is agnostic of vendor equipment, data rate, or protocol of the in-band traffic.

Pluggable transceivers also provide the flexibility of multi-vendor interoperability. High-performance line card transponders have often prioritized using proprietary features to increase performance while neglecting interoperability. The new generations of pluggables don’t need to make this trade-off: they can operate in standards-compatible modes for interoperability or in high-performance modes that use proprietary features.

Takeaways

Coherent technology was originally reserved for premium long-distance links where performance is everything. Edge and access networks could not use this higher-performance technology since it was too bulky and expensive.

Photonic integration technology like the one used by EFFECT Photonics helps bring these big, proprietary, and expensive line card systems into a router pluggable form factor. This tech has squeezed more performance into a smaller area and at lower power consumption, making the device more cost-effective. Combining the improvements in photonic integration with the advances in electronic integration for ASICs, the goal of having a fully programmable transponder in a pluggable is practically a reality. Photonic integration will be a disruptive technology that will simplify network design and operation and reduce network operators’ capital and operating expenses.

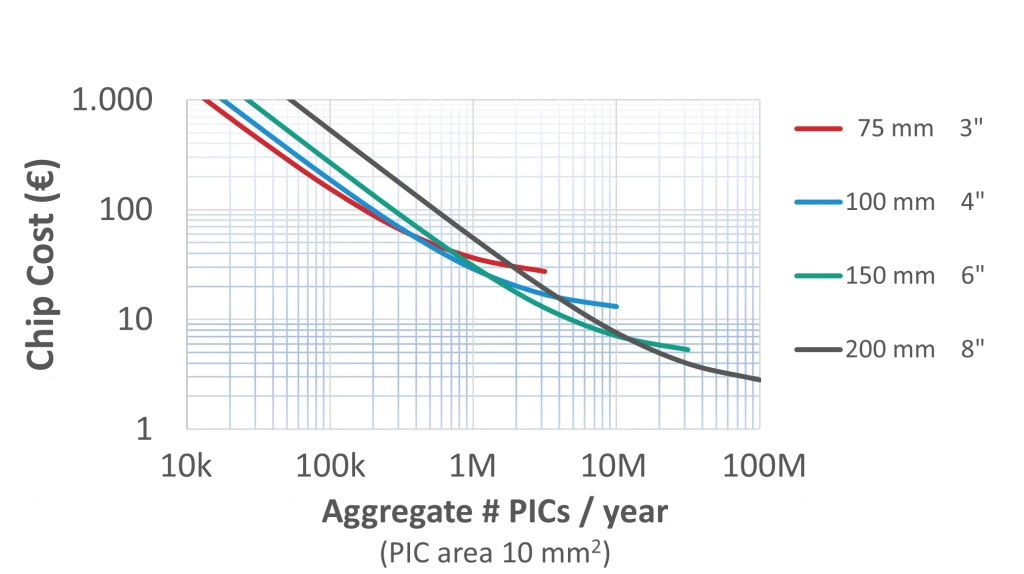

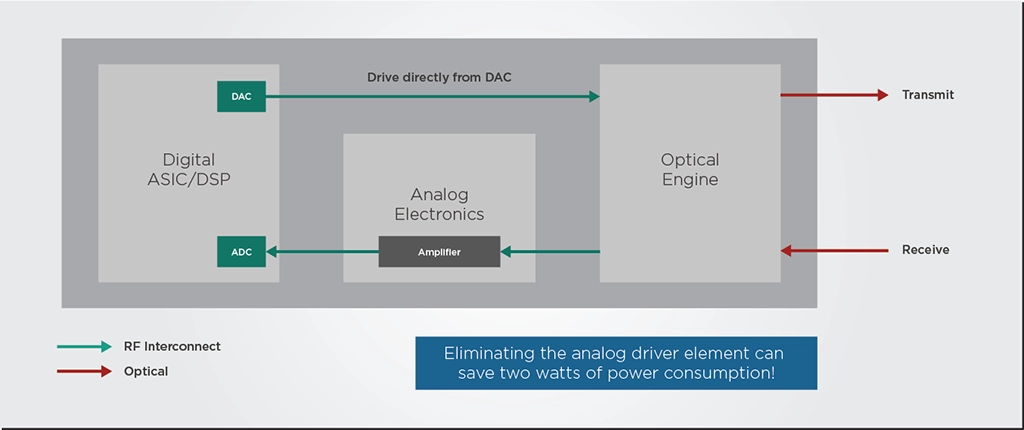

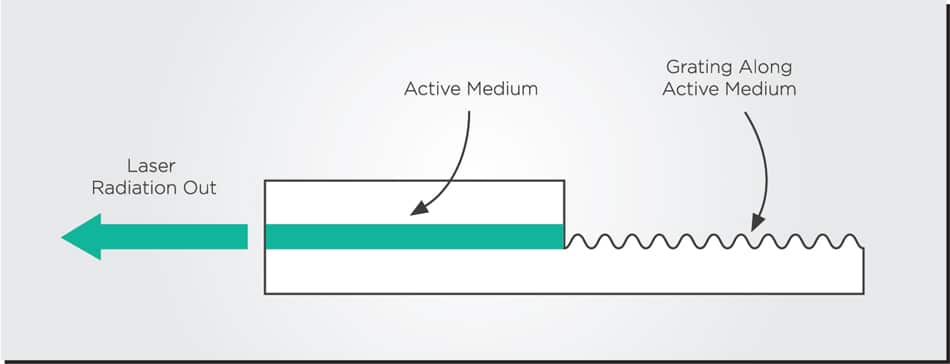

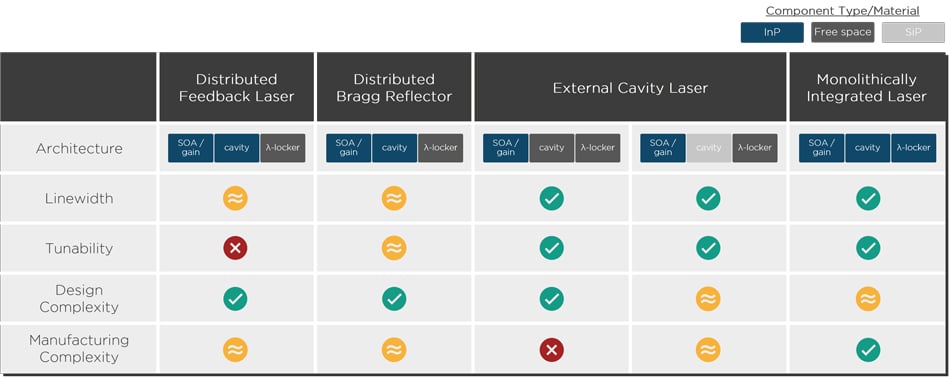

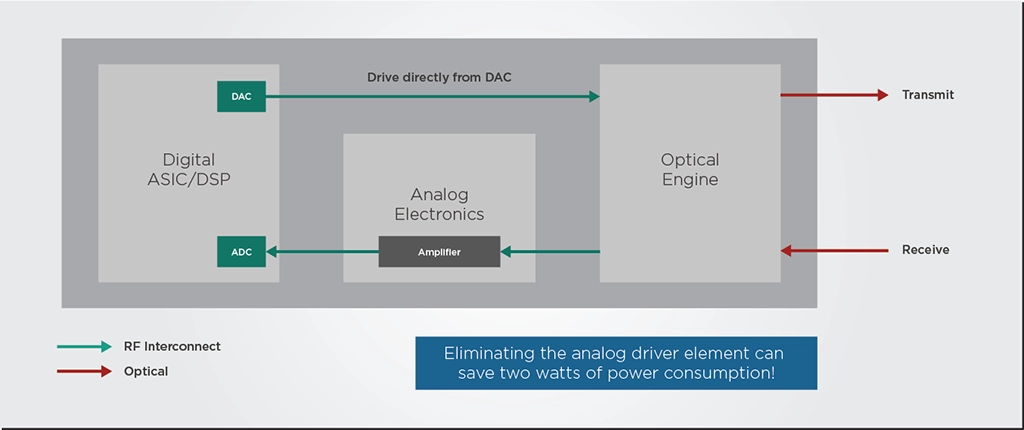

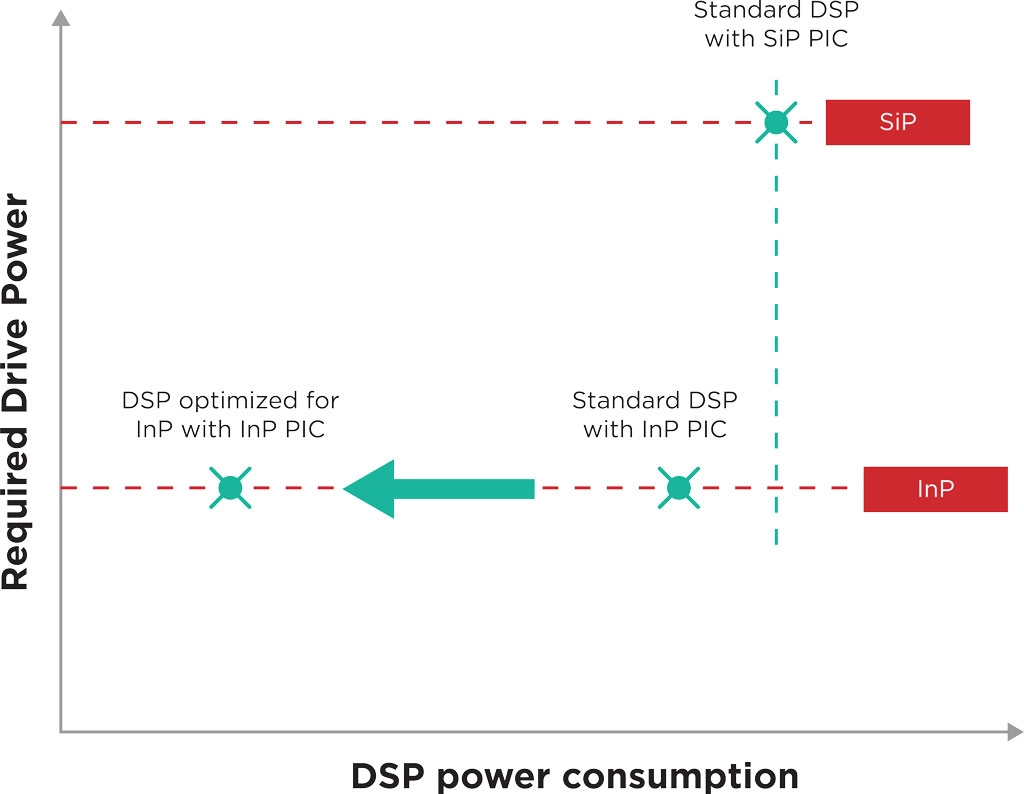

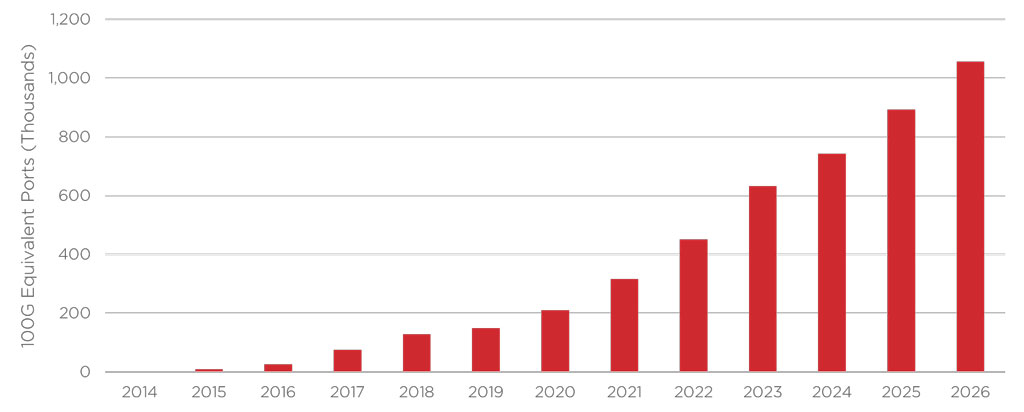

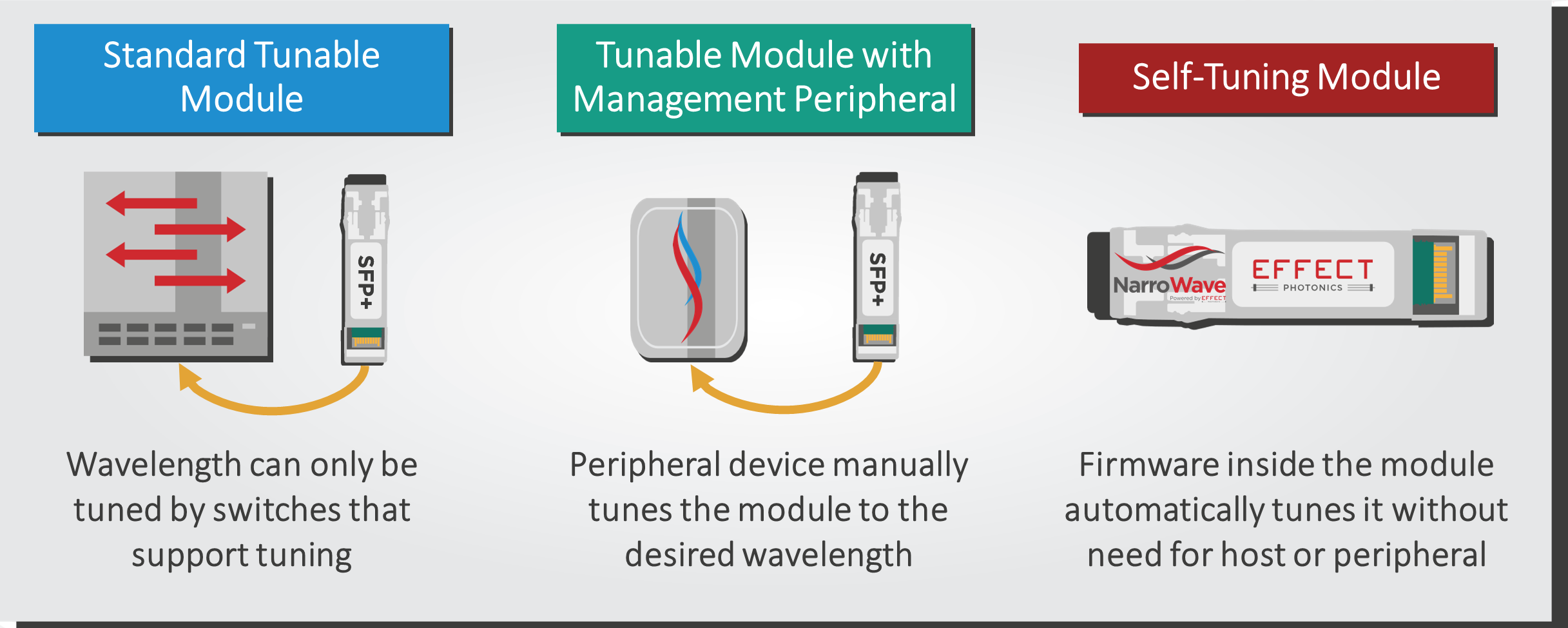

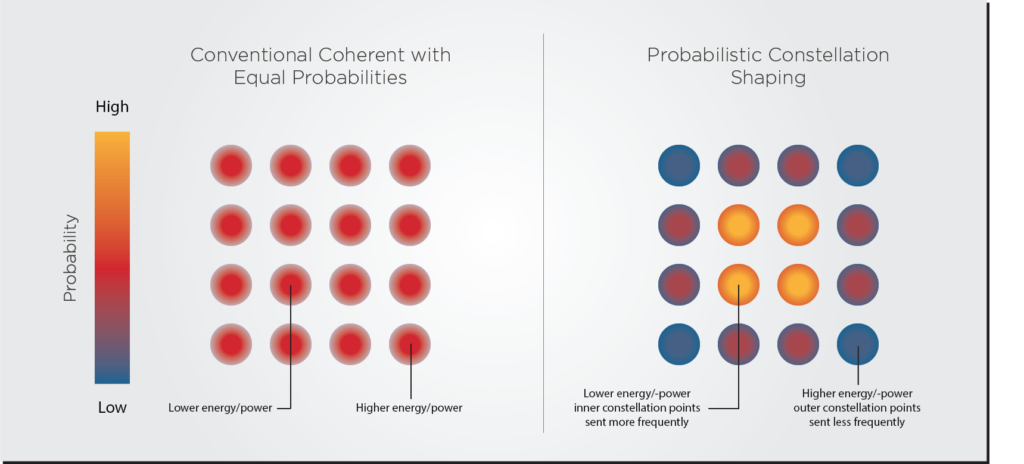

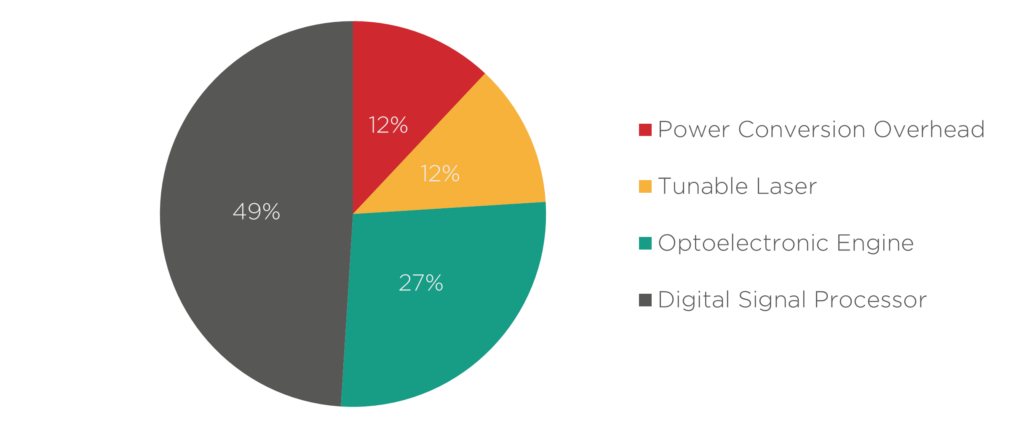

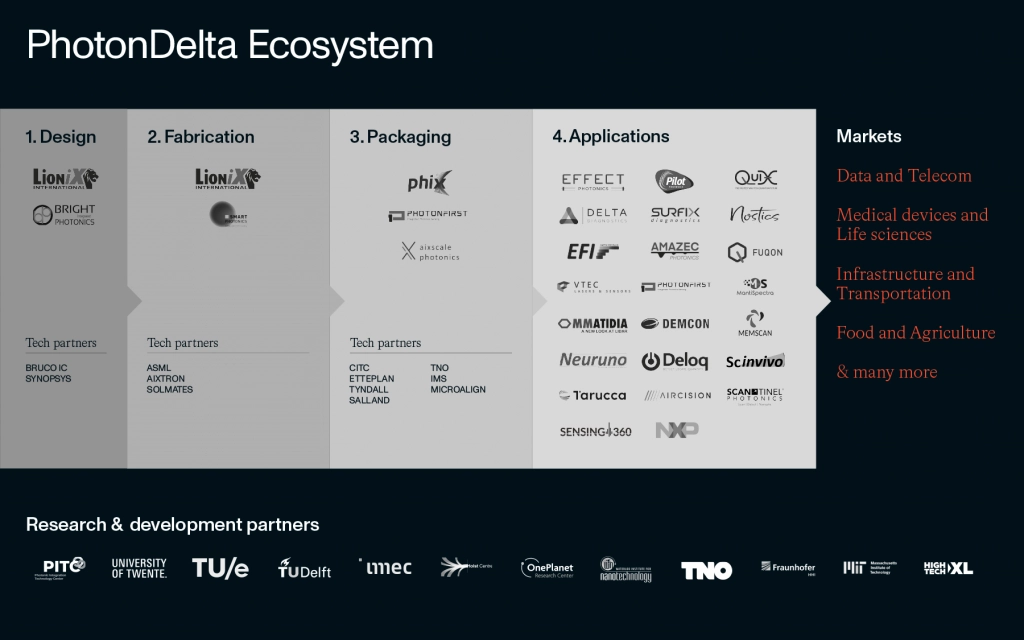

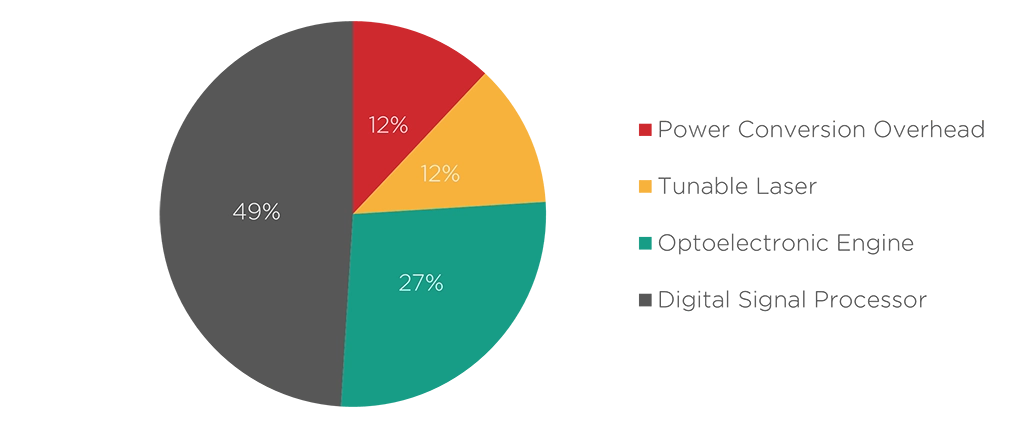

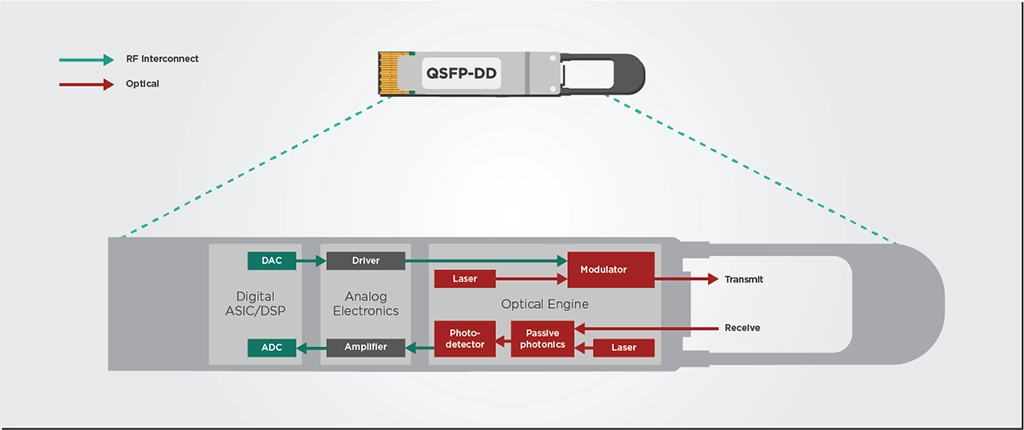

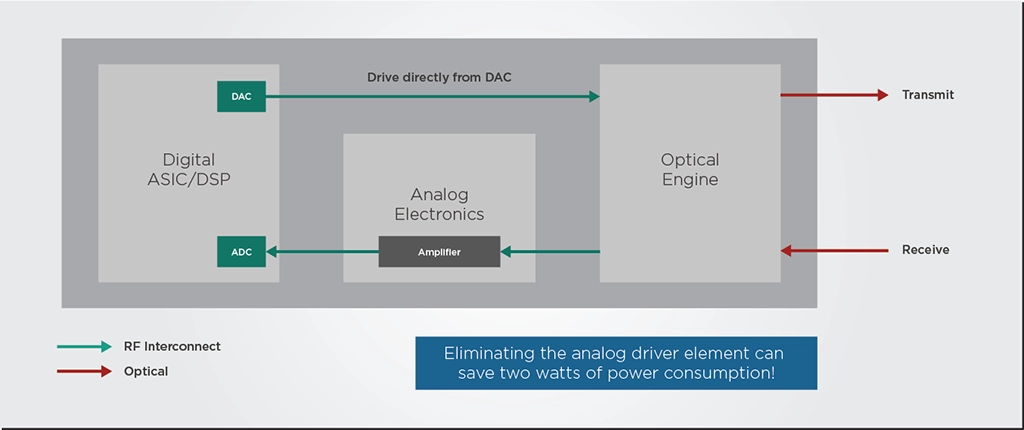

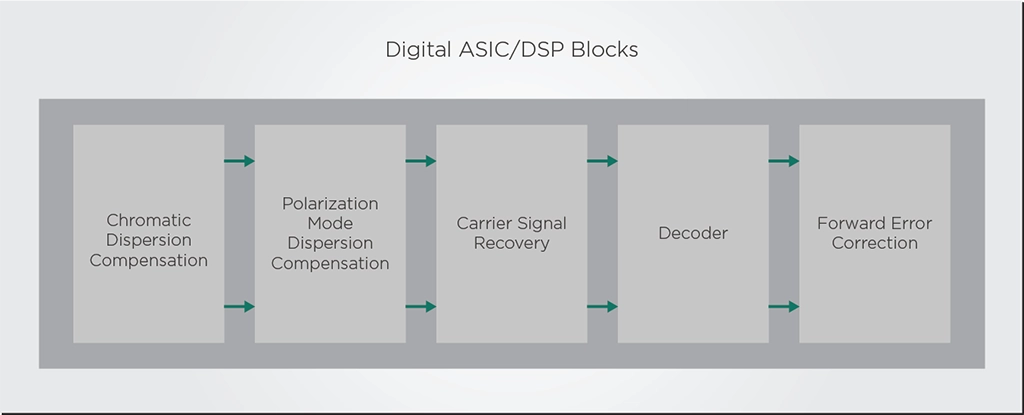

The impact of this technological improvement in pluggable transceivers was summarized deftly by Keven Wollenweber, VP of Product Management for Cisco’s Routing Portfolio: