– Link Magazine

Article first published 27 September 2021, updated 31st May 2023.

The demand for data and other digital services is rising exponentially. From 2010 to 2020, the number of Internet users worldwide doubled, and global internet traffic increased 12-fold. By 2022, internet traffic had doubled yet again. While 5G standards are more energy-efficient per bit than 4G, total power consumption will be much higher than that of 4G. Huawei expects that the maximum power consumption of one of their 5G base stations will be 68% higher than their 4G stations. These issues do not just affect the environment but also the bottom lines of communications companies.

Keeping up with the increasing data demand of future networks sustainably will require operators to deploy more optical technologies, such as photonic integrated circuits (PICs), in their access and fronthaul networks.

Lately, we have seen many efforts across the electronics industry to further increase integration at the component level. For example, moving towards greater integration of components in a single chip has yielded significant efficiency benefits in electronic processors. Apple’s recent M1 and M2 processors integrate all electronic functions in a single system-on-chip (SoC) and consume significantly less power than the processors with discrete components used in their previous generations of computers.

| Mac Mini Model | Idle Power (W) | Max Power (W) |
|---|---|---|
| 2023, M2 | 7 | 5 |
| 2020, M1 | 7 | 39 |
| 2018, Core i7 | 20 | 122 |
| 2014, Core i5 | 6 | 85 |
| 2010, Core 2 Duo | 10 | 85 |
| 2006, Core Solo or Duo | 23 | 110 |
| 2005, PowerPC G4 | 32 | 85 |

Table 1: Comparing the power consumption of Mac Minis with M1 and M2 SoCs to previous generations of Mac Minis. [Source: Apple’s website]

Photonics is also achieving greater efficiency gains by following a similar approach to integration. The more active and passive optical components (lasers, modulators, detectors, etc.) manufacturers can integrate on a single chip, the more energy they can save, since integration avoids coupling losses between discrete components and allows for interactive optimization.

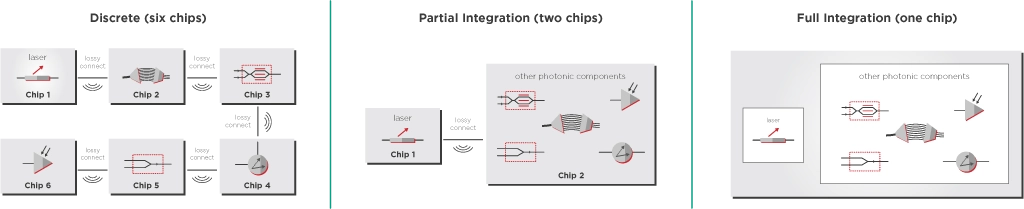

Let’s start by discussing three different levels of device integration for an optical device like a transceiver: discrete builds, partial integration, and full integration.

While discrete builds and partial integration have advantages in managing the production yield of the individual components, full integration leads to fewer optical losses and more efficient packaging and testing processes, making it a much better fit in terms of sustainability.

The interconnects required to couple discrete components result in electrical and optical losses that must be compensated with higher transmitter power and more energy consumption. The more interconnects between different components, the higher the losses become. Discrete builds will have the most interconnect points and highest losses. Partial integration reduces the number of interconnect points and losses compared to discrete builds. If these components are made from different optical materials, the interconnections will suffer additional losses.

On the other hand, full integration uses a single chip of the same base material. It does not require lossy interconnections between chips, minimizing optical losses and significantly reducing the energy consumption and footprint of the transceiver device.
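As a rough illustration of why interconnect count matters, here is a minimal loss-budget sketch in Python. It assumes hypothetical values throughout (3 dB of on-chip loss, 1.5 dB per chip-to-chip interconnect, an extra 0.5 dB whenever the light crosses between different materials, and a -20 dBm receiver sensitivity); none of these are measured figures for any real transceiver. Because losses in dB add along the optical path, each extra interconnect raises the launch power the transmitter must provide.

```python
# Minimal optical loss-budget sketch. Every dB value is a hypothetical
# placeholder chosen for illustration, not a measured or vendor figure.


def path_loss_db(n_interconnects, loss_per_interconnect_db=1.5,
                 n_material_transitions=0, mismatch_penalty_db=0.5,
                 on_chip_loss_db=3.0):
    """Total insertion loss in dB; losses expressed in dB simply add up."""
    return (on_chip_loss_db
            + n_interconnects * loss_per_interconnect_db
            + n_material_transitions * mismatch_penalty_db)


def required_tx_power_dbm(rx_sensitivity_dbm, loss_db, margin_db=3.0):
    """Optical launch power needed to arrive above the receiver sensitivity."""
    return rx_sensitivity_dbm + loss_db + margin_db


def dbm_to_mw(power_dbm):
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (power_dbm / 10)


# Hypothetical interconnect counts for the three integration levels.
BUILDS = {
    "discrete": {"n_interconnects": 4, "n_material_transitions": 2},
    "partial": {"n_interconnects": 2, "n_material_transitions": 1},
    "full": {"n_interconnects": 0, "n_material_transitions": 0},
}

for name, params in BUILDS.items():
    loss = path_loss_db(**params)
    tx = required_tx_power_dbm(-20.0, loss)
    print(f"{name:8s} loss {loss:4.1f} dB, launch power {tx:5.1f} dBm "
          f"({dbm_to_mw(tx):.3f} mW)")
```

Under these assumptions, the discrete build needs about 7 dB more launch power than the fully integrated one (roughly five times as much optical power), before counting the electrical overhead of generating it.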

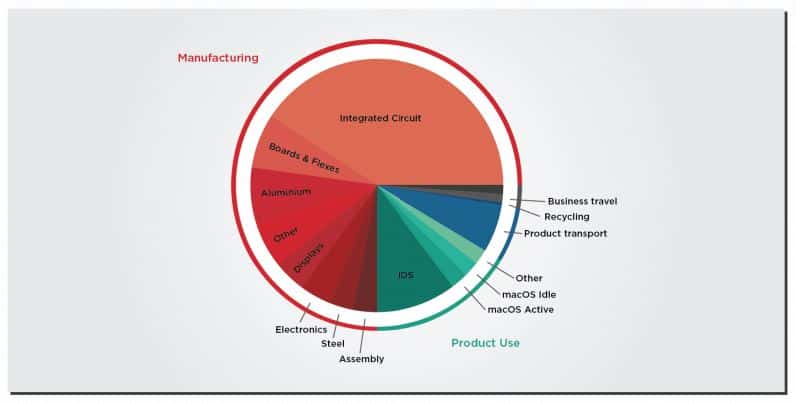

When it comes to energy consumption and sustainability, we shouldn’t just think about the energy the PIC consumes but also the energy and carbon footprint of fabricating the chip and assembling the transceiver. To give an example from the electronics sector, a Harvard and Facebook study estimated that for Apple, manufacturing accounts for 74% of their carbon emissions, with integrated circuit manufacturing comprising roughly 33% of Apple’s carbon output. That’s higher than the emissions from product use.

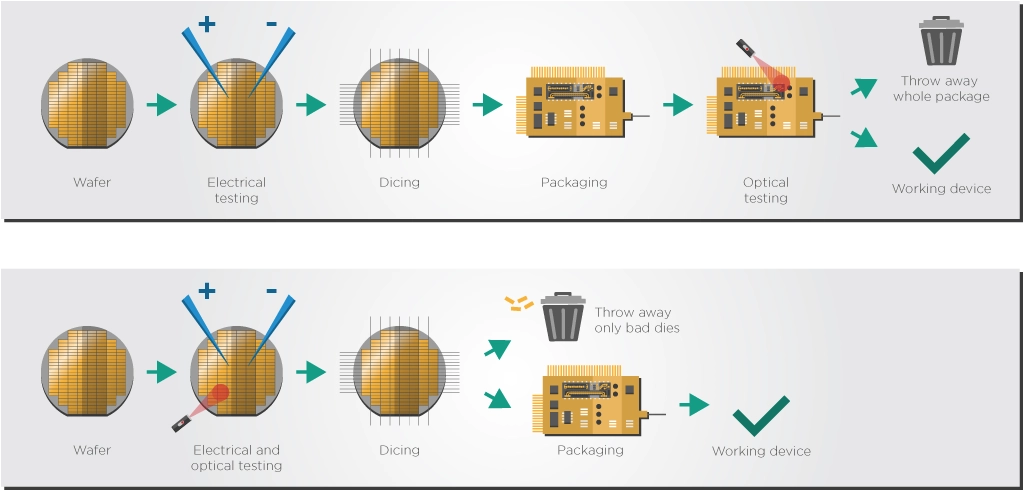

Testing is another aspect of the manufacturing process that impacts sustainability. The earlier faults are found in the testing process, the less material and energy are wasted on processing defective chips. Ideally, testing should happen not only on the final, packaged transceiver but also in the earlier stages of PIC fabrication, such as after wafer processing or after cutting the wafer into individual dies.

Discrete and partial integration approaches do more of their optical testing on the finalized package, after all the different components have been connected. If just one component fails the test, the complete packaged transceiver must be discarded, potentially wasting a large amount of material, as nothing can be “fixed” or reused at this stage of the manufacturing process.

Full integration enables earlier optical testing on the semiconductor wafer and dies. By testing the dies and wafers directly before packaging, manufacturers need only discard the bad dies rather than the whole package, which saves valuable energy and materials.
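The material savings of earlier testing can be estimated with a simple yield model. The Python sketch below assumes every die passes independently with the same yield and that assembly itself never fails; both are simplifications, and the 90% yield is a hypothetical value. If testing only happens on the finished package, one bad die scraps the whole package; if dies are tested on the wafer first, only the bad dies are discarded.

```python
# Yield sketch comparing where testing happens. Assumes independent die
# yields and a perfect assembly step (both simplifying assumptions).


def dies_per_good_package(n_dies, die_yield, test_before_packaging):
    """Expected number of dies consumed to ship one good package."""
    if not 0 < die_yield <= 1:
        raise ValueError("die_yield must be in (0, 1]")
    if test_before_packaging:
        # Bad dies are discarded on the wafer; only known-good dies are packaged.
        return n_dies / die_yield
    # Dies are only tested inside the finished package: one bad die scraps
    # the whole package, so a package passes with probability die_yield**n.
    return n_dies / die_yield ** n_dies


def packages_scrapped_per_good_package(n_dies, die_yield, test_before_packaging):
    """Expected number of fully assembled packages thrown away per good one."""
    if test_before_packaging:
        return 0.0
    package_yield = die_yield ** n_dies
    return (1 - package_yield) / package_yield


for n in (1, 3, 5):
    late = dies_per_good_package(n, 0.9, test_before_packaging=False)
    early = dies_per_good_package(n, 0.9, test_before_packaging=True)
    scrap = packages_scrapped_per_good_package(n, 0.9, test_before_packaging=False)
    print(f"{n} dies/package: {late:.2f} vs {early:.2f} dies used, "
          f"{scrap:.2f} packages scrapped per good one")
```

With five dies per package at 90% yield, testing only at the end consumes roughly 8.5 dies per good package versus about 5.6 when dies are screened first, and about 0.7 fully assembled packages end up in the bin for every good one.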

While communication networks have become more energy-efficient, further technological improvements must continue to decrease the energy cost per bit to keep up with the exponential increase in Internet traffic. At the same time, a greater focus is being placed on sustainability and responsible manufacturing. All the photonic integration approaches we have touched on will play a role in reducing the energy consumption of future networks. However, of all of them, only full integration is in a position to make a significant contribution to the goals of sustainability and environmentally friendly manufacturing. A fully integrated system-on-chip minimizes optical losses, transceiver energy consumption, and material waste while increasing the energy efficiency of the manufacturing, packaging, and testing processes.

Article first published 15 June 2022, updated 18 May 2023.

With the increasing demand for cloud-based applications, datacom providers are expanding their distributed computing networks. They and their telecom provider partners are therefore looking for data center interconnect (DCI) solutions that are faster and more affordable than before, to ensure that connectivity between metro and regional facilities does not become a bottleneck.

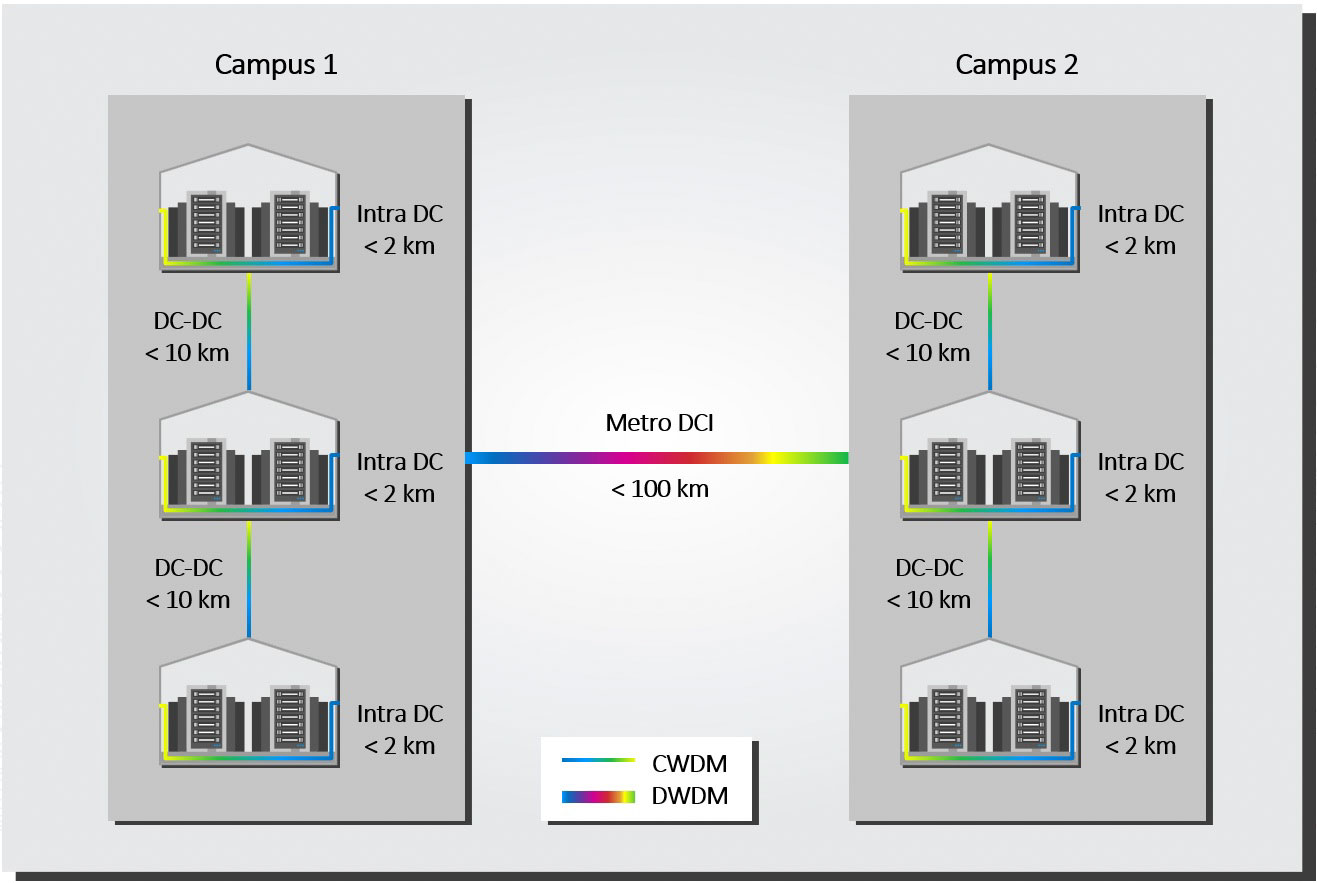

As shown in the figure below, we can think about three categories of data center interconnects based on their reach: intra-data center interconnects (under 2 km), campus DCIs (under 10 km), and metro DCIs.

Coherent 400ZR now dominates the metro DCI space, but in the coming decade, coherent technology could also play a role at shorter ranges, such as campus and intra-data center interconnects. As interconnects upgrade to Terabit speeds, coherent technology might start to approach the power consumption and cost of direct detect.

The advances in electronic and photonic integration allowed coherent technology for metro DCIs to be miniaturized into QSFP-DD and OSFP form factors. This progress allowed the Optical Internetworking Forum (OIF) to create a 400ZR multi-source agreement. With modules small enough to pack a router faceplate densely, the datacom sector could profit from a 400ZR solution for high-capacity data center interconnects of up to 80 km. Operations teams found the simplicity of coherent pluggables very attractive. There was no need to install and maintain additional amplifiers and compensators as in direct detection: a single coherent transceiver plugged into a router could fulfill the requirements.

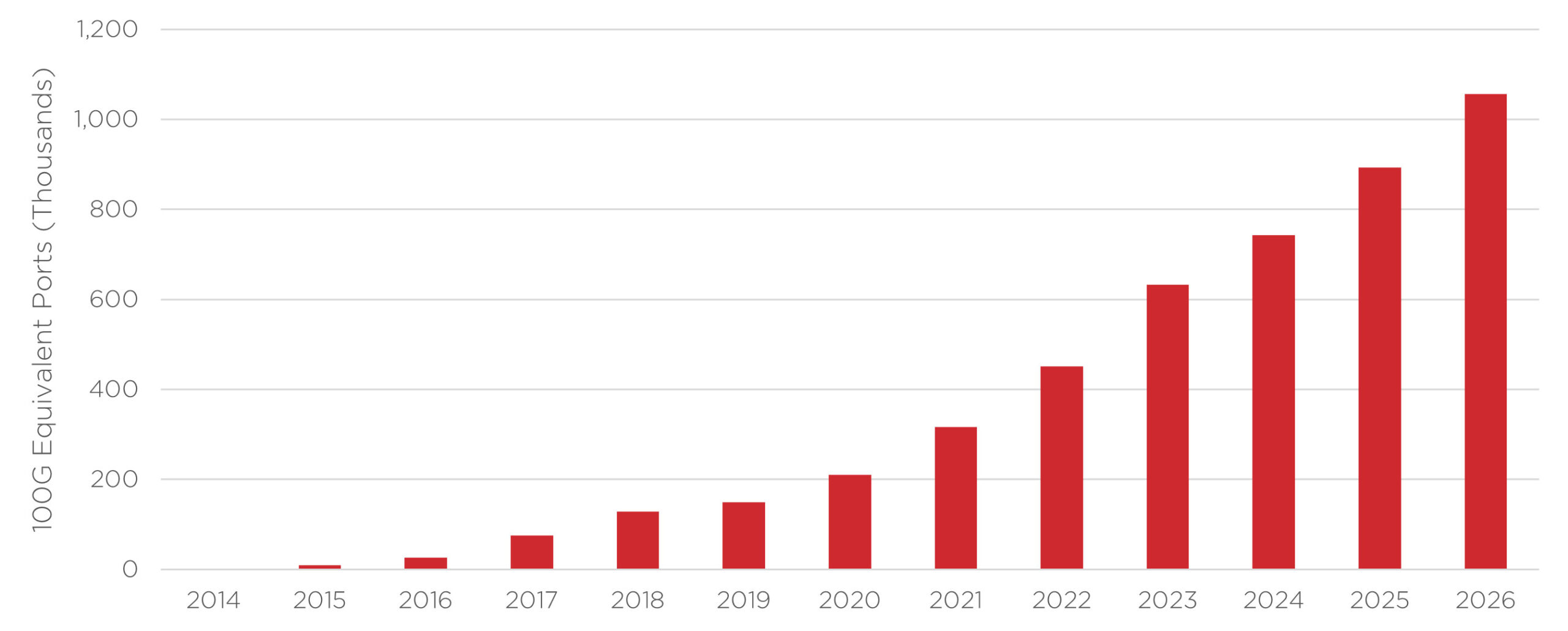

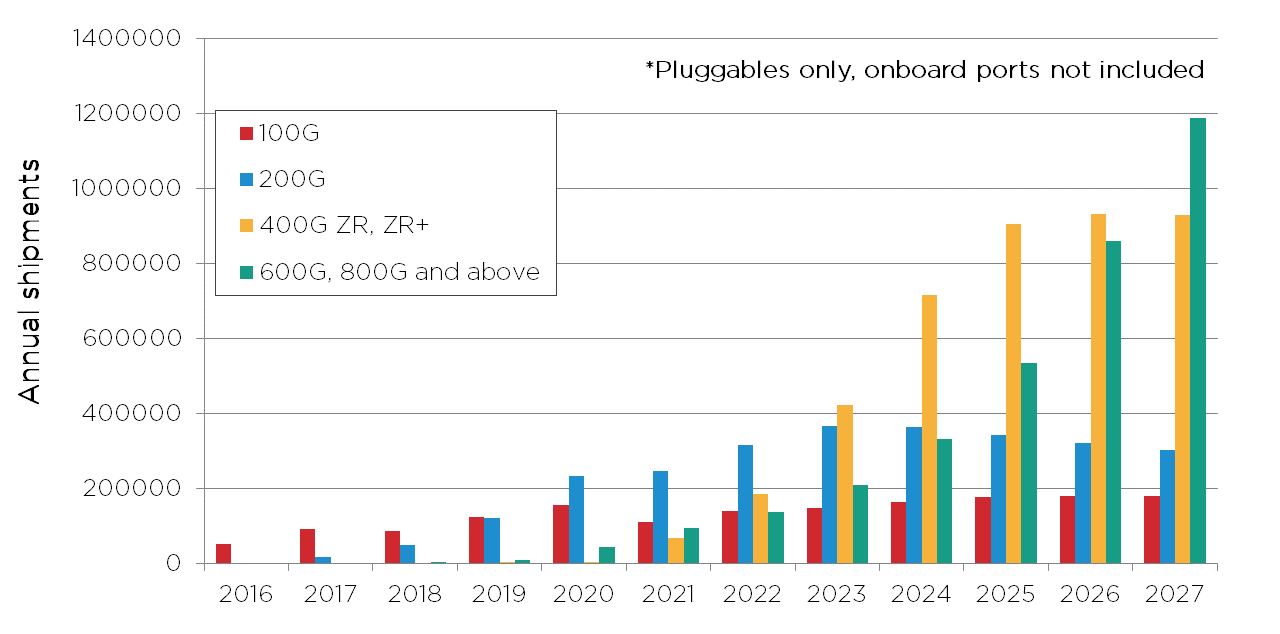

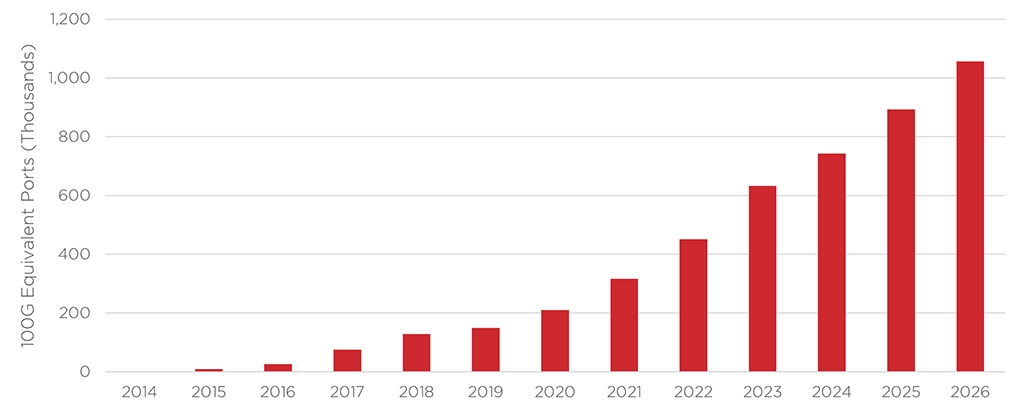

As an example of their success, Cignal AI forecasted that 400ZR shipments would dominate edge applications, as shown in Figure 2.

The campus DCI segment, featuring distances below 10 kilometers, was squarely in the domain of direct detect products when the standard speed of these links was 100 Gbps. Neither amplifiers nor compensators were needed for these shorter distances, so direct detect transceivers were as simple to deploy and maintain as coherent ones.

However, as link bandwidths increase into the Terabit space, these direct detect links will need more amplifiers to reach 10 kilometers, and their power consumption will approach that of coherent solutions. The industry initially predicted that coherent solutions would match the power consumption of PAM4 direct detect solutions as early as the 800G generation. However, PAM4 developers have proven resourceful and have borrowed some aspects of coherent solutions without fully implementing a coherent solution. For example, ahead of OFC 2023, semiconductor solutions provider Marvell announced a 1.6 Tbps PAM4 platform that pushes the envelope on the cost and power per bit they could offer in the 10 km range.

It will be interesting to follow how the PAM-4 industry evolves in the coming years. How many (power-hungry) features of coherent solutions will PAM-4 developers have to borrow to keep up in upcoming generations at speeds of 3.2 Tbps and beyond? Lumentum’s Chief Technology Officer, Brandon Collings, has some interesting thoughts on the subject in this interview with Gazettabyte.

Below Terabit speeds, direct detect technology (both NRZ and PAM-4) will likely dominate the intra-DCI space (also called data center fabric) in the coming years. In this space, links span less than 2 kilometers, and for particularly short links (< 300 meters), affordable multimode fiber (MMF) is frequently used.

Nevertheless, the move to larger, more centralized data centers (such as hyperscale facilities) is lengthening intra-DCI links. Instead of transferring data directly from one data center building to another, new data centers move data to a central hub. So even if the building you want to connect to is only 200 meters away, the fiber runs to a hub that might be one or two kilometers away. In other words, intra-DCI links are becoming campus DCI links that require single-mode fiber solutions.

On top of these changes, the upgrades to Terabit speeds in the coming decade will also see coherent solutions more closely challenge the power consumption of direct detect transceivers. PAM-4 direct detect transceivers that fulfill the speed requirements need digital signal processors (DSPs) and more complex lasers, making them less efficient and less affordable than previous generations of direct detect technology. With coherent technology scaling up in volume and offering greater flexibility and performance, one can argue that it will also reach cost-competitiveness in this space.

Unsurprisingly, the choice between coherent and direct detect technology for data center interconnects boils down to reach and capacity needs. 400ZR coherent is already established as the solution for metro DCIs. In campus interconnects of 10 km or less, PAM-4 products remain a robust solution up to 1.6 Tbps, but coherent technology is making a case for its use. It will be interesting to see how the two compete in future generations at 3.2 Tbps.

Coherent solutions are also becoming more competitive as the intra-data center sector moves to higher Terabit speeds, like 3.2 Tbps. Overall, the datacom sector is moving towards coherent technology, which is worth considering when upgrading data center links.
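The reach and capacity rules of thumb from this article can be condensed into a small decision helper. This is a hypothetical Python sketch, not an industry selection tool: the thresholds (300 m, 2 km, 10 km, 1 Tbps, 1.6 Tbps) are the approximate figures quoted in the text, and real choices also depend on cost, power budget, and port form factors.

```python
from dataclasses import dataclass


@dataclass
class LinkRequirement:
    reach_km: float
    rate_gbps: float


def suggest_technology(link: LinkRequirement) -> str:
    """Return the article's rule-of-thumb technology for a DCI link."""
    if link.reach_km > 10:
        # Metro DCI: coherent 400ZR pluggables (up to about 80 km).
        return "metro DCI: coherent 400ZR"
    if link.reach_km > 2:
        # Campus DCI: PAM-4 direct detect holds up to 1.6 Tbps.
        if link.rate_gbps <= 1600:
            return "campus DCI: PAM-4 direct detect, coherent a contender"
        return "campus DCI: coherent increasingly competitive"
    # Intra-DCI (2 km or less): direct detect dominates below Terabit speeds.
    if link.rate_gbps < 1000:
        fiber = "multimode" if link.reach_km < 0.3 else "single-mode"
        return f"intra-DCI: direct detect (NRZ/PAM-4) over {fiber} fiber"
    return "intra-DCI: direct detect today, coherent competitive toward 3.2 Tbps"


for req in (LinkRequirement(80, 400), LinkRequirement(8, 800),
            LinkRequirement(1.5, 400), LinkRequirement(0.2, 400)):
    print(req, "->", suggest_technology(req))
```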

Article first published 27 July 2022, updated 12 April 2023.

Network carriers want to provide communications solutions in all areas: mobile access, cable networks, and fixed access to business customers. They want to offer their customers this extra capacity along with innovative, personalized connectivity and entertainment services.

Deploying only legacy direct detect technologies will not be enough to cover these growing bandwidth and service demands of mobile, cable, and business access networks with the required reach. In several cases, networks must deploy more 100G coherent dense wavelength division multiplexing (DWDM) technology to transmit more information over long distances. Several applications in the optical network edge could benefit from upgrading from 10G DWDM or 100G grey aggregation uplinks to 100G DWDM optics:

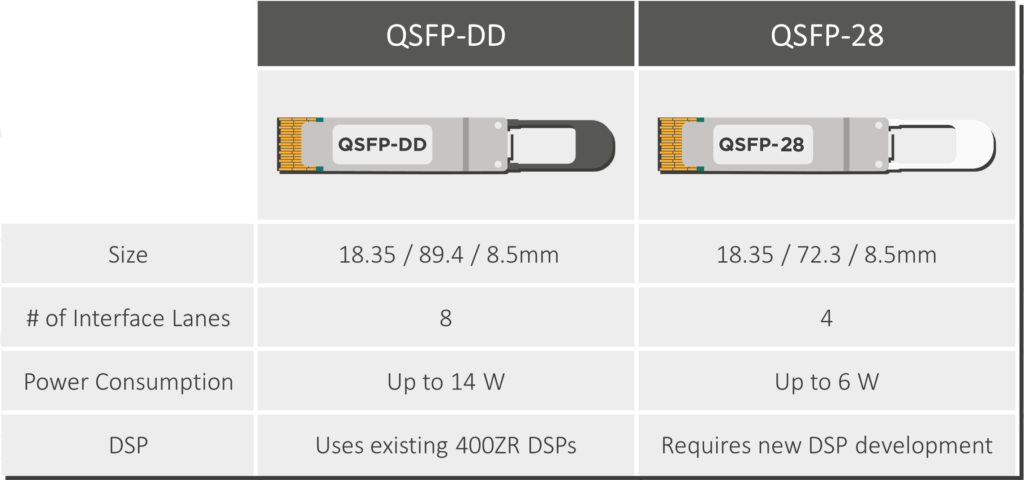

However, network providers have often stuck to their 10G DWDM or 100G grey links because the existing 100G DWDM solutions could not check all the required boxes. “Scaled-down” coherent 400ZR solutions had the required reach and tunability but were too expensive and power-hungry for many access network applications. Besides, ports in the small to medium IP routers used in most edge deployments often support the QSFP28 form factor rather than the QSFP-DD form factor commonly used in 400ZR modules.

Fortunately, the rise of 100ZR solutions in the QSFP28 form factor is changing the landscape for access networks. “The access market needs a simple, pluggable, low-cost upgrade to the 10G DWDM optics that it has been using for years. 100ZR is that upgrade,” said Scott Wilkinson, Lead Analyst for Optical Components at market research firm Cignal AI. “As access networks migrate from 1G solutions to 10G solutions, 100ZR will be a critical enabling technology.”

In this article, we will discuss how the recent advances in 100ZR solutions will enable the evolution of different segments of the network edge: mobile midhaul and backhaul, business services, and cable.

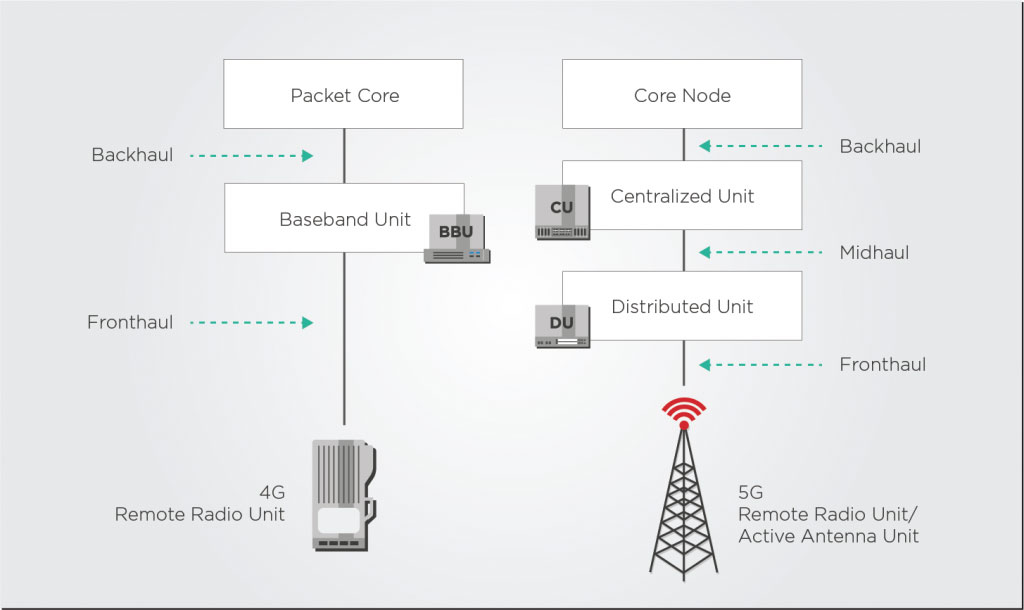

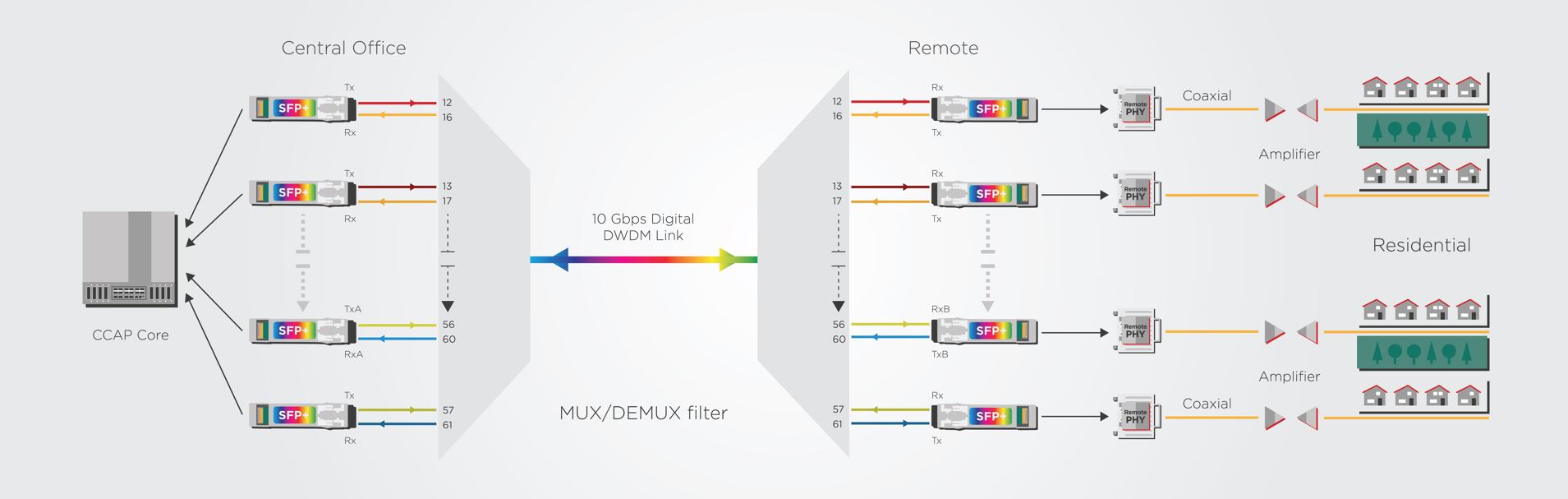

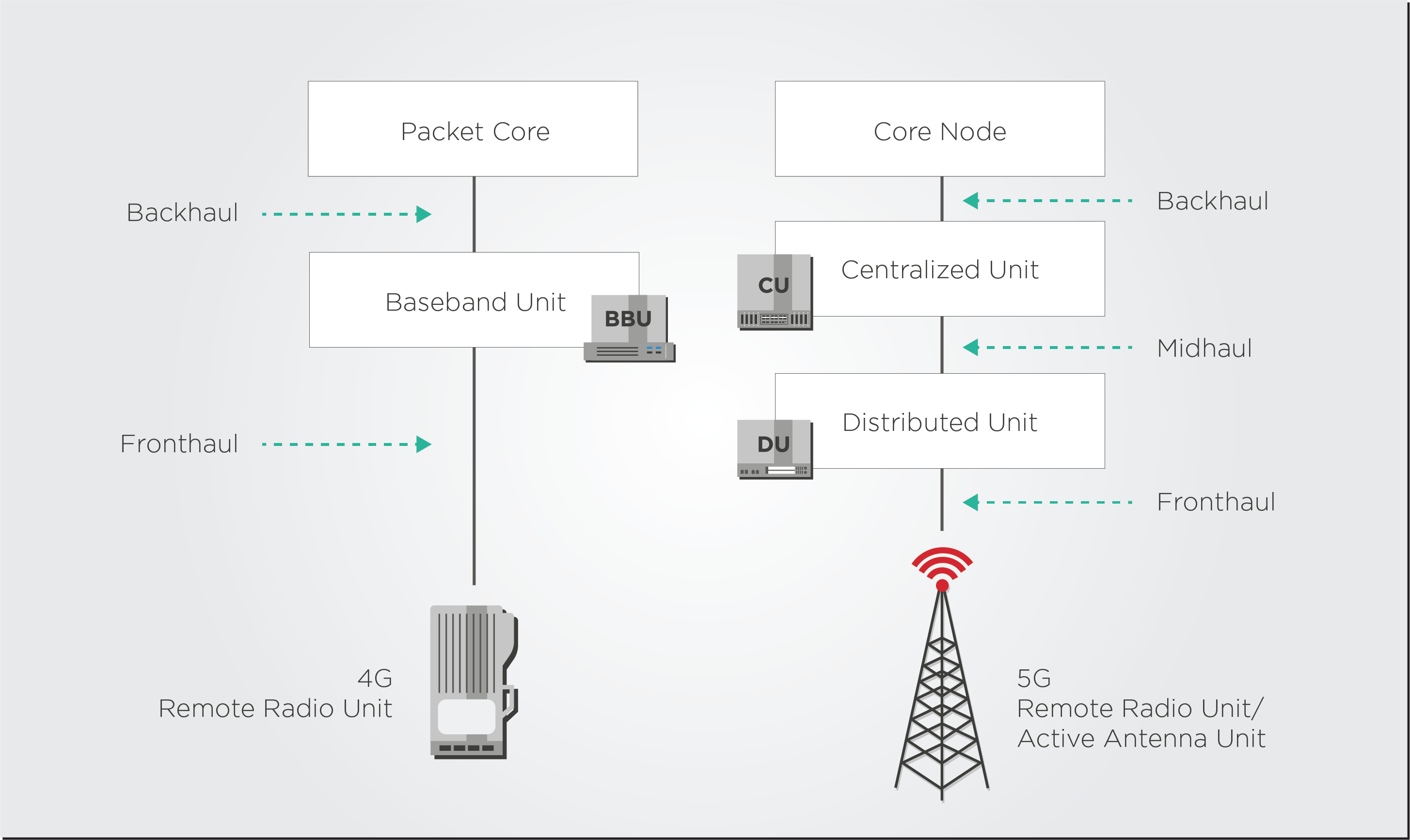

The upgrade from 4G to 5G has shifted the radio access network (RAN) from a two-level structure with backhaul and fronthaul in 4G to a three-level structure with back-, mid-, and fronthaul.

The initial rollout of 5G has already happened in most developed countries, with many operators upgrading their 1G SFP transceivers to 10G SFP+ devices. Some of these 10G solutions used DWDM technology, but many were single-channel grey transceivers. However, mobile networks must move to the next phase of 5G deployments, which requires installing and aggregating more and smaller base stations to exponentially increase the number of devices connected to the network.

These mature phases of 5G deployment will require operators to continue scaling fiber capacity cost-effectively with more widespread 10G DWDM SFP+ solutions and 25G SFP28 transceivers. These upgrades will put greater pressure on the aggregation segments of mobile backhaul and midhaul. These network segments commonly used link aggregation of multiple 10G DWDM links into a higher bandwidth group (such as 4x10G). However, this link aggregation requires splitting up larger traffic streams and can be complex to integrate across an access ring. A single 100G uplink would reduce the need for such link aggregation and simplify the network setup and operations. If you want to know more about the potential market and reach of this link aggregation upgrade, we recommend reading the recent Cignal AI report on 100ZR technologies.
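Why does link aggregation struggle with larger traffic streams? Link aggregation groups (LAGs) typically hash each flow onto a single member link so that its packets stay in order, which means no single flow can exceed one member's capacity. The Python sketch below uses hypothetical flows, and `zlib.crc32` over a flow name stands in for the header-field hashing that real equipment uses.

```python
import zlib


def assign_flows(flows_gbps, n_members):
    """Pin each flow to one member link, as per-flow LAG hashing does."""
    load = [0.0] * n_members
    for flow_id, rate in flows_gbps.items():
        member = zlib.crc32(flow_id.encode()) % n_members
        load[member] += rate
    return load


def fits(flows_gbps, n_members, member_capacity_gbps):
    """True if no member link is pushed past its capacity."""
    return all(load <= member_capacity_gbps
               for load in assign_flows(flows_gbps, n_members))


# Hypothetical aggregated traffic from three cell sites, in Gbps.
flows = {"cell-site-A": 15.0, "cell-site-B": 6.0, "cell-site-C": 4.0}

# 4x10G LAG: 25 Gbps fits in 40 Gbps on paper, but the 15 Gbps flow cannot
# be carried by any single 10G member without splitting it.
print(fits(flows, n_members=4, member_capacity_gbps=10.0))   # False
# A single 100G uplink carries every flow without any splitting.
print(fits(flows, n_members=1, member_capacity_gbps=100.0))  # True
```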

According to Cignal AI’s 100ZR report, the biggest driver of 100ZR use will come from multiplexing fixed access network links upgrading from 1G to 10G. This trend will be reflected in cable networks’ long-awaited migration from Gigabit Passive Optical Networks (GPON) to 10G PON. This evolution is primarily guided by the new DOCSIS 4.0 standard, which promises 10Gbps download speeds for customers and will require several hardware upgrades in cable networks.

To multiplex these new larger 10Gbps customer links, cable providers and network operators need to upgrade their optical line terminals (OLTs) and Converged Cable Access Platforms (CCAPs) from 10G to 100G DWDM uplinks. Many of these new optical hubs will support up to 40 or 80 optical distribution networks (ODNs), too, so the previous approach of aggregating multiple 10G DWDM uplinks will not be enough to handle this increased capacity and higher number of channels.
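Some back-of-the-envelope arithmetic shows why aggregating 10G uplinks stops scaling. The Python sketch below assumes, hypothetically, that each ODN is fed by a 10G PON port and that the hub's uplinks are oversubscribed 4:1; each uplink occupies one DWDM channel.

```python
import math


def uplinks_needed(n_odns, odn_rate_gbps, oversubscription, uplink_rate_gbps):
    """Uplinks (one DWDM channel each) needed to carry the aggregated demand."""
    demand_gbps = n_odns * odn_rate_gbps / oversubscription
    return math.ceil(demand_gbps / uplink_rate_gbps)


for n_odns in (40, 80):
    ten_g = uplinks_needed(n_odns, 10, oversubscription=4, uplink_rate_gbps=10)
    hundred_g = uplinks_needed(n_odns, 10, oversubscription=4, uplink_rate_gbps=100)
    print(f"{n_odns} ODNs: {ten_g} x 10G uplinks vs {hundred_g} x 100G uplinks")
```

Under these assumptions, an 80-ODN hub would need 20 separate 10G DWDM wavelengths but only two 100G ones.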

Anticipating such needs, the non-profit R&D organization CableLabs has recently pushed to develop a 100G Coherent PON (C-PON) standard. Their proposal offers 100 Gbps per wavelength at a maximum reach of 80 km and up to a 1:512 split ratio. CableLabs anticipates C-PON and its 100G capabilities will play a significant role not just in cable optical network aggregation but in other use cases such as mobile x-haul, fiber-to-the-building (FTTB), long-reach rural scenarios, and distributed access networks.
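To put CableLabs' 1:512 figure in perspective, consider two textbook relations: an ideal 1:N power splitter divides the light N ways, for a loss of 10·log10(N) dB, and the 100 Gbps wavelength is shared among all endpoints. The Python sketch below evaluates just those; real splitters add excess loss on top, and real traffic is rarely all active at once.

```python
import math


def ideal_split_loss_db(split_ratio):
    """Loss of an ideal 1:N power splitter; real splitters add excess loss."""
    return 10 * math.log10(split_ratio)


def average_share_mbps(wavelength_rate_gbps, split_ratio):
    """Average bandwidth per endpoint if every endpoint is active at once."""
    return wavelength_rate_gbps * 1000 / split_ratio


print(f"{ideal_split_loss_db(512):.1f} dB")        # 27.1 dB
print(f"{average_share_mbps(100, 512):.1f} Mbps")  # 195.3 Mbps
```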

Almost every organization uses the cloud in some capacity, whether for development and test resources or software-as-a-service applications. While the cost and flexibility of the cloud are compelling, its use requires fast, high-bandwidth wide-area connectivity to make cloud-based applications work as they should.

Similarly to cable networks, these needs will require enterprises to upgrade their existing 1G Ethernet private lines to 10G Ethernet, which will also drive a greater need for 100G coherent uplinks. Cable providers and operators will also want to take advantage of their upgraded 10G PON networks and expand the reach and capacity of their business services.

The business and enterprise services sector was the earliest adopter of 100G coherent uplinks, deploying “scaled-down” 400ZR transceivers in the QSFP-DD form factor since they were the solution available at the time. However, these QSFP-DD slots also support QSFP28 form factors, so the rise of QSFP28 100ZR solutions will provide these enterprise applications with a more attractive upgrade with lower cost and power consumption. These QSFP28 solutions had struggled to become more widespread before because they required the development of new, low-power digital signal processors (DSPs), but DSP developers and vendors are keenly jumping on board the 100ZR train and have announced their development projects: Acacia, Coherent/ADVA, Marvell/InnoLight, and Marvell/OE Solutions. This is also why EFFECT Photonics has announced its plans to co-develop a 100G DSP with Credo Semiconductor that best fits 100ZR solutions in the QSFP28 form factor.

In the coming years, 100G coherent uplinks will become increasingly widespread in deployments and applications throughout the network edge. Some mobile access network use cases must upgrade their existing 10G DWDM link aggregation into a single coherent 100G DWDM uplink. Meanwhile, cable networks and business services are upgrading their customer links from 1Gbps to 10Gbps, and this migration will be the major factor that will increase the demand for coherent 100G uplinks. For carriers who provide converged cable/mobile access, these upgrades to 100G uplinks will enable opportunities to overlay more business services and mobile traffic into their existing cable networks.

As the QSFP28 100ZR ecosystem expands, production will scale up, and these solutions will become more widespread and affordable, opening up even more use cases in access networks.

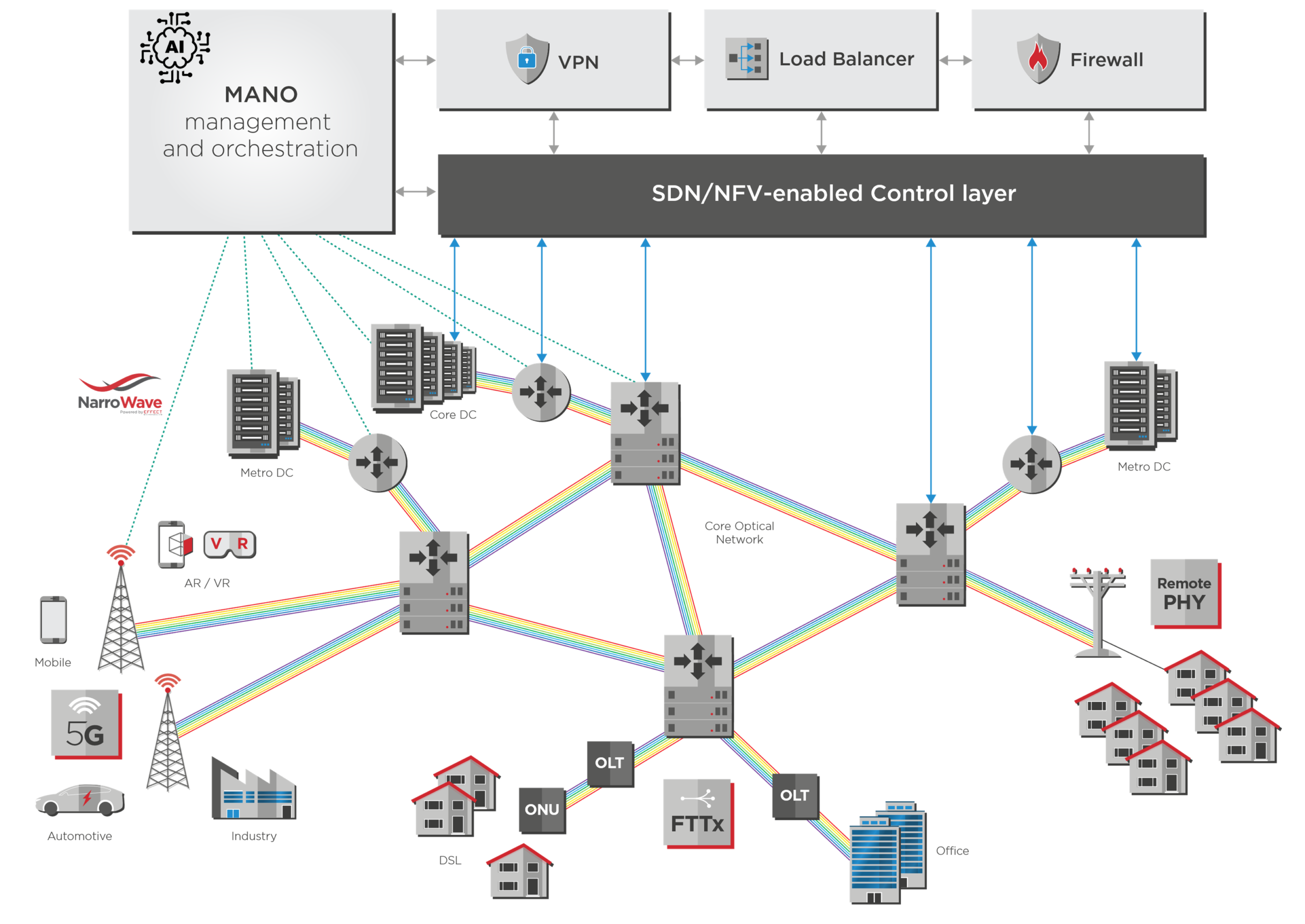

Artificial intelligence (AI) will have a significant role in making optical networks more scalable, affordable, and sustainable. It can gather information from devices across the optical network to identify patterns and make decisions independently without human input. Combined with other technologies, such as network function virtualization (NFV), AI can become a centralized management and orchestration network layer. Such a setup can fully automate network provisioning, diagnostics, and management, as shown in the diagram below.

However, artificial intelligence and machine learning algorithms are data-hungry. To work optimally, they need information from all network layers and ever-faster data centers to process it quickly. Pluggable optical transceivers thus need to become smarter, relaying more information back to the AI central unit, and faster, enabling increased AI processing.

Optical transceivers are crucial in developing better AI systems by facilitating the rapid, reliable data transmission these systems need to do their jobs. High-speed, high-bandwidth connections are essential to interconnect data centers and supercomputers that host AI systems and allow them to analyze a massive volume of data.

In addition, optical transceivers are essential for the development of AI-based edge computing, which relocates compute resources to the network’s periphery. Edge computing enables quick processing of data from Internet-of-Things (IoT) devices like sensors and cameras, which helps minimize latency and shorten reaction times.

400 Gbps links are becoming the standard across data center interconnects, but providers are already considering the next steps. LightCounting forecasts significant growth in the shipments of dense-wavelength division multiplexing (DWDM) ports with data rates of 600G, 800G, and beyond in the next five years. We discuss these solutions in greater detail in our article about the roadmap to 800G and beyond.

Mobile networks now and in the future will consist of a massive number of devices, software applications, and technologies. Self-managed, zero-touch automated networks will be required to handle all these new devices and use cases. Realizing this full network automation requires two vital components.

These goals require smart optical equipment and components that provide comprehensive telemetry data about their status and the fiber they are connected to. The AI-controlled centralized management and orchestration layer can then use this data for remote management and diagnostics. We discuss this topic further in our previous article on remote provisioning, diagnostics, and management.

For example, a smart optical transceiver that fits this centralized AI-management model should relay data about fiber conditions to the AI controller. Such monitoring is not limited to finding major faults or cuts in the fiber; it also covers smaller degradations or delays that stem from age, increased stress in the link due to higher traffic, and nonlinear optical effects. A transceiver that relays all this data allows the AI controller to make better decisions about how to route traffic through the network.
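Here is a minimal sketch of the kind of telemetry record a smart transceiver might relay, and of how a controller could tell an outright cut apart from gradual degradation. The field names, the -40 dBm loss-of-signal level, and the drift thresholds are all hypothetical; real modules expose their monitors through management interfaces such as CMIS, whose register layout this sketch does not model.

```python
from dataclasses import dataclass

LOSS_OF_SIGNAL_DBM = -40.0  # hypothetical loss-of-signal level


@dataclass
class FiberTelemetry:
    rx_power_dbm: float   # received optical power
    pre_fec_ber: float    # bit error rate before error correction
    latency_us: float     # measured delay across the link (hypothetical field)


def detect_degradation(baseline, current, max_power_drop_db=2.0,
                       max_ber_ratio=10.0, max_latency_increase_us=5.0):
    """List issues in `current` relative to a healthy `baseline` reading."""
    issues = []
    if current.rx_power_dbm < LOSS_OF_SIGNAL_DBM:
        issues.append("loss of signal: possible fiber cut")
        return issues
    if baseline.rx_power_dbm - current.rx_power_dbm > max_power_drop_db:
        issues.append("received power drop: aging or added loss on the fiber")
    if current.pre_fec_ber > baseline.pre_fec_ber * max_ber_ratio:
        issues.append("pre-FEC BER rising: more noise or nonlinear effects")
    if current.latency_us - baseline.latency_us > max_latency_increase_us:
        issues.append("added delay on the link")
    return issues


baseline = FiberTelemetry(rx_power_dbm=-10.0, pre_fec_ber=1e-5, latency_us=100.0)
today = FiberTelemetry(rx_power_dbm=-13.0, pre_fec_ber=2e-4, latency_us=100.0)
for issue in detect_degradation(baseline, today):
    print(issue)
```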

After relaying data to the AI management system, a smart pluggable transceiver must also switch parameters to adapt to different use cases and instructions given by the controller.

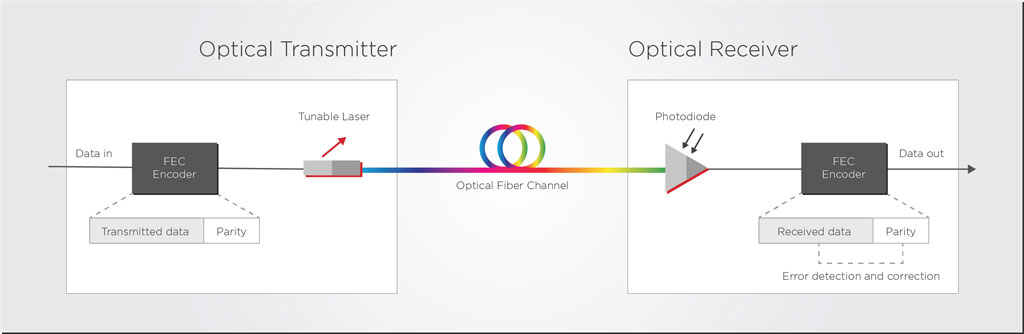

Let’s look at an example of forward error correction (FEC). FEC makes the coherent link much more tolerant to noise than a direct detect system and enables much longer reach and higher capacity. In other words, FEC algorithms allow the DSP to enhance the link performance without changing the hardware. This enhancement is analogous to imaging cameras: image processing algorithms allow the lenses inside your phone camera to produce a higher-quality image.
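To see how FEC trades redundant bits for error tolerance, here is the textbook Hamming(7,4) code in Python. It is far simpler than the FEC used in coherent DSPs (and not what any transceiver ships), but it shows the principle: three parity bits protect four data bits, and the receiver can locate and flip back any single corrupted bit without retransmission.

```python
# Hamming(7,4): bit positions 1..7, parity bits at positions 1, 2 and 4.


def encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_pos = s1 + 2 * s2 + 4 * s3  # syndrome = 1-based error position, 0 if none
    if error_pos:
        c[error_pos - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
received = encode(data)
received[5] ^= 1                # channel noise flips one bit
print(decode(received) == data)  # True
```

The price is overhead: 3 parity bits for every 4 data bits. Coherent FEC codes achieve much stronger correction with far less relative overhead, which is why they can extend reach and capacity without changing the hardware.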

A smart transceiver and DSP could switch among different FEC algorithms to adapt to network performance and use cases. Let’s look at the case of upgrading a long metro link of 650 km running at 100 Gbps with open FEC. The operator needs to increase that link capacity to 400 Gbps, but open FEC could struggle to provide the necessary link performance. However, if the transceiver can be remotely reconfigured to use a proprietary FEC standard, the transceiver will be able to handle this upgraded link.
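The FEC switch in this example could be automated with a simple selection rule: pick the lowest-overhead FEC mode whose correction threshold still clears the measured pre-FEC bit error rate with some margin. In the Python sketch below, the mode names, thresholds, and overheads are hypothetical placeholders, not the specifications of open FEC or any proprietary FEC.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FecMode:
    name: str
    pre_fec_ber_threshold: float  # highest input BER the code can clean up
    overhead: float               # redundancy as a fraction of the payload


# Hypothetical FEC menu; names and numbers are illustrative only.
FEC_MODES = [
    FecMode("open-fec-light", 1e-3, 0.07),
    FecMode("open-fec-strong", 1e-2, 0.15),
    FecMode("proprietary-sd-fec", 3e-2, 0.27),
]


def choose_fec(measured_pre_fec_ber, margin=2.0, modes=FEC_MODES):
    """Pick the lowest-overhead mode that still leaves a safety margin."""
    usable = [m for m in modes
              if measured_pre_fec_ber * margin <= m.pre_fec_ber_threshold]
    if not usable:
        return None  # no FEC mode closes the link; another change is needed
    return min(usable, key=lambda m: m.overhead)


for ber in (2e-4, 4e-3, 8e-3, 5e-2):
    mode = choose_fec(ber)
    print(ber, "->", mode.name if mode else "no FEC mode closes the link")
```

After the 400 Gbps upgrade, the controller would feed the higher pre-FEC BER from telemetry into a rule like this and push the resulting mode to the transceiver.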

Reconfigurable transceivers can also auto-configure links to deal with specific network conditions, especially in brownfield links. Let’s return to the fiber monitoring subject we discussed in the previous section. If telemetry data indicates good-quality fiber, a transceiver can change its modulation scheme or lower the power of its semiconductor optical amplifier (SOA). Conversely, if the fiber quality is poor, the transceiver can transmit with a more limited modulation scheme or higher power to reduce bit errors. If the smart pluggable detects that the fiber is relatively short, the laser transmitter power or the DSP power consumption could be scaled down to save energy.
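The adaptation rules above can be written as a small policy function. This Python sketch is hypothetical: it uses OSNR as the fiber-quality metric, and the thresholds, modulation formats, and "low-power" DSP mode are illustrative choices, not settings of any real module. It also ignores that changing the modulation format changes the line rate or symbol rate, which a real controller would have to coordinate at both ends of the link.

```python
from dataclasses import dataclass


@dataclass
class LinkSettings:
    modulation: str
    soa_power: str
    dsp_mode: str


def adapt(osnr_db, fiber_km, good_osnr_db=20.0, poor_osnr_db=15.0, short_km=20.0):
    """Map telemetry to transceiver settings (all thresholds hypothetical)."""
    if osnr_db >= good_osnr_db:
        # Clean fiber: denser modulation, and the SOA can be turned down.
        modulation, soa = "16QAM", "low"
    elif osnr_db <= poor_osnr_db:
        # Noisy fiber: a more robust format and more optical power.
        modulation, soa = "QPSK", "high"
    else:
        modulation, soa = "8QAM", "medium"
    # Short links need less dispersion compensation, so the DSP can run in
    # a lighter (hypothetical) low-power mode to save energy.
    dsp = "low-power" if fiber_km < short_km else "full"
    return LinkSettings(modulation, soa, dsp)


print(adapt(osnr_db=22.0, fiber_km=80.0))
print(adapt(osnr_db=12.0, fiber_km=10.0))
```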

Optical networks will need artificial intelligence and machine learning to scale more efficiently and affordably to handle the increased traffic and connected devices. Conversely, AI systems will also need faster pluggables than before to acquire data and make decisions more quickly. Pluggables that fit this new AI era must be fast, smart, and adapt to multiple use cases and conditions. They will need to scale up to speeds beyond 400G and relay monitoring data back to the AI management layer in the central office. The AI management layer can then program transceiver interfaces from this telemetry data to change parameters and optimize the network.

Join EFFECT Photonics from March 7 to 9, 2023, at OFC in San Diego, California, the world’s largest event for optical networking and communications, to discover firsthand how our technology is transforming where light meets digital. Visit Booth #2423 to learn how EFFECT Photonics’ full portfolio of optical building blocks is enabling 100G coherent to the network edge and next-generation applications.

Build Your Own 100G ZR Coherent Module

At this year’s OFC, see how easy and affordable it can be to upgrade existing 10G links to a more scalable 100G coherent solution! Try your hand at constructing a 100G ZR coherent module specifically designed for the network edge utilizing various optical building blocks including tunable lasers, DSPs and optical subassemblies.

Tune Your Own PIC (Photonic Integrated Circuit)

Be sure to stop by Booth #2423 to tune your own PIC with EFFECT Photonics technology. In this interactive and dynamic demonstration, participants can explore first-hand the power of EFFECT Photonics solutions utilizing various parameters and product configurations.

Our experts are also available to discuss customer needs and how EFFECT Photonics might be able to assist. To schedule a meeting, please email marketing@effectphotonics.com.

Outside of communications applications, photonics can play a major role in sensing and imaging applications. The most well-known of these sensing applications is Light Detection and Ranging (LIDAR), which is the light-based cousin of RADAR systems that use radio waves.

To put it simply, LIDAR involves sending out a pulse of light, receiving it back, and using a computer to study how the environment changed that pulse. It’s a simple but quite powerful concept.

If we send pulses of light to a wall and measure how long they take to come back, we know how far away that wall is. That is the basis of time-of-flight (TOF) LIDAR. If we instead send out light whose frequency is continuously swept (chirped) and compare the returning light with the outgoing light, we can tell both where an object is and whether it is moving towards or away from us. That is next-generation LIDAR, known as frequency-modulated continuous-wave (FMCW) LIDAR. These technologies are already used in self-driving cars to determine the location and distance of other cars.
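Both ranging principles reduce to short formulas. Time of flight halves the round-trip path, d = c·t/2. An FMCW system with a linear chirp of bandwidth B over duration T sees a beat frequency f_b = (B/T)·(2R/c) between outgoing and returning light, and a moving target adds a Doppler shift f_D = 2v/λ. The Python sketch below evaluates these relations with illustrative numbers; it treats range and Doppler separately, whereas practical FMCW systems often use up- and down-chirps to disentangle them.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_range_m(round_trip_s):
    """Time of flight: light travels out and back, so halve the path."""
    return C * round_trip_s / 2


def fmcw_beat_hz(range_m, chirp_bandwidth_hz, chirp_duration_s):
    """Beat frequency of a linear chirp reflected from a target at range_m."""
    slope = chirp_bandwidth_hz / chirp_duration_s  # Hz per second
    return slope * (2 * range_m / C)


def fmcw_range_m(beat_hz, chirp_bandwidth_hz, chirp_duration_s):
    """Invert the beat frequency back into distance."""
    return C * beat_hz * chirp_duration_s / (2 * chirp_bandwidth_hz)


def doppler_velocity_mps(doppler_shift_hz, wavelength_m):
    """Radial velocity from the Doppler shift (positive = approaching)."""
    return doppler_shift_hz * wavelength_m / 2


print(f"{tof_range_m(1e-6):.1f} m")                          # 149.9 m
print(f"{fmcw_beat_hz(150.0, 1e9, 10e-6) / 1e6:.1f} MHz")    # 100.1 MHz
print(f"{doppler_velocity_mps(38.7e6, 1550e-9):.1f} m/s")    # 30.0 m/s
```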

Despite their usefulness, the wider implementation of LIDAR systems is limited by their size, weight, and power (SWAP) requirements. Or, to put it bluntly, they are bulky and expensive. For example, maybe you have seen pictures and videos of self-driving cars with a large LIDAR sensor and scanner on the roof of the car, as in the image below.

Making LIDAR systems more affordable and lighter requires integrating the optical components more tightly and manufacturing them at a higher volume. Unsurprisingly, this sounds like a problem that could be solved by integrated photonics.

Back in 2019, Tesla CEO Elon Musk famously said that “Anyone relying on LIDAR is doomed”. And his scepticism had some substance to it. LIDAR sensors were clunky and expensive, and it wasn’t clear that they would be a better solution than just using regular cameras with huge amounts of visual analysis software. However, the incentive to dominate the future of the automotive sector was too big, and a technology arms race had already begun to miniaturize LIDAR systems into a single photonic chip.

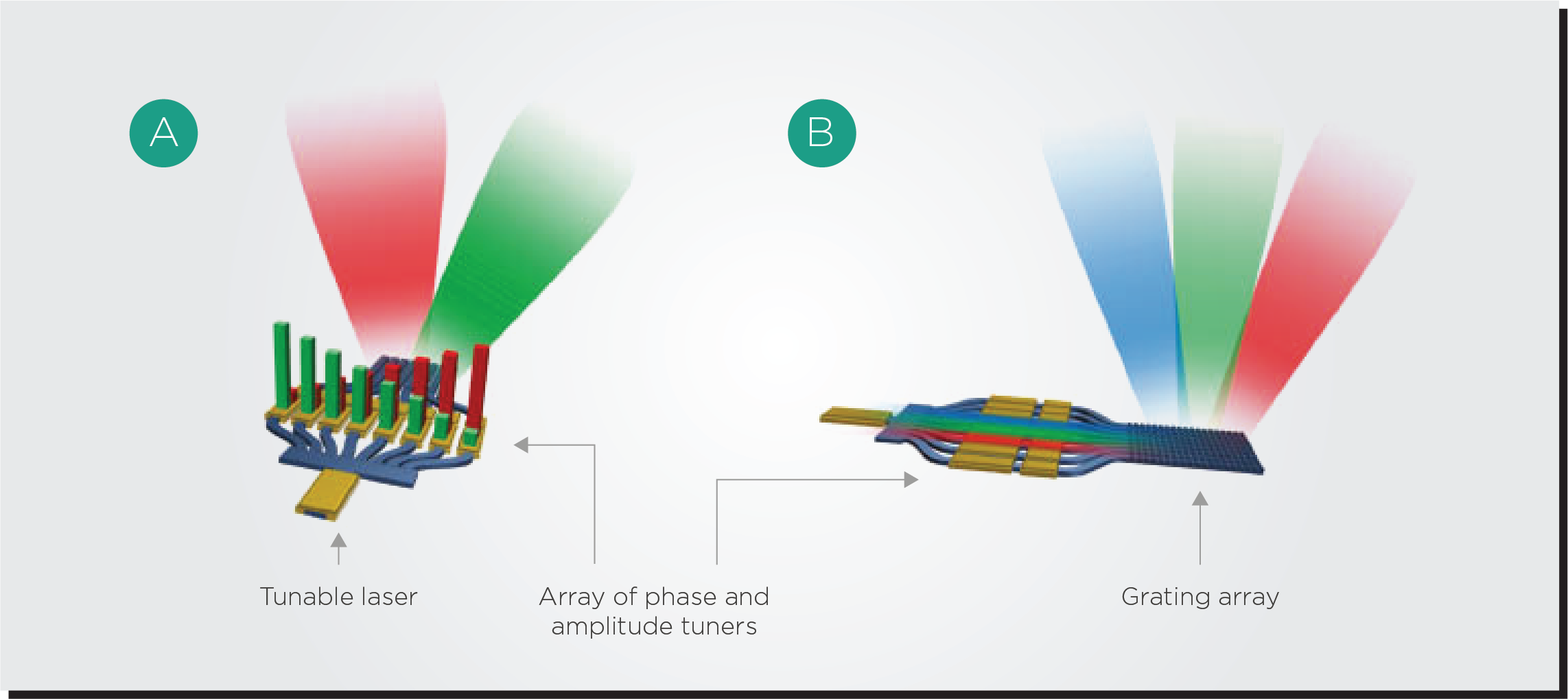

Let’s look at a key example. A typical LIDAR system requires a mechanical system that moves the light source around to scan the environment. This could be as simple as a 360-degree rotating LIDAR scanner or small scanning mirrors that steer the beam. However, an even better solution would be a LIDAR scanner with no moving parts that could be manufactured at massive scale in a standard semiconductor process.

This is where optical phased array (OPA) systems come in. An OPA system splits the output of a tunable laser into multiple channels and applies a different time delay (phase shift) to each channel. The light from the channels then recombines, and depending on the delays assigned, the resulting light beam comes out at a different angle. In other words, an OPA system can steer a beam of light from a semiconductor chip without any moving parts.
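For a uniform linear array with emitter pitch d, applying a constant phase step Δφ between neighboring channels tilts the emitted beam to the angle θ where sin θ = λ·Δφ / (2π·d). The Python sketch below computes that angle and checks it against the array factor, the coherent sum of all emitters' fields. The 1.55 µm wavelength, 2 µm pitch, and 16 channels are illustrative assumptions, not parameters of any particular OPA.

```python
import cmath
import math


def steering_angle_deg(wavelength_m, pitch_m, phase_step_rad):
    """Beam angle produced by a constant phase step between neighbors."""
    s = wavelength_m * phase_step_rad / (2 * math.pi * pitch_m)
    if abs(s) > 1:
        raise ValueError("phase step outside the array's steering range")
    return math.degrees(math.asin(s))


def phase_step_for_angle(wavelength_m, pitch_m, angle_deg):
    """Phase step (radians) needed to point the beam at angle_deg."""
    return 2 * math.pi * pitch_m * math.sin(math.radians(angle_deg)) / wavelength_m


def array_factor(n_channels, wavelength_m, pitch_m, phase_step_rad, angle_deg):
    """Relative far-field intensity: |sum of all emitters' fields|^2."""
    k = 2 * math.pi / wavelength_m
    # Path-length phase at this angle minus the phase applied on chip.
    psi = k * pitch_m * math.sin(math.radians(angle_deg)) - phase_step_rad
    field = sum(cmath.exp(1j * m * psi) for m in range(n_channels))
    return abs(field) ** 2


WAVELENGTH, PITCH, N = 1.55e-6, 2e-6, 16
step = phase_step_for_angle(WAVELENGTH, PITCH, 10.0)
print(f"phase step {step:.3f} rad -> {steering_angle_deg(WAVELENGTH, PITCH, step):.1f} deg")
for angle in (0.0, 5.0, 10.0, 15.0):
    print(angle, round(array_factor(N, WAVELENGTH, PITCH, step, angle), 1))
```

A pitch larger than half a wavelength, as in this example, also produces grating lobes (extra beams at other angles), a known challenge in OPA design.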

There is still plenty of development required to bring OPAs to maturity. Victor Dolores Calzadilla, a researcher from the Eindhoven University of Technology (TU/e), explains that “The OPA is the biggest bottleneck for achieving a truly solid-state, monolithic lidar. Many lidar building blocks, such as photodetectors and optical amplifiers, were developed years ago for other applications, like telecommunication. Even though they’re generally not yet optimized for lidar, they are available in principle. OPAs were not needed in telecom, so work on them started much later. This component is the least mature.”

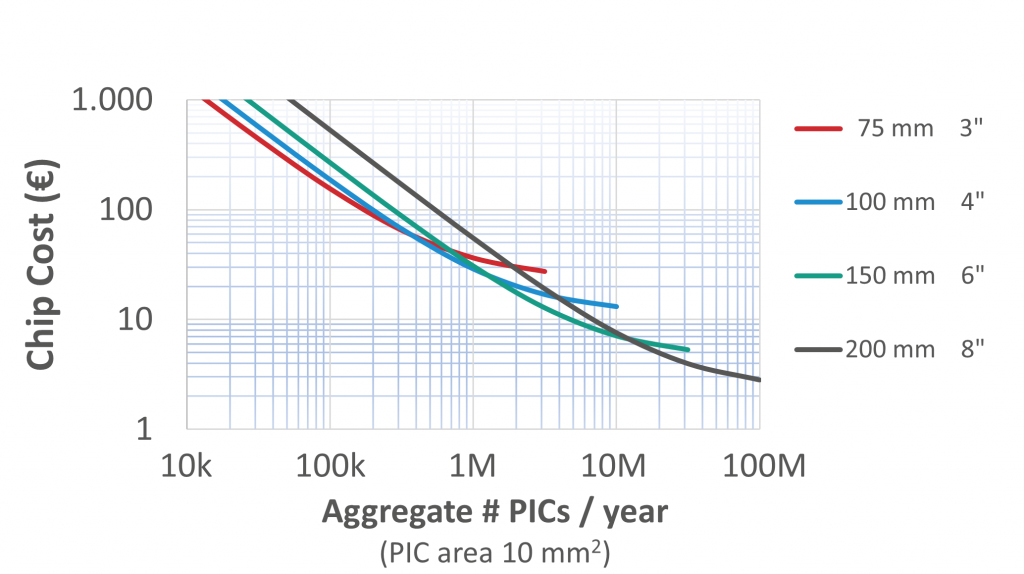

Wafer-scale photonics manufacturing demands a higher upfront investment, but the resulting high-volume production line drives down the cost per device. This economy-of-scale principle is the same one behind electronics manufacturing, and it must also be applied to photonics. The more optical components we can integrate into a single chip, the more the price of each component can decrease. The more optical system-on-chip (SoC) devices fit on a single wafer, the more the price of each SoC can decrease.
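The last point can be made concrete with a common first-order estimate of how many whole dies fit on a round wafer: wafer area divided by die area, minus a correction for partial dies lost at the edge. In the sketch below, the 100 mm (4-inch) wafer, the wafer cost, and the die sizes are all hypothetical round numbers, and yield is ignored.

```python
import math


def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """First-order estimate of whole dies on a round wafer (yield not included).

    Wafer area divided by die area, minus a correction for partial dies lost
    around the wafer edge.
    """
    d = wafer_diameter_mm
    estimate = math.pi * (d / 2) ** 2 / die_area_mm2 - math.pi * d / math.sqrt(2 * die_area_mm2)
    return max(0, math.floor(estimate))


WAFER_DIAMETER_MM = 100.0  # assumed 4-inch wafer
WAFER_COST_EUR = 10_000.0  # hypothetical cost of one fully processed wafer

for die_area in (25.0, 16.0, 4.0):  # hypothetical chip sizes in mm^2
    n = gross_dies_per_wafer(WAFER_DIAMETER_MM, die_area)
    print(f"{die_area:>5} mm^2 chip: {n:5d} dies/wafer, about EUR {WAFER_COST_EUR / n:,.0f} each")
```

Because the wafer cost is roughly fixed, shrinking or denser-integrating the chip spreads that cost over more devices.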

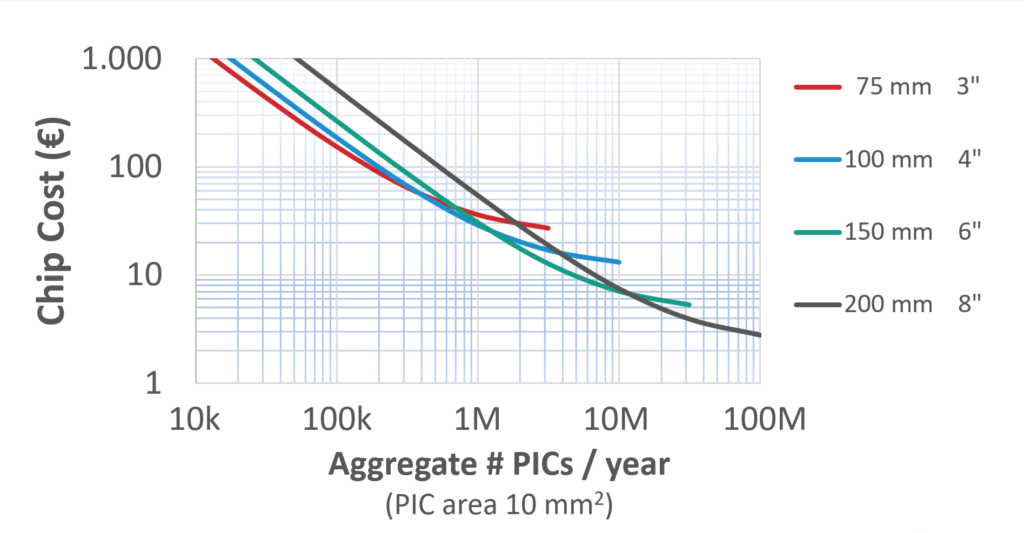

Researchers at the Eindhoven University of Technology and the JePPIX consortium have modelled how this economy-of-scale principle would apply to photonics. If production volumes can increase from a few thousand chips per year to a few million, the price per optical chip can decrease from thousands of Euros to mere tens of Euros. This must be the goal for the LIDAR and automotive industries.
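A minimal way to see why volume matters so much is to spread a fixed yearly cost (fab time, masks, engineering) over the number of chips produced and add a per-chip variable cost. The figures below are hypothetical round numbers, not the inputs of the JePPIX model, but they reproduce the same thousands-to-tens-of-Euros trend.

```python
def cost_per_chip(fixed_cost_eur: float, variable_cost_eur: float, volume: int) -> float:
    """Average cost per chip when a fixed yearly cost is spread over the yearly volume."""
    if volume <= 0:
        raise ValueError("volume must be positive")
    return fixed_cost_eur / volume + variable_cost_eur


FIXED_EUR_PER_YEAR = 5_000_000.0  # hypothetical fab, mask, and engineering costs
VARIABLE_EUR_PER_CHIP = 10.0      # hypothetical wafer, processing, and test cost per chip

for volume in (2_000, 20_000, 200_000, 2_000_000):
    unit = cost_per_chip(FIXED_EUR_PER_YEAR, VARIABLE_EUR_PER_CHIP, volume)
    print(f"{volume:>9,} chips/year -> EUR {unit:,.2f} per chip")
```

At low volume the fixed cost dominates; at high volume the price approaches the variable cost per chip.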

By integrating all optical components on a single chip, we also shift the complexity from the assembly process to the much more efficient and scalable semiconductor wafer process. Assembling and packaging a device by interconnecting multiple photonic chips increases assembly complexity and costs. On the other hand, combining and aligning optical components on a wafer at a high volume is much easier, which drives down the device’s cost.

Another challenge for photonics technologies is that they must meet the parameters and specifications of the automotive sector, which are often harsher than those of the telecom/datacom sector. For example, a target temperature range of −40°C to 125°C is often required, which is much broader than the industrial temperature range (−40°C to 85°C) typically used in the telecom sector. The packaging of the PIC and its coupling to fiber and free space are particularly sensitive to these temperature changes.

| Temperature Standard | Min (°C) | Max (°C) |
|---|---|---|
| Commercial (C-temp) | 0 | 70 |
| Extended (E-temp) | -20 | 85 |
| Industrial (I-temp) | -40 | 85 |
| Automotive / Full Military | -40 | 125 |
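The table can be read as a containment check: a temperature grade only covers a requirement if both ends of the required range fall inside it. The sketch below encodes the table's values as given; the function name is illustrative.

```python
# Temperature grades from the table above, as (min, max) in degrees Celsius.
TEMPERATURE_GRADES = {
    "Commercial (C-temp)": (0, 70),
    "Extended (E-temp)": (-20, 85),
    "Industrial (I-temp)": (-40, 85),
    "Automotive / Full Military": (-40, 125),
}


def grades_covering(t_min_c: float, t_max_c: float) -> list[str]:
    """Return the grades whose range fully contains [t_min_c, t_max_c]."""
    return [
        name
        for name, (low, high) in TEMPERATURE_GRADES.items()
        if low <= t_min_c and t_max_c <= high
    ]


print(grades_covering(-40, 85))   # a typical industrial telecom requirement
print(grades_covering(-40, 125))  # the automotive target mentioned in the text
```

Only the automotive grade covers the −40°C to 125°C target, which is why industrial-grade telecom packaging cannot simply be reused.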

Fortunately, a substantial body of knowledge already exists to make integrated photonics compatible with harsh environments like those of outer space. After all, photonic integrated circuits (PICs) use similar materials to their electronic counterparts, which have already been qualified for space and automotive applications. Commercial solutions, such as those offered by PHIX Photonics Assembly, Technobis IPS, and the PIXAPP Photonic Packaging Pilot Line, are now available.

Photonics technology must be built on a wafer-scale process that can produce millions of chips in a month. When we can show the market that photonics can be as easy to use as electronics, that will trigger a revolution in the use of photonics worldwide.

The broader availability of photonic devices will take photonics into new applications, such as those of LIDAR and the automotive sector. With a growing integrated photonics industry, LIDAR can become lighter, avoid moving parts, and be manufactured in much larger volumes that reduce the cost of LIDAR devices. Integrated photonics is the avenue for LIDAR to become more accessible to everyone.

Smaller data centers placed locally have the potential to minimize latency, overcome inconsistent connections, and store and compute data closer to the end user. These benefits are causing the global market for edge data centers to explode, with PwC predicting that it will more than triple from $4 billion in 2017 to $13.5 billion in 2024.
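The PwC forecast quoted above can be sanity-checked with the standard compound-annual-growth-rate formula, (end / start)^(1 / years) − 1. The values are the figures from the text in billions of dollars; the helper name is ours.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


growth_factor = 13.5 / 4.0
rate = cagr(4.0, 13.5, 2024 - 2017)
print(f"growth factor {growth_factor:.2f}x, implied CAGR {rate:.1%}")
```

A growth factor of about 3.4 over seven years corresponds to roughly 19% growth per year.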

As edge data centers become more common, the issue of interconnecting them becomes more prominent. This situation motivated the Optical Internetworking Forum (OIF) to create the 400ZR and ZR+ standards for pluggable modules. With modules small enough to pack a router faceplate densely, the datacom sector could profit from a 400ZR solution for high-capacity data center interconnects of up to 80 km. Cignal AI forecasts that 400ZR shipments will dominate edge applications, as shown in the figure below.

The 400ZR standard has made coherent technology and dense wavelength division multiplexing (DWDM) the dominant solution in the metro data center interconnects (DCIs) space. Datacom provider operations teams found the simplicity of coherent pluggables very attractive. There was no need to install and maintain additional amplifiers and compensators as in direct detect technology. A single coherent transceiver plugged into a router could fulfill the requirements.

However, obstacles still prevent coherent technology from becoming dominant in shorter-reach DCI links at the campus (< 10 km) and intra-datacenter (< 2 km) levels. These spaces require more optical links and transceivers, and coherent technology is still considered too power-hungry and expensive to become the de facto solution here.

Fortunately, there are avenues for coherent technology to overcome these barriers. By embracing multi-laser arrays, DSP co-design, and electronic ecosystems, coherent technology can mature and become a viable solution for every data center interconnect scenario.

Earlier this year, Intel Labs demonstrated an eight-wavelength laser array fully integrated on a silicon wafer. Such milestones are essential for optical transceivers because laser arrays enable multi-channel transceivers that are more cost-effective when scaling up to higher speeds.

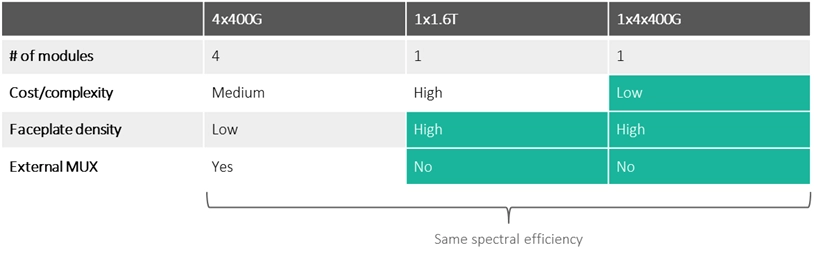

Let’s say we need an intra-DCI link with 1.6 Terabits/s of capacity. There are three ways we could implement it:

Multi-laser arrays and multi-channel solutions will become increasingly necessary to increase link capacity in coherent systems. They do not need more slots in the router faceplate, and they avoid the higher cost and complexity of increasing the speed of a single channel.
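The trade-off above can be made concrete by counting faceplate slots and per-channel speed for three ways of reaching 1.6 Tb/s: several single-channel modules, one module with a single faster channel, and one module with a multi-laser array. These option labels and the 400 Gb/s baseline channel rate are assumptions for illustration, not a product specification.

```python
from dataclasses import dataclass

TARGET_GBPS = 1600  # the 1.6 Tb/s intra-DCI link from the example


@dataclass
class LinkOption:
    name: str
    transceivers: int              # router faceplate slots used
    channels_per_transceiver: int  # lasers (wavelengths) inside each module

    def rate_per_channel_gbps(self) -> float:
        return TARGET_GBPS / (self.transceivers * self.channels_per_transceiver)


# Hypothetical options, assuming 400 Gb/s channels as the baseline rate.
OPTIONS = [
    LinkOption("four single-channel 400G modules", transceivers=4, channels_per_transceiver=1),
    LinkOption("one module, one 1.6T channel", transceivers=1, channels_per_transceiver=1),
    LinkOption("one module, four-laser array", transceivers=1, channels_per_transceiver=4),
]

for option in OPTIONS:
    print(f"{option.name}: {option.transceivers} slot(s), "
          f"{option.rate_per_channel_gbps():.0f} Gb/s per channel")
```

Only the multi-laser option keeps both the slot count and the per-channel rate low.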

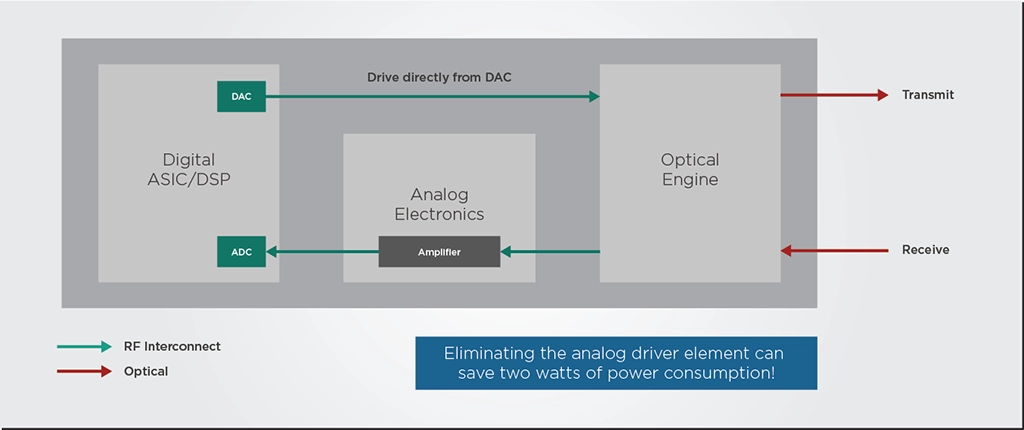

Transceiver developers often source their DSP, laser, and optical engine from different suppliers, so all these chips are designed separately from each other. This setup reduces the time to market and simplifies the research and design processes but comes with trade-offs in performance and power consumption.

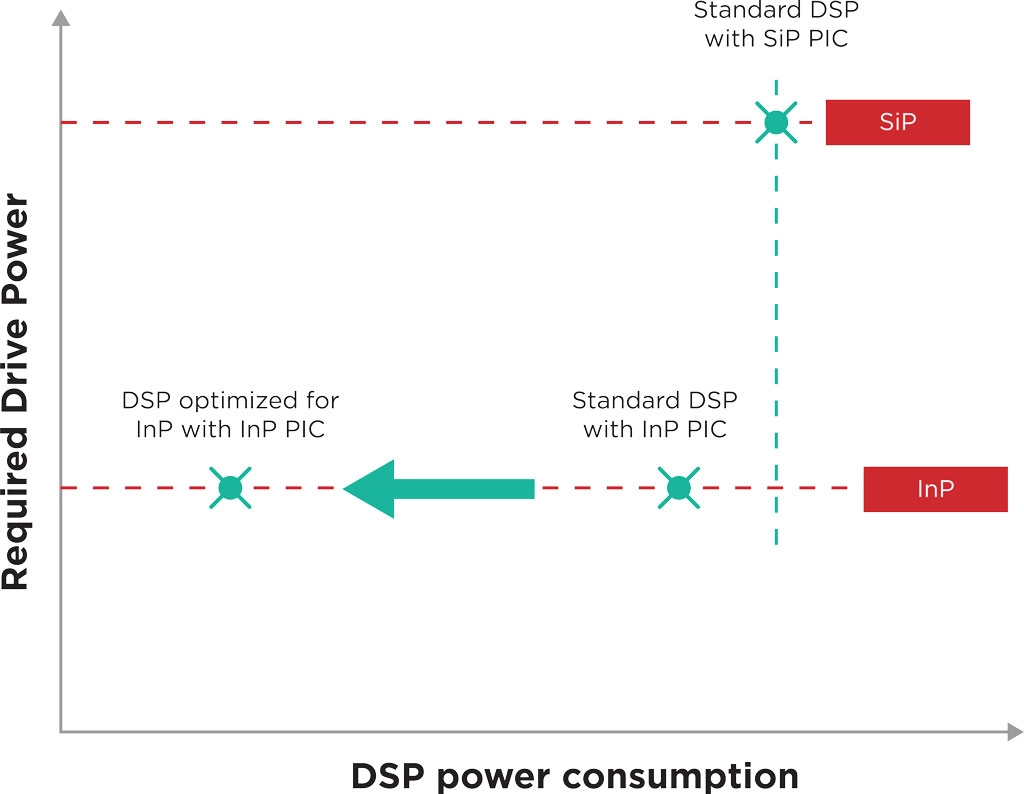

In such cases, the DSP is like a Swiss army knife: a jack of all trades designed for different kinds of optical engines, but a master of none. For example, current DSPs are designed to be agnostic to the material platform of the photonic integrated circuit (PIC) they are connected to, which can be indium phosphide (InP) or silicon. Thus, they do not exploit the intrinsic advantages of these material platforms. Co-designing the DSP chip alongside the PIC can lead to a much better fit between these components.

To illustrate the impact of co-designing PIC and DSP, let’s look at an example. A PIC and a standard platform-agnostic DSP typically operate with signals of differing intensities, so they need some RF analog electronic components to “talk” to each other. This signal power conversion overhead constitutes roughly 2-3 Watts or about 10-15% of transceiver power consumption.

However, the modulator of an InP PIC can run at a lower voltage than a silicon modulator. If this InP PIC and the DSP are designed and optimized together instead of using a standard DSP, the PIC could be designed to run at a voltage compatible with the DSP’s signal output. This way, the optimized DSP could drive the PIC directly without needing the RF analog driver, doing away with most of the power conversion overhead we discussed previously.
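A quick check of the figures quoted earlier: pairing the low and high ends of the ranges (2 W as 10%, 3 W as 15%) suggests a transceiver drawing roughly 20 W. The sketch below assumes a 20 W module, a 2.5 W driver overhead (the midpoint of 2-3 W), and a hypothetical 80% of that overhead removed, since the text only says "most of" it; none of these are measured values.

```python
def implied_total_power_w(overhead_w: float, overhead_share: float) -> float:
    """Total module power implied by an overhead and its share of the total."""
    return overhead_w / overhead_share


def power_after_codesign(total_w: float, overhead_w: float, fraction_removed: float) -> float:
    """Module power once co-design removes part of the RF driver overhead."""
    return total_w - overhead_w * fraction_removed


# Both ends of the quoted ranges point to a module of roughly 20 W.
print(implied_total_power_w(2.0, 0.10), implied_total_power_w(3.0, 0.15))

TOTAL_W = 20.0    # assumed module power
OVERHEAD_W = 2.5  # assumed midpoint of the 2-3 W driver overhead
FRACTION = 0.8    # hypothetical share of the overhead removed
after = power_after_codesign(TOTAL_W, OVERHEAD_W, FRACTION)
print(f"{TOTAL_W:.1f} W -> {after:.1f} W ({100 * (TOTAL_W - after) / TOTAL_W:.0f}% saving)")
```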

The optimized DSP could also be programmed to perform additional signal conditioning that minimizes the nonlinear optical effects of the InP material, which can reduce noise and improve performance.

Making coherent optical transceivers more affordable is a matter of volume production. As discussed in a previous article, if PIC production volumes can increase from a few thousand chips per year to a few million, the price per optical chip can decrease from thousands of Euros to mere tens of Euros. Achieving this production goal requires photonics manufacturing chains to learn from electronics and leverage existing electronics manufacturing processes and ecosystems.

While vertically-integrated PIC development has its strengths, a fabless model in which developers outsource their PIC manufacturing to a large-scale foundry is the simplest way to scale to production volumes of millions of units. Fabless PIC developers can remain flexible and lean, relying on trusted large-scale manufacturing partners to guarantee a secure and high-volume supply of chips. Furthermore, the fabless model allows photonics developers to concentrate their R&D resources on their end market and designs instead of costly fabrication facilities.

Further progress must also be made in the packaging, assembly, and testing of photonic chips. While these processes are only a small part of the cost of electronic systems, they account for a large share of the cost of photonic systems. To become more accessible and affordable, the photonics manufacturing chain must become more automated and standardized, moving towards the proven and scalable packaging methods common in the electronics industry.

If you want to know more about how photonics developers can leverage electronic ecosystems and methods, we recommend you read our in-depth piece on the subject.

Coherent transceivers are already established as the solution for metro Data Center Interconnects (DCIs), but they need to become more affordable and less power-hungry to fit the intra- and campus DCI application cases. Fortunately, there are several avenues for coherent technology to overcome these cost and power consumption barriers.

Multi-laser arrays can avoid the higher cost and complexity of increasing capacity with just a single transceiver channel. Co-designing the optics and electronics can allow the electronic DSP to exploit the intrinsic advantages of specific photonics platforms such as indium phosphide. Finally, leveraging electronic ecosystems and processes is vital to increase the production volumes of coherent transceivers and make them more affordable.

By embracing these pathways to progress, coherent technology can mature and become a viable solution for every data center interconnect scenario.

Like every other telecom network, cable networks had to change to meet the growing demand for data. These demands led to the development of hybrid fiber-coaxial (HFC) networks in the 1990s and 2000s. In these networks, optical fibers travel from the cable company hub and terminate in optical nodes, while coaxial cable connects the last few hundred meters from the optical node to nearby houses. Most of these connections were asymmetrical, giving customers more capacity to download data than upload.

That being said, the way we use the Internet has evolved over the last ten years. Users now require more upstream bandwidth thanks to the growth of social media, online gaming, video calls, and independent content creation such as video blogging. The DOCSIS standards that govern data transmission over coaxial cables have advanced quickly to meet these additional needs. For instance, the current DOCSIS 4.0 specification permits full-duplex transmission with symmetrical upstream and downstream channels.

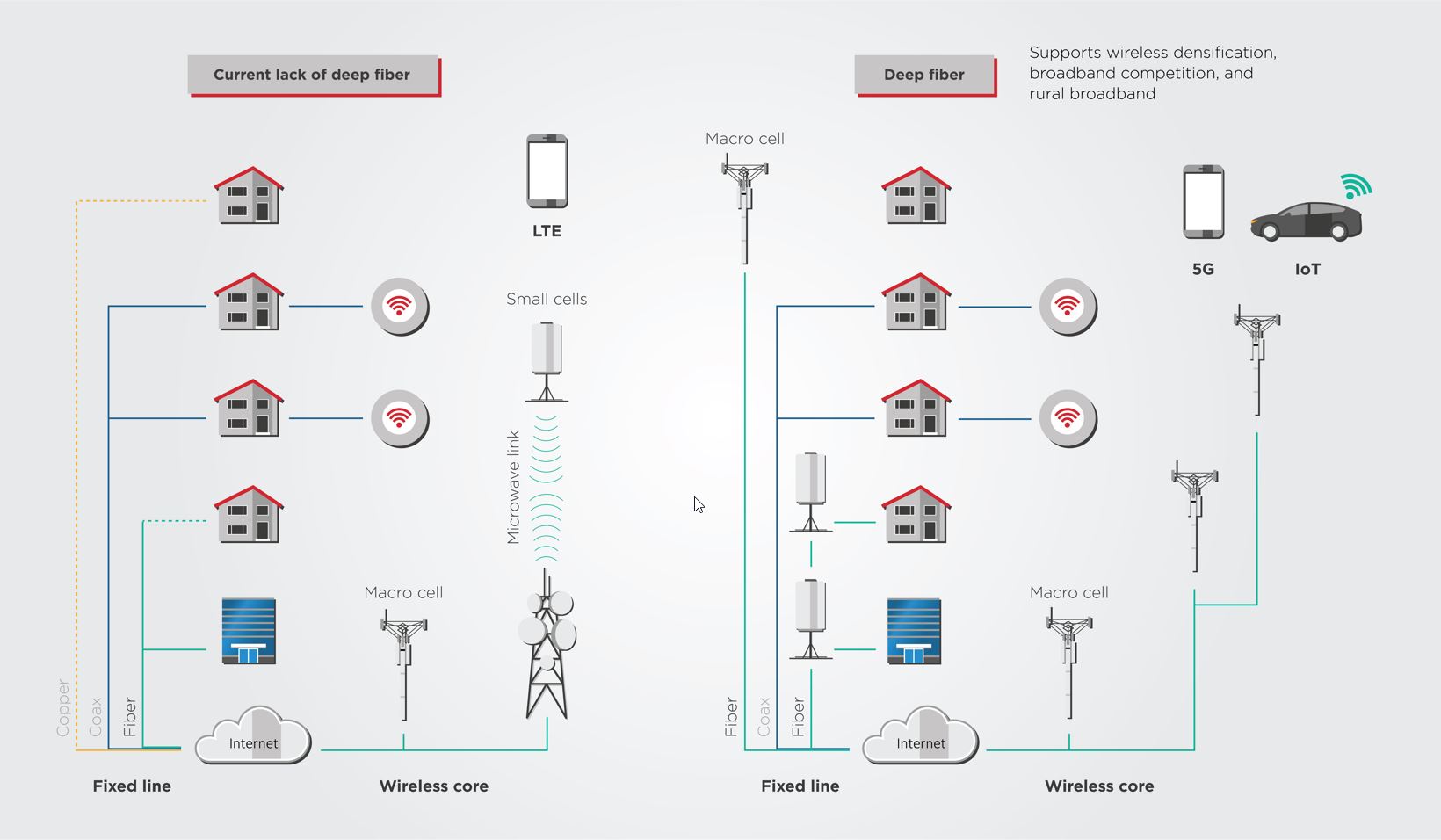

Fiber-to-the-home (FTTH) systems, which bring fiber right to the customer’s door, are also proliferating and enabling faster Gigabit connections than HFC networks. Overall, extending optical fiber deeper into communities (see Figure 1 for a graphic example) is a critical economic driver, increasing connectivity for the rural and underserved. These investments also lead to more robust competition among cable companies and a denser, higher-performance wireless network.

Passive optical networks (PONs) are a vital technology to cost-effectively expand the use of optical fiber within access networks and make FTTH systems more viable. By creating networks using passive optical splitters, PONs avoid the power consumption and cost of active components in optical networks such as electronics and amplifiers. PONs can be deployed in mobile fronthaul and mid-haul for macro sites, metro networks, and enterprise scenarios.

Despite some success from PONs, the cost of laying more fiber and the optical modems for the end users continue to deter carriers from using FTTH more broadly across their networks. This cost problem will only grow as the industry moves into higher bandwidths, such as 50G and 100G, requiring coherent technology in the modems.

Therefore, new technology and manufacturing methods are required to make PON technology more affordable and accessible. For example, wavelength division multiplexing (WDM)-PON allows providers to make the most of their existing fiber infrastructure. Meanwhile, simplified designs for coherent digital signal processors (DSPs) manufactured at large volumes can help lower the cost of coherent PON technology for access networks.

Previous PON solutions, such as Gigabit PON (GPON) and Ethernet PON (EPON), used time-division multiplexing (TDM) solutions. In these cases, the fiber was shared sequentially by multiple channels. These technologies were initially meant for the residential services market, but they scale poorly for the higher capacity of business or carrier services. PON standardization for 25G and 50G capacities is ready but sharing a limited bitrate among multiple users with TDM technology is an insufficient approach for future-proof access networks.

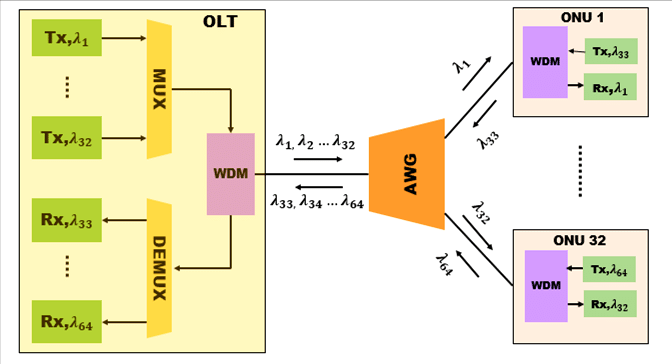

WDM-PON uses WDM multiplexing/demultiplexing technology to divide data signals into individual outgoing signals connected to buildings or homes. This hardware-based traffic separation gives customers the benefits of a secure and scalable point-to-point wavelength link. Since many wavelength channels share a single fiber, the carrier can keep fiber counts very low, yielding lower operating costs.
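The contrast between time-sharing and wavelength separation can be sketched with an idealised comparison of feeder fibers and the capacity each user can count on when everyone is active. The sketch assumes no protocol overhead, a 25 Gb/s rate, 32 users, and enough WDM channels for every user; the point-to-point row (one fiber per user) is added only as a reference for the fiber-count claim.

```python
from dataclasses import dataclass


@dataclass
class AccessOption:
    name: str
    feeder_fibers: int              # fibers leaving the hub for this group of users
    dedicated_gbps_per_user: float  # capacity per user when all users are active


def compare_access(users: int, rate_gbps: float) -> list[AccessOption]:
    """Idealised comparison: no protocol overhead, every user active at once,
    and (for WDM-PON) at least as many wavelengths as users."""
    return [
        AccessOption("point-to-point grey optics", users, rate_gbps),
        AccessOption("TDM-PON (time-shared)", 1, rate_gbps / users),
        AccessOption("WDM-PON (wavelength per user)", 1, rate_gbps),
    ]


for option in compare_access(users=32, rate_gbps=25.0):
    print(f"{option.name}: {option.feeder_fibers} fiber(s), "
          f"{option.dedicated_gbps_per_user:.2f} Gb/s per user")
```

Under these assumptions, WDM-PON keeps the single feeder fiber of TDM-PON while giving each user the full channel rate.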

WDM-PON has the potential to become the unified access and backhaul technology of the future, carrying data from residential, business, and carrier wholesale services on a single platform. We discussed this converged access solution in one of our previous articles. Its long-reach capability and bandwidth scalability enable carriers to serve more customers from fewer active sites without compromising security and availability.

Migration to the WDM-PON access network does require a carrier to reassess how it views its network topology. It is not only a move away from operating parallel purpose-built platforms for different user groups to one converged access and backhaul infrastructure. It is also a change from today’s power-hungry and labor-intensive switch and router systems to a simplified, energy-efficient, and transport-centric environment with more passive optical components.

As data demands continue to grow, the direct-detect optical technology used in prior PON standards will not be enough. The roadmap for this upgrade remains a bit blurry, with different carriers taking different paths. For example, future expansions might require 25G or 50G transceivers in the cable network, but the conventional optical band (the C-band) might not offer enough channels in the fiber. Such a capacity expansion would therefore require other bands (such as the O-band), which come with additional challenges. An expansion to other optical bands would require changes in other optical networking equipment, such as multiplexers and filters, which increases the cost of the upgrade.

An alternative solution could be upgrading instead to coherent 100G technology. An upgrade to 100G could provide the necessary capacity in cable networks while remaining in the C-band and avoiding using other optical bands. This path has also been facilitated by the decreasing costs of coherent transceivers, which are becoming more integrated, sustainable, and affordable. You can read more about this subject in one of our previous articles.

For example, the renowned non-profit R&D center CableLabs announced a project to develop a symmetric 100G Coherent PON (C-PON). According to CableLabs, the scenarios for a C-PON are many: aggregation of 10G PON and DOCSIS 4.0 links, transport for macro-cell sites in some 5G network configurations, fiber-to-the-building (FTTB), long-reach rural scenarios, and high-density urban networks.

CableLabs anticipates that C-PON and its 100G capabilities will play a significant role in the future of access networks, starting with data aggregation on networks that implement a distributed access architecture (DAA) like Remote PHY. You can learn more about these networks here.

The main challenge of C-PON is the higher cost of coherent modulation and detection. Coherent technology requires more complex and expensive optics and digital signal processors (DSPs). Plenty of research is happening on simplifying these coherent designs for access networks. However, a first step towards making these optics more accessible is the 100ZR standard.

100ZR is currently a term for a short-reach (~80 km) coherent 100Gbps transceiver in a QSFP pluggable size. Targeted at the metro edge and enterprise applications that do not require 400ZR solutions, 100ZR provides a lower-cost, lower-power pluggable that also benefits from compatibility with the large installed base of 50 GHz and legacy 100 GHz multiplexer systems.

Another way to reduce the cost of PON technology is through economies of scale: manufacturing pluggable transceivers at high volume to drive down the cost per device. With greater photonic integration, even more devices can be produced on a single wafer. This economy-of-scale principle is the same one behind electronics manufacturing, and it must be applied to photonics.

Researchers at the Technical University of Eindhoven and the JePPIX consortium have modeled how this economy of scale principle would apply to photonics. If production volumes can increase from a few thousand chips per year to a few million, the price per optical chip can decrease from thousands of Euros to tens of Euros. This must be the goal of the optical transceiver industry.

Integrated photonics and volume manufacturing will be vital for developing future passive optical networks. PONs will use more WDM-PON solutions for increased capacity, secure channels, and easier management through self-tuning algorithms.

Meanwhile, PONs are also starting to incorporate coherent technology. Coherent transceivers have traditionally been too expensive for end-user modems. Fortunately, more affordable coherent transceiver designs and standards, manufactured at larger volumes, can change this situation and decrease the cost per device.

Optical fiber and dense wavelength division multiplexing (DWDM) technology are moving towards the edges of networks. In the case of new 5G networks, operators will need more fiber capacity to interconnect the increased density of cell sites, often requiring them to replace legacy time-division multiplexing transmission with higher-capacity DWDM links. In the case of cable and other fixed access networks, new distributed access architectures like Remote PHY free up ports in cable operator headends to serve more bandwidth to more customers.

A report by Deloitte summarizes the reasons to expand the reach and capacity of optical access networks: “Extending fiber deeper into communities is a critical economic driver, promoting competition, increasing connectivity for the rural and underserved, and supporting densification for wireless.”

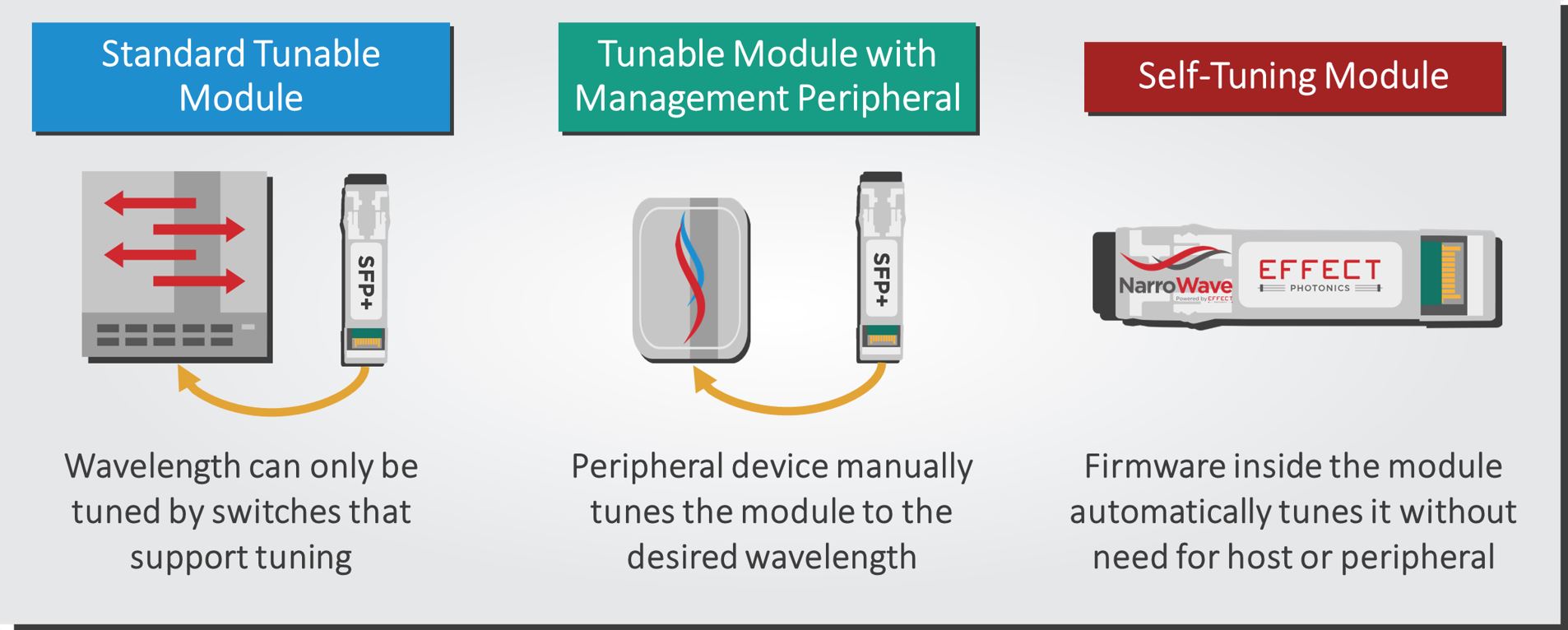

To achieve such a deep fiber deployment, operators look to DWDM solutions to expand their fiber capacity without the expensive laying of new fiber. DWDM technology has become more affordable than ever due to the availability of low-cost filters and SFP transceiver modules with greater photonic integration and manufacturing volumes. Furthermore, self-tuning technology has made the installation and maintenance of transceivers easier and more affordable.

Despite the advantages of DWDM solutions, their price still causes operators to second-guess whether the upgrade is worth it. For example, mobile fronthaul applications don’t require all 40, 80, or 100 channels of many existing tunable modules. Fortunately, operators can now choose between narrowband and fullband tunable solutions, which offer different numbers of wavelength channels to fit different budgets and network requirements.

Let’s look at what happens when a fixed access network needs to migrate to a distributed access architecture like Remote PHY.

A provider has a legacy access network with eight optical nodes, and each node serves 500 customers. To give these customers more bandwidth, the provider wants to split each node into ten new nodes of fifty customers each. Thus, the provider goes from eight to eighty nodes. Each node requires the provider to assign a new DWDM channel, occupying more and more of the optical C-band. This is an example of a network upgrade that requires a fullband tunable module with coverage across the entire C-band to provide many DWDM channels with narrow (50 GHz) grid spacing.
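The arithmetic of this example can be checked by counting how many channel slots of a given spacing fit between the C-band edges, taken here as 1530-1565 nm. This is an idealised slot count under that assumption; commercial channel plans differ slightly because they use slightly different band edges.

```python
import math

C_NM_THZ = 299_792.458  # speed of light in nm*THz, so frequency_THz = C_NM_THZ / wavelength_nm


def channels_in_band(lambda_min_nm: float, lambda_max_nm: float, spacing_ghz: float) -> int:
    """Number of whole channel slots of a given spacing that fit in a wavelength band."""
    span_ghz = (C_NM_THZ / lambda_min_nm - C_NM_THZ / lambda_max_nm) * 1000
    return math.floor(span_ghz / spacing_ghz)


# Node split from the example: 8 nodes x 500 customers -> nodes of 50 customers.
legacy_nodes, customers_per_node, customers_per_new_node = 8, 500, 50
new_nodes = legacy_nodes * customers_per_node // customers_per_new_node
print(f"{new_nodes} nodes -> {new_nodes} DWDM channels needed")

for spacing in (100, 50):
    available = channels_in_band(1530, 1565, spacing)  # C-band taken as 1530-1565 nm
    print(f"{spacing} GHz grid: {available} channel slots, enough: {available >= new_nodes}")
```

Eighty channels do not fit on a 100 GHz grid but do fit on a 50 GHz grid, which is why the example calls for narrow grid spacing.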

Furthermore, using a fullband tunable module means that a single part number can handle all the necessary wavelengths for the network. In the past, network operators used fixed wavelength DWDM modules that must go into specific ports. For example, an SFP+ module with a C16 wavelength could only work with the C16 wavelength port of a DWDM multiplexer. However, tunable SFP+ modules can connect to any port of a DWDM multiplexer. This advantage means technicians no longer have to navigate a confusing sea of fixed modules with specific wavelengths; a single tunable module and part number will do the job.
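Labels like "C16" come from a widespread vendor convention for 100 GHz-spaced channels, in which channel Cnn sits at 190.0 THz + nn × 0.1 THz (so C34 is at 193.4 THz, about 1550.12 nm). This convention is an assumption here, not part of the ITU-T grid definition itself, so a specific vendor's datasheet should be checked. The sketch converts channel labels to frequency and wavelength, showing why a fixed module only matches one multiplexer port.

```python
C_NM_THZ = 299_792.458  # speed of light in nm*THz


def channel_frequency_thz(channel: int) -> float:
    """Frequency of DWDM channel 'C<channel>' under the assumed vendor convention
    190.0 THz + channel x 0.1 THz (100 GHz spacing)."""
    return round(190.0 + 0.1 * channel, 4)


def channel_wavelength_nm(channel: int) -> float:
    """Vacuum wavelength of the channel, from lambda = c / f."""
    return C_NM_THZ / channel_frequency_thz(channel)


for ch in (16, 21, 34):
    print(f"C{ch}: {channel_frequency_thz(ch):.2f} THz, {channel_wavelength_nm(ch):.2f} nm")
```

A fixed C16 module emits only at about 1564.68 nm, whereas a tunable module can be set to any channel in its tuning range.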

Overall, fullband tunable modules will fit applications that need a large number of wavelength channels to maximize the capacity of fiber infrastructure. Metro transport or data center interconnects (DCIs) are good examples of applications with such requirements.

The transition to 5G and beyond will require a significant restructuring of mobile network architecture. 5G networks will use higher frequency bands, which require more cell sites and antennas to cover the same geographical areas as 4G. Existing antennas must be upgraded to denser antenna arrays. These requirements will put more pressure on the existing fiber infrastructure, and mobile network operators are expected to deliver their 5G promises with relatively little expansion in their fiber infrastructure.

DWDM solutions will be vital for mobile network operators to scale capacity without laying new fiber. However, operators often regard traditional fullband tunable modules as expensive for this application. Mobile fronthaul links don’t need anything close to the 40 or 80 DWDM channels of a fullband transceiver. It’s like having a cable subscription where you only watch 10 out of the 80 TV channels.

This issue led EFFECT Photonics to develop narrowband tunable modules with just nine channels. They offer a more affordable and moderate capacity expansion that better fits the needs of mobile fronthaul networks. These networks often feature nodes that aggregate two or three different cell sites, each with three antenna arrays (each array provides 120° coverage at the tower), and each array uses its own wavelength channel. Therefore, these aggregation points often need six or nine different wavelength channels, but not the entire 80-100 channels of a typical fullband module.
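The channel count follows directly from the site geometry: 360° divided by 120° per array gives three arrays per site, and each array needs its own wavelength. The sketch below assumes exactly one channel per array, as described above; the function name is illustrative.

```python
NARROWBAND_CHANNELS = 9  # channel count of the narrowband module in the text
SECTOR_COVERAGE_DEG = 120  # coverage per antenna array
ARRAYS_PER_SITE = 360 // SECTOR_COVERAGE_DEG  # three sectors cover a full circle


def channels_needed(cell_sites: int) -> int:
    """One wavelength per antenna array, for every site aggregated at the node."""
    return cell_sites * ARRAYS_PER_SITE


for sites in (2, 3, 4):
    need = channels_needed(sites)
    print(f"{sites} sites: {need} channels, fits a 9-channel module: {need <= NARROWBAND_CHANNELS}")
```

Two or three aggregated sites fit within nine channels; a fourth site would exceed the narrowband module.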

With the narrowband tunable option, operators can reduce their part number inventory compared to grey transceivers while avoiding the cost of a fullband transceiver.

The number of channels in a tunable module (up to 100 in the case of EFFECT Photonics fullband modules) can quickly become overwhelming for technicians in the field. There will be more records to examine, more programming for tuning equipment, more trucks to load with tuning equipment, and more verifications to do in the field. These tasks can take a couple of hours just for a single node. If there are hundreds of nodes to install or repair, the required hours of labor will quickly rack up into the thousands and the associated costs into hundreds of thousands.
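The labor estimate above scales linearly with the number of nodes. The sketch below uses hypothetical inputs (800 nodes, two hours per node, EUR 100 per technician hour) chosen to match "a couple of hours" and "hundreds of nodes"; they are not figures from a real deployment.

```python
def field_labor(nodes: int, hours_per_node: float, cost_per_hour: float) -> tuple[float, float]:
    """Total technician hours and cost for manual wavelength provisioning."""
    hours = nodes * hours_per_node
    return hours, hours * cost_per_hour


# Hypothetical rollout: "a couple of hours" per node, several hundred nodes.
hours, cost = field_labor(nodes=800, hours_per_node=2.0, cost_per_hour=100.0)
print(f"{hours:,.0f} technician hours, about EUR {cost:,.0f}")
```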

Self-tuning allows technicians to treat DWDM tunable modules the same way they treat grey transceivers. There is no need for additional training for technicians to install the tunable module. There is no need to program tuning equipment or obsessively check the wavelength records and tables to avoid deployment errors on the field. Technicians only need to follow the typical cleaning and handling procedures, plug the transceiver, and the device will automatically scan and find the correct wavelength once plugged. This feature can save providers thousands of person-hours in their network installation and maintenance and reduce the probability of human errors, effectively reducing capital and operational expenditures.
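The article does not describe how EFFECT Photonics' self-tuning works internally, so the sketch below is only a hypothetical simulation of the general idea: the module sweeps candidate channels and locks to the first one that the far end acknowledges. The `link_detected` callback, the channel indices, and the port channel are all illustrative stand-ins.

```python
from typing import Callable, Iterable, Optional


def self_tune(channels: Iterable[int], link_detected: Callable[[int], bool]) -> Optional[int]:
    """Sweep candidate channels and lock to the first one the far end acknowledges.

    link_detected is a hypothetical stand-in for whatever feedback a real
    module uses to confirm that its wavelength reaches the head end.
    """
    for ch in channels:
        if link_detected(ch):
            return ch
    return None


# Simulated installation: the multiplexer port this module was plugged into
# passes only channel index 27 (hypothetical), out of 100 candidate indices.
PORT_CHANNEL = 27
locked = self_tune(range(1, 101), lambda ch: ch == PORT_CHANNEL)
print(f"locked to channel {locked}" if locked is not None else "no channel found")
```

Because the passive multiplexer port itself determines which wavelength gets through, the technician only has to plug the module into the right port.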

Self-tuning algorithms make installing and maintaining narrowband and fullband tunable modules more straightforward and affordable for network deployment.

Fullband self-tuning modules will allow providers to deploy extensive fiber capacity upgrades more quickly than ever. However, in use cases such as mobile access networks where operators don’t need a wide array of DWDM channels, they can opt for narrowband solutions that are more affordable than their fullband alternatives. By combining fullband and narrowband solutions with self-tuning algorithms, operators can expand their networks in the most affordable and accessible ways for their budget and network requirements.

© 2025 EFFECT PHOTONICS. All rights reserved.