One Watt Matters

Data centers and 5G networks might be hot commodities, but the infrastructure that enables them runs even hotter. Electronic equipment generates plenty of heat; the more heat energy an electronic device dissipates, the more money and energy must be spent to cool it down.

The Uptime Institute estimates that the average power usage effectiveness (PUE) ratio for data centers in 2022 was 1.55. This implies that for every 1 kWh used to power data center equipment, an extra 0.55 kWh (about 35% of total power consumption) is needed to power auxiliary equipment like lighting and, more importantly, cooling. While the advent of centralized hyperscale data centers will improve energy efficiency in the coming decade, that trend is offset by the construction of many smaller local data centers on the network edge to address the exponential growth of 5G services such as the Internet of Things (IoT).

These opposing trends are one reason why the Uptime Institute has observed only a marginal improvement of roughly 10% in the average data center PUE since 2014, when it was 1.7. Such a slow improvement in average data center power efficiency cannot compensate for the fast growth of new edge data centers.
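The PUE arithmetic above can be checked with a short sketch. It assumes the standard definition of PUE as total facility energy divided by IT equipment energy; the function names are illustrative and not taken from the Uptime Institute.

```python
def overhead_share(pue: float) -> float:
    """Fraction of total facility energy spent on non-IT overhead (cooling, lighting)."""
    return (pue - 1.0) / pue


def relative_improvement(old_pue: float, new_pue: float) -> float:
    """Relative reduction in PUE between two measurements."""
    return (old_pue - new_pue) / old_pue


pue_2014 = 1.7   # average PUE in 2014, as quoted in the article
pue_2022 = 1.55  # average PUE in 2022, as quoted in the article

print(f"Overhead per 1 kWh of IT load: {pue_2022 - 1.0:.2f} kWh")
print(f"Overhead share of total energy: {overhead_share(pue_2022):.1%}")
print(f"PUE improvement since 2014: {relative_improvement(pue_2014, pue_2022):.1%}")
```

With these inputs, the overhead share comes out at roughly 35% and the improvement at just under 9%, consistent with the rounded figures quoted above.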

For all the bad reputation data centers receive for their energy consumption, though, wireless transmission generates even more heat than wired links. While 5G standards are more energy-efficient per bit than 4G, Huawei expects that the maximum power consumption of one of its 5G base stations will be 68% higher than that of its 4G stations. To make things worse, the use of higher frequency spectrum bands and new IoT use cases requires the deployment of more base stations too.

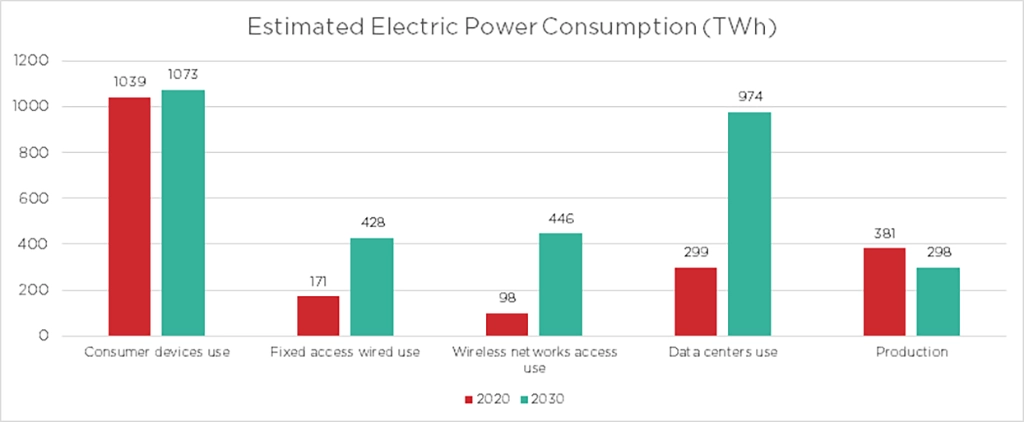

Prof. Earl McCune from TU Delft estimates that nine out of ten watts of electrical power in 5G systems turn into heat. The same Huawei study also predicts that the energy consumption of wireless access networks will increase even more quickly than that of data centers in the next ten years, more than quadrupling between 2020 and 2030.

These power efficiency issues affect not just the environment but also the bottom lines of communications companies. In such a scenario, saving even one watt of power per pluggable transceiver could quickly multiply and scale up into a massive improvement in the sustainability and profitability of telecom and datacom providers.

How One Watt of Savings Scales Up

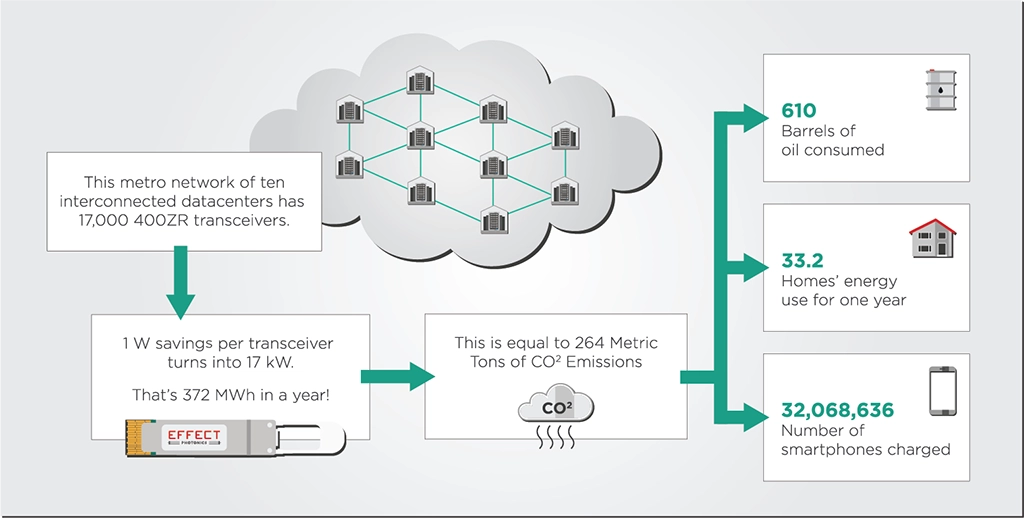

Let’s discuss an example to show how a seemingly small improvement of one watt in pluggable transceiver power consumption can quickly scale up into major energy savings.

A 2020 paper from Microsoft Research estimates that for a metropolitan region of 10 data centers with 16 fiber pairs each and 100-GHz DWDM per fiber, the regional interconnect network needs to host 12,800 transceivers. Because the 400ZR transceiver ecosystem supports a denser 75 GHz DWDM grid, this number could increase by a third in the coming years, to roughly 17,000. Saving one watt of power in each transceiver would therefore lead to a total of 17 kW in savings.
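As a rough check of the scaling step, the sketch below assumes that the number of DWDM channels (and therefore transceivers) grows inversely with the grid spacing over the same usable spectrum. That assumption, and the function name, are illustrative and not taken from the Microsoft Research paper.

```python
def scale_for_grid(transceivers: int, old_grid_ghz: float, new_grid_ghz: float) -> int:
    """Scale a transceiver count when moving to a different DWDM grid spacing.

    Assumes channel count is inversely proportional to grid spacing.
    """
    return round(transceivers * old_grid_ghz / new_grid_ghz)


baseline_transceivers = 12_800  # figure quoted in the article for a 100 GHz grid
dense_transceivers = scale_for_grid(baseline_transceivers, 100.0, 75.0)

watts_saved_per_transceiver = 1.0
total_savings_kw = dense_transceivers * watts_saved_per_transceiver / 1000.0

print(f"Transceivers on a 75 GHz grid: {dense_transceivers}")
print(f"Total savings at 1 W each: {total_savings_kw:.1f} kW")
```

Under this assumption the count lands slightly above 17,000, which the article rounds down.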

The power savings don’t end there, however. The transceiver is powered by the server, which is then powered by its power supply and, ultimately, the national electricity grid. On average, 2.5 watts must be supplied from the national grid for every watt of power the transceiver uses. When applying that factor of 2.5, the 17 kW in savings we discussed earlier become, in reality, 42.5 kW. Sustained over a year, this rate adds up to a total of 372 MWh in energy savings. According to the US Environmental Protection Agency (EPA), energy savings of this size in a single metro data center network are equivalent to avoiding 264 metric tons of carbon dioxide emissions. These emissions are equivalent to those from consuming 610 barrels of oil, and the energy saved could power up to 33 American homes for a year.
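The chain of multiplications above can be written out as a minimal sketch. The inputs are the article's own figures; the function names are illustrative, and the emission factor printed at the end is only the value implied by the article's numbers, not an official EPA constant.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def grid_power_kw(transceivers: int, watts_saved_each: float, grid_factor: float = 2.5) -> float:
    """Power saved at the grid, given per-transceiver savings and a grid-to-device factor."""
    return transceivers * watts_saved_each * grid_factor / 1000.0


def annual_energy_mwh(power_kw: float) -> float:
    """Energy saved over one year of continuous operation."""
    return power_kw * HOURS_PER_YEAR / 1000.0


power_kw = grid_power_kw(17_000, 1.0)    # 17,000 transceivers, 1 W saved each
energy_mwh = annual_energy_mwh(power_kw)

# Emission factor implied by the article's 264 t CO2 figure (an inference, not an EPA value).
implied_t_co2_per_mwh = 264.0 / energy_mwh

print(f"Grid-level savings: {power_kw:.1f} kW")
print(f"Annual energy savings: {energy_mwh:.1f} MWh")
print(f"Implied emission factor: {implied_t_co2_per_mwh:.2f} t CO2 per MWh")
```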

Saving Power through Integration and Co-Design

Before 2020, Apple built its computer processors from discrete components. In other words, electronic components were manufactured on separate chips, and then these chips were assembled into a single package. However, the interconnections between the chips produced losses and incompatibilities that made the devices less energy efficient. Starting in 2020 with the M1 processor, Apple fully integrated all components on a single chip, avoiding these losses and incompatibilities. As shown in the table below, this electronic system-on-chip (SoC) consumes about a third of the power of the discrete-component processors used in the previous generation of computers.

| Mac Mini Model | Idle Power (W) | Max Power (W) |
|---|---|---|
| 2023, M2 | 7 | 50 |
| 2020, M1 | 7 | 39 |
| 2018, Core i7 | 20 | 122 |
| 2014, Core i5 | 6 | 85 |
| 2010, Core 2 Duo | 10 | 85 |
| 2006, Core Solo or Duo | 23 | 110 |
| 2005, PowerPC G4 | 32 | 85 |

Table 1: Power consumption of Mac Minis with M1 and M2 SoCs compared to previous generations of Mac Minis. [Source: Apple’s website]
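The "a third" claim can be checked against Table 1 with a small sketch that compares the 2020 M1 model to the 2018 Core i7 model it replaced. The values are copied from the table and assumed to be in watts; the dictionary layout is only for illustration.

```python
# (idle, max) power in watts, copied from Table 1
mac_mini_power_w = {
    "2020, M1": (7, 39),
    "2018, Core i7": (20, 122),
}

m1_idle, m1_max = mac_mini_power_w["2020, M1"]
i7_idle, i7_max = mac_mini_power_w["2018, Core i7"]

idle_ratio = m1_idle / i7_idle
max_ratio = m1_max / i7_max

print(f"M1 idle power relative to Core i7: {idle_ratio:.0%}")
print(f"M1 max power relative to Core i7: {max_ratio:.0%}")
```

Both ratios land close to one third, which is the comparison the text refers to.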

The photonics industry would benefit from a similar goal: implementing a photonic system-on-chip. Integrating all the optical components (lasers, detectors, modulators, etc.) on a single chip can minimize the losses and make devices such as optical transceivers more efficient. This approach optimizes not just the efficiency of the devices themselves but also that of the resource-hungry chip manufacturing process. For example, a system-on-chip approach enables earlier optical testing on the semiconductor wafer and dies. By testing the dies and wafers directly before packaging, manufacturers need only discard the bad dies rather than the whole package, which saves valuable energy and materials. You can read our previous article on the subject to learn more about the energy efficiency benefits of system-on-chip integration.

Another way of improving power consumption in photonic devices is co-designing their optical and electronic systems. A co-design approach helps identify in greater detail the trade-offs between various parameters in the optics and electronics, optimizing their fit with each other and ultimately improving the overall power efficiency of the device. In the case of coherent optical transceivers, an electronic digital signal processor specifically optimized to drive an indium-phosphide optical engine directly could lead to power savings.

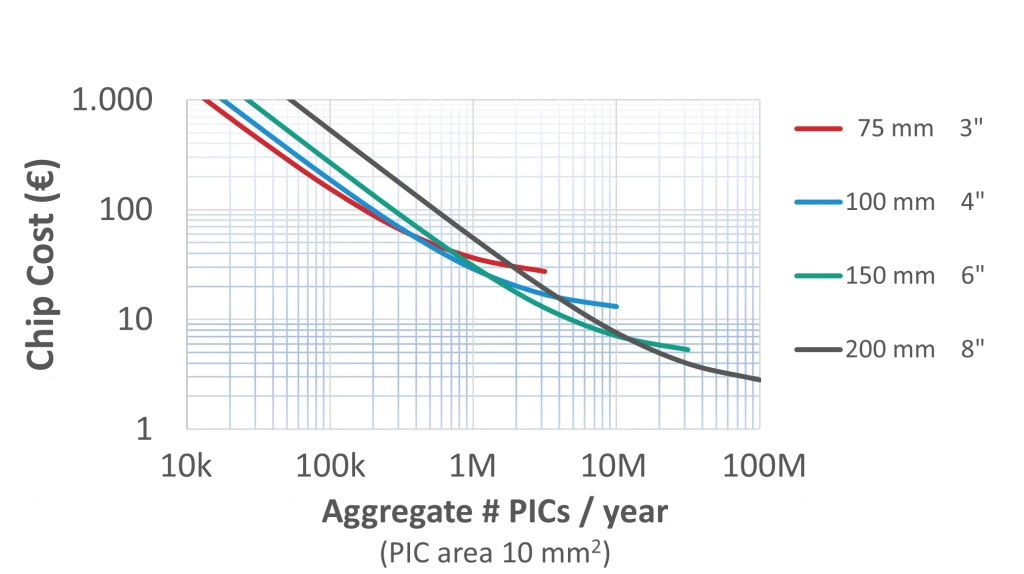

When Sustainability is Profitable

System-on-chip (SoC) approaches might reduce not only the footprint and energy consumption of photonic devices but also their cost. The economies of scale that rule the electronic semiconductor industry can also reduce the cost of photonic systems-on-chip. After all, SoCs minimize the footprint of photonic devices, allowing photonics developers to fit more of them within a single wafer, which decreases the price of each photonic system. As the graphic below shows, the more chips and wafers are produced, the lower the cost per chip becomes.
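The two effects described above, more dies per wafer and fixed costs spread over more wafers, can be illustrated with a toy cost model. Everything in this sketch is hypothetical: the wafer size, die areas, and cost figures are placeholders chosen for illustration, not industry data, and the die count ignores edge loss and yield.

```python
import math


def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Rough die count: wafer area divided by die area (ignores edge loss and yield)."""
    wafer_area_mm2 = math.pi * (wafer_diameter_mm / 2.0) ** 2
    return int(wafer_area_mm2 // die_area_mm2)


def cost_per_chip(fixed_cost: float, cost_per_wafer: float, wafers: int, dies: int) -> float:
    """Fixed costs amortized over all chips, plus the per-wafer cost shared by its dies."""
    return fixed_cost / (wafers * dies) + cost_per_wafer / dies


# Hypothetical inputs, for illustration only.
WAFER_DIAMETER_MM = 100.0
FIXED_COST = 1_000_000.0  # e.g. design and mask costs
COST_PER_WAFER = 5_000.0

large_die = dies_per_wafer(WAFER_DIAMETER_MM, 25.0)
small_die = dies_per_wafer(WAFER_DIAMETER_MM, 12.5)

for wafers in (10, 100, 1_000):
    print(
        f"{wafers:>5} wafers: "
        f"{cost_per_chip(FIXED_COST, COST_PER_WAFER, wafers, large_die):8.2f} per large die, "
        f"{cost_per_chip(FIXED_COST, COST_PER_WAFER, wafers, small_die):8.2f} per small die"
    )
```

In this model the cost per chip falls both as the wafer volume rises and as the die shrinks, which is the qualitative trend the paragraph describes.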

Integrating all optical components—including the laser—on a single chip shifts the complexity from the expensive assembly and packaging process to the more affordable and scalable semiconductor wafer process. For example, it’s much easier to combine optical components on a wafer at a high volume than to align components from different chips together in the assembly process. This shift to wafer processes also helps drive down the cost of the device.

Takeaways

With data and energy demands rising yearly, telecom and datacom providers are constantly looking for ways to reduce their power and cost per transmitted bit. As we showed earlier in this article, even one watt of power saved in an optical transceiver can snowball into major savings that benefit both providers and the environment. These improvements in the power consumption of optical transceivers can be achieved by deepening the integration of optical components and co-designing them with electronics. Highly compact and integrated optical systems can also be manufactured at greater scale and efficiency, reducing their financial and environmental costs. These details help paint a bigger picture for providers: sustainability now goes hand-in-hand with profitability.