Photonics for the Energy Transition

The world relies heavily on traditional fossil fuels like coal, oil, and natural gas, but their environmental impact has prompted a critical reevaluation. The energy transition is a strategic pivot towards cleaner and more sustainable energy sources to reduce carbon emissions.

The energy transition has gained momentum over the last decade, with many countries setting ambitious targets for carbon neutrality and renewable energy adoption. Governments, industries, and communities worldwide are investing heavily in renewable infrastructure and implementing policies to reduce emissions.

In the information and communication technology (ICT) sector, the exponential increase in data traffic makes it difficult to keep emissions down and contribute to the energy transition. Data centers and 5G networks might be hot commodities, but the infrastructure that enables them runs even hotter. Electronic equipment generates plenty of heat; the more heat energy an electronic device dissipates, the more money and energy must be spent to cool it down.

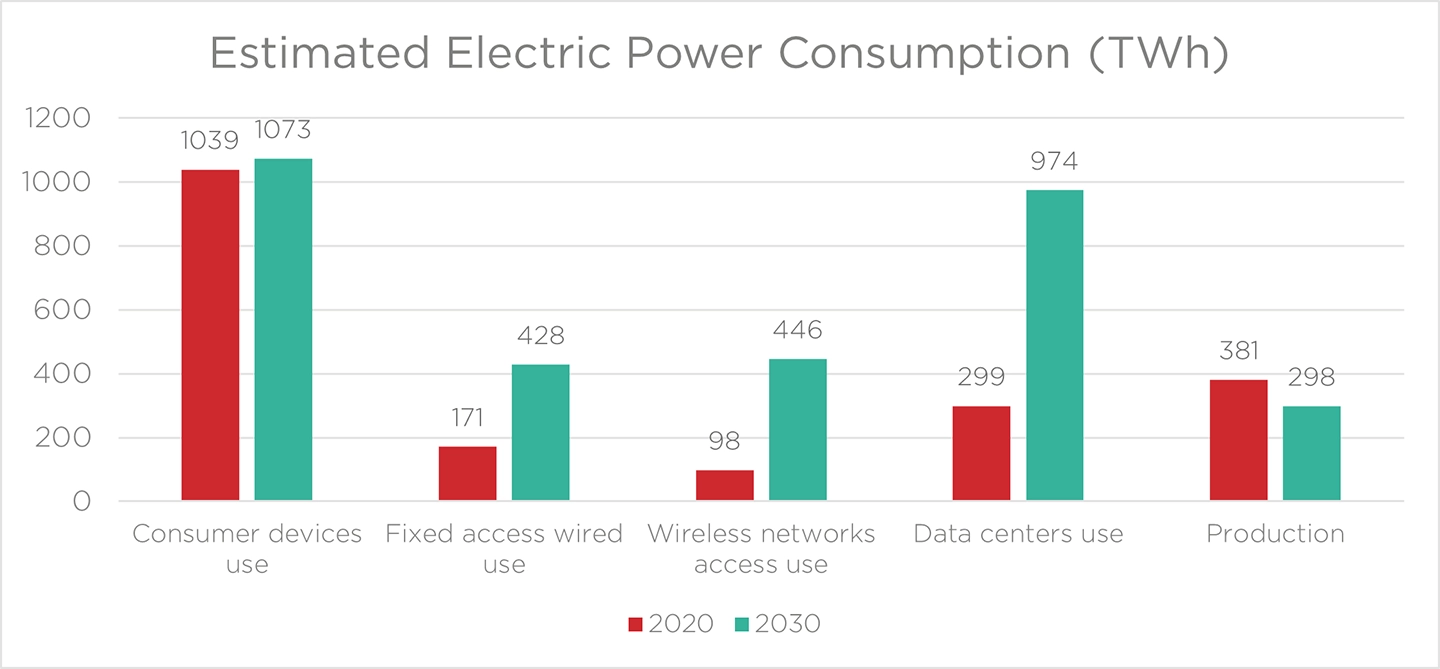

A 2020 study by Huawei estimates that the power consumption of the data center sector will increase threefold in the next ten years. Meanwhile, wireless access networks are expected to increase their power consumption even faster, more than quadrupling between 2020 and 2030.

These issues affect the environment as well as the bottom lines of communications companies, which must commit increasingly larger percentages of their operating expenditure to cooling solutions.

Decreasing energy consumption and costs in the ICT sector requires more efficient equipment, and photonics technology will be vital in reaching that goal. Photonics can transmit information more efficiently than electronics and ensure that the exponential increase in data traffic does not become an exponential increase in power consumption.

Photonics’ Power Advantages

Photonics and light have a few properties that improve the energy efficiency of data transmission compared to electronics and electric signals.

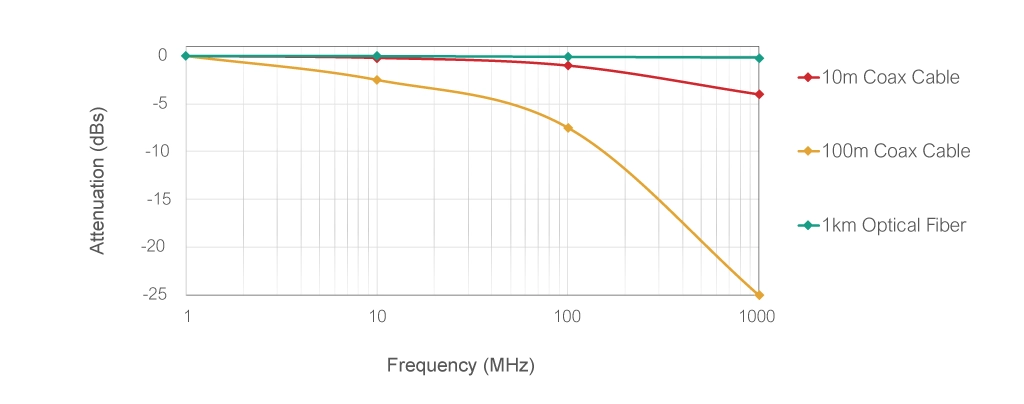

When electricity moves through a wire or coaxial cable, it encounters resistance, which leads to energy loss in the form of heat. Conversely, light experiences much less attenuation when traveling through optical fiber, resulting in significantly lower energy loss during data transmission. As shown in the figure above, the energy loss in electrical links gets exponentially worse with faster (i.e., higher-frequency) signals that can carry more data. Photonics scales much better with increasing frequency and data rate.

These losses and the heat they generate in electronic data transmission lead to higher power consumption, greater use of cooling systems, and shorter transmission distances compared to photonic transmission.

The low-loss properties of optical fibers enable light to be transmitted over vastly longer distances than electrical signals. Due to their longer reach, optical signals also save more power than electrical signals by reducing the number of times the signal needs regeneration.
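The link between attenuation, reach, and regeneration can be sketched with a few lines of arithmetic. The snippet below is a minimal illustration, not a link-design tool: it assumes a fixed loss budget in decibels, ignores noise, dispersion, and amplification, and uses placeholder attenuation values (the function names and all numbers are assumptions made for this example).

```python
import math


def max_reach_km(power_budget_db: float, attenuation_db_per_km: float) -> float:
    """Longest span the signal can cover before loss uses up the budget."""
    return power_budget_db / attenuation_db_per_km


def regenerations_needed(link_km: float, reach_km: float) -> int:
    """Number of intermediate regeneration points on a link of the given length."""
    return max(0, math.ceil(link_km / reach_km) - 1)


# Illustrative assumptions, not measured values.
POWER_BUDGET_DB = 20.0       # loss the receiver can tolerate
FIBER_DB_PER_KM = 0.25       # rough order of magnitude for single-mode fiber
ELECTRICAL_DB_PER_KM = 40.0  # hypothetical figure for a cable at high frequency

fiber_reach = max_reach_km(POWER_BUDGET_DB, FIBER_DB_PER_KM)            # 80 km
electrical_reach = max_reach_km(POWER_BUDGET_DB, ELECTRICAL_DB_PER_KM)  # 0.5 km

LINK_KM = 200
print("fiber regenerations:", regenerations_needed(LINK_KM, fiber_reach))
print("electrical regenerations:", regenerations_needed(LINK_KM, electrical_reach))
```

Under these assumed numbers, the lower-loss medium needs far fewer regeneration points over the same distance, and every regeneration point avoided is equipment that does not have to be powered or cooled.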

With all these advantages, photonic transmission consumes less power per bit than electronic transmission, which often translates to a lower cost per bit.
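Power per bit is simply the power a link draws divided by the data rate it carries. The sketch below shows the unit conversion to picojoules per bit; the two links and their figures are hypothetical placeholders chosen for the arithmetic, not measurements of real products.

```python
def energy_per_bit_pj(power_watts: float, bit_rate_gbps: float) -> float:
    """Energy spent per transmitted bit, in picojoules.

    Watts divided by gigabits per second gives nanojoules per bit,
    so multiplying by 1000 converts the result to picojoules per bit.
    """
    return power_watts / bit_rate_gbps * 1000


# Hypothetical links used only to illustrate the calculation.
link_a = energy_per_bit_pj(power_watts=16, bit_rate_gbps=400)
link_b = energy_per_bit_pj(power_watts=12, bit_rate_gbps=100)

print(f"link A: {link_a:.1f} pJ/bit")
print(f"link B: {link_b:.1f} pJ/bit")
```

Note that the link drawing more total power can still be the more efficient one per bit if it carries proportionally more data, which is why power per bit is the more useful metric for comparing transmission technologies.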

Photonics’ Capacity Advantages

Aside from power efficiency, another factor that decreases the power and cost per bit of photonic transmission is its data capacity and bandwidth.

Light waves have much higher frequencies than electrical signals. This means they oscillate more rapidly, allowing for a higher information-carrying capacity. In other words, light waves can encode more information than electrical signals.

Optical fibers have a much wider bandwidth than electrical wires or coaxial cables. This means they can carry a broader range of signals, allowing for higher data rates and more parallel data streams. Thanks to technologies such as dense wavelength division multiplexing (DWDM), multiple data channels can be sent and received simultaneously, significantly increasing the transmission capacity of an optical fiber.
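As a rough sketch of how DWDM multiplies capacity, the aggregate rate of a fiber is the number of wavelength channels times the rate of each channel. The band width, channel spacing, and per-channel rate below are assumed round numbers for illustration, and the function names are invented for this example.

```python
def dwdm_channel_count(band_ghz: float, spacing_ghz: float) -> int:
    """How many channels of a given spacing fit in the usable optical band."""
    return int(band_ghz // spacing_ghz)


def fiber_capacity_tbps(channels: int, rate_per_channel_gbps: float) -> float:
    """Aggregate capacity of one fiber in terabits per second."""
    return channels * rate_per_channel_gbps / 1000


# Assumed values: a 4000 GHz usable band, 50 GHz spacing, 100 Gb/s per channel.
channels = dwdm_channel_count(band_ghz=4000, spacing_ghz=50)
capacity = fiber_capacity_tbps(channels, rate_per_channel_gbps=100)

print(channels, "channels,", capacity, "Tb/s on a single fiber")
```

Raising either the channel count or the per-channel rate raises the capacity of the same physical fiber, which is what spreads the fixed cost and power of the link over more bits.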

Overall, the properties of light make it a superior medium for transmitting large volumes of data over long distances compared to electricity.

Transfer Data, Not Power

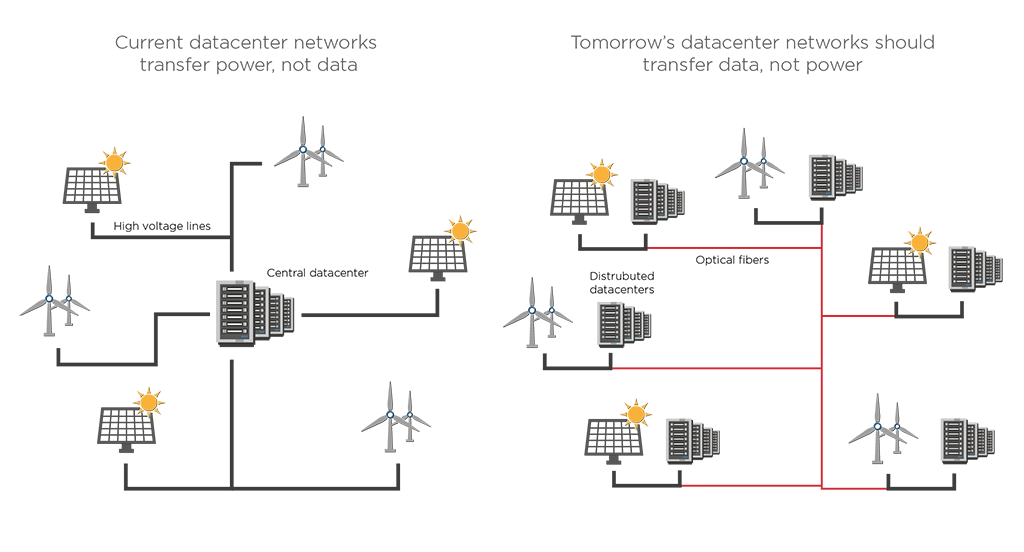

Photonics can also play a key role in rethinking the architecture of data centers. To cope with the strain that data center clusters place on power grids, photonics enables a more decentralized system in which branches in different geographical areas are connected through high-speed optical fiber links.

For example, data centers can relocate to areas with available spare power, preferably from nearby renewable energy sources. Efficiency can increase further by sending data to branches with spare capacity. The Dutch government has already proposed this kind of decentralization as part of its spatial strategy for data centers.
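The idea of sending data to branches with spare capacity can be sketched as a simple placement rule: pick the site with the most unused power that can still absorb the new workload. This is a toy model under stated assumptions; the `Branch` class, the site names, and the kilowatt figures are all hypothetical, and a real scheduler would also weigh latency, network cost, and the energy mix at each site.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Branch:
    name: str
    capacity_kw: float  # power available to the site
    load_kw: float      # power currently in use

    @property
    def spare_kw(self) -> float:
        return self.capacity_kw - self.load_kw


def place_workload(branches: List[Branch], demand_kw: float) -> Optional[Branch]:
    """Assign a workload to the branch with the most spare power, if any fits."""
    candidates = [b for b in branches if b.spare_kw >= demand_kw]
    if not candidates:
        return None  # no site can take the workload without exceeding its supply
    best = max(candidates, key=lambda b: b.spare_kw)
    best.load_kw += demand_kw
    return best


# Hypothetical sites connected by optical links.
sites = [
    Branch("metro-hub", capacity_kw=1000, load_kw=950),
    Branch("wind-farm-site", capacity_kw=800, load_kw=300),
    Branch("regional-site", capacity_kw=600, load_kw=400),
]

chosen = place_workload(sites, demand_kw=200)
print(chosen.name if chosen else "no branch has enough spare power")
```

The rule only makes sense if moving the data is cheap compared to moving the power, which is the role the high-speed optical links play in this architecture.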

Takeaways

Despite all these advantages of photonics, electronics retains one significant advantage: accessibility.

Electronic components can be easily manufactured at scale, ordered online from a catalog, soldered into a board, and integrated into a product. For photonics to enable an energy transition in the ICT sector, it must be as accessible and easy to use as electronics.

Reaching that level of accessibility will take more investment to scale up production and adapt existing electronics manufacturing processes to photonics. This scaling will drive down production costs, making integrated photonics more widely available and paving the way for its integration into numerous technologies across the globe.