
AI & Cloud

Generative Artificial Intelligence

AI models will impact many industries, and data centers are no exception. AI models are computationally intensive, and their growing complexity will require faster and more efficient interconnections than ever between GPUs, nodes, server racks, and data center campuses. These interconnects will largely determine whether data center architectures can scale and handle the demands of AI models sustainably.

The Increasing Demand For Compute Power And Energy

In this era of AI and deep learning, the demand for compute power to train models far outstrips the growth in available compute power. Meanwhile, edge computing and networking continue to spread and enhance AI applications by processing data closer to its source, which reduces latency and bandwidth usage. However, cloud providers cannot distribute their processing capabilities toward the edge without considerable investment in real estate, infrastructure deployment, and management. In this context, AI and edge computing pose a few problems for telecom providers, too.

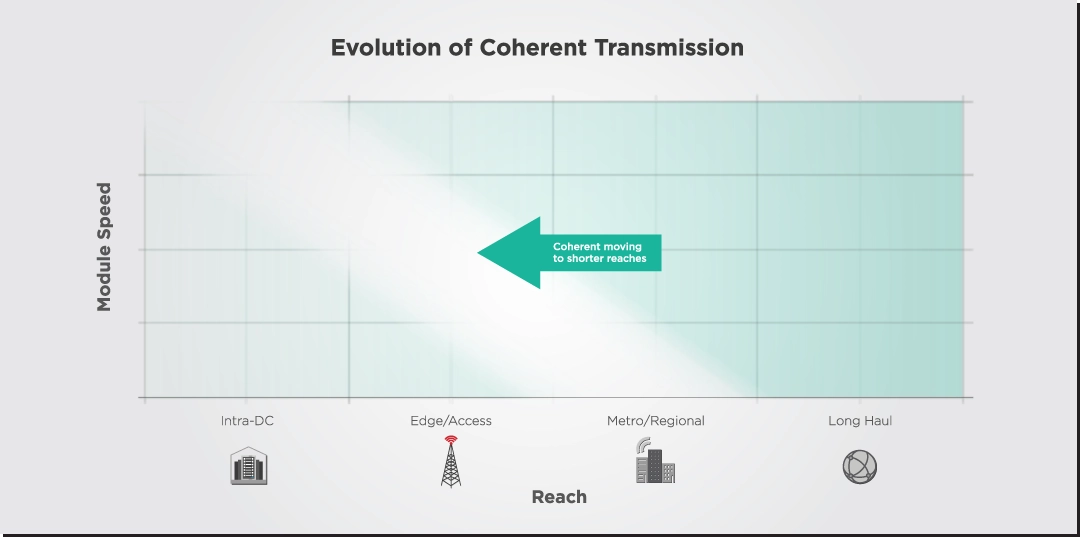

Sustainable And Affordable Coherent Interconnections

While a single coherent optical transceiver might be more expensive than a direct-detect (IM-DD) one, it transmits much more data over longer distances, doing the work of several IM-DD transceivers running in parallel. In other words, coherent technology can provide a lower cost and power per bit in certain application cases.
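To make the cost-per-bit and power-per-bit argument concrete, here is a minimal Python sketch that compares the modules needed to reach one aggregate capacity. All transceiver figures (line rates, watts, prices) are hypothetical placeholders, not vendor data, and the `Transceiver` type and helper functions are illustrative names only. With different inputs the comparison can tip the other way, which is why the advantage holds only in certain application cases.

```python
import math
from dataclasses import dataclass


@dataclass
class Transceiver:
    name: str
    rate_gbps: float  # line rate per module, Gb/s
    power_w: float    # power draw per module, W
    cost_usd: float   # price per module, USD


def power_per_bit_pj(t: Transceiver) -> float:
    # W / (Gb/s) = nJ/bit, so multiply by 1000 to get pJ/bit
    return t.power_w * 1000 / t.rate_gbps


def cost_per_gbps(t: Transceiver) -> float:
    return t.cost_usd / t.rate_gbps


def aggregate(t: Transceiver, target_gbps: float) -> tuple[int, float, float]:
    """Modules, total power (W) and total cost (USD) needed to carry target_gbps."""
    n = math.ceil(target_gbps / t.rate_gbps)
    return n, n * t.power_w, n * t.cost_usd


# Hypothetical, illustrative modules only (not real product specifications).
imdd = Transceiver("IM-DD 100G", rate_gbps=100, power_w=4.0, cost_usd=300)
coherent = Transceiver("Coherent 400G", rate_gbps=400, power_w=15.0, cost_usd=1000)

for t in (imdd, coherent):
    n, watts, dollars = aggregate(t, target_gbps=400)
    print(f"{t.name}: {n} module(s), {watts:.0f} W, ${dollars:.0f}, "
          f"{power_per_bit_pj(t):.1f} pJ/bit, ${cost_per_gbps(t):.2f} per Gb/s")
```

With these placeholder numbers, one coherent module edges out four IM-DD modules on both total power and total cost. The sketch deliberately ignores reach, which is the other half of the coherent argument: over longer links, IM-DD may not be an option at all.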

The Growing Pains Of Scaling

- AI consumes a lot of energy: the International Energy Agency (IEA) estimates that a single ChatGPT query consumes almost ten times as much electricity as a single Google search.

- The production of high-quality optical components is expensive, which can limit widespread adoption and scalability.

- Optical interconnects could scale by running many direct-detect (IM-DD) transceivers in parallel, but at some point, this parallelization could run into electrical memory bandwidth limits.
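A rough way to picture the parallelization concern is to count lanes: the number of parallel IM-DD lanes grows linearly with the target capacity. The minimal Python sketch below assumes hypothetical per-lane rates (100 Gb/s for IM-DD, 400 Gb/s for coherent), a hypothetical budget of 16 host electrical lanes, and, as a simplifying assumption, one electrical lane per optical lane. Real limits depend on the host silicon and packaging.

```python
import math

# Hypothetical limit on how many parallel lanes the host's electrical
# interface can feed. Illustrative value, not a real device spec.
MAX_ELECTRICAL_LANES = 16


def lanes_needed(target_gbps: float, lane_rate_gbps: float) -> int:
    """Parallel lanes required to carry target_gbps at lane_rate_gbps each."""
    return math.ceil(target_gbps / lane_rate_gbps)


def fits_budget(target_gbps: float, lane_rate_gbps: float,
                max_lanes: int = MAX_ELECTRICAL_LANES) -> bool:
    # Simplifying assumption: one host electrical lane per optical lane.
    return lanes_needed(target_gbps, lane_rate_gbps) <= max_lanes


for target in (800, 1600, 3200):
    imdd_lanes = lanes_needed(target, 100)      # hypothetical 100G IM-DD lanes
    coherent_lanes = lanes_needed(target, 400)  # hypothetical 400G coherent lanes
    print(f"{target} Gb/s: {imdd_lanes} IM-DD lanes "
          f"(fits: {fits_budget(target, 100)}), {coherent_lanes} coherent lanes "
          f"(fits: {fits_budget(target, 400)})")
```

Under these assumptions, 3200 Gb/s needs 32 IM-DD lanes, which exceeds the 16-lane budget, while the coherent case needs only 8.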

Key Solutions

- Thanks to advances in photonic and electronic integration, coherent technology has been miniaturized into pluggable and co-packaged solutions that fit many different data center use cases.

- Coherent optics can reduce the power and cost per bit compared to running several direct-detect optics in parallel.

- DSPs and lasers optimized for lower power consumption can squeeze more performance from the same fiber optic infrastructure.